
HPE Elastic Platform for Analytics: Why infrastructure matters in big data pipeline design


Designing infrastructure for big data analytics brings no shortage of challenges. Not all enterprises have the in-house expertise to design and build large-scale (petabyte-plus) data lakes that can move quickly into production. The sheer number of open-source tools creates enormous complexity in design and integration. Most legacy data and analytics systems are ill-equipped to handle new data and workloads, and old design rules of thumb, such as sizing by core-to-spindle ratio, are no longer a reliable guide for newer workloads.

Modern data pipelines will make extensive use of machine learning, deep learning and artificial intelligence frameworks to perform real-time predictive analytics on both structured and unstructured data. Next-generation real-time and near-real-time analytics requires a scalable, flexible, high-performance platform.

Enterprises need to look beyond traditional commodity hardware, particularly for latency-sensitive tools such as Spark, Flink and Storm, and for NoSQL databases such as Cassandra and HBase, where low latency is mandatory. Data locality, data gravity, data temperature and the network all have to be part of the overall design. Add data protection and data governance, and you have a large number of variables to consider.

The traditional Hadoop 1.0 approach was to co-locate compute and storage on the same nodes. That worked six to eight years ago, when the focus was batch analytics with HDFS and MapReduce. With the wave of technologies in the current Hadoop 3.0 ecosystem and beyond, co-locating compute and storage can be highly inefficient and can hurt both performance and scaling.

Here’s the new reality: there is no typical or single “big data workload” you can use as a guide for design decisions. Different workloads have different resource requirements, ranging from batch processing (a balanced design) to interactive processing (more CPU) and machine learning (more GPUs). The traditional symmetric design (co-located storage and compute) leads to trapped resources and power/space constraints: because compute and storage can only be added together, growing one means buying capacity in the other that sits idle. You also end up with multiple copies of data due to governance, security and performance concerns. The transition must be to a flexible, scalable, high-performance architecture.
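To make the trapped-resources point concrete, here is a minimal Python sketch of the sizing arithmetic. The node profiles (cores and terabytes per node) and the workload demand are hypothetical numbers chosen for illustration, not HPE specifications, and the model deliberately ignores memory, GPUs, replication overhead and network bandwidth.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical node profile; the numbers used below are illustrative only."""
    cores: int
    storage_tb: float


def symmetric_cluster(need_cores, need_tb, node):
    """Co-located design: every node adds cores and disks together.

    The node count is set by whichever resource needs more nodes; the surplus
    of the other resource is "trapped" capacity that was paid for but not needed.
    """
    nodes = max(math.ceil(need_cores / node.cores),
                math.ceil(need_tb / node.storage_tb))
    trapped_cores = nodes * node.cores - need_cores
    trapped_tb = nodes * node.storage_tb - need_tb
    return nodes, trapped_cores, trapped_tb


def disaggregated_cluster(need_cores, need_tb, compute, storage):
    """Separate tiers: size compute nodes by cores, storage nodes by capacity."""
    return (math.ceil(need_cores / compute.cores),
            math.ceil(need_tb / storage.storage_tb))


# Hypothetical storage-heavy workload: 2,000 cores and 4,000 TB.
print(symmetric_cluster(2000, 4000, NodeSpec(cores=40, storage_tb=48)))
# -> (84, 1360, 32)
print(disaggregated_cluster(2000, 4000,
                            NodeSpec(cores=56, storage_tb=0),
                            NodeSpec(cores=16, storage_tb=180)))
# -> (36, 23)
```

With these made-up numbers, the symmetric cluster needs 84 nodes just to hold the data, which strands 1,360 cores nobody asked for, while the disaggregated design sizes each tier on its own (36 compute nodes, 23 storage nodes). The trade-off the sketch does not model is that reads now cross the network, which is why the network has to be part of the design.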

Address these needs with the HPE Elastic Platform for Analytics (EPA) architecture

HPE EPA is a modular infrastructure foundation designed to deliver a scalable, multi-tenant platform by enabling independent scaling of compute and storage through infrastructure building blocks that are optimized for density and running disparate workloads.

HPE EPA environments allow compute and storage to scale independently and use higher-speed networking than previous-generation Hadoop clusters. They also enable consolidation and isolation of multiple workloads while sharing data, which improves security and governance. In addition, workload-optimized nodes let each tier be tuned for performance and density.

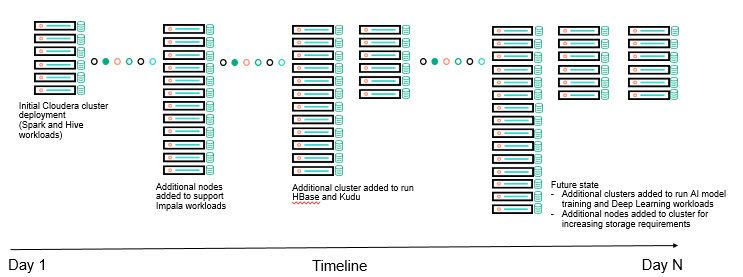

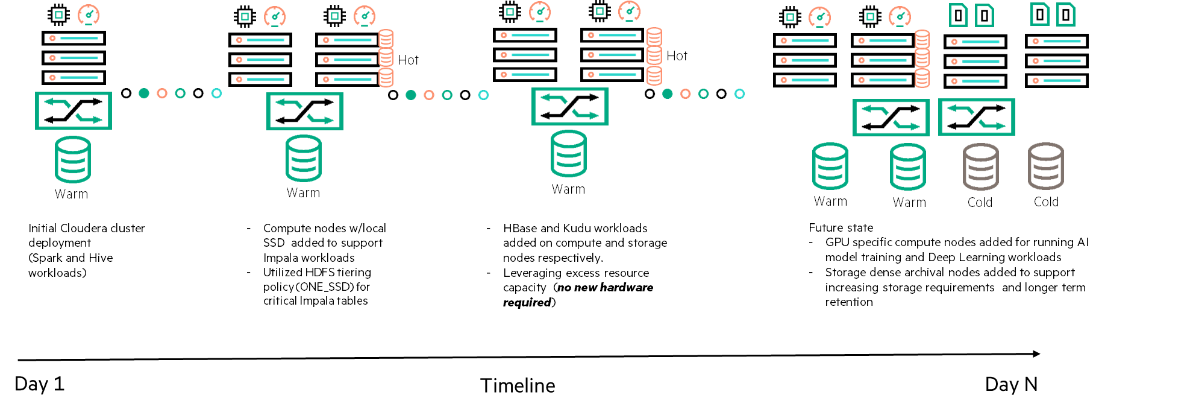

We recently worked with a customer that wanted to build a next-generation analytics environment for its business. Part of the challenge was that the architectural and business requirements changed along the way. The initial design focused on Spark workloads; the final design covered both Spark and Impala, with critical SLAs on the response time of Impala table-scan queries.

On day one, the cluster primarily ran Spark and Impala; services such as HBase and Kudu were added over time. This is where an architecture like HPE EPA comes in handy. We were able to use purpose-built compute tiers for Spark and Impala jobs and a separate storage tier for HDFS and Kudu. HPE EPA provided the elasticity to grow existing tiers or add workload-specific compute and storage nodes. Here is a pictorial representation of the customer scenario and solution.

Challenges with a traditional cluster design

Solution with HPE EPA Elastic cluster

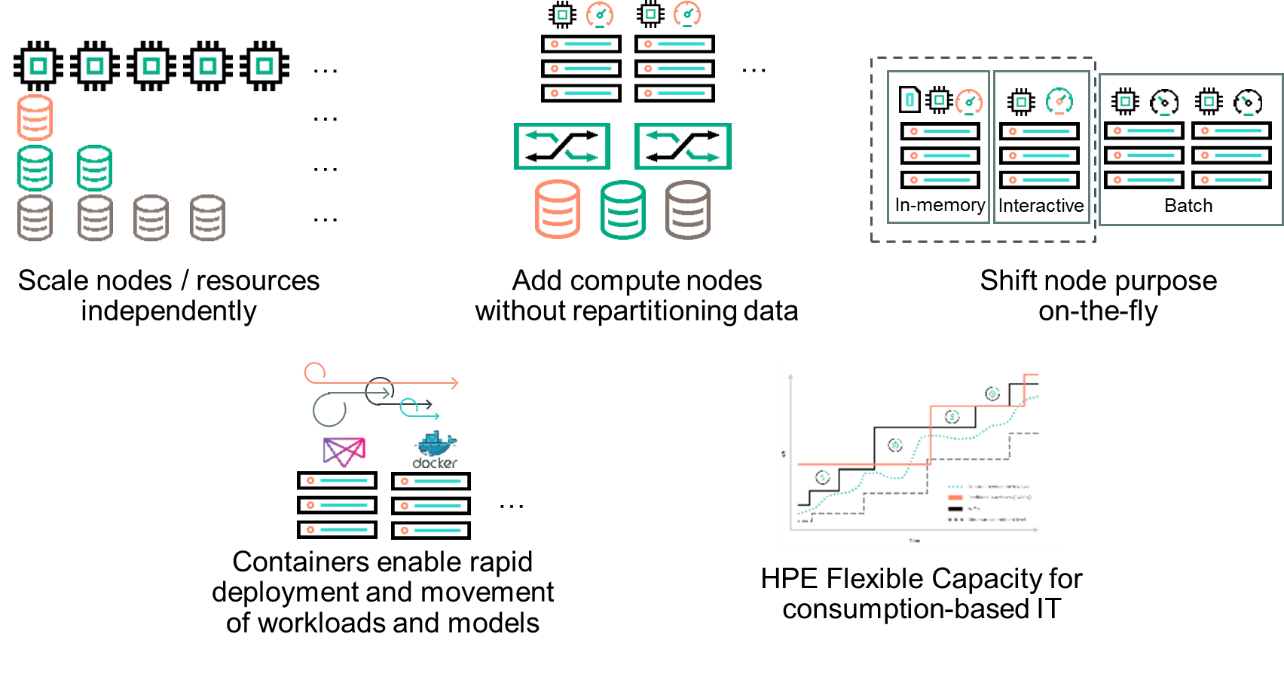
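The customer layout can be thought of as a set of independently sized tiers. The sketch below is a hypothetical model of that idea, not an HPE EPA API or configuration format: the `Tier` type, the tier names and the node counts are illustrative assumptions. It shows that growing one tier, or adding a service to it, leaves every other tier unchanged.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Tier:
    """One independently sized tier (hypothetical model, not an HPE API)."""
    role: str            # "compute" or "storage"
    services: frozenset  # services hosted on this tier
    nodes: int           # node count (illustrative)


def scale(layout, name, extra_nodes):
    """Return a new layout in which only tier `name` has grown."""
    new = dict(layout)
    new[name] = replace(layout[name], nodes=layout[name].nodes + extra_nodes)
    return new


def add_service(layout, name, service):
    """Return a new layout with `service` added to tier `name` only."""
    new = dict(layout)
    new[name] = replace(layout[name], services=layout[name].services | {service})
    return new


# Day-one layout: purpose-built compute tiers plus a separate storage tier.
day_one = {
    "spark": Tier("compute", frozenset({"Spark"}), 12),
    "impala": Tier("compute", frozenset({"Impala"}), 8),
    "storage": Tier("storage", frozenset({"HDFS"}), 10),
}

# Later: Impala SLAs need more compute, and Kudu joins the storage tier.
later = add_service(scale(day_one, "impala", 4), "storage", "Kudu")
print(later["impala"].nodes, later["storage"].nodes)  # -> 12 10
print(sorted(later["storage"].services))              # -> ['HDFS', 'Kudu']
```

Returning a new layout instead of mutating the old one keeps each change easy to compare against the previous state, and it makes explicit that the Spark tier is untouched when Impala grows.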

What exactly makes this architecture elastic?

HPE EPA lets you scale distinct node types and resources independently, which is critical given the diversity of tools and workloads in the big data ecosystem. It even lets you change a node's function on the fly (as in the customer example above). Because the data lives on the storage tier, you can also add compute nodes without repartitioning it. Containers enable rapid deployment and movement of workloads and models in line with fast data analytics requirements.
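A minimal sketch of why adding compute nodes does not force a data repartition in a disaggregated design: block placement is computed from the storage tier alone. The hash-based placement, node names and pool names are hypothetical; real HDFS uses its own rack-aware block placement policy, which this code does not reproduce.

```python
import hashlib


def place_blocks(block_ids, storage_nodes, replicas=3):
    """Map each block ID to `replicas` distinct storage nodes.

    Placement is a function of the storage tier only; compute nodes are not
    an input, so adding or reassigning them cannot move any data.
    (Simplified hash placement for illustration, not HDFS's policy.)
    """
    n = len(storage_nodes)
    placement = {}
    for block in block_ids:
        # Deterministic starting node derived from the block ID.
        start = int(hashlib.sha256(block.encode()).hexdigest(), 16) % n
        placement[block] = [storage_nodes[(start + i) % n]
                            for i in range(min(replicas, n))]
    return placement


def reassign(pools, node, src, dst):
    """Move a compute node from one workload pool to another, in place."""
    pools[src].remove(node)
    pools[dst].append(node)


storage = [f"storage-{i:02d}" for i in range(6)]
blocks = [f"blk_{i}" for i in range(1000)]
pools = {
    "spark": [f"compute-{i:02d}" for i in range(8)],
    "impala": [f"compute-{i:02d}" for i in range(8, 12)],
}

before = place_blocks(blocks, storage)

# Grow the Impala pool and repurpose a Spark node for Impala.
pools["impala"] += ["compute-12", "compute-13"]
reassign(pools, "compute-00", "spark", "impala")

after = place_blocks(blocks, storage)
print(before == after)  # -> True: no block moved
```

In a co-located cluster the storage nodes are the compute nodes, so adding capacity for Spark or Impala also adds DataNodes, and existing data is usually redistributed onto them (for example with the HDFS balancer) to restore locality.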

In summary, multi-tenant, elastic and scalable data lakes built on the HPE EPA architecture, combined with a big data pipeline, meet your next-generation requirements. Here is a pictorial representation.

Get more information on the HPE EPA architecture, refer to this reference architecture, or contact your local HPE sales representative.

For suggestions on optimized hardware based on workload, check out the HPE EPA Sizing Tool.

Meet Infrastructure Insights blogger Mandar Chitale, HPE Solution Engineering Team.
