Better data tiering: a new approach to erasure coding

A better question is, why can’t you have both? Turns out, you can, and you should.

To explain how, let’s look at why resource optimization via data tiering with some systems is inefficient, resulting in unnecessary costs, and how a new approach changes that.

Data volumes in large-scale systems can be enormous, and even with highly scalable systems there comes a time when you want to free up space for more efficient resource utilization. One way to do this, of course, is to delete data. Sometimes data is no longer needed, or you are required to delete it. In very large systems, even data deletion needs to be done efficiently.

But much of your data needs to be retained, either to meet regulatory requirements or because it still has value, perhaps to retrain AI models or for new analytics projects. Data that is not accessed frequently but must be retained is best stored in a more resource-efficient, cost-effective way. That’s the basic idea behind data tiering.

The most frequently accessed file data can be thought of as a hot data tier, kept in normal file storage. Data used less often can be moved to lower-cost storage in different ways, depending on how frequently it is accessed. Some data is rarely accessed or modified but needs to be archived for future projects, for verification in audits, or to meet regulatory requirements. This cold data could be tiered to low-cost object storage in a public cloud, such as Amazon Simple Storage Service (S3).

But this article focuses on another option for tiering, erasure coding, which keeps data on the same system but in a more resource-efficient form. Erasure coding is particularly useful for data accessed or modified at moderate frequency – a warm tier – or even for cold data.

Erasure coding isn’t new, but there is a new way to do it. This new approach delivers the advantages without many of the costs of traditional erasure coding. Here’s a look at how erasure coding can save resources and how the trade-offs of traditional erasure coding are addressed by a new approach to the process.

Erasure Coding for Resource Optimization

People often think of erasure coding as a means of data protection. That is because erasure coding breaks a file into fragments and uses a mathematical encoding to compute additional pieces, called parity fragments, from which lost data can be reconstructed after a hardware failure. Erasure coding is related to RAID (Redundant Array of Independent Disks), in which data is divided into data fragments and parity fragments distributed across the disk drives of one server to improve performance and provide redundancy. With erasure coding, file fragments are generally distributed across multiple servers, and the number of parity fragments determines how many servers can fail while the data can still be reconstructed.
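To make the reconstruction idea concrete, here is a minimal sketch of the simplest possible erasure code: k data fragments plus a single XOR parity fragment, as in RAID 5. It is an illustration only. Production systems commonly use codes such as Reed-Solomon that support several parity fragments, and this is not a description of how HPE Ezmeral Data Fabric encodes data. The function names (`encode`, `reconstruct`, `decode`) are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size data fragments (zero-padded) plus one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\x00")
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = reduce(xor_bytes, fragments)  # parity = d0 ^ d1 ^ ... ^ d(k-1)
    return fragments + [parity]


def reconstruct(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one lost fragment (marked None) by XOR-ing all survivors."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("a single parity fragment can recover only one loss")
    rebuilt = list(fragments)
    if missing:
        survivors = [f for f in fragments if f is not None]
        rebuilt[missing[0]] = reduce(xor_bytes, survivors)
    return rebuilt


def decode(fragments: List[bytes], original_length: int) -> bytes:
    """Concatenate the data fragments (everything except the parity) and drop padding."""
    return b"".join(fragments[:-1])[:original_length]


payload = b"erasure coding keeps data durable"
stored: List[Optional[bytes]] = list(encode(payload, k=4))  # 4 data + 1 parity fragment
stored[2] = None  # simulate losing the server that held fragment 2
assert decode(reconstruct(stored), len(payload)) == payload
```

With one parity fragment the data survives any single loss; tolerating more simultaneous server failures requires more parity fragments and a stronger code.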

In that case, why consider erasure coding as a way to conserve resources rather than just for data protection? With the data infrastructure I work with, HPE Ezmeral Data Fabric, data protection is a given: data is written in triplicate by default. Think of erasure coding as a way to retain that data durability while using less storage space than 3x replication requires.
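The storage saving is simple arithmetic. The sketch below compares 3x replication with a hypothetical 4+2 erasure code (4 data fragments, 2 parity fragments). It assumes a maximum-distance-separable code such as Reed-Solomon, where any 4 of the 6 fragments are enough to rebuild the data; the 4+2 choice is illustrative and is not a statement about which schemes the data fabric supports.

```python
def replication_overhead(copies: int) -> float:
    """Raw bytes stored per logical byte with n-way replication."""
    return float(copies)


def erasure_coding_overhead(data_fragments: int, parity_fragments: int) -> float:
    """Raw bytes stored per logical byte with a k+m erasure code."""
    return (data_fragments + parity_fragments) / data_fragments


# (overhead, failures tolerated): n-way replication survives n-1 lost copies;
# a k+m MDS code survives the loss of any m fragments.
schemes = {
    "3x replication": (replication_overhead(3), 3 - 1),
    "4+2 erasure coding": (erasure_coding_overhead(4, 2), 2),
}

logical_tb = 100  # hypothetical amount of data to retain
for name, (overhead, tolerated) in schemes.items():
    print(f"{name}: {overhead:.1f}x raw, {logical_tb * overhead:.0f} TB raw, "
          f"tolerates {tolerated} failures")
# 3x replication: 3.0x raw, 300 TB raw, tolerates 2 failures
# 4+2 erasure coding: 1.5x raw, 150 TB raw, tolerates 2 failures
```

Both layouts survive two failures, but the erasure-coded layout needs half the raw capacity. A 4+2 layout also needs at least six servers so that each fragment lands on a different one.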

The Costs of Traditional Erasure Coding

While traditional approaches to erasure coding (including various RAID forms) do provide data protection with reduced overhead for data storage, this comes at a cost. Some of the typical trade-offs encountered with traditional erasure coding include:

- You must decide at the time of writing data whether it will be erasure coded.

- Traditional erasure coding causes a performance hit at the time data is written (typically about 50%).

- To read erasure-coded data, applications must know where the erasure-coded data is located.

- The decision to implement erasure coding is made manually by the user and usually at the directory level. (This is a management burden in systems with millions of files and directories.)

These costs of traditional erasure coding motivate the need for a new approach that provides the advantages of resource optimization along with data durability but without the trade-offs listed here.

A Better Approach to Erasure Coding

A new way to do erasure coding across multiple servers is available as a built-in feature of the HPE Ezmeral Data Fabric. In data fabric erasure coding, the costs listed previously have been addressed by leveraging a key management construct, the data fabric volume. (To learn more about how data fabric volumes are used, read “What’s your superpower for data management?”)

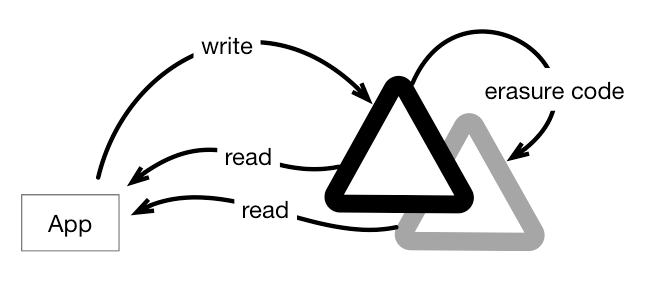

In the data fabric, file data is written to a designated data volume. Volumes can include millions of files and directories and are distributed across a cluster of servers for better performance and durability. When a data volume is created, the user sets the desired erasure coding policy at the volume level. This policy is then applied automatically, file by file, as individual files satisfy the requirements defined in the policy. A new shadow volume is automatically created for the erasure-coded data, as shown in the simple diagram above.
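As a rough mental model of "set at the volume, applied per file," the sketch below attaches one policy to a volume and evaluates it file by file. Everything here is hypothetical: the `cold_after` rule (encode files not modified for a given period), the class names, and the representation of the shadow volume as a per-file storage flag. The article does not say which criteria the data fabric's policies use. Note that each file keeps its path as the key throughout; only where its data lives changes.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class FileRecord:
    last_modified: datetime
    storage: str = "replicated"  # "replicated" (3x) or "erasure_coded" (shadow volume)


@dataclass
class ErasureCodingPolicy:
    cold_after: timedelta  # hypothetical rule: encode files idle at least this long


@dataclass
class Volume:
    name: str
    policy: ErasureCodingPolicy  # chosen once, when the volume is created
    files: Dict[str, FileRecord] = field(default_factory=dict)  # path -> record

    def apply_policy(self, now: datetime) -> List[str]:
        """Evaluate the volume-level policy for each file; return paths newly erasure coded."""
        encoded = []
        for path, record in self.files.items():
            idle = now - record.last_modified
            if record.storage == "replicated" and idle >= self.policy.cold_after:
                record.storage = "erasure_coded"  # data moves to the shadow volume; path unchanged
                encoded.append(path)
        return encoded


now = datetime(2024, 6, 1)
vol = Volume("sensor-data", ErasureCodingPolicy(cold_after=timedelta(days=30)))
vol.files["/sensor-data/2024-01/readings.csv"] = FileRecord(datetime(2024, 1, 31))
vol.files["/sensor-data/2024-05/readings.csv"] = FileRecord(datetime(2024, 5, 28))
print(vol.apply_policy(now))  # ['/sensor-data/2024-01/readings.csv']
```

The user makes one decision per volume, and the per-file work happens automatically each time the policy is evaluated, which is what removes the directory-by-directory management burden of traditional erasure coding.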

One advantage of this new approach to erasure coding is that applications read files via the same pathname before and after the data is erasure coded, regardless of the format used to store the file.

Another advantage of data fabric erasure coding is that policy is set at a higher level, the level of the volume, which makes data management more convenient and efficient, while the policy is executed at the fine-grained level of individual files. Furthermore, because the actual erasure coding of the data is delayed until it is triggered automatically by data usage patterns, the encoding process is not in the critical write path, so it doesn't affect workloads.
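The sketch below is a toy model of why deferring encoding keeps it out of the critical write path, and of how reads can use the same pathname before and after encoding. `ToyStore`, `mark_cold`, and `encode_pending` are hypothetical names; the "encoded" form is just a stored copy standing in for data and parity fragments, and nothing here reflects the data fabric's actual internals.

```python
import queue
import threading
from typing import Dict


class ToyStore:
    """Toy model: writes finish against replicated storage; encoding happens later."""

    def __init__(self) -> None:
        self.replicated: Dict[str, bytes] = {}
        self.erasure_coded: Dict[str, bytes] = {}  # stands in for encoded fragments
        self.pending: queue.Queue = queue.Queue()  # paths waiting for background encoding
        self.lock = threading.Lock()

    def write(self, path: str, data: bytes) -> None:
        # Critical write path: store the (replicated) data and return; no encoding here.
        with self.lock:
            self.replicated[path] = data
            self.erasure_coded.pop(path, None)  # a rewrite makes the file hot again

    def mark_cold(self, path: str) -> None:
        # Called when the volume policy decides a file qualifies for encoding.
        self.pending.put(path)

    def encode_pending(self) -> None:
        # Background step, run off the write path (here by a worker thread).
        while True:
            try:
                path = self.pending.get_nowait()
            except queue.Empty:
                return
            with self.lock:
                data = self.replicated.pop(path, None)
                if data is not None:
                    self.erasure_coded[path] = data  # real system: data + parity fragments

    def read(self, path: str) -> bytes:
        # Same pathname before and after encoding; the store finds the right form.
        with self.lock:
            if path in self.replicated:
                return self.replicated[path]
            return self.erasure_coded[path]


store = ToyStore()
store.write("/vol/logs/app.log", b"hello")  # returns as soon as the data is stored
assert store.read("/vol/logs/app.log") == b"hello"

store.mark_cold("/vol/logs/app.log")
worker = threading.Thread(target=store.encode_pending)  # background encoding
worker.start()
worker.join()
assert store.read("/vol/logs/app.log") == b"hello"  # same path after encoding
```

In this model a write never waits on encoding; the trade-off is that recently written data uses full replication overhead until the background step catches up.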

This design means data fabric erasure coding does not affect critical applications, doing away with the write-time performance hit of traditional systems. In short, built-in erasure coding with HPE Ezmeral Data Fabric gives you the advantages of resource optimization and data durability but without the traditional costs.

For a more detailed explanation of erasure coding for data tiering with HPE Ezmeral, watch the short video “Practical Erasure Coding in a Data Fabric” by Ted Dunning. To learn more about how the data fabric deals with challenges of large-scale systems, read “If HPE Ezmeral Data Fabric is the answer, what is the question?”

Ellen Friedman

Hewlett Packard Enterprise


Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Before her current role at HPE, Ellen worked at MapR Technologies for seven years, and she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
