Operationalize machine learning to reap the benefits of AI

Artificial intelligence (AI) applications are now mainstream and ready for real-world deployments. Early movers have already reaped the benefits of AI, reporting profit margins 1 to 5 percentage points higher than their industry peers. On the flip side, companies that lag in AI adoption report profit margins up to 5% lower than their industry peers. [1]

Enterprises that do not prioritize and invest in infusing AI into their business processes risk stagnation and loss of market share. Findings from a recent Forrester Research study show that 75% of enterprises plan to increase their investments in AI and machine learning (ML) over the next two years. [2]

Merely investing in AI, however, will not immediately result in business success. Many enterprises invest a lot of time and effort in building out the tooling and teams needed to build accurate ML models, only to realize that building the model is just part of the solution. Business value is not delivered until the model is infused into an existing business application or business process and is operationalized.

The operationalization of a model refers to the process of putting the model into use in a repeatable way. This applies not only to the initial deployment of a model, but also to retraining the model to maintain accuracy and performance.

Enterprise challenges with ML

An ML model is a mathematical function that makes a prediction based on input data. Data scientists use statistical algorithms and historical data to build their ML models, a process typically referred to as model training. Depending on the nature of the use case and the type of data sets, they may need a variety of tools and model training frameworks to build these models.
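To make the idea of "a function trained on historical data" concrete, here is a minimal sketch in plain Python. It assumes the simplest possible case, a straight-line model fitted by ordinary least squares; the function name `train_linear_model` and the sample numbers are hypothetical and chosen only for illustration. Real projects would typically rely on a training framework rather than hand-written math.

```python
from statistics import mean


def train_linear_model(xs, ys):
    """Fit y = slope * x + intercept to historical data by least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    def predict(x):
        # The trained model is just a function from input to prediction.
        return slope * x + intercept

    return predict


# Hypothetical historical observations (illustrative values only).
history_x = [1.0, 2.0, 3.0, 4.0]
history_y = [3.0, 5.0, 7.0, 9.0]

model = train_linear_model(history_x, history_y)
print(model(5.0))  # prediction for an input the model has not seen
```

Training produces the `predict` function; everything that follows in the lifecycle is about getting that function into use and keeping it accurate.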

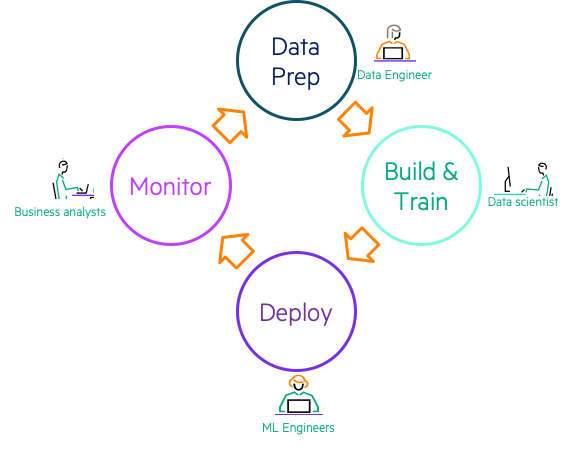

Let’s look at the ML lifecycle (Figure 1) to understand the level of collaboration necessary to build and deploy a model.

In the data prep stage, data from multiple sources is joined, transformed, and made ready for model training. In the build and train stage, data scientists experiment with different tools and frameworks to train the models. Once the model is trained, it has to be deployed, typically by integrating it into another application or making it available through API calls. Ongoing monitoring and retraining are needed to ensure model accuracy and performance.
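The four stages can be sketched end to end in a few lines of plain Python. This is a toy illustration under stated assumptions, not a description of any particular product: the churn scenario, the data, the rule that low spend signals churn, and the function names `prepare_data`, `train`, `deploy`, and `monitor` are all hypothetical. The deployed "API" is simply a function that accepts and returns JSON text.

```python
import json


def prepare_data(customers, orders):
    """Data prep: join two sources on customer id."""
    rows = []
    for cid, info in customers.items():
        if cid in orders:  # keep only customers present in both sources
            rows.append({"spend": orders[cid], "churned": info["churned"]})
    return rows


def train(rows):
    """Build and train: threshold halfway between the two class means.

    Assumes, for this toy data, that churned customers spend less.
    """
    churned = [r["spend"] for r in rows if r["churned"]]
    stayed = [r["spend"] for r in rows if not r["churned"]]
    threshold = (sum(churned) / len(churned) + sum(stayed) / len(stayed)) / 2
    return {"threshold": threshold}


def deploy(model):
    """Deploy: wrap the model in a handler that takes and returns JSON."""
    def handle(request_body):
        spend = json.loads(request_body)["spend"]
        return json.dumps({"churn_risk": spend < model["threshold"]})
    return handle


def monitor(handler, labelled_rows):
    """Monitor: accuracy of the deployed handler on labelled data."""
    hits = 0
    for r in labelled_rows:
        reply = json.loads(handler(json.dumps({"spend": r["spend"]})))
        hits += reply["churn_risk"] == r["churned"]
    return hits / len(labelled_rows)


customers = {"a": {"churned": True}, "b": {"churned": False},
             "c": {"churned": True}, "d": {"churned": False}}
orders = {"a": 10.0, "b": 90.0, "c": 20.0, "d": 80.0, "e": 55.0}

rows = prepare_data(customers, orders)
model = train(rows)
handler = deploy(model)
print(handler('{"spend": 15.0}'))
print(monitor(handler, rows))
```

Even in this small sketch, each stage has a different owner in practice: data engineers for the join, data scientists for training, and software or operations teams for the handler and its monitoring.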

As is evident from the diagram, data science is a team sport. Different personas are involved in each stage of the ML lifecycle. Each of these users works with a different set of tools and requires a different environment. To ensure continued business success with machine learning, enterprises need to build repeatable, efficient, and scalable ML lifecycle processes. This is where MLOps (machine learning operations) comes in. MLOps refers to a set of practices that combine machine learning, DevOps, and data engineering to efficiently and reliably deploy and maintain ML systems in production.

Enterprises understand that MLOps is critical: 98% of enterprise leaders surveyed expect MLOps to give them a competitive edge. At the same time, they are struggling to operationalize their models, with 62% reporting that they lack the processes to move beyond proofs of concept (PoCs). [2]

Only 14% of enterprises feel they have competent processes around machine learning deployment. [2]

How do enterprises solve these challenges with MLOps?

These three areas of focus can help eliminate roadblocks when operationalizing ML models:

- Simplify IT: Getting up and running with the right data science environments can take time with traditional bare-metal or VM-based provisioning. Leverage technology solutions that rapidly provision infrastructure and offer ready-to-use templates for popular data science development environments.

- Eliminate technology roadblocks: Leverage open-source innovation. Adopt technology solutions that let you use best-of-breed innovations from the open-source community while keeping your focus on the business problem. Your MLOps solution needs to support a broad set of use cases without any heavy lifting from your IT Ops or data science teams.

- Establish reliable, standardized processes around collaboration: This includes standardized hand-offs for model deployments, real-time monitoring of model performance and drift, and automated notification or model retraining.
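As one way to picture drift monitoring with automated notification, the sketch below compares live input data with the data the model was trained on and calls a hook when the two diverge. It is a simplified illustration, not how any specific MLOps product does it: the statistic (shift of the mean, measured in baseline standard deviations), the default threshold of 2.0, and the names `drift_score` and `check_drift` are assumptions made for the example. The `on_drift` callback stands in for whatever sends a notification or starts a retraining job.

```python
from statistics import mean, stdev


def drift_score(baseline, live):
    """Shift of the live mean from the baseline mean, in baseline std devs."""
    spread = stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has no variation")
    return abs(mean(live) - mean(baseline)) / spread


def check_drift(baseline, live, threshold=2.0, on_drift=None):
    """Return True if drift exceeds the threshold; fire the hook if given."""
    score = drift_score(baseline, live)
    drifted = score > threshold
    if drifted and on_drift is not None:
        on_drift(score)  # e.g. notify the team or trigger retraining
    return drifted


# Hypothetical feature values seen at training time and in production.
baseline = [10.0, 12.0, 11.0, 13.0, 9.0]
steady = [11.0, 10.0, 12.0]
shifted = [20.0, 22.0, 21.0]

alerts = []  # stand-in for a real notification channel
check_drift(baseline, steady, on_drift=alerts.append)
check_drift(baseline, shifted, on_drift=alerts.append)
print(len(alerts), "alert(s) raised")
```

A production setup would likely use a more robust statistical test and track many features, but the shape is the same: a baseline, a live window, a threshold, and an automated action.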

HPE addresses these challenges with HPE Ezmeral ML Ops

HPE understands these challenges. We have helped our enterprise customers overcome the barriers to enterprise-scale ML by offering HPE Ezmeral ML Ops, a software solution that leverages containers to bring speed and agility to the ML lifecycle.

HPE Ezmeral ML Ops dramatically speeds up the process of provisioning environments for data science workloads by leveraging containers for model development and deployment. With an integrated App Store or Application Catalog, data scientists, ML engineers, and software engineers can deploy development, test, or production environments with their choice of open-source or ISV tools in a matter of minutes.

Utilizing open-source Kubernetes, HPE Ezmeral ML Ops allows you to stay current with open-source innovation while ensuring enterprise-grade security for data and computing resources.

HPE Ezmeral ML Ops includes native capabilities such as a model registry, project repository, and source code control to standardize collaboration processes and allow for the implementation of DevOps processes.

Register to download this paper for a deeper dive into the results from the survey conducted by Forrester Research: Operationalize Machine Learning.

[1] Artificial intelligence: Why a digital base is critical, McKinsey Global

[2] Operationalize Machine Learning, Forrester Research

Matheen Raza

Hewlett Packard Enterprise

twitter.com/HPE_Ezmeral

linkedin.com/showcase/hpe-ezmeral

hpe.com/ezmeral, hpe.com/mlops

Matheen Raza is a Senior Product Marketing Manager at Hewlett Packard Enterprise (HPE) in the Enterprise Software Business Unit. A technology enthusiast, he is passionate about using cutting-edge technologies such as containers, AI, machine learning, and deep learning to improve business outcomes in enterprises.
