
Self-healing data fabric: automagical business continuity and easy expansion

Have you ever dropped your phone? Sadly, I have. And the result wasn’t just a cracked screen; I was left with a phone that didn’t work at all.

This scenario is even worse if it happens while you are traveling, on your way to a business meeting or to the airport. You will probably be able to recover the data that was on the phone (if it was backed up properly). But how will you show your boarding pass, your hotel reservation, or your conference registration? How will you call a taxi? You’re left with a major interruption until you can repair or replace the physical device.

Wouldn’t it be great if, instead of an interruption, another phone magically appeared in your hand to replace the broken one?

Self-healing or auto-cloned phones are not really a thing (I wish!), but another area of your business life does offer this kind of magic: the self-healing capabilities of a unifying data infrastructure for a large-scale enterprise. Here’s what that means in practical terms.

Self-healing data layer protects business continuity

How does your data system deal with a hardware failure? Consider the challenge. An efficient, modern system needs a unifying data layer that stretches across the enterprise, from edge to data center and from on-premises to cloud deployments. Many kinds of applications run on such a system, sharing the same data, system administration, and security, even while accessing data through different APIs. So what happens to data and applications when hardware fails (a disk or machine stops working), as it inevitably will from time to time in a large-scale system?

Automatic failover of the data layer avoids interruptions in business processes.

In the best case, when hardware fails, not only is data protected from loss, but applications and processes also continue without significant interruption. That’s where a self-healing data infrastructure matters. One example is HPE Ezmeral Data Fabric File and Object Store, a highly scalable, software-defined data infrastructure that combines data replication with automatic failover to guard against data loss and ensure business continuity.

Let’s look at why a self-healing data fabric matters and how it works. A failure event in a system built on a self-healing data fabric looks very different depending on your point of view. Consider, for instance, the view from each of the following:

- Applications and data users

- System administrators and IT teams

- The data fabric itself

For developers, analysts, and data scientists using data stored in HPE Ezmeral Data Fabric File and Object Store, a hardware failure looks as if nothing has happened: the failure is essentially invisible to data users. Applications and key processes continue to run without interruption, and data remains accessible in this high-availability system.

For data fabric administrators, no specific action is required to keep the system reliable and highly available, because failover happens automatically. Administrators and IT teams get an alert that a disk or machine has failed, but they can repair or replace it whenever they choose. If the data fabric runs in a public or private cloud environment, replacement hardware can even be provisioned automatically. The self-healing function of the data fabric allows this maintenance to happen while the system remains online.

In contrast, from the point of view of the data fabric itself, there’s a lot to be done! Here’s what happens behind the scenes as self-healing magic kicks in.

A look inside: data fabric automagically replicates data

HPE Ezmeral Data Fabric stores data redundantly so that it is resilient to hardware failure. The replication factor is configurable, but by default the data fabric makes three replicas of data at the level of storage constructs known as data containers (not to be confused with the Kubernetes-orchestrated containers used for computation).
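To make the replication idea concrete, here is a minimal Python sketch, assuming a toy cluster modeled as a list of node names. It places each data container’s replicas on distinct nodes, with a configurable replication factor that defaults to three, as described above. The function name `place_containers` and its least-loaded placement policy are hypothetical illustrations, not how HPE Ezmeral Data Fabric actually decides where replicas go.

```python
from collections import Counter

DEFAULT_REPLICATION = 3  # default replication factor described in the text


def place_containers(container_ids, nodes, replication=DEFAULT_REPLICATION):
    """Toy placement: map each container ID to `replication` distinct nodes.

    Hypothetical policy: always pick the currently least-loaded nodes,
    breaking ties by node name so the result is deterministic.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    load = Counter({node: 0 for node in nodes})  # replicas held per node
    placement = {}
    for cid in container_ids:
        chosen = sorted(nodes, key=lambda n: (load[n], n))[:replication]
        for node in chosen:
            load[node] += 1
        placement[cid] = chosen
    return placement


if __name__ == "__main__":
    layout = place_containers(range(1, 7), ["m1", "m2", "m3", "m4", "m5"])
    for cid, replicas in layout.items():
        print(cid, replicas)
```

Picking the least-loaded nodes keeps replica counts per node within one of each other, which is a simple stand-in for the balanced layout the article describes.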

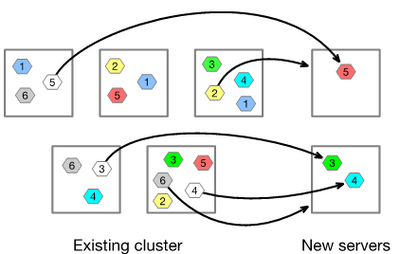

Each replica of a data container is located on a different machine, and the replicas are spread across the cluster in different failure domains, which protects against data loss if a machine fails. In the figure, hexagonal shapes represent replicas of different data containers (distinguished by color and number), and large squares represent machines. Notice that the three replicas of data container number 4 are located on different machines. What does the data fabric do when a machine fails?
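The failure-domain rule can be sketched in a few lines of Python. This is a minimal illustration, assuming each node is tagged with a failure-domain label such as a rack name; `place_across_domains`, `survives_domain_loss`, and the rack labels are hypothetical and do not describe the product’s actual placement logic.

```python
def place_across_domains(node_domain, replication=3, load=None):
    """Choose `replication` nodes so that no two share a failure domain.

    `node_domain` maps node name -> failure-domain label (for example, a rack).
    Nodes are considered from least to most loaded, ties broken by name.
    """
    load = load or {}
    chosen, used_domains = [], set()
    for node in sorted(node_domain, key=lambda n: (load.get(n, 0), n)):
        if node_domain[node] in used_domains:
            continue  # a replica already lives in this failure domain
        chosen.append(node)
        used_domains.add(node_domain[node])
        if len(chosen) == replication:
            return chosen
    raise ValueError("not enough distinct failure domains for this replication factor")


def survives_domain_loss(replicas, node_domain):
    """True if losing any single failure domain still leaves a replica."""
    return len({node_domain[n] for n in replicas}) >= 2


if __name__ == "__main__":
    racks = {"m1": "rackA", "m2": "rackA", "m3": "rackB", "m4": "rackC", "m5": "rackC"}
    replicas = place_across_domains(racks)
    print(replicas, survives_domain_loss(replicas, racks))
```

With the sample racks, the sketch skips `m2` because `m1` already covers rack A, so no single rack failure can take out every replica.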

As the figure shows, when a machine fails, the data fabric goes into action, automatically making new replicas of the data containers that were located on the failed node. In the example illustrated here, new replicas of data containers 1 and 5 (blue and red) have already been copied onto healthy machines from the remaining replicas on other nodes, and data container 2 (yellow) will be replicated shortly. When the failed hardware is replaced, data replicas repopulate it and unneeded replicas are deleted. That way, load balancing happens automatically.
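The re-replication step can be modeled as a small Python function. This is a toy sketch under stated assumptions: placement is a plain dict from container ID to node names, and the hypothetical `heal` function picks the least-loaded eligible target and rotates among surviving replicas as copy sources. The real data fabric’s scheduling is not described in this post, so treat these heuristics as illustrative only.

```python
from collections import Counter


def heal(placement, failed_node, healthy_nodes, replication=3):
    """Re-create the replicas that lived on `failed_node` (toy model).

    `placement` maps container ID -> list of nodes holding a replica.
    Returns (new_placement, copies), where `copies` lists hypothetical
    (container, source, target) copy jobs. The input is not modified.
    """
    healed = {cid: [n for n in nodes if n != failed_node]
              for cid, nodes in placement.items()}
    load = Counter({n: 0 for n in healthy_nodes})  # replicas per healthy node
    for nodes in healed.values():
        load.update(nodes)
    sends = Counter()  # copy jobs sourced from each node so far
    copies = []
    for cid, nodes in sorted(healed.items()):
        while len(nodes) < replication:
            if not nodes:
                raise RuntimeError(f"container {cid}: no surviving replica")
            targets = [n for n in healthy_nodes if n not in nodes]
            if not targets:
                raise RuntimeError(f"container {cid}: no eligible target node")
            # Copy onto the least-loaded eligible node, reading from the
            # surviving replica that has served the fewest copies so far,
            # so the recovery work is spread across the cluster.
            target = min(targets, key=lambda n: (load[n], n))
            source = min(nodes, key=lambda n: (sends[n], n))
            nodes.append(target)
            load[target] += 1
            sends[source] += 1
            copies.append((cid, source, target))
    return healed, copies


if __name__ == "__main__":
    before = {1: ["m1", "m2", "m3"], 2: ["m2", "m4", "m5"],
              4: ["m3", "m4", "m5"], 5: ["m1", "m2", "m4"]}
    after, jobs = heal(before, "m2", ["m1", "m3", "m4", "m5"])
    print(jobs)
```

Only containers that had a replica on the failed node generate copy jobs; container 4 in the sample is untouched because all of its replicas survived.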

Meanwhile, applications keep running because they can access the remaining replicas on other nodes, so operations are not interrupted. That’s how a self-healing data fabric makes it seem to data users and applications as though nothing has happened when a machine fails. Importantly, the work of compensating for lost hardware is spread evenly across the cluster.
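From an application’s point of view, continuing through a failure amounts to reading from whichever replica is still reachable. The Python sketch below illustrates that idea with a simple try-the-next-replica loop. It is an assumption for illustration: `make_fetch`, `read_with_failover`, and the in-memory `storage` dict are hypothetical, and the post does not say how HPE Ezmeral Data Fabric routes reads internally.

```python
def make_fetch(storage, down):
    """Build a toy fetch(node, cid) that fails for nodes in the `down` set."""
    def fetch(node, cid):
        if node in down:
            raise ConnectionError(f"{node} is unreachable")
        return storage[node][cid]
    return fetch


def read_with_failover(cid, replicas, fetch):
    """Return (node, data) from the first replica that answers."""
    errors = []
    for node in replicas:
        try:
            return node, fetch(node, cid)
        except ConnectionError as exc:
            errors.append(str(exc))  # remember why this replica failed, try the next
    raise ConnectionError(f"no live replica for container {cid}: {errors}")


if __name__ == "__main__":
    storage = {n: {4: b"container-4 bytes"} for n in ("m1", "m3", "m5")}
    fetch = make_fetch(storage, down={"m1"})
    print(read_with_failover(4, ["m1", "m3", "m5"], fetch))
```

With `m1` marked as down, the read is served by `m3`; the caller never sees the failure unless every replica is unreachable.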

Read the solution overview: HPE Ezmeral Data Fabric

Self-healing data infrastructure is an essential capability for high reliability and availability of large-scale systems, whether on-premises, at the edge, or in cloud deployments. But it turns out to offer a bonus benefit.

Self-healing also makes expansion easy

The same automatic process that re-creates data containers from their surviving replicas, providing self-healing and load balancing onto replacement hardware, also makes it easy to add nodes to a cluster without taking a maintenance outage.

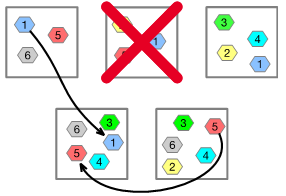

As new nodes are added, the data fabric senses the need to rebalance the distribution of data. Replicas are copied onto the new nodes by temporarily increasing the replication of selected data objects. Once these new replicas are complete, some of the old replicas are deleted, restoring the desired replication factor. The result is an expanded, well-balanced cluster. The figure illustrates this process as it might appear partway through the addition of two new nodes to the five-node cluster shown earlier.
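The copy-then-delete pattern described above can be sketched as a simple rebalancing loop in Python. This is a minimal model, assuming placement is a dict from container ID to node names; the hypothetical `rebalance` function always moves a replica from the most-loaded node to the least-loaded one, a heuristic chosen for illustration rather than taken from the product.

```python
from collections import Counter


def node_loads(placement, nodes):
    """Count replicas per node, including empty (newly added) nodes."""
    load = Counter({n: 0 for n in nodes})
    for replicas in placement.values():
        load.update(replicas)
    return load


def rebalance(placement, nodes):
    """Copy-then-delete rebalancing, applied to `placement` in place.

    Each step adds a replica on the least-loaded node (briefly raising that
    container's replica count by one) and then deletes the replica on the
    most-loaded node, restoring the original replication factor.
    Returns the list of (container, from_node, to_node) moves.
    """
    moves = []
    while True:
        load = node_loads(placement, nodes)
        busiest = max(nodes, key=lambda n: (load[n], n))
        idlest = min(nodes, key=lambda n: (load[n], n))
        if load[busiest] - load[idlest] <= 1:
            return moves  # balanced to within one replica per node
        # A load gap of 2 or more guarantees such a container exists.
        cid = next(c for c, r in sorted(placement.items())
                   if busiest in r and idlest not in r)
        placement[cid].append(idlest)   # new replica (a real system waits for the copy)
        placement[cid].remove(busiest)  # then drop one old replica
        moves.append((cid, busiest, idlest))


if __name__ == "__main__":
    cluster = {1: ["m1", "m2", "m3"], 2: ["m4", "m5", "m1"], 3: ["m2", "m3", "m4"],
               4: ["m5", "m1", "m2"], 5: ["m3", "m4", "m5"], 6: ["m1", "m2", "m3"]}
    nodes = ["m1", "m2", "m3", "m4", "m5", "m6", "m7"]  # m6 and m7 are new
    print(rebalance(cluster, nodes))
    print(dict(node_loads(cluster, nodes)))
```

The same loop would also repopulate a repaired or replaced node after a failure, since that node simply starts out empty.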

Automatic rebalancing of data replicas makes cluster expansion easy.

The figure shows that when two new servers (squares on the right) are added to a data fabric cluster, data automatically begins to populate the new machines. In this example, data containers 3 (green), 4 (turquoise), and 5 (red) have already been replicated to the new machines, and one original replica of each has been deleted (shown as white hexagons), restoring the normal replication factor of three. Data containers 2 and 6 (yellow and grey) are about to be replicated.

Watch the “How to size a data fabric” video.

Two benefits from the same capability

A self-healing data fabric makes a big difference in ensuring business continuity and, by helping with load balancing, in maintaining high performance. But large systems also need true scalability to meet future needs, not just current ones, and the ease of cluster expansion that self-healing provides is a key part of that scalability. And remember, it’s all done automagically!

To find out more about data fabric:

Watch this short video that illustrates the use of data fabric as a modern data platform.

Read the blog post “Business continuity at large scale: data fabric snapshots are surprisingly effective”

For more on scalability, download a free PDF of the O’Reilly ebook AI and Analytics at Scale: Lessons from Real-World Production Systems

Ellen Friedman

Hewlett Packard Enterprise


Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Before her current role at HPE, Ellen worked at MapR Technologies for seven years, where she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
