The benefits of industrializing data science

By industrializing data science, organizations can automate data science processes to improve their quality, repeatability, throughput, and time to value for the business.

In the first post in my blog series, Mind the analytics gap: A tale of two graphs, I took a look at the yawning gap that

While there is no silver bullet, one way organizations can close the gap considerably is through the adoption of an industrialized approach to the problem. By thinking about data science as a process, with distinct steps and handoffs, you can look to automate it—and in doing so, improve its quality, repeatability, throughput, and time to value for the business.

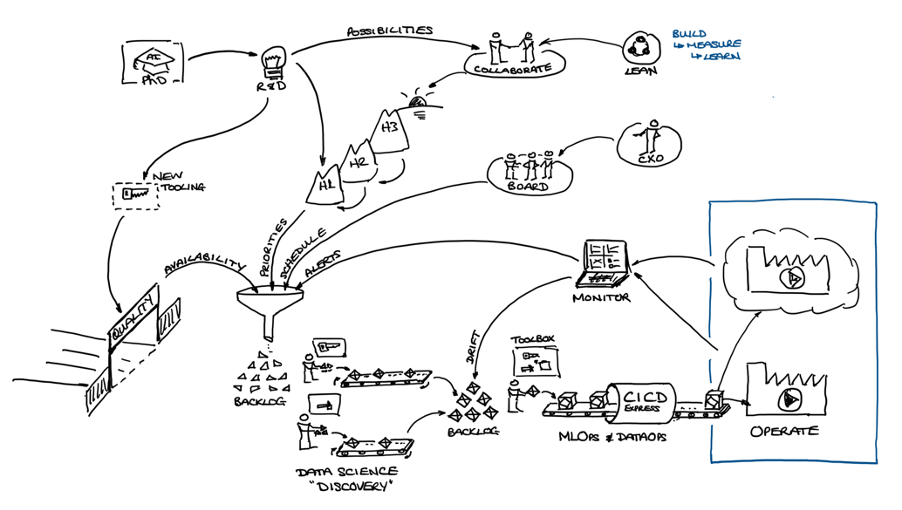

Exponentially growing data science requires industrialization

The diagram (Figure 1) shows the full production flow, from the inbound raw materials and tools onto the data science “Discovery” production line, where the model is initially built and placed on the backlog for the second step in the production process. In this second step, the MLOps and DataOps teams operationalize the model and place it into a production setting on-prem, in the cloud, at the edge, or perhaps all of the above. Managing the full model lifecycle is also critically important: the performance of the model "in the wild" is monitored, and any model drift raises an alert so the model can be re-evaluated and refreshed, or replaced, as necessary.
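To make the drift monitoring idea concrete, here is a minimal sketch of one common way to flag drift: comparing the distribution of a feature or model score in production against the distribution seen at training time using the Population Stability Index (PSI). This is an illustration rather than the method used in the diagram. The function names, the ten equal-width bins, the half-count smoothing, and the 0.2 alert threshold (a widely quoted rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], lo: float, hi: float, bins: int) -> List[float]:
    """Share of values per equal-width bin over [lo, hi], lightly smoothed."""
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        # Clamp so values outside the baseline range land in the edge bins.
        idx = min(bins - 1, max(0, int((v - lo) / width)))
        counts[idx] += 1
    # Add half a count per bin so an empty bin never produces log(0).
    return [(c + 0.5) / (len(values) + 0.5 * bins) for c in counts]


def population_stability_index(
    baseline: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """PSI between the training-time (baseline) and production (live) samples."""
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        raise ValueError("baseline needs at least two distinct values")
    expected = bin_fractions(baseline, lo, hi, bins)
    actual = bin_fractions(live, lo, hi, bins)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_alert(
    baseline: Sequence[float], live: Sequence[float], threshold: float = 0.2
) -> bool:
    """True when the PSI exceeds the (assumed) review threshold."""
    return population_stability_index(baseline, live) > threshold
```

In practice a check like this would run on a schedule against recent production data, with an alert feeding the re-evaluate, refresh, or replace decision described above.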

Given the extreme rate of change in the market today around data science tools, sustainability is another critical aspect to consider. In the illustration, you can see how new tools and frameworks are evaluated by R&D to understand their potential for inclusion in the range of tools available to the data science, MLOps, and DataOps teams. These new tools and frameworks may also adjust our current business planning horizon priorities (marked H1 on the diagram) by making previously challenging projects more feasible, or by improving the performance of existing models. At the more strategic level, they could open up new business possibilities so profound that they impact options for growth in the much longer planning horizon (marked H3 on the diagram), which in many organizations may be developed through collaboration with senior management and a "lean" team of some kind.

The goal is to drive value creation through the application of analytics, and that requires an exponential growth in data science capabilities. With that in mind, looking at the illustration, a number of key questions come to mind if you are to be successful.

- Scale: How can you scale the number of data scientists and infrastructure to support them without them getting in each other’s way?

- Balance agility and security: How can you quickly introduce new data science tools and frameworks into the business but still ensure you keep control and meet regulatory and security requirements?

- Data quality: How can you ensure data scientists can find and understand the data that’s available on which to build models, including aspects of data quality and availability?

- Model operationalization: How should you best organize and manage the operationalization and monitoring of the data science models in a production environment? This is a critical step, with analysts reporting that some 60% of models that were expressly developed to be operationalized into an application failed to make it over that last mile.

- End-to-end lifecycle: How should you best manage the full lifecycle of models, including the integration with business intelligence (BI) and standard enterprise reporting systems?

- Analytic accuracy: How can you ensure the results obtained by the data scientist in the lab are reproduced when applied to the production setting?

- Portability: How can you retain flexibility so you can deploy models to your own data center or into the cloud without having to worry about the location of the data?

- Building an analytics culture: What do you need to do to grow the level of support at all levels of the business for being “data-” rather than “eminence-” driven?

- Sustainability: How can you keep up with the pace of change in data science tooling, build an understanding of the value of new tools, integrate them together, and have them blessed from a corporate governance standpoint?

Driving the waste out of data science

To really achieve exponential growth in data science within your organization, you need to automate currently manual processes and remove waste from the system. Most waste typically occurs in the form of time wasted waiting for things to happen or in the form of trained models that never actually make it into production.
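One way to put a number on this kind of waste is flow efficiency: the share of total elapsed time that is spent actually working rather than waiting. The sketch below is a minimal illustration under assumed names; the `Stage` type and its fields are hypothetical, and any durations you feed it would come from your own measurements rather than from this article.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Stage:
    """One step in the model production flow (hypothetical structure)."""
    name: str
    wait_days: float  # time spent queued before work can start
    work_days: float  # time spent actively working


def flow_efficiency(stages: Sequence[Stage]) -> float:
    """Fraction of total lead time spent on active work (0.0 to 1.0)."""
    lead_time = sum(s.wait_days + s.work_days for s in stages)
    if lead_time == 0:
        raise ValueError("stages must have a non-zero total duration")
    return sum(s.work_days for s in stages) / lead_time


def longest_wait(stages: Sequence[Stage]) -> Stage:
    """The stage with the largest queueing delay, a candidate for automation."""
    return max(stages, key=lambda s: s.wait_days)
```

Tracking these two figures over time gives a simple view of whether automation is actually removing delay, and where to look next.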

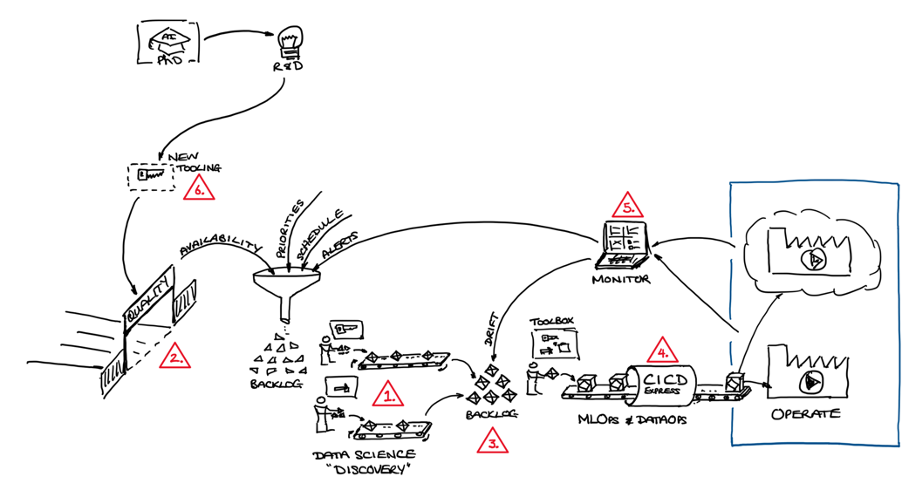

See the steps and descriptions in Figure 2 to understand the many places in the process that can cause delays. Most of the major delays are at the handoffs between groups with different responsibilities and priorities. Some organizations maintain distinct teams responsible for data science, data management, development, and operations. Many others have adopted blended teams that incorporate operational and support processes such as MLOps, DevOps, and DataOps.

1. First comes waiting for the provision of new infrastructure and tools so the data scientist can begin work. It typically takes months to provision a suitable environment for a new data scientist, which makes no sense. One way of overcoming this challenge is to over-provision a very large box for all the data scientists to share, as this only requires the clusters to be created, secured, and integrated into the broader enterprise just once. But this is not ideal, as data science workloads are often peaky and experimentation can be destructive to the environment.

Rather than this “one-size-fits-all” monolithic approach to infrastructure, a more modern approach is to provide each project with its own purpose-designed and isolated infrastructure and tools. By making this provisioning process self-service, you can automate the steps and ensure you meet corporate requirements. This removes latency, speeds up the process, and drives efficiency for the infrastructure team as well as the data scientists.

2. Now comes waiting for data or permission. Data scientists will often need a writable copy of data so they can perform the various transformations required. The replication task, often performed by another team, is likely to require some element of approval from the security team. In most enterprises, the data required is owned by more than one line of business, with each requiring a separate (often manual) approval process rather than an automated workflow. These can all add considerable delay before the data scientist even gets visibility of any of the data to begin work and think about the problem.

3. Managing the development backlog, prioritizing work, and scaling operations can be a challenge. So can managing the multi-disciplinary blend of skills required to take the model previously created by the data scientist and reproduce it in a robust production context, including any data transformations. To do the work, the MLOps team will also need to be provisioned with a suitable environment, which may be a different shape and require different tooling from that of the data scientist. One key advantage of automating provisioning for all the steps along the pipeline is that it becomes easier to schedule work downstream, because start delays due to infrastructure waits can be avoided, making the whole system more effective.

4. Continuous integration and continuous delivery automation (aka CI/CD) is now a familiar part of the way most organizations undertake IT development, but it is relatively new to the data science community. Tools and experience in this space are currently lacking, causing delays. Evaluating tools and methods in this domain is another area of study the R&D lab should undertake. Infrastructure and tools to complete the CI/CD process will also need to be provisioned, which can again cause delays.

5. When it comes to alerting and BI integration, if your company is being driven by intelligent applications, their proper operation becomes of fundamental concern. Failure to instrument and monitor them appropriately can only end in disaster. Since it is not safe to put them into a production setting without BI tooling and alerting being in place, this last step in the process is critical if delays are to be avoided. In addition to real-time monitoring, adjustments to other data structures and offline reports may also be required.

6. Although the evaluation process the R&D Lab undertakes for new tools and frameworks isn’t normally time-critical, from time to time, situations can arise where an important project requires new tooling. It is therefore important for the R&D Lab to be in step with the business to enable it to quickly evaluate and prepare new tools and frameworks for operation within the enterprise framework.
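The self-service provisioning idea in step 1 can be sketched as a simple policy check: a request that satisfies corporate rules is provisioned automatically, and only exceptions need a human. Everything in this sketch is hypothetical, including the `EnvironmentRequest` fields, the approved tool list, and the resource limits; a real implementation would take these from your own governance policies and platform.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical corporate policy values, for illustration only.
APPROVED_TOOLS = frozenset({"jupyter", "spark", "pytorch", "tensorflow"})
MAX_CPUS = 64
MAX_GPUS = 4


@dataclass(frozen=True)
class EnvironmentRequest:
    """A project's self-service request for an isolated environment."""
    project: str
    cpus: int
    gpus: int
    tools: Tuple[str, ...]


def policy_violations(request: EnvironmentRequest) -> List[str]:
    """Return human-readable reasons the request breaks policy, if any."""
    violations = []
    if not 1 <= request.cpus <= MAX_CPUS:
        violations.append(f"cpus must be between 1 and {MAX_CPUS}")
    if not 0 <= request.gpus <= MAX_GPUS:
        violations.append(f"gpus must be between 0 and {MAX_GPUS}")
    for tool in request.tools:
        if tool not in APPROVED_TOOLS:
            violations.append(f"tool '{tool}' is not on the approved list")
    return violations


def can_auto_provision(request: EnvironmentRequest) -> bool:
    """Compliant requests skip manual review entirely."""
    return not policy_violations(request)
```

The design choice here is to return the list of violations rather than a bare yes or no, so a rejected request tells the data scientist exactly what to change or which exception to ask the R&D Lab to evaluate.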

With IT budgets under scrutiny like never before and an ever-increasing demand for analytics, it’s clear that you need to think differently about how to solve the problem if you are to “shift the needle” in the way that’s required.

Adopting an industrialized approach can help organizations focus attention on efficiency and driving out waste (often delays) in the value creation process—all the way from initial data science discovery through to model operationalization, monitoring, and wider aspects of sustainability and organizational learning.

This blog is the first in a series of blogs on industrializing data science. Subsequent blogs in the series include:

- Industrialized efficiency in the data science discovery process

- Industrializing the operationalization of machine learning models

- Transforming to the information factory of the future

You might also like to take a look at two earlier blogs exploring how IT budgets and focus need to shift from business intelligence and data warehouse systems to data science and intelligent applications.

Doug Cackett

Hewlett Packard Enterprise

twitter.com/HPE_AI

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/artificial-intelligence.html

Doug has more than 25 years of experience in the Information Management and Data Science arena, working in a variety of businesses across Europe, Middle East, and Africa, particularly those who deal with ultra-high volume data or are seeking to consolidate complex information delivery capabilities linked to AI/ML solutions to create unprecedented commercial value.
