What’s your superpower for data management?

You’ve got an unsuspected superpower, one that doesn’t even require a radioactive spider.

Handling massive amounts of data at scale is a huge challenge. To do it well, you need an effective data strategy across your organization. The data architecture you design and the data infrastructure that supports it need to work for both your on-premises and in-cloud data centers. Plus, your data strategy must be able to encompass widely separated data sources, reaching back to the core in an affordable way that meets service level agreements (SLAs). HPE Ezmeral Data Fabric is a great way to meet that challenge, in part because of its unique superpower for data management: data fabric volumes.

Data Fabric Volumes vs Block Storage Volumes

The word volumes may sound like a familiar term, but HPE Ezmeral Data Fabric volumes have capabilities that most likely will surprise you. For example, volumes in a data fabric are radically different from volumes associated with a block storage device. Block storage volumes are largely intended to host a file system for a single virtual machine, that is, a non-distributed file system. Because the size of a block storage volume is inconvenient to change, you often need to over-provision these volumes to accommodate data sets that may change in size. But this over-provisioning can result in inefficient use of resources. Block storage, in general, is a good match with VM-based systems, but it doesn't let you manage or organize large-scale data according to name, content, or use case. In short, block storage volumes don’t give you the superpower you need for an effective, large-scale data strategy that meets the needs of modern applications.

In contrast, an HPE Ezmeral data fabric volume functions more like a directory in a distributed file system but with extra powers. It can do things no ordinary directory would imagine: HPE Ezmeral data fabric volumes let you move data easily and accurately between locations, including edge-to-cloud or on-premises core data centers to cloud, even at the scale of hundreds of petabytes of data. Their flexibility lets you optimize resource use while providing you with convenient, fine-grained access control, and they support file-system-level snapshots (similar to what ZFS provides in a local file system) but distributed across multiple machines. Data fabric volumes also provide a core foundation for practical multi-tenancy at scale. Here’s how they provide these superpowers for data management.

Think of data fabric volumes as expandable “bags” of data

A key characteristic of the HPE Ezmeral Data Fabric volume is that it is dynamic, and this trait provides you with great flexibility and efficiency in data management. (Please note that HPE Ezmeral Data Fabric was formerly the MapR Data Platform, prior to the acquisition of MapR Technologies by Hewlett Packard Enterprise.) The data fabric volume, a logical unit in the data fabric, is like a “stretchable bag of data” rather than a rigid box of predetermined size. The data fabric volume will grow or shrink as needed in line with the amount of data it holds. Think of it as being automatically stretchable, taking only as many storage resources as needed and no more. That’s how it helps you optimize resource usage. Effective use of volumes is key to cluster performance.
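To make the "stretchable bag" idea concrete, here is a small back-of-the-envelope sketch. It is not a model of how the data fabric allocates storage; the data set sizes are invented for illustration, and replication overhead is ignored. It only contrasts reserving space for an expected peak with consuming space as data arrives.

```python
# Toy comparison: fixed-size provisioning vs. volumes that grow on demand.
# All numbers are hypothetical; replication overhead is ignored.

def fixed_provisioning_tb(expected_peaks_tb):
    """Fixed-size volumes must each be sized up front for their expected peak."""
    return sum(expected_peaks_tb)


def on_demand_usage_tb(current_sizes_tb):
    """Dynamic volumes consume only what the data they hold currently needs."""
    return sum(current_sizes_tb)


current_tb = [2, 5, 1]         # hypothetical data sets as they are today
expected_peak_tb = [10, 8, 6]  # hypothetical sizes they might eventually reach

reserved = fixed_provisioning_tb(expected_peak_tb)
used = on_demand_usage_tb(current_tb)
idle = reserved - used

print(f"fixed provisioning reserves {reserved} TB, of which {idle} TB sits idle")
print(f"on-demand volumes consume {used} TB")
```

With these made-up numbers, two thirds of the fixed reservation would sit unused until the data sets grow into it.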

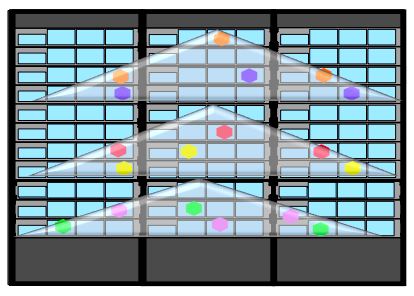

A data fabric cluster will contain many data volumes, and each volume can stretch across any or all the machines in a cluster. The following figure is a simplistic representation of the data fabric volumes spanning multiple machines on multiple racks. You’ll also find it easy to change how data fabric volumes are stored, for replication strategies, to take advantage of erasure coding or as an object store like S3.

Data is more than just bits being stored

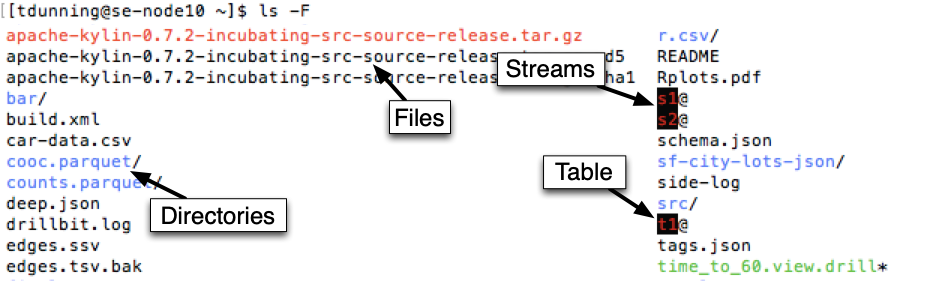

Another superpower of the data fabric volume is it lets you name your data in addition knowing where it is stored. This is an important way to think about data: rather than just storage, think about what the data is instead of thinking of it as just bits on disks. The data fabric efficiently and safely manages the meta data for directories, files, tables, and event streams, packaged together in a volume. Finding out what data is included in a particular volume is as easy as a familiar ls command, as shown in this screenshot:

Volumes go beyond just storage: they are also key to performance and scale.
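If a volume is reachable through an ordinary file-system path, the same kind of listing can be done from a program. The sketch below assumes such a path exists (for example through a POSIX or NFS mount, which is an assumption here, not something shown in this post). To stay runnable anywhere, it uses a temporary directory as a stand-in for the volume, and the entry names are hypothetical.

```python
import os
import tempfile


def list_entries(path):
    """Return sorted (name, kind) pairs for the entries directly under path."""
    with os.scandir(path) as entries:
        return sorted(
            (entry.name, "dir" if entry.is_dir() else "file") for entry in entries
        )


# A temporary directory stands in for a mounted volume (hypothetical contents).
with tempfile.TemporaryDirectory() as volume_path:
    os.mkdir(os.path.join(volume_path, "raw"))
    open(os.path.join(volume_path, "readme.txt"), "w").close()

    listing = list_entries(volume_path)
    for name, kind in listing:
        print(f"{kind:4} {name}")
```

Pointing `list_entries` at a real mount path would list that volume's top-level contents in the same way `ls` does.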

Policies Are Set at the Volume Level

A single data fabric cluster can contain trillions of files plus tables and stream events. It would be maddening to try to manage all that data at the level of individual files or directories, but managing at the cluster level lacks the granularity needed for effective control. A data fabric volume provides a middle ground, letting you apply several kinds of policies to an entire set of files, directories, or sub-volumes together. These volumes give you the power to manage data at the appropriate scale, combining convenience with the granularity that is needed.

Policy administration via data fabric volumes lets you control who does (and who does not) have access. It also lets you set replication levels for data resiliency and establish ownership and accountability for data. In the upcoming 6.2 release, volumes will also support policy-based security for better data governance. Importantly, you can also delegate aspects of control. This ability to delegate means that your core administrators maintain enough control but are out of the critical path for most operations.
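One way to picture volume-level policy is as a small record attached to a volume rather than to each file. The sketch below is a toy model only: the `VolumePolicy` fields, the paths, the user names, and the "innermost volume wins" rule are all assumptions made for illustration and do not describe the product's actual access-control implementation.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VolumePolicy:
    """Hypothetical policy record attached to one volume."""
    mount_path: str
    owner: str
    replication: int
    readers: frozenset = field(default_factory=frozenset)


def volume_for(path, volumes):
    """Pick the volume whose mount path is the longest prefix of path."""
    matches = [
        v for v in volumes
        if path == v.mount_path or path.startswith(v.mount_path.rstrip("/") + "/")
    ]
    if not matches:
        raise LookupError(f"no volume contains {path}")
    return max(matches, key=lambda v: len(v.mount_path))


def can_read(user, path, volumes):
    """Access is decided once per volume, not per file (toy rule)."""
    volume = volume_for(path, volumes)
    return user == volume.owner or user in volume.readers


volumes = [
    # Administered centrally.
    VolumePolicy("/projects", owner="admin", replication=3,
                 readers=frozenset({"alice", "bob"})),
    # Delegated: alice owns this sub-volume and decides who may read it.
    VolumePolicy("/projects/yield-analysis", owner="alice", replication=3,
                 readers=frozenset({"carol"})),
]

print(can_read("carol", "/projects/yield-analysis/run1.csv", volumes))
print(can_read("bob", "/projects/yield-analysis/run1.csv", volumes))
print(can_read("bob", "/projects/notes.txt", volumes))
```

The point of the sketch is the granularity: a single policy covers every file under the volume, and ownership of a sub-volume can be handed to the team that uses it.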

Real-world example: delegate control within bounds

A high-tech manufacturer was facing the challenge of optimizing performance in a process involving hundreds of steps, many of which were carried out on isolated systems. They switched to using HPE Ezmeral Data Fabric to unify much of the process by using a single data infrastructure. The result was 92% faster data analysis, a big win because that led to better yields. The improvement came in the form of eliminating many steps. For example, it was no longer necessary to make manual copies of data given the simplicity of a uniform data fabric that was accessible everywhere. The advantages provided by the data fabric had an impact from data acquisition to analytics to final steps in the manufacturing process.

Data fabric volumes play a big role in the management of a multi-team, multi-step system where the same data needs to be accessed by multiple groups, yet security is extremely important. HPE Ezmeral Data Fabric lets you devolve some aspects of data administration to users, as it’s easy to set up volumes for specific data needs. Yet, the HPE Ezmeral Data Fabric still allows you to retain granular control over who has access, an example of delegating control within bounds.

Applying policies at the volume level opens all sorts of possibilities for data management of large systems, including data placement across a cluster. Let’s see how that works and why it matters.

Volume topology for data placement control

Data fabric volumes give you the power to conveniently place data at the volume level on specific racks, specific machines, or groups of machines. You do this by assigning volume topology to match the node topology of interest. But why do it?

One important goal of volume topology is to control the impact of failure domains, such as individual racks. Notice in Figure 1 that a volume can stretch across machines housed in multiple racks and the data replicas (colored hexagons in the figure) land on different machines. By setting volume topology, you can ensure that if one particular rack fails, operations are uninterrupted, and data is not lost because replicas outside that failure domain continue to function as though nothing happened.
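The failure-domain argument can be checked with a toy model. The sketch below is not the data fabric's placement algorithm; the rack and node names are hypothetical, and the rule (one replica per rack) is an assumption chosen to illustrate why spreading replicas across racks survives the loss of any single rack.

```python
def place_replicas(nodes_by_rack, copies=3):
    """Toy rule: put each replica on a node in a different rack."""
    racks = sorted(nodes_by_rack)
    if copies > len(racks):
        raise ValueError("need at least as many racks as replicas")
    return [(rack, nodes_by_rack[rack][0]) for rack in racks[:copies]]


def surviving(replicas, failed_rack):
    """Replicas that remain available when one whole rack goes down."""
    return [replica for replica in replicas if replica[0] != failed_rack]


# Hypothetical cluster layout.
cluster = {
    "rack1": ["node1", "node2"],
    "rack2": ["node3", "node4"],
    "rack3": ["node5", "node6"],
}

replicas = place_replicas(cluster, copies=3)
for rack in cluster:
    left = surviving(replicas, failed_rack=rack)
    print(f"{rack} fails: {len(left)} of {len(replicas)} replicas still available")
```

Had all three replicas landed on nodes in one rack, losing that rack would leave none, which is the situation volume topology is meant to rule out.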

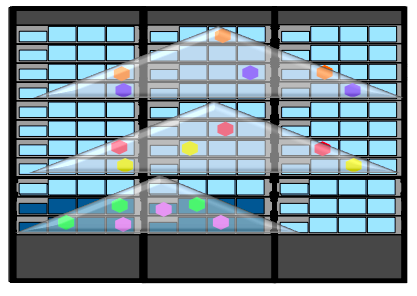

Another use for volume topology is to place particular data on specialized hardware, such as fast, solid state storage (SSDs) or to isolate SLA-critical workloads. This can be easily done in a mixed cluster by applying volume topology, as depicted in the following figure.
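Matching a volume's topology to node topology can be thought of as prefix matching on path-like labels. The sketch below assumes topologies are written as slash-separated paths; the specific labels (`/data/ssd/rack1` and so on) and node names are hypothetical, and the function is an illustration rather than the product's own logic.

```python
def eligible_nodes(volume_topology, node_topologies):
    """Nodes whose topology label falls under the volume's topology label."""
    prefix = volume_topology.rstrip("/") + "/"
    return sorted(
        node for node, topology in node_topologies.items()
        if (topology.rstrip("/") + "/").startswith(prefix)
    )


# Hypothetical mixed cluster: two SSD nodes and two HDD nodes.
node_topologies = {
    "node1": "/data/ssd/rack1",
    "node2": "/data/ssd/rack2",
    "node3": "/data/hdd/rack1",
    "node4": "/data/hdd/rack2",
}

print(eligible_nodes("/data/ssd", node_topologies))        # SSD nodes only
print(eligible_nodes("/data", node_topologies))            # whole cluster
print(eligible_nodes("/data/hdd/rack2", node_topologies))  # a single rack
```

Under this reading, assigning a latency-sensitive volume the `/data/ssd` label keeps its data on the SSD nodes, while a volume labeled `/data` may use any machine.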

Data placement is about to become even more granular with the upcoming 6.2 release of HPE Ezmeral Data Fabric. The blog post “HPE Ezmeral Data Fabric: A sneak peek at what’s coming in 6.2” describes fine-grained data placement control for heterogeneous machines containing a mixture of SSDs and slower, spinning devices (HDDs).

Next Steps

Stay tuned for more postings on data management superpowers with the data fabric. For now, you may want to use these resources to find out more about HPE Ezmeral Data Fabric and the HPE Ezmeral Container Platform, for which the data fabric serves as the data layer.

- Read the blog post “If HPE Ezmeral Data Fabric is the answer, what is the question?”

- Watch the video “How to Size a Data Fabric” with Ted Dunning, CTO for Data Fabric at HPE.

- Explore the HPE Ezmeral Data Fabric web page.

- Explore the new developer-related HPE Ezmeral Data Fabric content on the HPE Dev community.

- Read the HPE Ezmeral Container Platform solution brief or download this IDC report.

- Read the blog post “How HPE Data Fabric (formerly MapR Data Platform) maximizes the value of data.”

Ellen Friedman

Hewlett Packard Enterprise

Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Ellen worked at MapR Technologies for seven years prior to her current role at HPE, where she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
