Designing Storage Quality Of Service For App Centric Infrastructure

How can you manage resources for applications on flash storage, and how can an app-centric storage QoS meet those requirements? With fair sharing of resources and noisy neighbors to contend with, is it possible to achieve this without the continuous involvement of administrators? Yes, it is. Read how HPE Nimble Storage provides app-centric storage QoS.

Flash in the data center has enabled faster access to data, which has led to a great deal of infrastructure consolidation. Consolidation yields better utilization and reduces cost. However, it also brings together diverse applications contending for the same set of resources. These applications usually have different Service Level Agreements (SLAs). For example, some of the challenges an administrator faces are:

- How to retrieve that most important email for the CEO from a past snapshot without disturbing a several-hour-long Extract-Transform-Load (ETL) job running on the same storage array?

- How to take a backup during normal business hours without disrupting access to home directories?

A modern flash-optimized storage array needs to satisfy the SLAs of many consumers, such as application input/output operations (IOs), snapshot creation, replication, garbage collection and metadata processing. Some of these consumers are throughput demanding while others are sensitive to latency. Some are opportunistic and some have stricter deadlines.

The problem of meeting the expectations of consumers translates to allocating storage system performance to them. In a well-designed storage software architecture, performance is usually bound by the Central Processing Unit (CPU). It is better to be bound by CPU speed than by storage media speed, because adding compute increases the performance of the storage software and lets us take advantage of Moore's law. However, at times, because of the application IO profile or a degraded mode of operation, such as a rebuild after a disk or flash drive failure, performance can also be limited by the available disk or flash drive throughput. Overall, ensuring that the SLAs of consumers are met depends on how the saturated resources are allocated to the consumers.

What are the requirements of an App centric storage QoS?

To answer this question, we turned to the vast amount of data collected by HPE InfoSight from our HPE Nimble Storage installed base. We carefully analyzed application behavior at times of contention. We profiled storage resource consumption to find hot spots. We modeled changes in application behavior by experimenting with various resource allocation schemes. We also spoke to numerous customers about their pain points in deploying consolidated infrastructure and ensuring QoS for their applications. Based on our analysis and what we heard from our customers, it became clear to us that a good storage QoS solution needs to have the following properties:

A) Fair sharing of resources between consumers

At times of congestion, there are two ways to ensure fairness: fairness in outcome and fairness in opportunity. Let's look at each of them.

i. Fairness in outcome

Under this scheme, the system ensures that each application gets its fair share of performance, measured either as Input/Output Operations Per Second (IOPS) or megabytes per second (MB/s).

Typically, small random IOs consume more resources per byte while large sequential IOs consume more resources per IO. Because of this difference in IO characteristics, the system could still be unfair to applications.

For example, if the system were to ensure fair share of IOPS, then an application doing large sequential IO would win more resources. On the other hand, if the system were to ensure fair share of MB/s, then an application doing small random IO would win more resources.
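The asymmetry described above can be made concrete with a small sketch. The cost model below (a fixed cost per IO plus a cost per KiB) and its constants are assumptions chosen purely for illustration; they are not measurements from any array.

```python
def io_cost(size_kib, per_io=1.0, per_kib=0.05):
    """Hypothetical cost of one IO in abstract resource units (assumed model)."""
    return per_io + per_kib * size_kib


def resources_at_equal_iops(iops, size_kib):
    """Resources consumed per second when an app is held to a given IOPS."""
    return iops * io_cost(size_kib)


def resources_at_equal_throughput(kib_per_s, size_kib):
    """Resources consumed per second when an app is held to a given KiB/s."""
    return (kib_per_s / size_kib) * io_cost(size_kib)


SMALL, LARGE = 8, 256  # IO sizes in KiB (illustrative)

# Both apps are given the same IOPS: the large-IO app consumes more resources.
equal_iops = {size: resources_at_equal_iops(1000, size) for size in (SMALL, LARGE)}

# Both apps are given the same throughput: the small-IO app consumes more.
equal_tput = {size: resources_at_equal_throughput(102400, size) for size in (SMALL, LARGE)}

print("equal IOPS      :", equal_iops)
print("equal throughput:", equal_tput)
```

Under this assumed model, whichever metric is equalized, one of the two applications ends up with a larger share of the underlying resources.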

ii. Fairness in opportunity

Under this scheme, the system ensures that each consumer gets its fair share of resources. The goal is not to focus on the outcome, but on the consumption. An application that utilizes resources efficiently will have consumed the same amount of resources as an un-optimized application. However, because of its efficiency, it is able to produce better results.

For example, an application sending large sequential IOs will get the same resources, such as CPU cycles, as an application sending small random IOs. But because of its efficiency, it will be able to produce better throughput measured in MB/s.

Under the first scheme, performance of applications is determined by the most un-optimized application in the system. However, the second scheme ensures that application performance is not influenced by other applications, providing isolation between them.

In other words, fair sharing of resources between consumers is the key to providing isolation from noisy neighbors.
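As a rough illustration of fairness in opportunity, the sketch below always dispatches the next IO from whichever application has consumed the fewest resource units so far. It is a simplified toy scheduler, not HPE Nimble Storage's implementation; the function name `fair_share_by_cost`, the cost model and the workload names are all hypothetical.

```python
def fair_share_by_cost(io_sizes_kib, budget, per_io=1.0, per_kib=0.05):
    """Toy scheduler: serve the app with the least consumed cost (assumed model).

    io_sizes_kib maps an app name to the size of the IOs it keeps sending.
    budget is the total number of abstract resource units available.
    """
    consumed = {app: 0.0 for app in io_sizes_kib}
    moved_kib = {app: 0 for app in io_sizes_kib}
    spent = 0.0
    while True:
        # Pick the app that has used the fewest resources so far.
        app = min(consumed, key=consumed.get)
        cost = per_io + per_kib * io_sizes_kib[app]
        if spent + cost > budget:
            break
        consumed[app] += cost
        moved_kib[app] += io_sizes_kib[app]
        spent += cost
    return consumed, moved_kib


consumed, moved_kib = fair_share_by_cost({"vdi": 8, "vmotion": 256}, budget=10_000)
print("resource units consumed:", consumed)
print("KiB transferred        :", moved_kib)
```

Both applications end up consuming nearly the same number of resource units, yet the large-IO application moves more data, which is the behavior the text describes.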

B) Work conserving

In computing and communication systems, a work-conserving scheduler is a scheduler that always tries to keep the scheduled resource(s) busy.

Under this requirement, if a resource is available and there is demand for it, the demand is always satisfied. Moreover, the system lets a consumer use surplus resources when they are available, and does not penalize it for that consumption when contention arises later.

For example, when a backup job is the only application running on the system, it can consume all the available resources. However, when a production application, such as a database, starts doing IO, resources are automatically fair shared. The backup job is not throttled because of its past consumption.
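One common way to express a work-conserving fair allocation is max-min fairness (water-filling). The sketch below is a generic version of that textbook technique under assumed capacity and demand numbers; it is not taken from the product. Because the function keeps no history, past consumption cannot count against a consumer.

```python
def max_min_fair(capacity, demands):
    """Split capacity among consumers using max-min fairness.

    No consumer gets more than it asks for, and capacity is left idle
    only when every demand has been fully met.
    """
    allocation = {name: 0.0 for name in demands}
    remaining = float(capacity)
    unsatisfied = {name: float(d) for name, d in demands.items() if d > 0}
    while unsatisfied and remaining > 1e-9:
        share = remaining / len(unsatisfied)
        # Consumers whose demand fits within an equal share are fully served.
        satisfied = [name for name, d in unsatisfied.items() if d <= share]
        if not satisfied:
            # Everyone wants more than an equal share: split what is left evenly.
            for name in unsatisfied:
                allocation[name] += share
            break
        for name in satisfied:
            allocation[name] += unsatisfied[name]
            remaining -= unsatisfied.pop(name)
    return allocation


# Illustrative numbers only (abstract resource units per second).
alone = max_min_fair(100, {"backup": 500})
shared = max_min_fair(100, {"backup": 500, "database": 30})
print(alone)   # {'backup': 100.0}
print(shared)  # {'backup': 70.0, 'database': 30.0}
```

When the backup job runs alone it receives the full capacity; once the database arrives, the database's demand is met and the backup job receives everything that is left.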

C) Balancing demands and SLAs between consumers

This requirement is about accommodating the differing and often conflicting needs of applications. In the presence of throughput-intensive applications, latency-sensitive ones should not suffer. While ensuring fair sharing of resources, the system should also absorb bursts and variations in application demand. Above all, it should consistently meet applications’ performance expectations.

D) Minimal user input in setting performance expectations

This is the most stringent requirement of all. The best solution is the one that works out of the box, with as little tinkering as possible. By reducing administrative overhead, it allows administrators to focus on their applications and users instead of the infrastructure.

Note that this does not entirely eliminate the need for an administrator to enter exact performance specifications for applications, such as IOPS, MB/s or performance classes, when they are required to convey the administrator's intent to the system. However, in most cases, where the requirements are isolating noisy neighbors, providing performance insulation and protecting latency-sensitive applications, an exact performance specification is a burden on the administrator.

A review of existing storage QoS solutions in the market

When we explore existing storage QoS solutions, we see that many variants of the following designs are prevalent:

1. Slow down admission to the speed of the slowest bottleneck

According to the M/M/1 queuing model, with exponentially distributed inter-arrival and service times, the response time for requests increases non-linearly as a resource approaches saturation. When the system has multiple resources involved in request processing, the speed of the slowest resource determines the response time. However, at the time of request admission, it might not be possible to determine upfront which resources are needed. In order to avoid non-deterministic response time, this design throttles admission based on the speed of the slowest resource.
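The non-linear growth mentioned above follows from the standard M/M/1 result that the mean response time is 1 / (μ − λ), where λ is the arrival rate and μ is the service rate. The short sketch below evaluates it at a few utilization levels; the service rate of 1000 IO/s is an arbitrary illustrative value.

```python
def mm1_response_time(arrival_rate, service_rate):
    """Mean response time (queueing plus service) of an M/M/1 queue, in seconds."""
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable when arrival rate >= service rate")
    return 1.0 / (service_rate - arrival_rate)


SERVICE_RATE = 1000  # IO/s, illustrative only

for arrival_rate in (500, 900, 990):
    utilization = arrival_rate / SERVICE_RATE
    millis = mm1_response_time(arrival_rate, SERVICE_RATE) * 1000
    print(f"utilization {utilization:.0%}: mean response time {millis:.0f} ms")
```

Going from 50% to 99% utilization roughly doubles the load but multiplies the mean response time by 50, which is why designs of this kind throttle admission before the slowest resource saturates.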

Clearly, this design is wasteful. Since the upper limit on consumption is based on the slowest bottleneck in the system, consumption of the remaining resources cannot be maximized. For example, application IO requests that can be served purely from memory may be throttled to the speed of IOs that need to be served from storage media.

To give a real life analogy, this solution is similar to restricting the admission to a museum based on the capacity of the smallest gallery. Patrons not interested in visiting that particular gallery have no option but to wait in that single long line.

Comparing this design to requirements of QoS, we can see that this design does not meet requirement B (Work conserving).

2. Limit resource consumption

In order to ensure QoS, this design puts a limit on the throughput (IOPS or MB/s) of applications, so that their resource consumption is limited and none of the resources are saturated. There are a few problems with this:

- Since limiting consumption is the means of resource allocation, this design requires limits to be set on every object/Logical Unit Number (LUN)/volume. This is simply an administrative headache.

- It is not work conserving. Since the upper limit on consumption is purely arbitrary, the underlying resource utilization may be far from saturation. Yet applications cannot use those resources.

- If the limit is too high, it may be ineffective.
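The two failure modes listed above can be shown with a small sketch. The helper `apply_static_limits` and all the numbers are hypothetical and only model a fixed per-application cap; they do not describe any particular product.

```python
def apply_static_limits(capacity, demands, limits):
    """Admit each consumer up to its fixed limit, regardless of system load."""
    admitted = {name: min(demand, limits[name]) for name, demand in demands.items()}
    used = sum(admitted.values())
    return {
        "admitted": admitted,
        "idle_capacity": max(0, capacity - used),
        "unmet_demand": sum(demands.values()) - used,
        "oversubscribed_by": max(0, used - capacity),
    }


# Limits set too low: capacity sits idle while demand goes unmet.
low = apply_static_limits(
    capacity=1000,
    demands={"backup": 900, "database": 50},
    limits={"backup": 200, "database": 200},
)

# Limits set too high: admitted load exceeds capacity, so the caps protect nothing.
high = apply_static_limits(
    capacity=1000,
    demands={"backup": 900, "database": 400},
    limits={"backup": 800, "database": 800},
)

print(low)
print(high)
```

In the first case 750 units of capacity stay idle although the backup job wants them; in the second the limits allow 200 units more than the system can deliver.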

In real life, we experience throttling of video streaming by some Internet Service Providers as a strategy to avoid network congestion. However, throttling is in effect even when the network is not congested.

Comparing this design to requirements of QoS, we can see that this design does not meet requirements A (Fair sharing), B (Work conserving) and D (Minimal user input).

3. Guarantees on resource consumption

To avoid the problem of limiting every consumer, this solution gives a guarantee (or reservation) on a certain amount of resource consumption to a few consumers. Those that have guarantees can consume up to the guarantee even during resource contention. When there is surplus, they can consume more than the guarantee. In order to ensure that unimportant applications do not drive the system to saturation, limits still need to be set on those.

There are a couple of disadvantages with this design:

- To ensure guarantees are met, this design forces a pessimistic estimation of performance headroom. As a result, the amount of reservable or guarantee-able consumption is much lower than the system's capabilities.

- Though it eliminates the need to configure limits on every LUN or object, explicit guarantees still need to be configured for the consumers that need them.
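The first disadvantage can be sketched as an admission check. The assumption here is that guarantees must hold even in the worst case (for example, a degraded mode), so new reservations are checked against a pessimistic capacity figure rather than the typical one. The function names and all numbers are hypothetical.

```python
def reservable_headroom(worst_case_capacity, guarantees):
    """Capacity still available for new guarantees (assumed admission rule)."""
    return worst_case_capacity - sum(guarantees.values())


def can_admit(worst_case_capacity, guarantees, new_guarantee):
    """A new guarantee is accepted only if it fits in worst-case headroom."""
    return new_guarantee <= reservable_headroom(worst_case_capacity, guarantees)


TYPICAL_IOPS = 100_000     # what the system usually delivers (illustrative)
WORST_CASE_IOPS = 40_000   # what it can promise under all conditions (illustrative)

existing = {"database": 25_000, "mail": 10_000}

headroom = reservable_headroom(WORST_CASE_IOPS, existing)
accepted = can_admit(WORST_CASE_IOPS, existing, 10_000)

print("reservable headroom:", headroom)
print("10,000 IOPS guarantee accepted:", accepted)
```

Here a 10,000 IOPS guarantee is rejected even though the system typically has far more than that to spare, because only 5,000 IOPS remain reservable against the worst-case figure.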

This design meets requirements A (Fair sharing), B (Work conserving) and C (Balancing competing SLAs) most of the time, if we ignore instances when applications are constrained due to limits set on them. However, it doesn't meet requirement D (Minimal input).

A review of HPE Nimble Storage's QoS solution

Keeping the requirements in mind and understanding the limitations of existing solutions, we designed our QoS solution with the goal of having a fair sharing scheduler in front of each bottlenecked resource in the system. These schedulers do not restrict or limit consumption at times of contention; instead, they encourage and ensure responsible sharing. Applications that don't need a certain resource do not have to be throttled when contention for that resource arises.

Here is how Nimble Storage fares against the requirements:

A) Fair sharing of resources

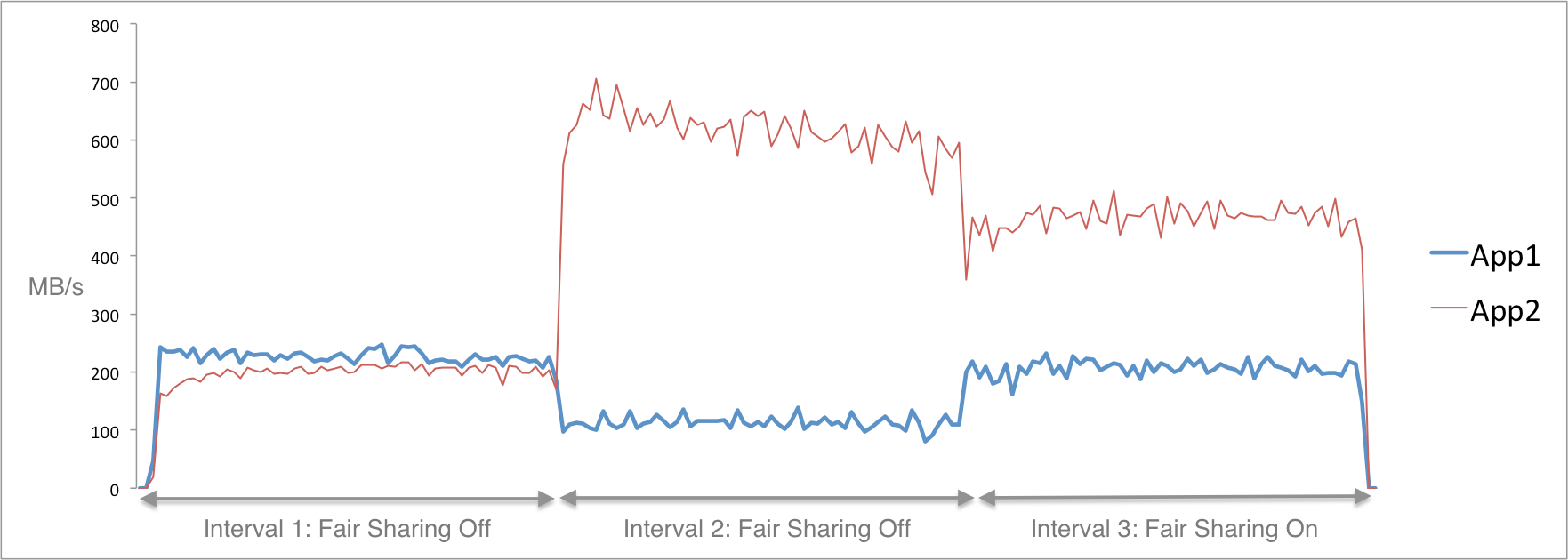

We tested our design for fairness using two diverse workloads: Virtual Desktop Infrastructure (VDI) and VMware’s Storage vMotion. While VDI is light on resource consumption with small random IOs (8K to 16K IO sizes), Storage vMotion sends large IOs (128K or more IO sizes) that are resource hungry.

In the chart above, App1 and App2 represent two different workloads. While App1 represents VDI traffic throughout the test, App2 starts out as VDI and then transitions to VDI + Storage vMotion traffic during the test.

At Interval 1, both App1 and App2 represent VDI traffic, sending an equal amount of load. As you can see in the graph, the performance of App1 and App2 is nearly identical even with fair sharing turned off.

At Interval 2, App2 transitions to VDI + Storage vMotion and starts sending large sequential Storage vMotion IOs in addition to small random VDI IOs. With fair sharing disabled in this interval, we can see App2’s IOs consume most of the resources, effectively starving App1’s IOs of resources. As a result, the performance of App1 is impacted. This is a typical ‘noisy neighbor’ scenario.

At Interval 3, we enabled fair sharing. As a result, we can see that App1’s performance rises back to the level at Interval 1, when both App1 and App2 were using equal resources. In effect, App1’s performance is isolated from noisy neighbors. App2 still enjoys higher performance because it is more efficient with larger IOs.

B) Work conserving

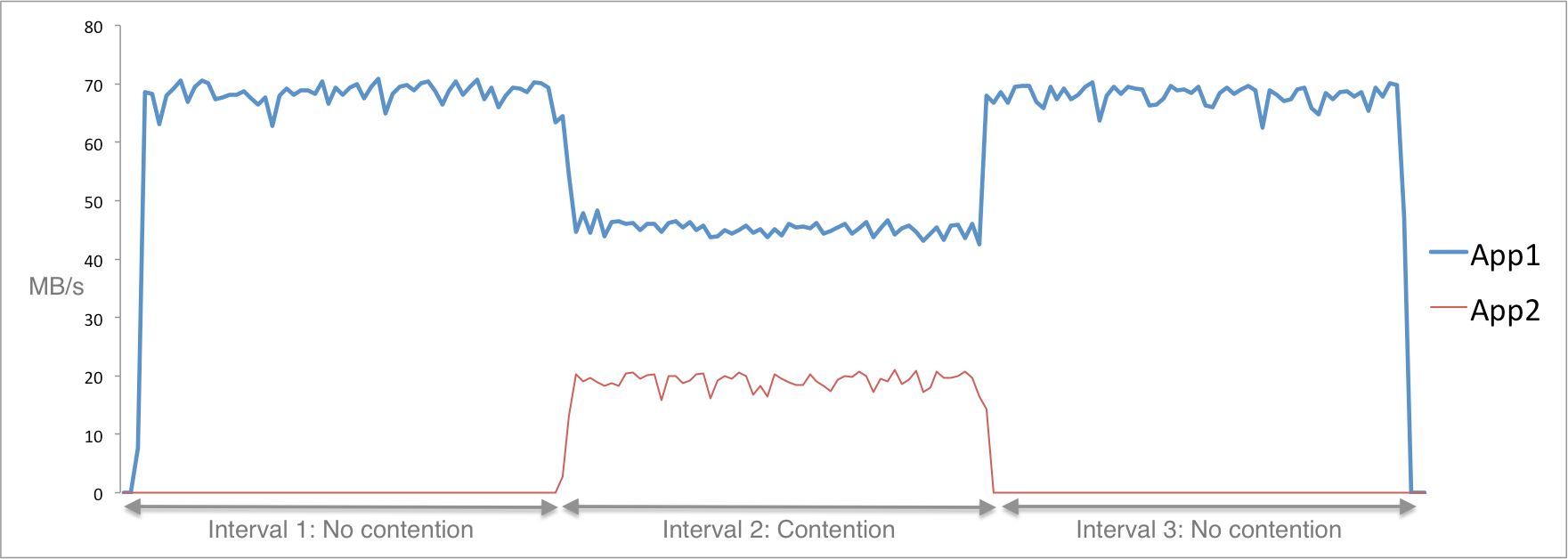

To test the work-conserving nature of our QoS solution, we ran an experiment that involves a backup job and a database workload. The backup job is long running and sends large sequential IOs, while the database workload sends intermittent random IOs that are latency sensitive.

In the chart above, App1 represents the backup job and App2 represents the database workload.

At Interval 1, App1 is sending large sequential IOs from the backup job. Since nothing else in the system is competing for resources, it can consume all the resources to get the maximum throughput.

At Interval 2, App2 starts sending intermittent random IOs from the database workload. At this time, due to resource contention, QoS kicks in to ensure fair allocation of resources between the two workloads.

At Interval 3, App2 stops and App1 starts consuming all the resources again. We can see:

- At no point did we have to cap the throughput of App1

- At Interval 1 and Interval 3, App1 was allowed to consume all available resources

- At Interval 2, App1 was not punished for having consumed resources while there was no contention

C) Balancing demands and SLAs

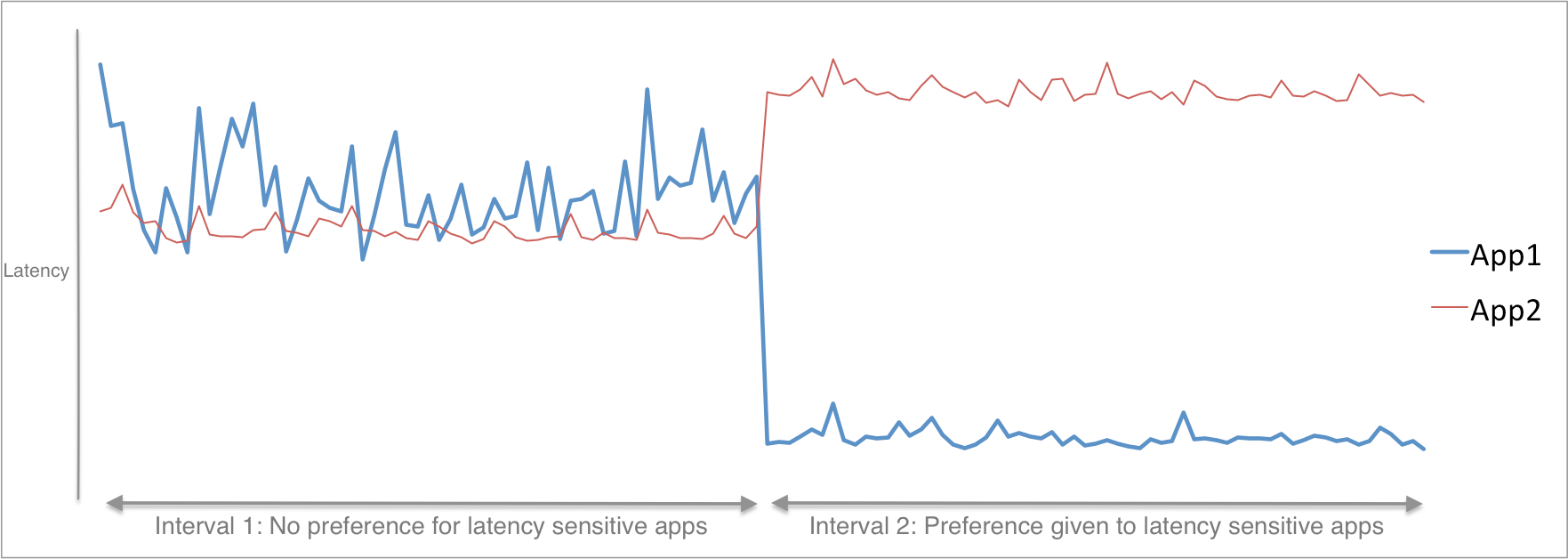

To verify that our design meets the diverse SLAs of applications, we ran a latency-sensitive database query along with a throughput-intensive backup job.

In the chart above, App1 represents the database query workload and App2 represents the backup job.

At Interval 1, we have QoS turned off. App1 sends small random database query IOs. App2 sends large sequential backup IOs. Since there is no QoS, we can see that App1 suffers high latency.

At Interval 2, we have QoS enabled. We can see that App1 enjoys latency that is 5x lower.

The QoS was automatically able to identify that App1’s IOs are latency sensitive and was able to ensure lower latency without any explicit configuration.

D) Minimal user input

In none of the experiments above did we have to explicitly specify application demands, or put a limit on their IOs to avoid contention. The system was able to identify contention and allocate resources as per the demands. There were no user-facing knobs to control.

As we can see, the result of meeting all these requirements is an adaptive system that automatically satisfies application requirements, isolates them from greedy or resource hungry applications (i.e., noisy neighbor isolation) without needing the administrator to intervene. This way, an administrator can focus more on delivering service to their customers and less on the infrastructure. This is what makes an infrastructure app centric.
