Handle massive IoT data with HPE BlueData and Qumulo

You can create a unified and secured environment for all of your AI workloads.

Supporting different AI/ML and analytics workloads, while also meeting the needs of data analysts to securely access all the data using their preferred AI tools, is a challenging task for any IT manager or team. IT organizations often address these needs with multiple clusters, or with a combination of compute and storage resources. Unfortunately, such environments are complicated to operate, create data silos and redundant copies, don’t facilitate hardware and software optimization, and are intrinsically insecure.

That’s the kind of stuff that keeps an IT manager awake at night.

I do have good news to share, though. With HPE’s experience in complex AI and analytics environments, we have been able to integrate HPE BlueData EPIC and the Qumulo file system to provide customers like you with a simple, flexible and comprehensive AI environment.

We built out the following lab scenario based on a real customer’s IoT process:

- Qumulo FS provided a software-defined distributed file system designed specifically to support the enterprise. IoT data generally arrives in a specialized file format (e.g. MDF4) and is then converted into a different file format (Parquet/Avro) that speeds up queries and allows efficient data compression and encoding schemes.

- HPE BlueData EPIC provided an enterprise-grade governance and security platform to manage multi-tenant environments. It allowed multiple analytics teams to access all the data they needed. Using EPIC, teams could also create advanced analytics models on scale-out Big Data environments such as Hadoop and Spark, and then work with their preferred analytics tools.
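To illustrate why a columnar format helps with compression and queries, here is a minimal sketch in plain Python. It is not Parquet or Avro itself and not the lab's conversion pipeline; the sensor records, field names, and helper functions are all hypothetical. It only shows the general idea: once values are grouped by column, repeated values can be dictionary-encoded and run-length encoded, and an aggregate can be computed from a single column.

```python
from itertools import groupby

# Hypothetical sensor records, standing in for rows decoded from a raw IoT log.
rows = [
    {"vehicle": "car-01", "signal": "speed", "value": 50},
    {"vehicle": "car-01", "signal": "speed", "value": 50},
    {"vehicle": "car-01", "signal": "speed", "value": 52},
    {"vehicle": "car-02", "signal": "rpm", "value": 1800},
    {"vehicle": "car-02", "signal": "rpm", "value": 1800},
]


def to_columns(records):
    """Pivot row-oriented records into one list per field (columnar layout)."""
    return {name: [record[name] for record in records] for name in records[0]}


def dictionary_encode(column):
    """Replace each value with a small integer index into a list of distinct values."""
    dictionary = list(dict.fromkeys(column))  # distinct values, first-seen order
    index = {value: i for i, value in enumerate(dictionary)}
    return dictionary, [index[value] for value in column]


def run_length_encode(column):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(column)]


def column_sum(encoded):
    """Aggregate a run-length encoded numeric column without reading other fields."""
    return sum(value * count for value, count in encoded)


columns = to_columns(rows)
vehicle_dict, vehicle_codes = dictionary_encode(columns["vehicle"])
encoded_vehicle = run_length_encode(vehicle_codes)
encoded_value = run_length_encode(columns["value"])

print(vehicle_dict, encoded_vehicle)
print(encoded_value, column_sum(encoded_value))
```

Real columnar formats combine encodings like these with general-purpose compression and per-column statistics, so the savings on repetitive sensor data can be much larger than this toy example suggests.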

HPE BlueData and Qumulo as a complement to create an enterprise-level AI environment

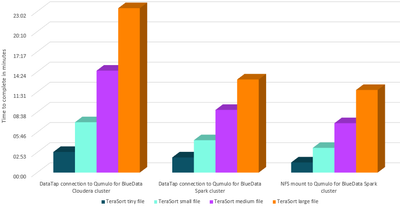

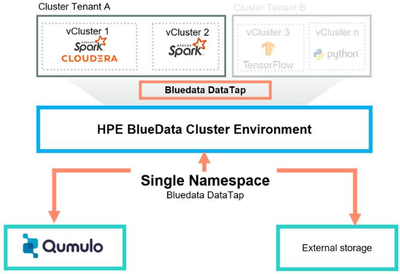

The goal of the solution tested was to leverage the HPE BlueData EPIC Software to create a single namespace containing multiple environments that could access data from a Qumulo environment. The results would then be analyzed for performance during the integration process.

- Qumulo’s software-defined distributed file system is a scale-across solution capable of managing billions of files, large or small, seamlessly across an enterprise environment. The file system offered real-time visibility, scale, and control of data without performance degradation, while also providing centralized access to files.

- HPE BlueData Software provided a platform for distributed AI, machine learning, and analytics on containers. HPE BlueData EPIC Software provided multitenancy and data isolation to ensure logical separation between each project, group, or department within the organization. By creating a single environment that contained the massive datasets, the software enabled multiple teams to use their analytical tool of choice to work on the data. The result? Cluster sprawl and redundant copies of test data were avoided or eliminated entirely.

- HPE BlueData EPIC is integrated with Qumulo file systems via EPIC DataTap. EPIC DataTap implements a high-performance connection to remote data storage systems via NFS and HDFS. This allows unmodified Hadoop and AI/ML applications to run against data stored in remote NFS and HDFS systems without loss of performance. All data can be managed as a single pool of storage (single namenode), so no data movement is needed.
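As a rough sketch of what "no data movement" means for an application, the snippet below builds the two ways a job might reference the same file: through a DataTap URI or through a local NFS mount. It assumes DataTap paths take the form `dtap://<datatap-name>/<path>`; the DataTap name `qumulo-iot`, the mount point `/mnt/qumulo`, and the file path are hypothetical and not taken from the lab setup.

```python
import posixpath
from urllib.parse import urlsplit

# Assumption: DataTap URIs look like dtap://<datatap-name>/<path>.
# "qumulo-iot" is a hypothetical DataTap name used only for illustration.
DATATAP_NAME = "qumulo-iot"


def dtap_uri(relative_path, datatap=DATATAP_NAME):
    """Build a DataTap URI for a path relative to the DataTap root."""
    clean = posixpath.normpath("/" + relative_path.lstrip("/"))
    return f"dtap://{datatap}{clean}"


def nfs_path(relative_path, mount_point="/mnt/qumulo"):
    """Build the equivalent path on a hypothetical local NFS mount of the same export."""
    return posixpath.join(mount_point, relative_path.lstrip("/"))


uri = dtap_uri("adas/2019/drive-001.parquet")
parts = urlsplit(uri)

print(uri)
print(parts.scheme, parts.netloc, parts.path)
print(nfs_path("adas/2019/drive-001.parquet"))
```

In a Spark job running on a cluster where the DataTap connector is configured, a URI like this would be passed wherever an `hdfs://` path would otherwise go, for example `spark.read.parquet(uri)`, so the application code itself stays unchanged.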

The testing results confirmed that there was no performance degradation when using DataTap compared to using an NFS mount. The HPE BlueData and Qumulo environments were vanilla installations — with no performance or configuration tweaking required.

Here's a demo that Calvin Zito got showing Qumulo in action - but note this is not with HPE BlueData.

Conclusion? A flexible analytics environment

The lab team’s testing demonstrated that the integration of HPE BlueData and Qumulo can provide a single, flexible environment in which multiple clusters can be created and can deploy the same tools against the same data, with no cluster sprawl and no need to deal with multiple storage systems.

Data scientists and analysts can focus on analyzing the test data with their preferred tools, while DataTap allows them to access all data lakes without having to create multiple copies and without a performance penalty.

To find out more about handling massive IoT data with HPE BlueData and Qumulo, please check out our reference list:

https://qumulo.com/blog/hybrid-storage-solution-for-adas-development-and-simulation/

https://qumulo.com/wp-content/uploads/2019/01/CS-Q141-Hyundai-MOBIS-1.pdf

https://qumulo.com/wp-content/uploads/2019/05/SB-Q168-ADAS-A4.pdf

https://sparkbyexamples.com/spark/spark-read-write-dataframe-parquet-example/

Meet HPE blogger Eric Brown, an HPE Solutions Engineer who has worked with HPE Storage for over 20 years. His areas of expertise are Big Data and Analytics.

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
