
Introducing vSphere Distributed Switch Deployment with HPE Nimble Storage dHCI

Learn about the new HPE Nimble Storage dHCI feature that enables native deployment on vSphere Distributed Switches for new server deployments.

Previously, vSphere Distributed Switches could carry additional virtual machine (VM) workloads in a dHCI environment, but in a typical new server deployment the management and iSCSI traffic had to remain on the default vSphere Standard Switches.

You may have infrastructure requirements that call for vSphere Distributed Switches (vDS) rather than traditional vSphere Standard Switches (VSS), a need for easier management at scale, or a requirement for advanced features available only with vDS. With automated vDS deployment now built into dHCI, these needs can be met through the standard dHCI stack setup workflow, saving time and effort.

Let’s take a closer look at HPE Nimble Storage dHCI support for vSphere Distributed Switches.

Deploying VDS for dHCI

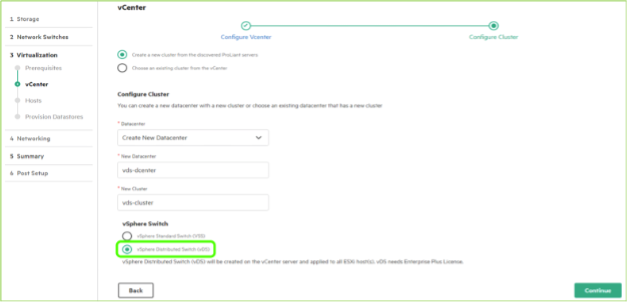

When you deploy and configure dHCI on new servers, choosing VDS instead of VSS is straightforward. This continues the goal of HPE Nimble Storage dHCI to keep deployment and management of your cluster as simple as possible, while retaining the power and flexibility that modern businesses require.

Using vSphere Distributed Switches requires a vSphere Enterprise Plus license.

The only change to the stack deployment process is the addition of an option to select whether you want to use vSphere Standard Switches or vSphere Distributed Switches. All other switch configuration and cabling prerequisites remain the same.

And that’s it! The rest of the stack setup procedure remains the same.

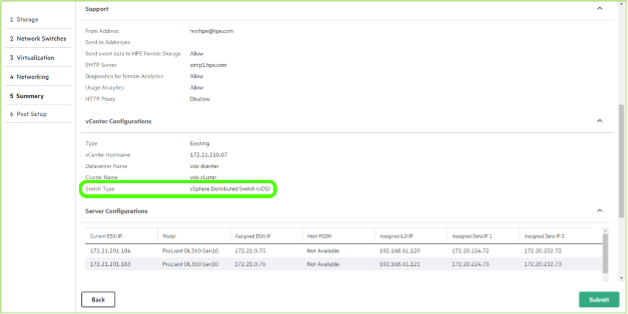

The stack setup summary page provides an overview of the configuration that will be applied, including the type of vSwitch that will be implemented.

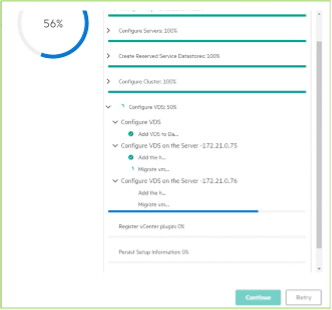

During stack setup, you can monitor VDS deployment progress as the vSphere Distributed Switch is configured and servers are migrated to the switch.

When the vSphere Distributed Switch option is selected in the Stack Setup Wizard, deployment proceeds as follows:

- Check for the presence of a valid vSphere Enterprise Plus or Evaluation license.

- Create three vSphere Standard Switches (vSwitch0, iSCSI1, and iSCSI2) and assign the relevant vmnics. This is the same workflow that is followed when the VSS option is selected in the Stack Setup Wizard.

- Add the hosts to vCenter.

- Create one vSphere Distributed Switch with three port groups and four uplinks:

  - Management network port group, using Uplink1 and Uplink2.

  - iSCSI-1 network port group, using Uplink3.

  - iSCSI-2 network port group, using Uplink4.

- Migrate the vmnics from the vSphere Standard Switches to the vSphere Distributed Switch.
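To make the order of operations concrete, here is a minimal Python sketch that simulates the steps above against an in-memory model. It is not HPE code and calls no vSphere API; `HostState`, `run_stack_setup`, and the license edition strings are hypothetical. It also assumes that vSwitch0 holds the first and third vmnics, an inference from the vmnic-to-uplink order described later in this post.

```python
from dataclasses import dataclass, field

# Hypothetical edition strings; the flow only says Enterprise Plus or Evaluation.
ALLOWED_EDITIONS = {"Enterprise Plus", "Evaluation"}

# One VDS with three port groups and four uplinks, per the flow above.
VDS_PORT_GROUPS = {
    "Management": ("Uplink1", "Uplink2"),
    "iSCSI-1": ("Uplink3",),
    "iSCSI-2": ("Uplink4",),
}


@dataclass
class HostState:
    """Hypothetical in-memory model of one ESXi host during stack setup."""
    name: str
    vss: dict = field(default_factory=dict)          # switch name -> vmnics
    in_vcenter: bool = False
    vds_uplinks: dict = field(default_factory=dict)  # vmnic -> VDS uplink


def run_stack_setup(edition: str, hosts: list, vmnics: dict) -> list:
    """Simulate the VDS deployment steps in order; return the completed steps.

    `vmnics` maps each host name to its four vmnics in discovery order.
    """
    if edition not in ALLOWED_EDITIONS:
        raise PermissionError(f"vDS needs Enterprise Plus or Evaluation, not {edition!r}")
    done = ["license check"]

    for h in hosts:
        # Assumption: vSwitch0 gets the 1st and 3rd vmnics (the management ports).
        n1, n2, n3, n4 = vmnics[h.name]
        h.vss = {"vSwitch0": [n1, n3], "iSCSI1": [n2], "iSCSI2": [n4]}
    done.append("create standard switches")

    for h in hosts:
        h.in_vcenter = True
    done.append("add hosts to vCenter")

    uplinks = sorted({u for ups in VDS_PORT_GROUPS.values() for u in ups})
    done.append(f"create distributed switch ({len(VDS_PORT_GROUPS)} port groups, "
                f"{len(uplinks)} uplinks)")

    for h in hosts:
        # Move vmnics off the standard switches, management ports first, so they
        # land on Uplink1/Uplink2 and the iSCSI ports on Uplink3/Uplink4.
        order = h.vss["vSwitch0"] + h.vss["iSCSI1"] + h.vss["iSCSI2"]
        h.vds_uplinks = dict(zip(order, uplinks))
        h.vss = {}
    done.append("migrate vmnics to distributed switch")
    return done
```

Because the license check runs first, an unsupported edition stops the simulated workflow before any switch is created, which mirrors the ordering in the list above.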

VDS implementation details

dHCI stack setup handles all of the deployment and configuration that the solution needs for management and iSCSI connectivity within vSphere. Stack setup configures the following components:

- One vSphere Distributed Switch

- One Uplink Port Group

- Four uplinks per host assigned to the Uplink Port Group

- One Distributed Port Group for management and VM traffic

- Two Distributed Port Groups for iSCSI data traffic

- One vmkernel port configured per Distributed Port Group per host

The dHCI deployment process automates the creation of these components, the assignment of vmkernel ports and uplinks, and the associations between them.
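Because stack setup creates a fixed set of objects, the expected inventory scales predictably with the number of hosts. The sketch below simply encodes the component list above; `VdsInventory` and `expected_inventory` are hypothetical names used for illustration, not an HPE tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VdsInventory:
    distributed_switches: int
    uplink_port_groups: int
    uplinks: int
    distributed_port_groups: int
    vmkernel_ports: int


def expected_inventory(num_hosts: int) -> VdsInventory:
    """Object counts implied by the component list above for `num_hosts` hosts."""
    if num_hosts < 1:
        raise ValueError("num_hosts must be positive")
    port_groups = 3  # one management/VM + two iSCSI distributed port groups
    return VdsInventory(
        distributed_switches=1,
        uplink_port_groups=1,
        uplinks=4 * num_hosts,                   # four uplinks per host
        distributed_port_groups=port_groups,
        vmkernel_ports=port_groups * num_hosts,  # one vmk per port group per host
    )
```

For a two-host cluster, this gives 8 uplinks and 6 vmkernel ports, while the switch and port group counts stay constant as hosts are added.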

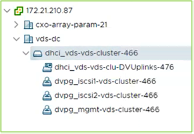

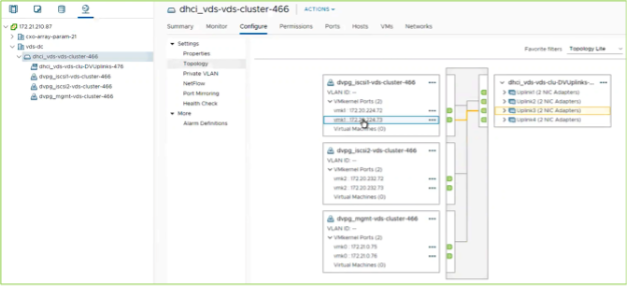

This screenshot shows the port groups created for a default dHCI VDS deployment. Each port group's name reflects its function. In this example:

- Uplink Port Group: dhci_vds-vds-clu-DVUplinks-476

- iSCSI 1 Distributed Port Group: dvpg_iscsi1-vds-cluster-466

- iSCSI 2 Distributed Port Group: dvpg_iscsi2-vds-cluster-466

- Management Distributed Port Group: dvpg_mgmt-vds-cluster-466
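The example names suggest that a port group's role can be read from its name. A minimal sketch, assuming the `dvpg_mgmt`, `dvpg_iscsi1`, and `dvpg_iscsi2` prefixes and the `-DVUplinks-<number>` suffix seen above hold in general (the cluster-specific middle part and numeric IDs vary); `port_group_role` is a hypothetical helper:

```python
import re

# Assumption: roles are encoded as in the example names above; the
# cluster-specific middle part and the numeric suffix vary per deployment.
ROLE_PATTERNS = [
    (re.compile(r"^dvpg_mgmt-"), "management"),
    (re.compile(r"^dvpg_iscsi1-"), "iscsi1"),
    (re.compile(r"^dvpg_iscsi2-"), "iscsi2"),
    (re.compile(r"-DVUplinks-\d+$"), "uplinks"),
]


def port_group_role(name: str) -> str:
    """Return the role suggested by a port group name, or 'unknown'."""
    for pattern, role in ROLE_PATTERNS:
        if pattern.search(name):
            return role
    return "unknown"


for pg in ["dhci_vds-vds-clu-DVUplinks-476", "dvpg_iscsi1-vds-cluster-466",
           "dvpg_iscsi2-vds-cluster-466", "dvpg_mgmt-vds-cluster-466"]:
    print(f"{pg}: {port_group_role(pg)}")
```

Names that match none of the patterns, such as port groups you create yourself for VM traffic, fall through to `unknown`.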

The VDS topology view displays the mapping of vmkernel ports to uplinks. In this example, the dHCI cluster has two ESXi servers, so each distributed port group contains two vmkernel ports, one per host.

The default vmkernel-to-uplink mapping configured by dHCI stack setup is:

- vmk0 -> Uplink1 and Uplink2 (management)

- vmk1 -> Uplink3 (iSCSI 1 network)

- vmk2 -> Uplink4 (iSCSI 2 network)

The order in which the physical vmnic ports are assigned to the uplinks is:

- vmnic#1 -> Uplink1

- vmnic#2 -> Uplink3

- vmnic#3 -> Uplink2

- vmnic#4 -> Uplink4

Note that the vmnic numbers are for illustration only; the actual numbers depend on the ports present on the compute nodes. dHCI stack setup uses the first four 10Gb ports it discovers.
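The two mapping tables and the "first four 10Gb ports" rule can be combined into a short sketch. It assumes the discovered ports arrive as a list in discovery order with a known link speed; `Nic`, `assign_uplinks`, and `vmnics_for_vmk` are hypothetical names, not part of dHCI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    name: str
    speed_gbps: int


# Staggered order described above: 1st port -> Uplink1, 2nd -> Uplink3,
# 3rd -> Uplink2, 4th -> Uplink4.
UPLINK_ORDER = ["Uplink1", "Uplink3", "Uplink2", "Uplink4"]

# Default vmkernel-to-uplink mapping described above.
VMK_UPLINKS = {
    "vmk0": ("Uplink1", "Uplink2"),  # management
    "vmk1": ("Uplink3",),            # iSCSI 1
    "vmk2": ("Uplink4",),            # iSCSI 2
}


def assign_uplinks(discovered: list) -> dict:
    """Map the first four 10Gb ports, in discovery order, to VDS uplinks."""
    ten_gig = [n for n in discovered if n.speed_gbps == 10]
    if len(ten_gig) < 4:
        raise ValueError(f"need four 10Gb ports, found {len(ten_gig)}")
    return {nic.name: uplink for nic, uplink in zip(ten_gig[:4], UPLINK_ORDER)}


def vmnics_for_vmk(vmk: str, uplink_map: dict) -> list:
    """List the physical vmnics that back a given vmkernel port."""
    wanted = set(VMK_UPLINKS[vmk])
    return [nic for nic, uplink in uplink_map.items() if uplink in wanted]


ports = [Nic("vmnic0", 1), Nic("vmnic1", 1), Nic("vmnic2", 10),
         Nic("vmnic3", 10), Nic("vmnic4", 10), Nic("vmnic5", 10)]
mapping = assign_uplinks(ports)
print(mapping)
print(vmnics_for_vmk("vmk0", mapping))
```

Here the two 1Gb ports are skipped, so vmnic2 through vmnic5 play the roles of vmnic#1 through vmnic#4, and the management vmkernel port ends up backed by vmnic2 and vmnic4.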

Make sure the compute nodes are cabled correctly, so that each server port connects to a switch port that is configured for its role. Ports 1 and 3 are assigned as the management interfaces, while ports 2 and 4 carry the iSCSI 1 and iSCSI 2 networks, respectively. dHCI staggers these role assignments so that management and iSCSI traffic are spread across network interface cards, which improves network resiliency. For more information on cabling and port assignments, refer to the HPE Storage dHCI and VMware vSphere New Servers Deployment Guide. For more information on network requirements, refer to the HPE Nimble Storage dHCI Network Considerations Guide.
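To see why the staggered roles matter, consider a server whose four ports sit on two network cards. The sketch below assumes ports 1 and 2 are on one card and ports 3 and 4 on the other (a hypothetical layout; check your own hardware) and tests whether losing any single card still leaves both a management path and an iSCSI path. `survives_single_card_failure` is a hypothetical helper.

```python
# Roles by port position, as stated above.
PORT_ROLES = {1: "management", 2: "iscsi1", 3: "management", 4: "iscsi2"}


def survives_single_card_failure(port_to_card: dict) -> bool:
    """True if losing any one card still leaves a management and an iSCSI port.

    `port_to_card` maps port position (1-4) to a hypothetical card label.
    """
    for failed in set(port_to_card.values()):
        remaining = {PORT_ROLES[p] for p, card in port_to_card.items() if card != failed}
        if "management" not in remaining or not remaining & {"iscsi1", "iscsi2"}:
            return False
    return True


# Assumed layout: ports 1 and 2 on card A, ports 3 and 4 on card B.
print(survives_single_card_failure({1: "A", 2: "A", 3: "B", 4: "B"}))
# Counter-example: both management ports on the same card.
print(survives_single_card_failure({1: "A", 3: "A", 2: "B", 4: "B"}))
```

With the staggered assignment, either card can fail and each role keeps at least one port. If ports 1 and 3 were on the same card, losing that card would remove both management ports.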

When you scale out the compute cluster, any new servers you add are automatically configured on the vSphere Distributed Switch.

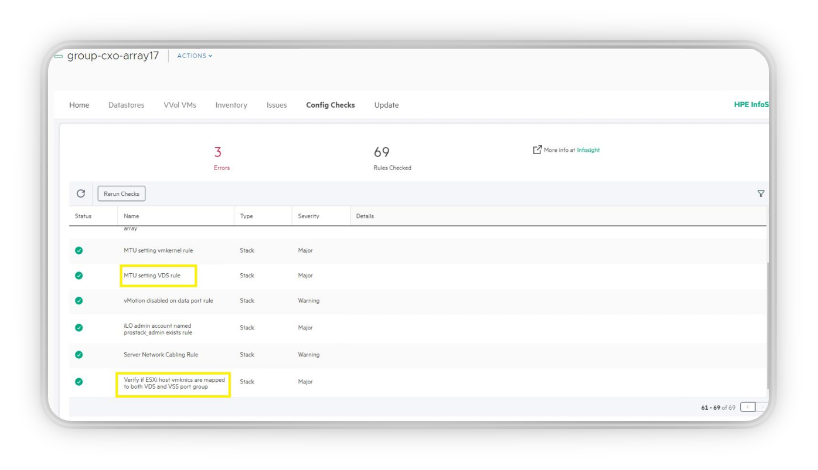

The vDS implementation is accompanied by new Configuration Check rules. These checks run once per day to verify that the vDS is properly configured and healthy. They provide a convenient way to assess the health of the dHCI stack as a whole, without examining multiple separate interfaces and locations for potential issues.

- MtuSettingVdsRule - Checks whether the MTU setting of the vDS on each host matches the MTU of the subnets on the array.

- VmknicToPortgroupMappingRule - Checks whether vmknics on an ESXi host are mapped to both a vDS port group and a VSS port group.

- VdsNetworkRule - Checks whether the same vDS port groups exist on all ESXi hosts in the cluster.
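The three checks can be sketched as plain functions over a per-host snapshot. This illustrates the rule descriptions only: the `HostNet` fields, the array subnet MTU input, the MTU values, and the function names are assumptions, and the mapping rule is read here as flagging a vmknic reported on both a vDS and a VSS port group (for example, a leftover from migration). Each function returns a list of findings; an empty list means the check passes.

```python
from dataclasses import dataclass, field


@dataclass
class HostNet:
    """Hypothetical per-host network snapshot used by the checks below."""
    name: str
    vds_mtu: int
    vds_port_groups: set = field(default_factory=set)
    vmk_on_vds: set = field(default_factory=set)  # vmknics on vDS port groups
    vmk_on_vss: set = field(default_factory=set)  # vmknics on VSS port groups


def mtu_setting_vds(hosts: list, array_subnet_mtu: dict) -> list:
    """In the spirit of MtuSettingVdsRule: vDS MTU must match each array subnet."""
    return [f"{h.name}: vDS MTU {h.vds_mtu} != {subnet} MTU {mtu}"
            for h in hosts for subnet, mtu in sorted(array_subnet_mtu.items())
            if h.vds_mtu != mtu]


def vmknic_to_portgroup_mapping(hosts: list) -> list:
    """In the spirit of VmknicToPortgroupMappingRule: flag vmknics seen on both."""
    return [f"{h.name}: {vmk} on both vDS and VSS"
            for h in hosts for vmk in sorted(h.vmk_on_vds & h.vmk_on_vss)]


def vds_network(hosts: list) -> list:
    """In the spirit of VdsNetworkRule: each vDS port group must exist on every host."""
    all_groups = set().union(*(h.vds_port_groups for h in hosts))
    return [f"{h.name}: missing {pg}"
            for h in hosts for pg in sorted(all_groups - h.vds_port_groups)]


# Hypothetical snapshot: esx2 has a mismatched MTU, a leftover VSS vmknic,
# and is missing one port group.
hosts = [
    HostNet("esx1", 9000, {"mgmt", "iscsi1", "iscsi2"}, {"vmk0", "vmk1", "vmk2"}),
    HostNet("esx2", 1500, {"mgmt", "iscsi1"}, {"vmk0", "vmk1"}, {"vmk1"}),
]
for findings in (mtu_setting_vds(hosts, {"iscsi1": 9000, "iscsi2": 9000}),
                 vmknic_to_portgroup_mapping(hosts), vds_network(hosts)):
    print(findings or "ok")
```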

Simplify now

With support and automation for dHCI deployment on vSphere Distributed Switches, HPE continues to expand configuration and management automation within dHCI. Implementing the appropriate virtual switch technology for your environment is now as simple as selecting an option during setup.

Meet Storage Experts blogger Daniel Elder


Daniel is a Senior Technical Marketing Engineer specializing in HPE Nimble Storage dHCI.

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
