NimbleOS 5.1 - Intro to Peer Persistence (Part 1)

Finally, after so many years of waiting, “Peer Persistence for Nimble Arrays”, aka synchronous replication with automatic failover, has arrived for Nimble Storage.

This functionality is in high demand throughout Europe, where sites are typically a short distance apart and links are relatively inexpensive. It's exciting to see this new feature launched, and it is complimentary for existing Nimble customers as part of Timeless Storage.

One thing to note is that this is not a port of the popular 3PAR feature of the same name. It is a Nimble-designed and implemented technology that Engineering has been working on for a few years. However, the outcomes from an application failover and resiliency perspective are similar between the two products, hence the shared name.

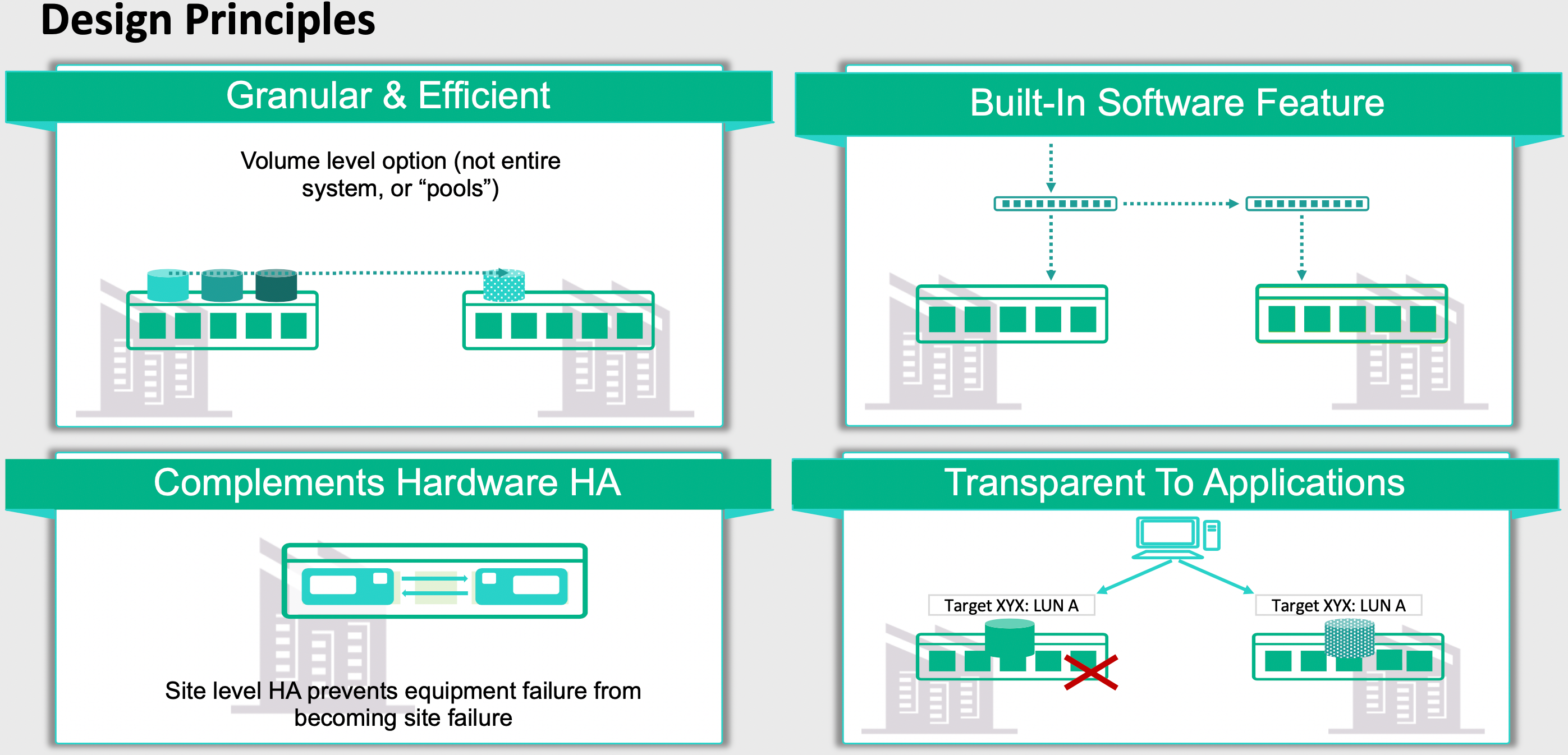

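The concepts behind Peer Persistence on Nimble are as follows:

- Granular & efficient

- Built-in software feature

- Complements the Hardware High Availability

- Transparent to Applications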

Let's go over each of these in a bit more detail:

Granular & efficient

You can select synchronous replication on either side on a per-volume basis. You are not obliged to enable it for the complete array or for a complete pool of storage. It is configured using Volume Collections, much as snap-replication has been configured historically.

Built-in software feature

You don’t need any additional hardware to run Peer Persistence. As with all HPE Nimble Storage functionality, you can enable this feature at no additional cost: just upgrade to NimbleOS 5.1 as part of your Timeless Storage support contract.

Complements the Hardware High Availability

Every Nimble Storage array remains fully redundant, meaning dual controllers running HA at all times with 100% performance protection and guaranteed 99.9999% availability as standard. Peer Persistence gives you an additional layer of protection on top of that by mirroring your data to another fully redundant Nimble Storage array at the second site.
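To put the 99.9999% figure into perspective, here is a small arithmetic sketch that converts an availability percentage into the downtime it allows per year. The function name is made up for illustration, and the calculation assumes a 365-day year.

```python
# Convert an availability percentage into the downtime it allows per year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds, assuming a non-leap year


def allowed_downtime_seconds(availability_percent: float) -> float:
    """Return the maximum downtime per year, in seconds (illustrative helper)."""
    unavailable_fraction = (100 - availability_percent) / 100
    return SECONDS_PER_YEAR * unavailable_fraction


# Six nines leaves roughly half a minute of downtime per year.
print(round(allowed_downtime_seconds(99.9999), 1))  # prints 31.5
```

In other words, six nines on a single array already allows only about 31.5 seconds of downtime a year. Peer Persistence addresses a different risk: the loss of an entire array or site.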

Transparent to Applications

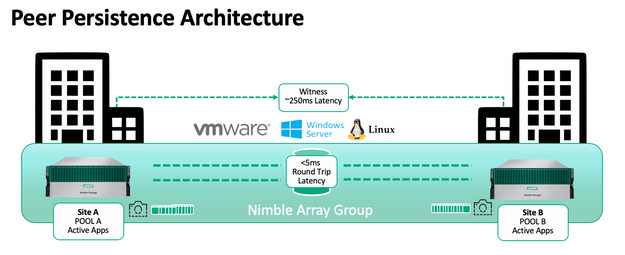

Thanks to a witness, which is best installed at a third location, applications can fail over transparently to the downstream site if the primary site becomes unavailable.
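To illustrate why the witness matters, here is a generic tie-breaker sketch of the kind commonly used in two-site designs. It is not NimbleOS's actual algorithm: the function name, its inputs and the three outcomes are all assumptions made for illustration.

```python
def decide(sees_peer: bool, sees_witness: bool, witness_grants_lock: bool) -> str:
    """Generic two-site tie-breaker (hypothetical, not the NimbleOS implementation).

    Returns what a site should do given what it can currently reach.
    """
    if sees_peer:
        # The replication link is healthy, so no arbitration is needed.
        return "continue"
    if sees_witness and witness_grants_lock:
        # The peer is unreachable and the witness confirms this site may carry on alone.
        return "serve_alone"
    # Isolated, or the witness picked the other site: stop serving to avoid split-brain.
    return "stand_down"


print(decide(sees_peer=False, sees_witness=True, witness_grants_lock=True))
```

The point of the third location is visible in the last branch: a site that can reach neither its peer nor the witness cannot tell a peer failure from its own isolation, so the safe choice is to stop serving I/O.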

The big difference between synchronous replication and snap-replication is that synchronous replication uses our Scale Out technology, so we now have a single Nimble array group consisting of two arrays. Those arrays are separated by the concept of 'pools', and synchronous replication is performed between those two pools.

What are the pre-requisites?

- In the first release, Peer Persistence can only be configured between arrays of the same model. This means an AF40 can replicate to another AF40 but not to an HF40, as one is All Flash and the other is Hybrid. Capacity and interface cards may differ between the two systems, but the type and model of the arrays must be the same.

- Peer Persistence is supported on the current 'Gen5' and previous generation of Nimble hardware, including:

  - AFx0 arrays and HFx0 arrays, including the "C" non-dedupe models.

  - AFx000 arrays and CSx000 arrays.

  NOTE: The HF20H and CS1000H are not supported for synchronous replication - even if fully populated with both halves of drives.

- To provide maximum protection, redundant links for Replication and Client Access are required. A minimum of 2 x 10Gb Ethernet links per controller is required for Replication traffic.

- Both FC and iSCSI attached hosts are supported with Peer Persistence; however, replication runs over IP. We also do not support mixing protocols for a single volume across sites.

- Group, Synchronous Replication, Management, and Data access traffic require the same network subnet to be available to all arrays in the group. These ports must be able to communicate at Layer 2 (the data link layer).

- Latency should remain below 5 ms round-trip between arrays in the group on all synchronous replication links. Replication traffic is classified as “Group traffic” within the scale-out group.

- If using Automatic Switch Over (ASO) for application-transparent failover, the witness client needs to be located in a third failure domain. Look for this to be offered as a service using a cloud closer to HPE's heart in the future...

- NimbleOS 5.1 comes with updated host-side Nimble Toolkits, and these should be installed and working correctly on VMware, Windows and/or Linux hosts.
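Several of the prerequisites above are simple enough to express as a pre-flight check. The following is a minimal sketch, not a NimbleOS tool or API: `ArrayInfo`, `check_pair` and their fields are hypothetical names, and only the thresholds (same model, the two unsupported models, 2 x 10Gb links per controller, same subnet, latency below 5 ms) are taken from the list above.

```python
from dataclasses import dataclass
from ipaddress import ip_interface
from typing import List

UNSUPPORTED_MODELS = {"HF20H", "CS1000H"}  # models called out in the NOTE above
MAX_RTT_MS = 5.0                           # round-trip latency must stay below this
MIN_REPL_LINKS_PER_CONTROLLER = 2          # 2 x 10Gb Ethernet links per controller


@dataclass
class ArrayInfo:
    """Hypothetical inventory record, not a NimbleOS API object."""
    name: str
    model: str
    repl_links_per_controller: int
    data_ip: str  # CIDR notation, e.g. "10.0.0.11/24"


def check_pair(a: ArrayInfo, b: ArrayInfo, rtt_ms: float) -> List[str]:
    """Return a list of prerequisite violations (empty if none were found)."""
    problems = []
    if a.model != b.model:
        problems.append(f"model mismatch: {a.model} vs {b.model}")
    for arr in (a, b):
        if arr.model in UNSUPPORTED_MODELS:
            problems.append(f"{arr.name}: {arr.model} is not supported")
        if arr.repl_links_per_controller < MIN_REPL_LINKS_PER_CONTROLLER:
            problems.append(
                f"{arr.name}: needs at least {MIN_REPL_LINKS_PER_CONTROLLER} "
                "replication links per controller"
            )
    # Both arrays must sit in the same subnet.
    if ip_interface(a.data_ip).network != ip_interface(b.data_ip).network:
        problems.append("arrays are not on the same subnet")
    if rtt_ms >= MAX_RTT_MS:
        problems.append(f"round-trip latency {rtt_ms} ms is not below {MAX_RTT_MS} ms")
    return problems


site_a = ArrayInfo("site-a", "AF40", 2, "10.0.0.11/24")
site_b = ArrayInfo("site-b", "HF40", 2, "10.0.0.12/24")
print(check_pair(site_a, site_b, rtt_ms=1.2))
```

With the example values, the only problem reported is the AF40 versus HF40 model mismatch. The witness placement and host toolkit requirements are not covered by this sketch.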

In the next blog in this series, we'll cover how to configure Peer Persistence between Nimble arrays in a bit more detail. Until then, stay safe and Be Nimble.

twitter: @nick_dyer_
