NimbleOS 5.1 - iSCSI & FC MultiProtocol Access

Historically, a Nimble array could be configured for either iSCSI or Fibre Channel, but not both, and the system didn't let you choose which protocol to use. During initial installation, if the array detected Fibre Channel cards, it defaulted to the FC protocol. If no FC cards were detected, iSCSI was the only protocol available. It was also not possible to switch protocols on a Nimble array.

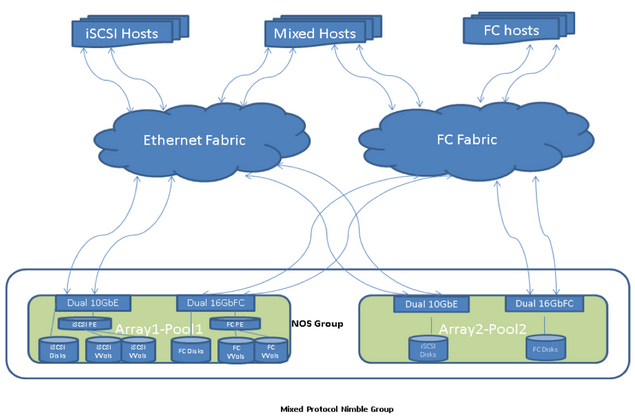

Nimble OS 5.1 solves this issue, as we now support provisioning iSCSI and FC protocols on the same array.

If you wish to connect iSCSI hosts and FC hosts to the same Nimble array and use both protocols at the same time, you can do that with Nimble OS 5.1. It delivers more flexible hardware options, and customers can now switch between protocols much more easily.

There are some conditions to be met, of course.

Mixed protocol is only supported on homogeneous groups, meaning that all arrays in the group need to have similar hardware capabilities. In a single-array configuration this isn't a problem, but if you are scaling out with multiple arrays within a group, it is something to consider. The protocol setting always applies to the entire group and cannot be set differently per pool. You can't disable both protocols on the group, obviously.
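To make the group-level rule concrete, here is a minimal Python sketch. The `GroupProtocols` class and its field names are hypothetical and exist only for illustration; they are not part of any Nimble tooling. It models a single group-wide setting and rejects the one combination the array does not allow, which is both protocols disabled.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class GroupProtocols:
    """Hypothetical stand-in for the group-wide protocol setting."""

    iscsi_enabled: bool
    fc_enabled: bool

    def __post_init__(self) -> None:
        # The setting applies to the whole group, and one protocol must stay on.
        if not (self.iscsi_enabled or self.fc_enabled):
            raise ValueError("at least one protocol must stay enabled on the group")

    def enabled(self) -> List[str]:
        """Return the names of the protocols that are switched on."""
        names = []
        if self.iscsi_enabled:
            names.append("iscsi")
        if self.fc_enabled:
            names.append("fc")
        return names
```

There is deliberately no per-pool field: the sketch has one setting per group, mirroring the restriction described above.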

A volume can only be presented using a single protocol, so it can be accessed using iSCSI or FC, never both at the same time. The volume ACLs are assigned to either an iSCSI initiator group or an FC initiator group.
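The single-protocol rule for a volume can be sketched the same way. The `InitiatorGroup` and `Volume` classes below are hypothetical illustrations, not a real API: a volume has no protocol of its own and simply takes on the protocol of the initiator groups in its ACL, so an initiator group of the other protocol is refused.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class InitiatorGroup:
    """Hypothetical initiator group; protocol is "iscsi" or "fc"."""

    name: str
    protocol: str


@dataclass
class Volume:
    """Hypothetical volume whose access protocol comes from its ACL."""

    name: str
    acl: List[InitiatorGroup] = field(default_factory=list)

    @property
    def protocol(self) -> Optional[str]:
        # A volume with no ACL entries is not tied to any protocol yet.
        return self.acl[0].protocol if self.acl else None

    def add_acl(self, igroup: InitiatorGroup) -> None:
        # Refuse to mix iSCSI and FC initiator groups on one volume.
        if self.acl and self.protocol != igroup.protocol:
            raise ValueError(
                f"{self.name} is already presented over {self.protocol}"
            )
        self.acl.append(igroup)
```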

Initial setup is no different from what it is today.

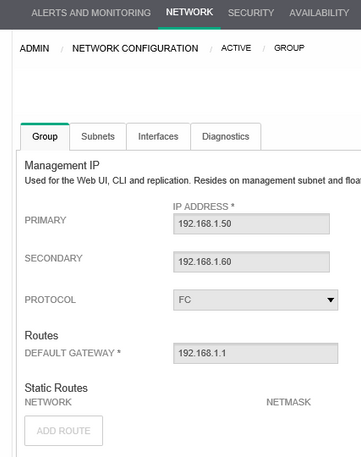

If the array detects FC cards on initial boot, then FC will be the default protocol chosen by the installer software. This can then be changed using "Administration > Network > Configure Active Settings". You will see the following view:

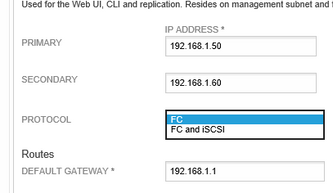

Now you can choose the protocol you want to use. In this example, we can add iSCSI as an additional protocol.

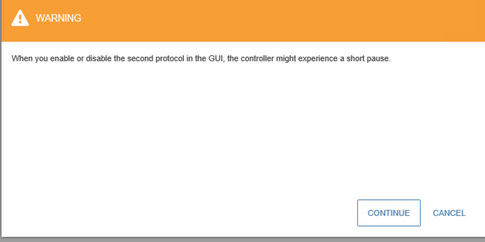

When you change this setting, you will see the following warning. Don't worry, it's only a temporary IO pause whilst the second protocol stack is initialized.

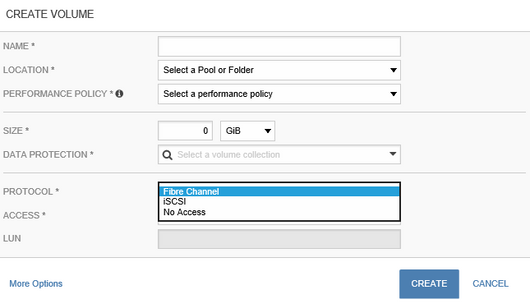

When provisioning new volumes, you now have the choice between FC or iSCSI (but not both).

The rest is business as usual, no surprises there.

These changes can also be made using the CLI:

"group --edit --scsi_enabled {yes|no} | --fc_enabled {yes|no}"

"group --info"

It's also possible to change the protocol for an existing volume. Volumes and volume collections are in fact protocol agnostic. The access protocol is tied only to the initiator group.

So what needs to be done if you want to change the protocol on an existing volume? Well, as you may expect, it is a disruptive process.

1. Disconnect the volume from the host and remove all ACLs on the volume.

2. Add volume ACLs for an initiator group that uses the new protocol.

3. Rescan storage manually on hosts that need it, to avoid host errors from connecting to unavailable targets.
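The three steps above can be sketched as a small Python function. This is only an in-memory illustration under stated assumptions: `change_volume_protocol`, the dictionaries it takes, and the step descriptions it returns are all hypothetical, and nothing here talks to a real array or host.

```python
from typing import Dict, List


def change_volume_protocol(
    volume_acls: Dict[str, List[str]],
    igroup_protocols: Dict[str, str],
    volume: str,
    new_igroup: str,
) -> List[str]:
    """Move a volume to a new initiator group and return the ordered steps.

    volume_acls maps volume name -> initiator group names (mutated in place).
    igroup_protocols maps initiator group name -> "iscsi" or "fc".
    """
    if new_igroup not in igroup_protocols:
        raise KeyError(f"unknown initiator group: {new_igroup}")

    # Step 1: disconnect the hosts, then remove every existing ACL.
    steps = [f"disconnect {volume} from its hosts"]
    for igroup in volume_acls.get(volume, []):
        steps.append(f"remove ACL {igroup} ({igroup_protocols[igroup]})")
    volume_acls[volume] = []

    # Step 2: grant access to the initiator group that uses the new protocol.
    volume_acls[volume].append(new_igroup)
    steps.append(f"add ACL {new_igroup} ({igroup_protocols[new_igroup]})")

    # Step 3: some hosts need a manual rescan to drop the stale targets.
    steps.append("rescan storage on the hosts")
    return steps
```

For example, calling it with `{"vol1": ["esx-fc"]}` as the ACL map and `"esx-iscsi"` as the new initiator group leaves `vol1` with only the iSCSI initiator group in its ACL. The volume itself is never modified, which reflects the point that the protocol is tied to the initiator group rather than to the volume.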

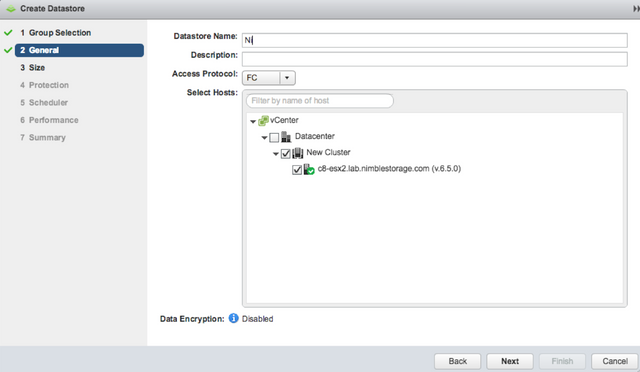

And finally, we also adapted the new vCenter Plugin to support this functionality: when you create a datastore, you can choose which protocol it uses.

Thanks for taking the time to read this blog. If you have any questions, please don't hesitate to ask them in the comments section below.

Enjoy the new Nimble OS 5.1!

Written by Walter van Hoolst, Nimble Storage SME, Belgium

twitter: @nick_dyer_
