
NimbleOS 5.2: Fan-Out Replication to Enhance Your DR & Backup Plans with HPE Nimble Storage

As part of the NimbleOS 5.2 blog series, this technical article details the enhancements we've made to our periodic replication.

HPE Nimble Storage arrays have had the ability to replicate datasets periodically using our incredibly granular snapshot functionality since the very early days of NimbleOS 1.3 – back in 2011.

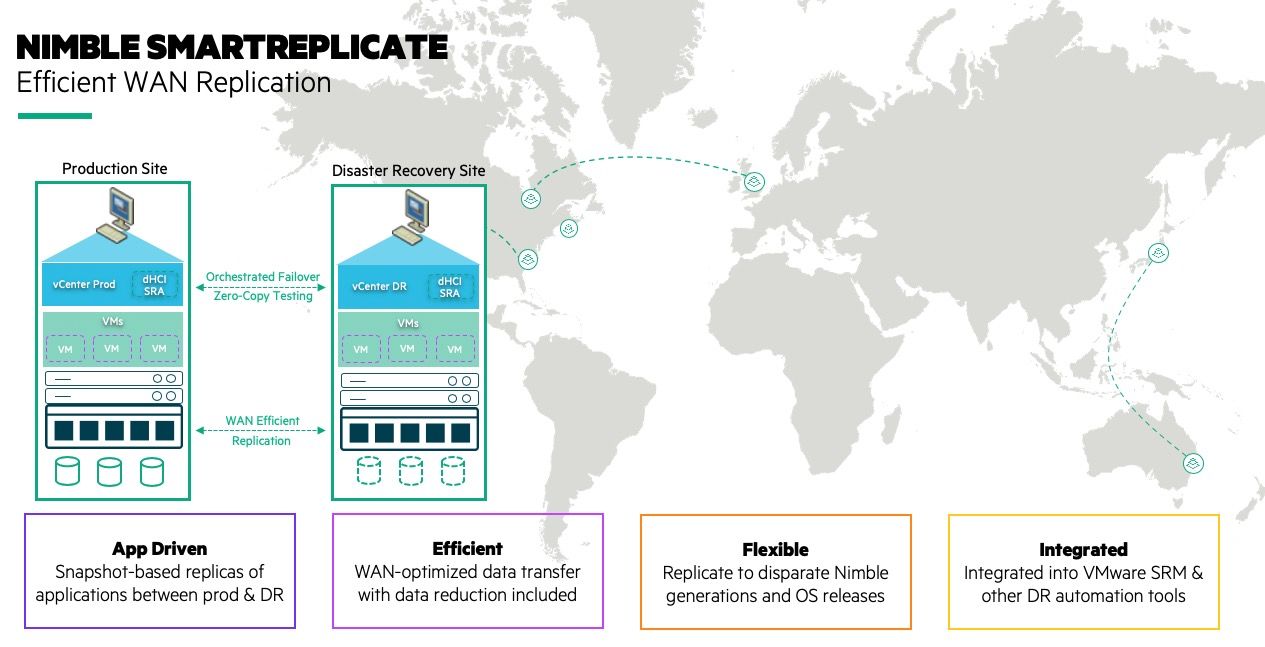

Only compressed delta blocks are sent (and those are variable-sized blocks rather than lumpy fixed blocks), over patented WAN-efficient data streams on the designated Nimble replication ports. This winning combination has given tens of thousands of customers the tools to keep ultra-efficient copies of their datasets, with deeper retention than traditional approaches, in datacentres around the world.
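To make the "send only compressed changed blocks" idea concrete, here is a minimal conceptual sketch in Python. It is not NimbleOS's implementation or wire format: snapshots are modelled as hypothetical dictionaries mapping a block offset to its bytes, blocks are compared directly (a real array tracks changes rather than diffing content), deletions are ignored, and the standard-library `zlib` module stands in for whatever compression the array actually uses.

```python
import zlib
from typing import Dict

# Hypothetical model: a snapshot maps block offset -> block contents.
# Values may differ in length, loosely mirroring variable-sized blocks.
Snapshot = Dict[int, bytes]


def delta_payload(previous: Snapshot, current: Snapshot) -> Dict[int, bytes]:
    """Return only the blocks that are new or changed since `previous`, compressed."""
    payload: Dict[int, bytes] = {}
    for offset, data in current.items():
        if previous.get(offset) != data:
            payload[offset] = zlib.compress(data)
    # Deleted blocks are not handled, for brevity.
    return payload


def apply_payload(replica: Snapshot, payload: Dict[int, bytes]) -> Snapshot:
    """Rebuild the newer snapshot on the downstream side from the older replica."""
    updated = dict(replica)
    for offset, compressed in payload.items():
        updated[offset] = zlib.decompress(compressed)
    return updated
```

Only the changed block crosses the "WAN" here, and it travels compressed; the downstream side reconstructs the full new snapshot from its existing copy plus the delta.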

Dubbed SmartReplicate, this technology has given customers a consistent basis to reduce their reliance on cumbersome, heavy backup policies and move towards a flash-accelerated restore process for both DR and backup/recovery. For the latter, our partners at Veeam, Commvault, ArcServe and, most recently, Cohesity have built slick integrations into Nimble platforms, so our mutual customers benefit from lightning-quick backups, recovery, data validation, ransomware protection and UAT/test copies.

Further driving the efficiency agenda, SmartReplicate allows customers to replicate across various generations of Nimble array, across any type of disk media, with pretty much any NimbleOS release that's in support! This means customers have been able to mix all-flash and hybrid-flash platforms for their primary and DR sites without trading off data services or performance, whilst at the same time driving down costs.

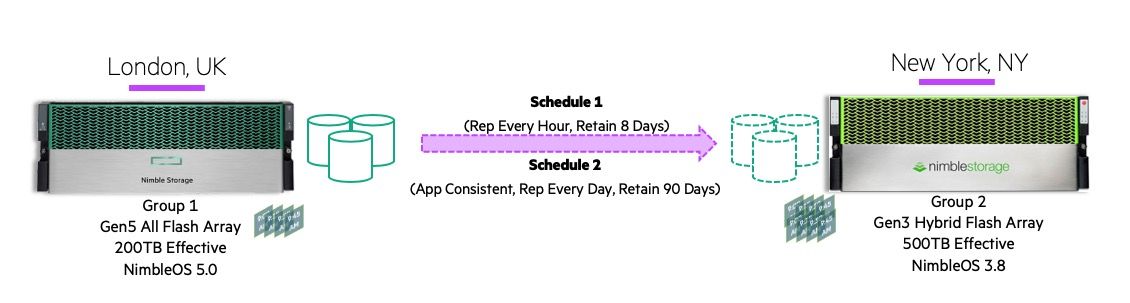

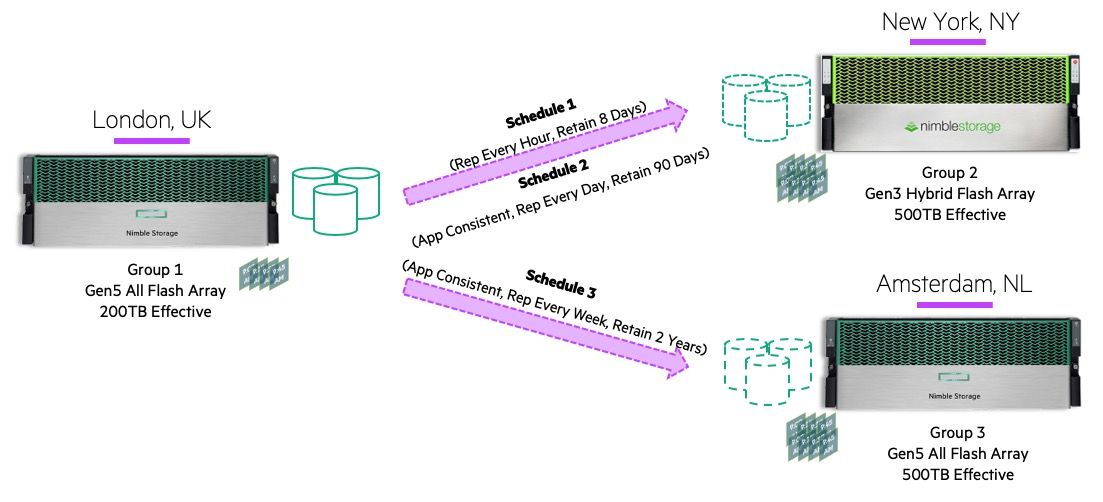

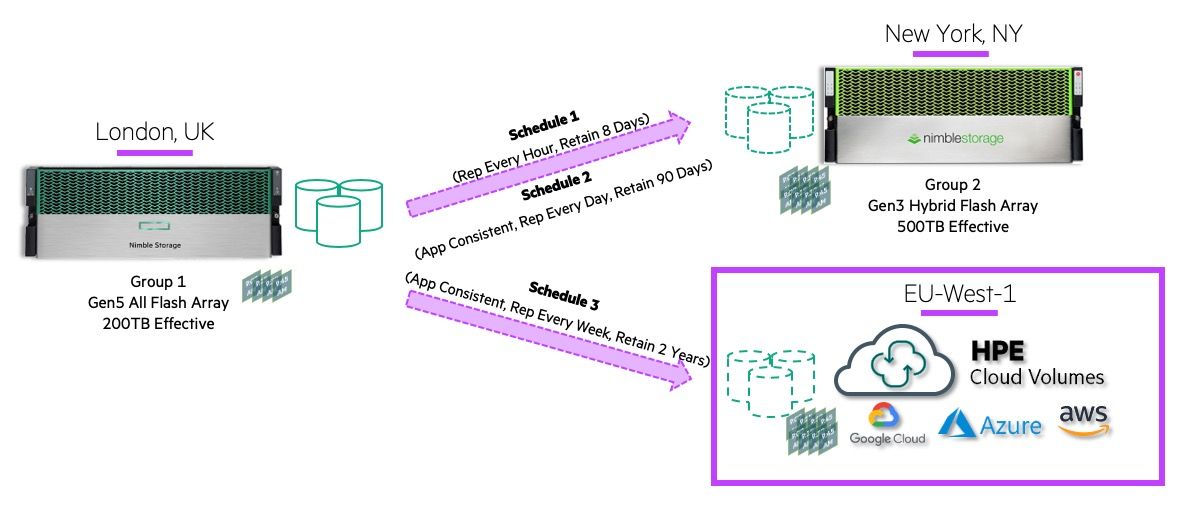

Take my example here: I have a new AFA Gen5 Nimble array replicating data for DR and long-term backups to a Gen3 Hybrid Flash array in New York. This has always been entirely possible with native NimbleOS snapshots. What's more, those snapshots can be VM- and application-consistent for vCenter, Hyper-V, SQL, Exchange, Oracle and other apps you may want to protect consistently.

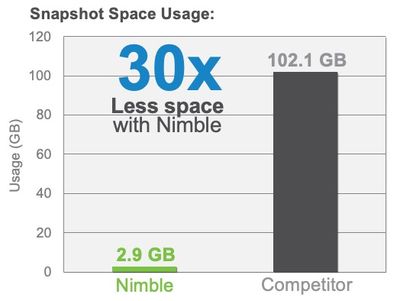

We often see huge data efficiency savings versus competitors performing the same function. Here is one example where Nimble achieved a 30x space saving compared to a competitor when taking daily snapshots and replicating them with a 10-day retention policy for Microsoft Exchange.

However, our customers told us they wanted periodic replication to protect the SAME volume across multiple failure domains. Nimble has always been able to replicate data fan-in/fan-out at the array level, but a single volume could only have a single source and a single destination. In other words, a volume could only be replicated to one other place.

Fan-Out Periodic Replication - New in NimbleOS 5.2

In NimbleOS 5.2, we’re excited to enhance this functionality to allow true fan-out replication of volumes to two disparate Nimble arrays, or even to HPE Cloud Volumes if you wish to send a copy of your data to the Public Cloud.

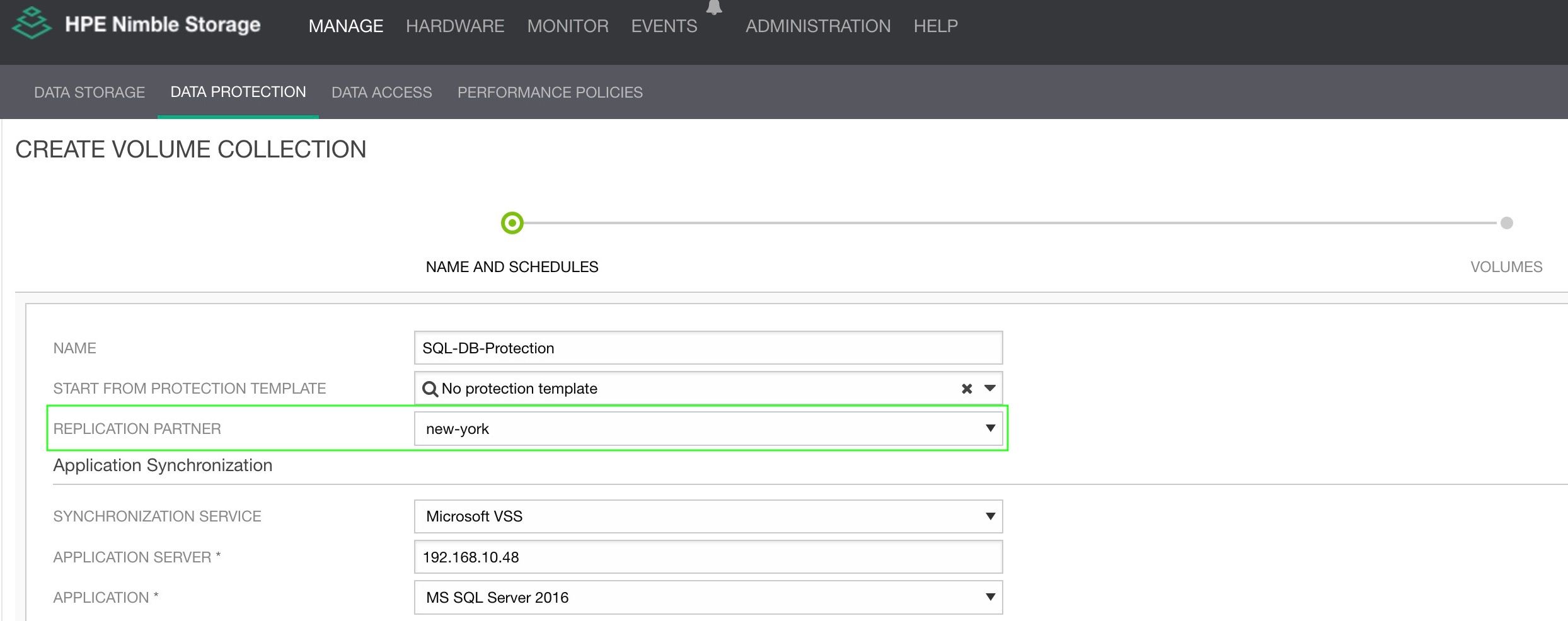

Let’s take a look at this in the array UI.

In my example I now have three sites: London, New York and Amsterdam. As described above, London is an All Flash platform, while New York and Amsterdam (my new site) are Hybrid Flash platforms. All three are production sites hosting active data.

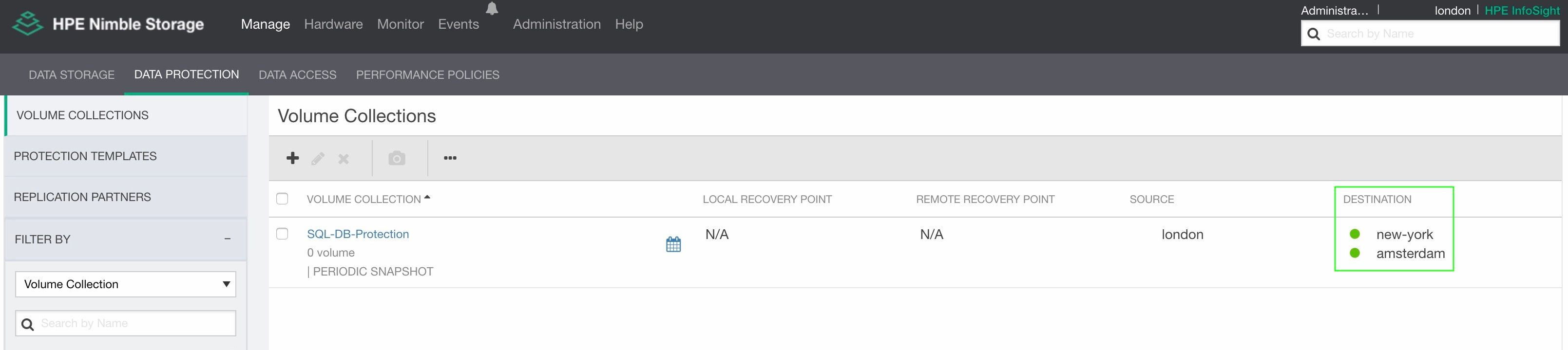

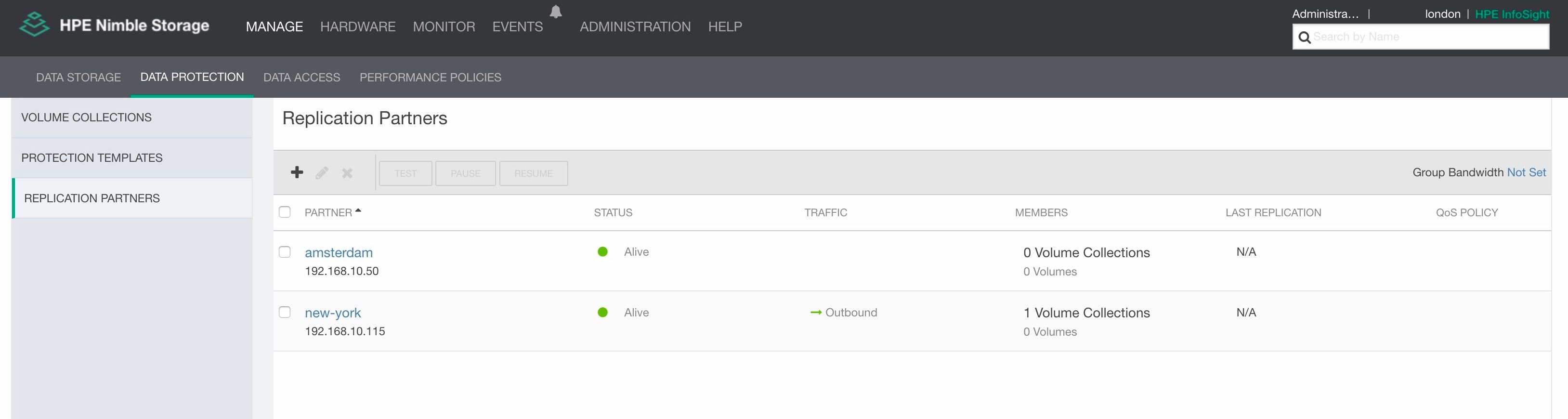

In releases prior to NimbleOS 5.1, it was already possible to create multiple replication partners on an array. As you can see here, I have replication partnerships defined from London (the array I’m on) to both Amsterdam and New York.

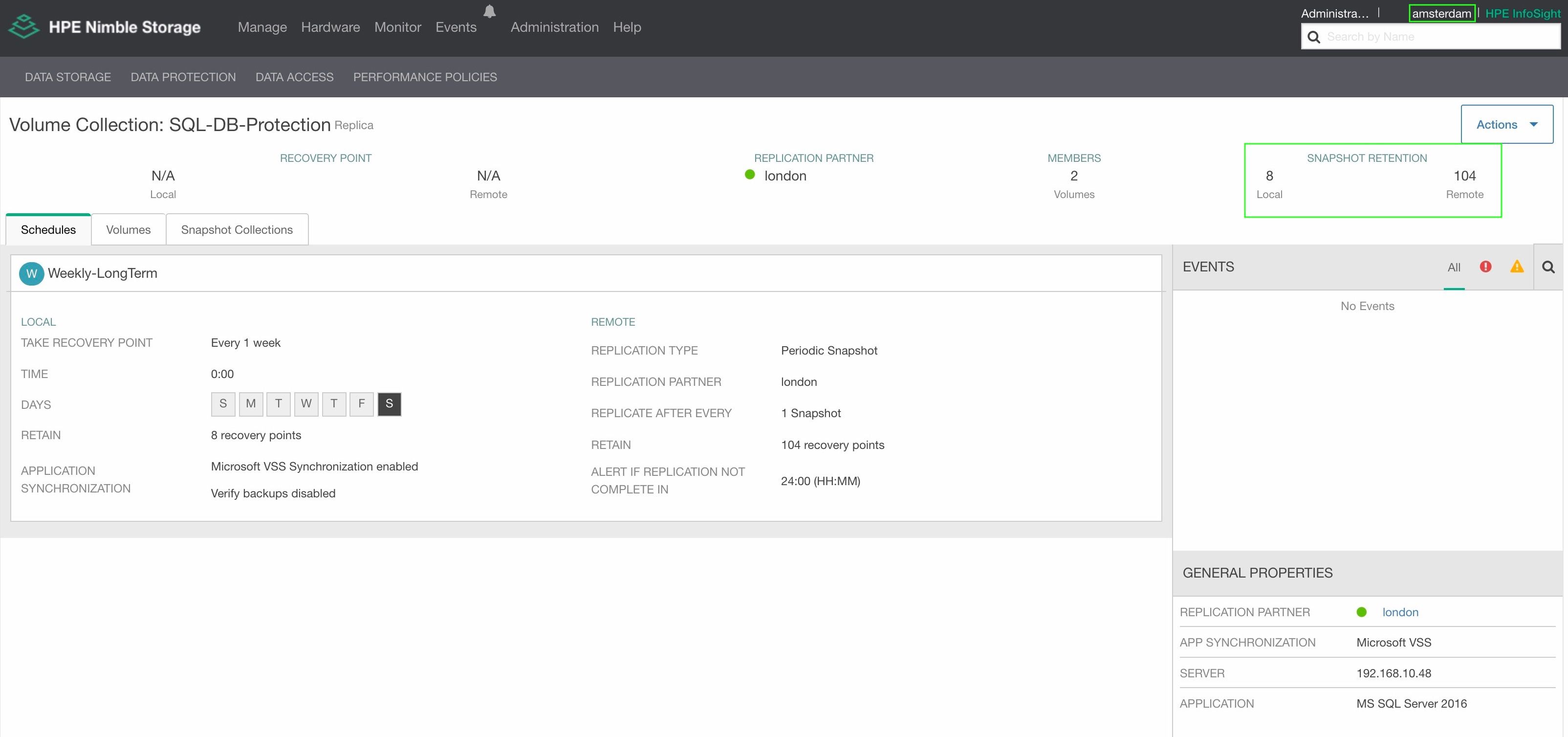

When inspecting the defined Volume Collection (Nimble’s term for what you may know as a Consistency Group), its settings could only target a single downstream array. As highlighted here, the replication partner is defined at the global level of the volume collection.

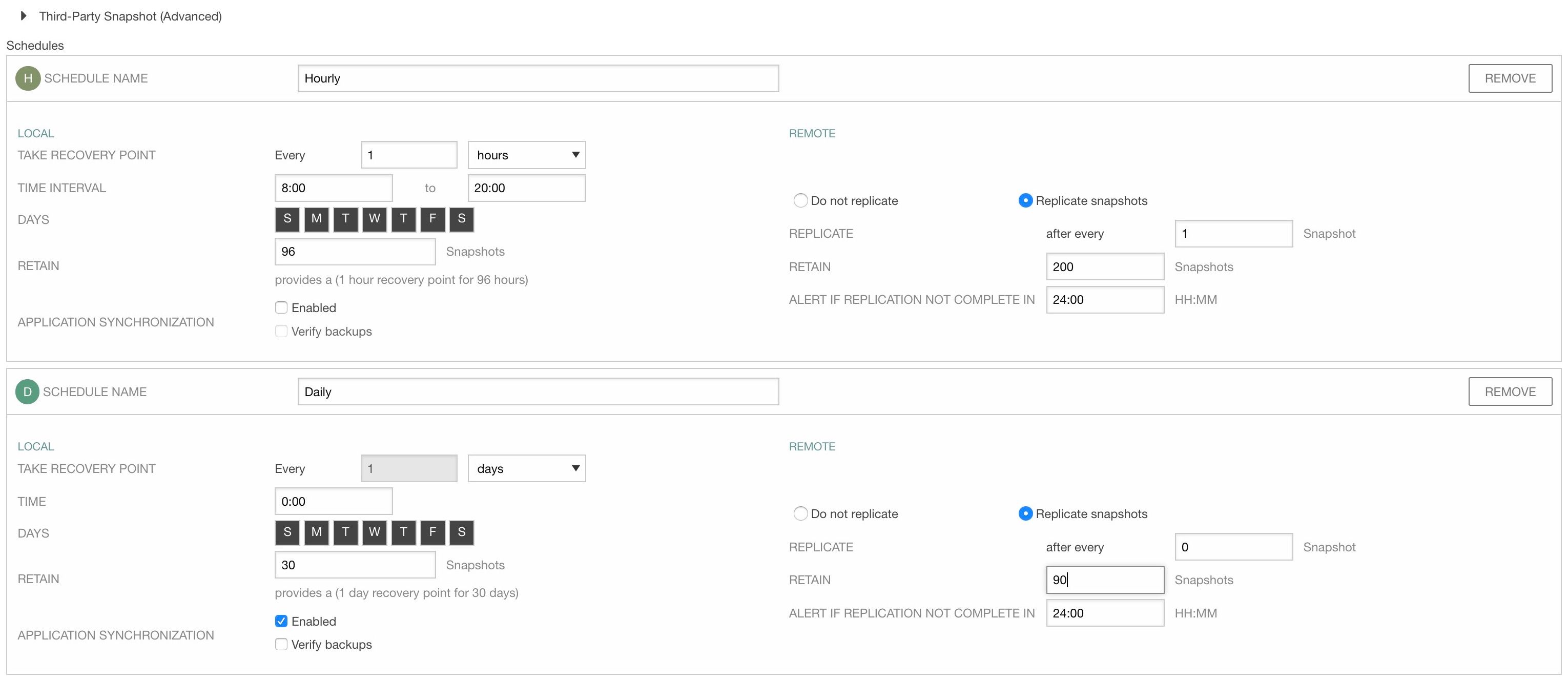

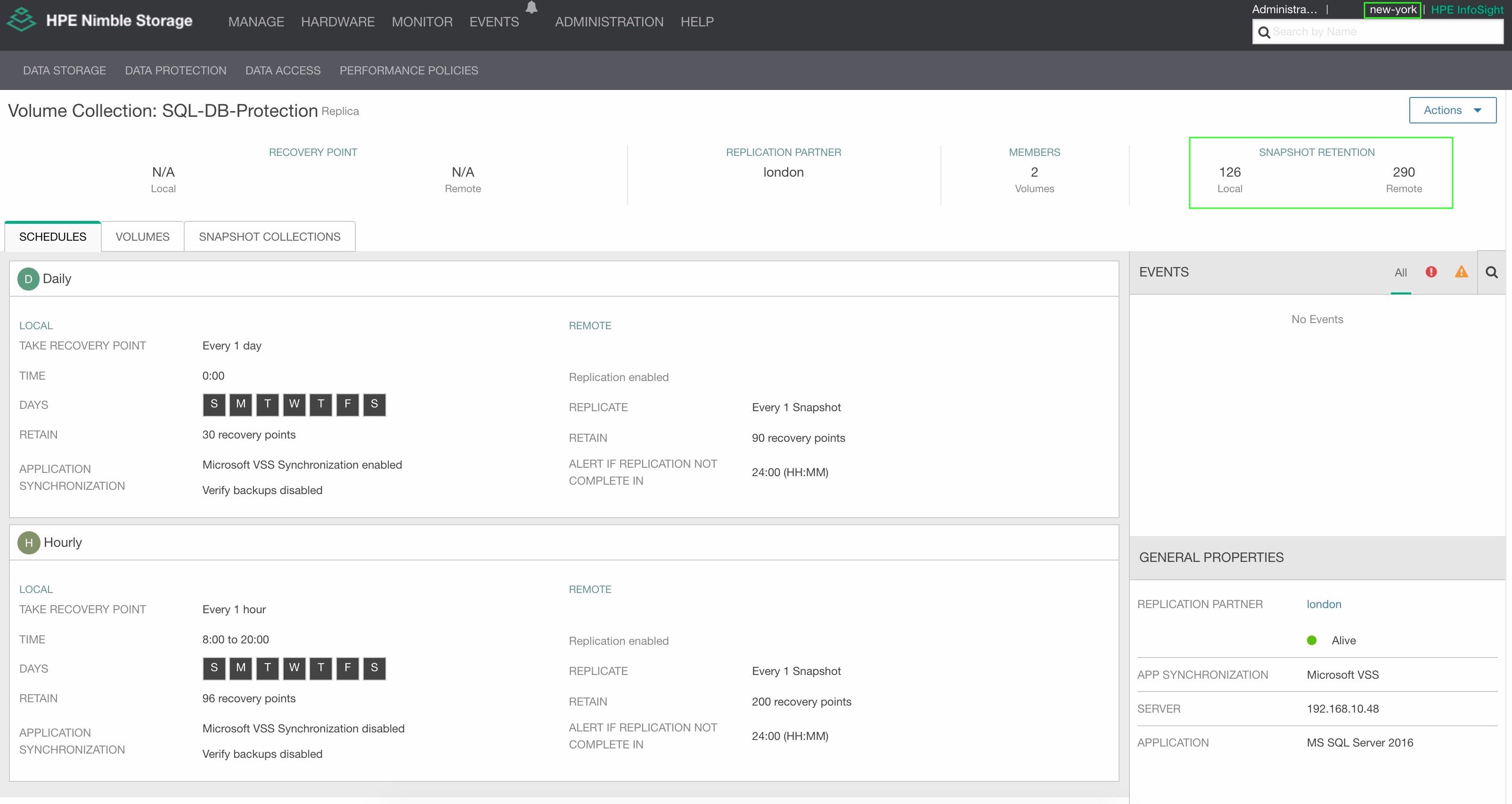

Any schedules and retention policies defined within the Volume Collection were automatically applied to that single downstream replication partner. As you can see here, we’re replicating and retaining 200 hourly snapshots and 90 daily snapshots in New York, giving me a rollback period of just over 8 days of hourly and 3 months of daily recovery points for clone, failover or handover operations.
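As a quick check on those numbers, the sketch below estimates a rollback window from a retention count and a snapshot interval. It is a hypothetical helper in plain Python, not a NimbleOS feature, and it assumes snapshots are taken at a fixed interval. It simply multiplies count by interval, which slightly overstates the true span (N snapshots taken at a fixed interval actually cover N - 1 intervals).

```python
from datetime import timedelta


def rollback_window(retained_snapshots: int, interval: timedelta) -> timedelta:
    """Approximate how far back the retained snapshots reach.

    Assumes one snapshot per fixed `interval`; uses count * interval as a
    simple approximation of the covered period.
    """
    if retained_snapshots < 1:
        return timedelta(0)
    return retained_snapshots * interval


# The New York policy from this example (hypothetical variable names).
hourly = rollback_window(200, timedelta(hours=1))   # 8 days 8 hours
daily = rollback_window(90, timedelta(days=1))      # 90 days, roughly 3 months
```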

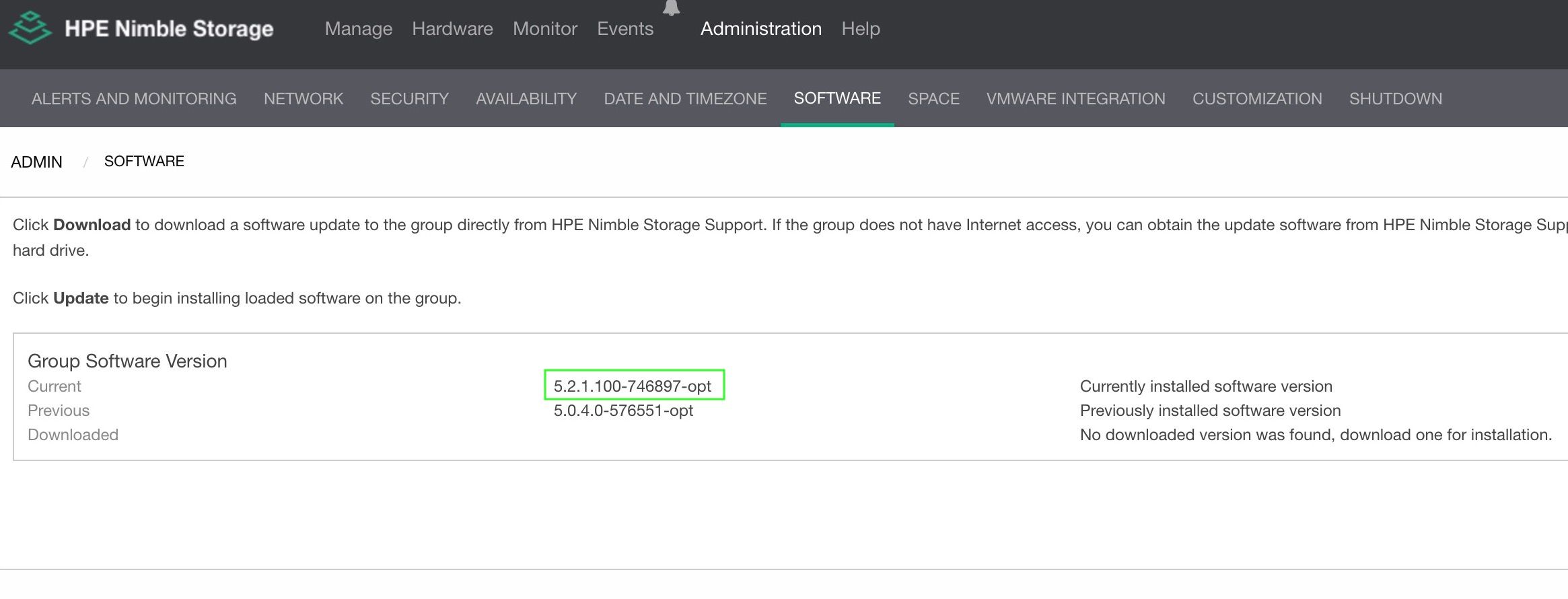

Let’s upgrade London to NimbleOS 5.2!

As always, this is a simple, painless process that is non-disruptive to hosts and applications.

NOW I want to redesign my data protection strategy using the new functionality, and protect the same datasets with different retention policies across multiple sites. I'm going to keep 2 years of weekly recovery points in Amsterdam, in addition to the protection I currently have running in New York.

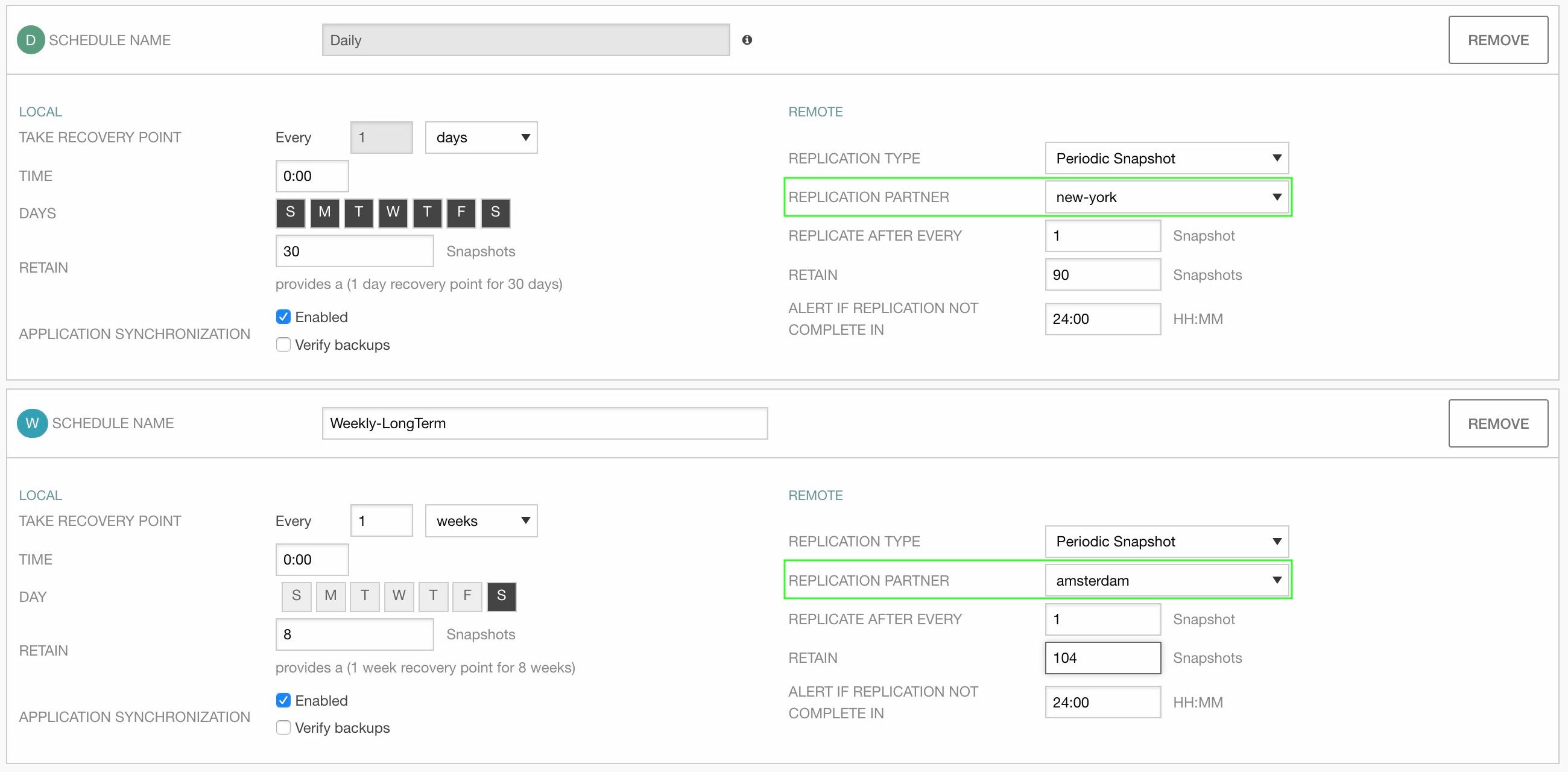

When we revisit the Volume Collection pane, we can see that “Replication Partner” has moved inside the Schedule itself. This allows us to define completely different replication partners, schedules and retention policies for a single volume across multiple destination arrays, all controlled within a single volume collection.
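One way to picture this change is as a data model in which the replication partner is an attribute of each schedule rather than of the whole collection. The Python classes below are hypothetical and are not the NimbleOS REST API or its object model; the local retention counts are invented for illustration, while the remote counts mirror the New York and Amsterdam policies in this post.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Schedule:
    """One protection schedule; in this model the partner lives on the schedule."""
    name: str
    retain_local: int                # snapshots kept on the source array (illustrative)
    partner: Optional[str] = None    # downstream array for this schedule only
    retain_remote: int = 0           # snapshots kept on that partner


@dataclass
class VolumeCollection:
    name: str
    schedules: List[Schedule] = field(default_factory=list)

    def partners(self) -> List[str]:
        """Distinct downstream partners, in the order their schedules appear."""
        seen: List[str] = []
        for schedule in self.schedules:
            if schedule.partner and schedule.partner not in seen:
                seen.append(schedule.partner)
        return seen

    def retention_on(self, partner: str) -> Dict[str, int]:
        """Map schedule name -> snapshots retained on the given partner."""
        return {s.name: s.retain_remote for s in self.schedules if s.partner == partner}


# One collection, two destinations, independent retention on each.
sql = VolumeCollection("SQL", [
    Schedule("hourly", retain_local=48, partner="New York", retain_remote=200),
    Schedule("daily", retain_local=30, partner="New York", retain_remote=90),
    Schedule("weekly", retain_local=4, partner="Amsterdam", retain_remote=104),
])
```

In the pre-5.2 layout, `partner` would instead be a single field on `VolumeCollection`, which is exactly what limited a volume to one destination.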

In my modified example, I’ve enhanced my DR and backup policies to provide cross-continent protection. Alongside my 200 hourly and 90 daily recovery points in New York, I have defined a new weekly “long-term” retention policy, stored in Amsterdam, with 2 years' worth of weekly recovery points. This drastically boosts my data protection capabilities.
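Working in the other direction, you can estimate how many snapshots to retain for a target retention period. This is a hypothetical helper, assuming a fixed snapshot interval. Note that the answer depends on how you define "2 years": 104 weeks needs 104 weekly points, while 730 days needs 105, because the last partial week still needs a snapshot to cover it.

```python
import math
from datetime import timedelta


def snapshots_needed(target: timedelta, interval: timedelta) -> int:
    """Smallest number of fixed-interval snapshots whose count * interval covers `target`."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    # timedelta / timedelta yields a float ratio; round up to whole snapshots.
    return math.ceil(target / interval)


weekly_104_weeks = snapshots_needed(timedelta(weeks=104), timedelta(weeks=1))
weekly_730_days = snapshots_needed(timedelta(days=730), timedelta(weeks=1))
```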

The Volume Collection summary page now shows a single SQL DB protection policy in London with independent data protection policies on both New York and Amsterdam as destinations.

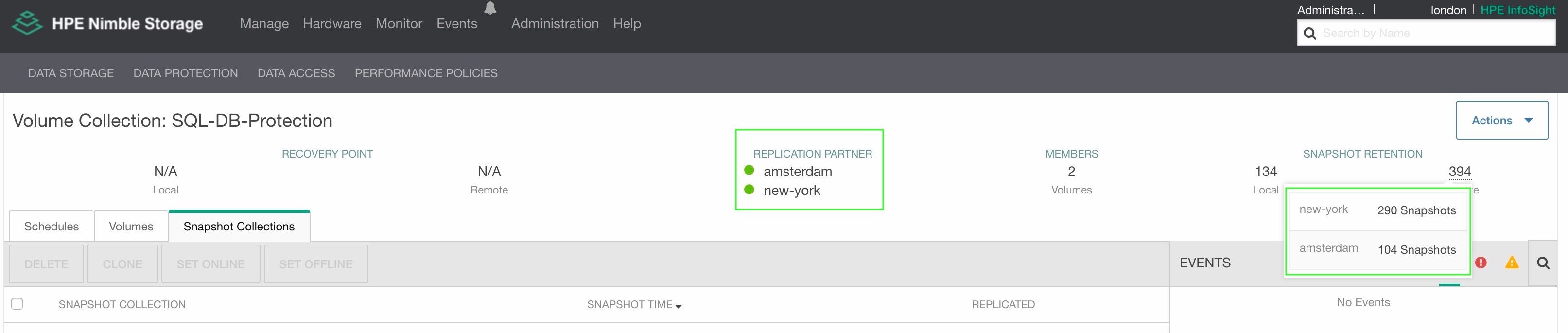

Jumping into the SQL volume collection, we can see its details, which now include both replication partners AND the retention policy on each partner.

Scoping out the destination arrays, each location now shows its own schedule and retention policy, completely independent of the other.

- New York: hourly and daily recovery points (up to 3 months)

- Amsterdam: weekly longer-term recovery points (up to 2 years)

Note: the vCenter HTML5 plugin will be updated to support the new fan-out replication functionality in the next milestone release.

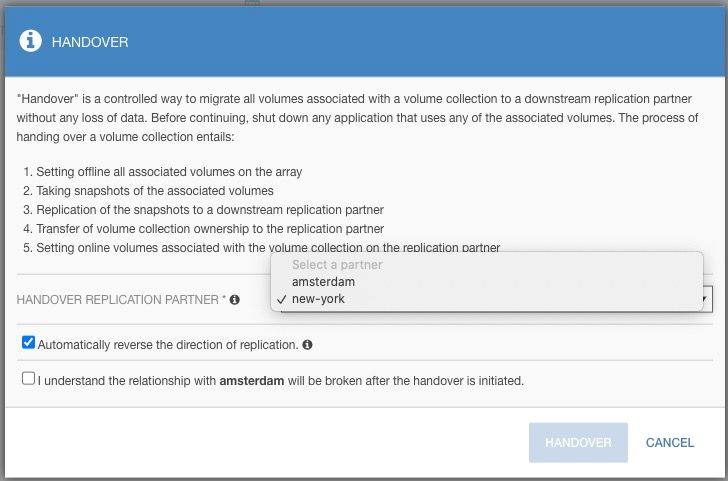

Finally, we can invoke disaster recovery or data protection measures on either site. We can choose to PROMOTE the volume on a site of our choosing (should something disastrous happen and we need to start running on our DR site of choice), and we can gracefully hand over ownership of volumes across sites using the HANDOVER option.

Notice that when I now select HANDOVER on London, it asks me which site I want to hand ownership over to.

What happens to the third site when you hand over from the primary to a chosen DR site?

- I have chosen to hand over ownership of the data from London to New York. The roles switch: New York assumes ownership of the datasets, and London becomes a downstream replica of New York.

- Replication of the affected volume collections to the third site, the one you didn't hand over to (Amsterdam in this case), is discontinued. You are asked to confirm that you understand this via an additional checkbox. All previously replicated copies of your data are preserved and remain intact. The volume collections remain on the original site, but are inactive.

- If you were to hand ownership back to the original primary site (London), replication to the third site (Amsterdam) would recommence from a common copy of the data found on both sites.
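The handover rules above can be modelled as a small state machine. The Python sketch below is a hypothetical illustration of the behaviour described in this post (roles swap, the third site is paused rather than deleted, and it resumes when ownership returns to the site that paused it); it is not how NimbleOS implements handover. The `latest_common_snapshot` helper shows one simple way to find the "common copy" both sites hold, assuming each site's snapshot names are listed oldest to newest.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


def latest_common_snapshot(a: List[str], b: List[str]) -> Optional[str]:
    """Newest snapshot name held by both sites (each list ordered oldest to newest)."""
    on_b = set(b)
    for name in reversed(a):
        if name in on_b:
            return name
    return None


@dataclass
class FanOutGroup:
    """Hypothetical model of one volume collection protected across three sites."""
    owner: str
    partners: List[str]
    # partner -> the site whose replication to it was paused by a handover
    paused: Dict[str, str] = field(default_factory=dict)

    def handover(self, new_owner: str) -> None:
        if new_owner not in self.partners:
            raise ValueError(f"{new_owner} is not a replication partner of {self.owner}")
        if new_owner in self.paused:
            raise ValueError(f"replication to {new_owner} is currently paused")
        old_owner = self.owner
        # The old owner becomes a downstream replica of the new owner.
        self.partners = [old_owner if p == new_owner else p for p in self.partners]
        self.owner = new_owner
        # Replication to any third site is paused; its existing replicas stay intact.
        for p in self.partners:
            if p != old_owner and p not in self.paused:
                self.paused[p] = old_owner
        # Handing back to the site that paused a partner lets replication resume.
        for p, origin in list(self.paused.items()):
            if origin == new_owner:
                del self.paused[p]

    def replicating_to(self) -> List[str]:
        """Partners that are actively receiving replicas from the current owner."""
        return [p for p in self.partners if p not in self.paused]
```

In a real resume, the restarted stream would begin from the newest snapshot both sites share, which is what `latest_common_snapshot` approximates.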

Multi-Site Data Protection

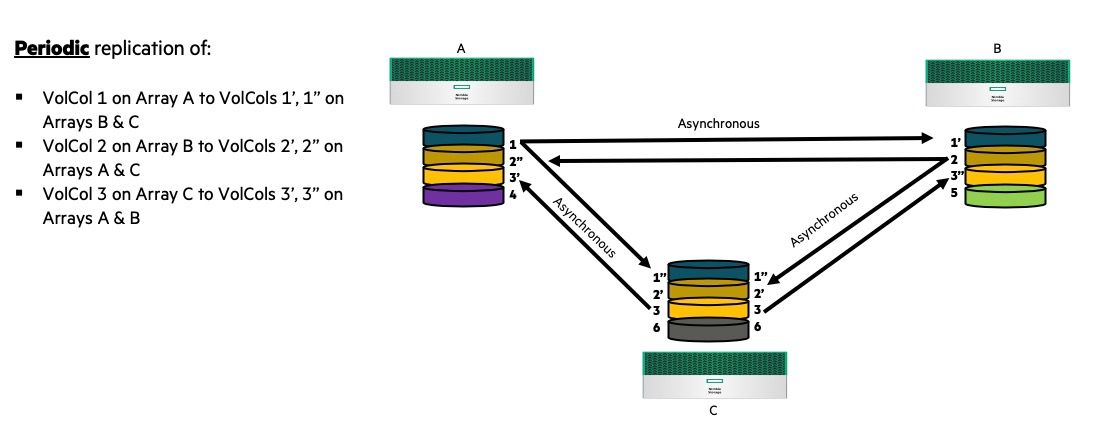

In my example we had a single volume replicating to two disparate locations. We can stretch this concept further into a three-way active/active/active configuration, with each site's volumes replicated to the two other locations. As shown in the diagram, Site A replicates its data to B and C, B replicates to A and C, and C replicates to A and B.
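The three-way layout is a full mesh of replication flows. As a hypothetical sketch, the standard-library `itertools.permutations` lists every directed (source, destination) pair: for n sites there are n * (n - 1) flows, so three sites give six, with each site fanning out to the other two.

```python
from itertools import permutations
from typing import List, Tuple


def full_mesh(sites: List[str]) -> List[Tuple[str, str]]:
    """Every directed (source, destination) pair when each site replicates to all others."""
    return list(permutations(sites, 2))


flows = full_mesh(["A", "B", "C"])
```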

Long term retention in Public Cloud using HPE Cloud Volumes

Nimble has the additional coolness of being able to replicate, clone, promote, demote and migrate data back from the Public Cloud using HPE Cloud Volumes. This is built into the Nimble array UI, and any customer can configure a replication partnership to any HPE Cloud Volumes region they wish, just like a regular Nimble array. This is PERFECT for long-term data retention, and allows me to clone or promote my dataset to AWS, Azure or GCP at the click of a button.

In my new example, rather than having a physical array in Amsterdam that I have to buy, manage and look after, I'm now consuming a DR replication-target-as-a-Service in EU-West-1 using HPE Cloud Volumes, all controlled via the Nimble platform just like a regular replication target.

This is probably a good idea for a follow-on blog post... Stay tuned on that one!!

One last thing: you’ll notice in the “Replication Type” drop-down list there is an option for Synchronous replication, but it’s greyed out. This is for two reasons: we haven’t configured synchronous replication, and, more importantly, in this release native fan-out replication within Nimble is only available with Periodic Snapshot replication. If you want fan-out replication of a single volume protected under Peer Persistence, this is possible using HPE Recovery Manager Central (RMC). Looking to the future, we do plan to roll out this enhanced functionality natively in the Nimble array soon.
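The restriction above can be expressed as a simple validation rule: a volume with more than one replication target must use periodic replication for every target, while a single synchronous target remains allowed. The `ReplicationTarget` class and the mode strings below are hypothetical, chosen for illustration only; they are not NimbleOS settings or API values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicationTarget:
    partner: str
    mode: str  # hypothetical labels: "periodic" or "synchronous"


def validate_fan_out(targets: List[ReplicationTarget]) -> None:
    """Reject fan-out configurations that the post says are not native in NimbleOS 5.2."""
    if len(targets) > 1 and any(t.mode != "periodic" for t in targets):
        raise ValueError("native fan-out requires periodic snapshot replication for every target")
```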

So there we have it! Super easy to switch on, consume and adopt - across any generation of Nimble array you can get your hands on!

Hope this is useful. Please do comment below with any questions or thoughts. You're also always welcome to speak with Nimble Support or your local Storage SA if you'd like to talk this through in more detail.

twitter: @nick_dyer_
