Tech preview: Network File System Server Provisioner for HPE CSI Driver for Kubernetes

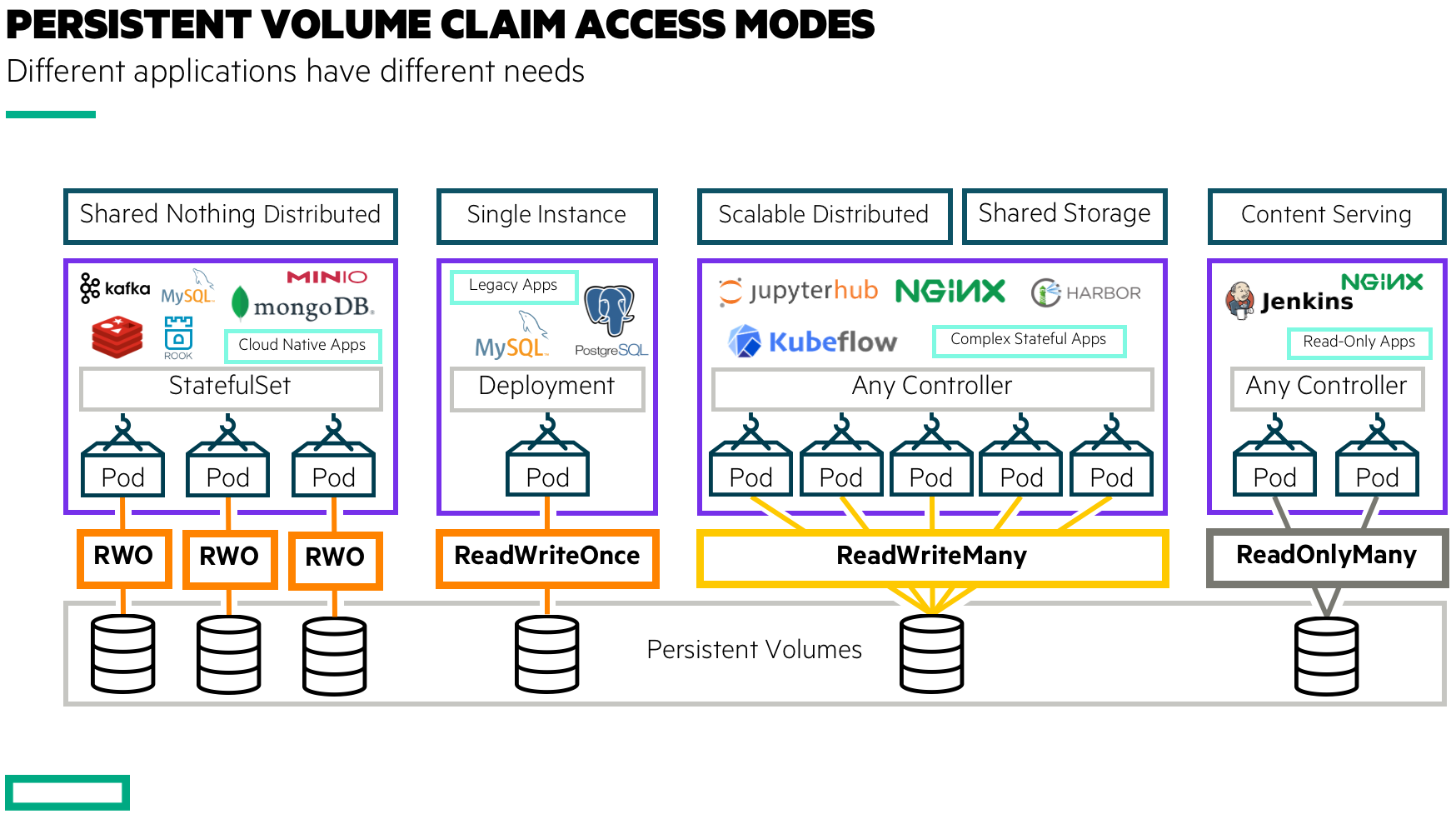

Applications running on Kubernetes have diverse storage needs. Some don’t require any storage at all. But the vast majority of the stateful applications that require persistent storage are deployed in a pattern usually referred to as a “shared nothing” architecture.

Failure detection, resilience and discovery all happen at the application layer. A typical example would be running a distributed database such as MongoDB, or using a master and replica pattern with MariaDB and similar databases. These deployment patterns only need the ReadWriteOnce (RWO) access mode, which HPE Nimble Storage, HPE Primera and HPE Cloud Volumes provide, so we are covered! Then we have the last mile, ReadWriteMany (RWX) and ReadOnlyMany (ROX) deployments, which include applications that run multiple Kubernetes pods across multiple worker nodes and require simultaneous access to the same filesystem.

The most common pattern is that of a separate system, either external to the Kubernetes cluster or running on Kubernetes itself, providing the shared filesystem access. Network File System (NFS) in particular has historically been very popular, but distributed filesystems such as the HPE Data Fabric (MapR), Ceph and Gluster are frequently mentioned in these conversations. It’s not uncommon to see vendors touting that RWX is required for Kubernetes, which is completely false.

Another pattern we’ve seen, and quite frankly used ourselves, is to use the NFS server provisioner Helm chart to provide RWX. The simplicity in the design of the NFS server provisioner is also its limitation. It doesn’t provide high availability (HA), and it serves the cluster from a single RWO volume and a single server instance. Multiplying the pattern would require multiple StorageClass objects and a lot of manual provisioning to create a sensible separation for the cluster workloads.

With the introduction of an NFS Server Provisioner for HPE CSI Driver for Kubernetes, we wanted to address several things:

- Provide some sort of fault tolerance and automatic recovery in the event of a node failure or network partition.

- Give users granular control of each RWX/ROX Persistent Volume Claim, performance characteristics, capacity and data management (snapshots and clones).

- Create a unified solution for all supported Container Storage Providers (CSP) using the HPE CSI Driver.

- Simplify use, for both the Kubernetes cluster administrators and other users who deploy applications.

Architectural challenges

The inherent design principles of deploying a single file server in the same tier as the application present a number of challenges and caveats that users need to be made aware of. They also open up some interesting deployment options that were not possible before. The obvious benefit the RWX capability provides is being able to deliver the last mile for a complete solution from a single platform.

The NFS server leverages a standard RWO claim from a supported HPE CSI Driver backend. The user RWX/ROX claim is essentially an NFS client to the NFS export. In an effort to make this as seamless and as Kubernetes-native as possible, data between the NFS client and server flows across the pod network; the data between the NFS server and the HPE CSI Driver backend uses the conventional data fabric, iSCSI or FC. This inherently means that there will be a lot of double buffering involved, and network latency between worker nodes will matter a lot if the user workload (pod) isn’t co-located with the NFS server. The NFS server itself is NFS-Ganesha, the industry standard user-mode NFS implementation. It is high-performance and multi-threaded, with no intention of letting CPU cycles sit idle.
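From the user's side, the RWX/ROX claim described above is an ordinary PersistentVolumeClaim with a different access mode. The following is a minimal sketch in Python that builds such a manifest as a plain dictionary using only standard Kubernetes fields. The claim name `shared-data` and the StorageClass name `hpe-nfs-example` are hypothetical placeholders, and the StorageClass parameters that actually enable the NFS Server Provisioner are deliberately not shown; consult the reference documentation for those.

```python
import json

# Access modes defined by Kubernetes for persistent volume claims.
ACCESS_MODES = ("ReadWriteOnce", "ReadOnlyMany", "ReadWriteMany")


def build_claim(name, storage_class, size, access_mode="ReadWriteMany"):
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    if access_mode not in ACCESS_MODES:
        raise ValueError(f"unsupported access mode: {access_mode}")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
            # Hypothetical StorageClass name; it is assumed to be
            # configured for the NFS Server Provisioner elsewhere.
            "storageClassName": storage_class,
        },
    }


claim = build_claim("shared-data", "hpe-nfs-example", "32Gi")
print(json.dumps(claim, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.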

As illustrated above, it’s possible to create dedicated NFS servers, either separated from the workloads, co-located with the workloads, or in a more promiscuous free-for-all mode.

Controls and constraints

There are quite a few parameters that may be used to tweak placement, resource consumption, and mount options. For the initial tech preview there are no memory or CPU constraints on the NFS servers. At this time only twenty NFS servers are allowed per worker node. The anticipation is that most applications and clusters are sized in a manner where a dozen or so RWX/ROX claims in the teens of terabytes are sufficient for the applications that need them. If this isn’t the primary use case being addressed, it’s advisable to look at the HPE Data Fabric for a more scalable and performant RWX/ROX solution for Kubernetes. In other words, the NFS Server Provisioner provided with the HPE CSI Driver for Kubernetes is not meant to address the big data use cases that crave multiple gigabytes per second of throughput and petabytes of capacity. Those workloads require distributed, scalable and performant filesystems. The NFS Server Provisioner provides a user-mode NFS server on top of a single local filesystem, such as XFS.
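To get a feel for the twenty-servers-per-node limit, here is a rough sizing sketch in Python. It assumes that each RWX/ROX claim is served by its own NFS server, which is an assumption made for this illustration, and the function names are hypothetical. It also checks whether the claims would still fit after losing some worker nodes.

```python
# Limit stated for the tech preview.
MAX_NFS_SERVERS_PER_NODE = 20


def max_nfs_servers(worker_nodes, per_node=MAX_NFS_SERVERS_PER_NODE):
    """Upper bound on NFS servers the cluster can host."""
    if worker_nodes < 0:
        raise ValueError("worker_nodes must not be negative")
    return worker_nodes * per_node


def claims_fit(claims, worker_nodes, nodes_lost=0):
    """True if every claim still has room after losing `nodes_lost` nodes.

    Assumes one NFS server per RWX/ROX claim (illustrative assumption).
    """
    surviving = max(worker_nodes - nodes_lost, 0)
    return claims <= max_nfs_servers(surviving)


print(max_nfs_servers(3))               # 3 nodes * 20 servers = 60
print(claims_fit(50, 3))                # 50 <= 60
print(claims_fit(50, 3, nodes_lost=1))  # 50 > 40 after losing one node
```

The last call shows why headroom matters: a cluster that fits comfortably at full strength may not have room to reschedule every NFS server after a node failure.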

Part of the tech preview is also a “pod monitor”. It’s designed to monitor pods with a certain label and take action if the node the pod runs on becomes unresponsive. The purpose is to have Kubernetes gracefully reschedule the NFS server pod controlled by the deployment. Naturally, this will pause IO for any RWX/ROX claims dependent on the NFS server, and the length of the automatic recovery depends on many different factors. It could potentially be measured in minutes. A similar latency spike will also be introduced when the NFS servers are rescheduled due to node upgrades. If this is unacceptable for the workloads, again, the HPE Data Fabric might be a more suitable solution, as it’s a true distributed filesystem with orders of magnitude higher tolerance for outages, which go unnoticed by the applications dependent on the data.
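The decision the pod monitor makes can be sketched in a few lines of Python. This is not the actual implementation; it only illustrates the selection logic described above, using in-memory data instead of the Kubernetes API. The label key and value, the `Pod` class and the function name are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical label used to opt pods in to monitoring.
MONITOR_LABEL = ("monitored-by", "example-pod-monitor")


@dataclass
class Pod:
    name: str
    node: str
    labels: dict = field(default_factory=dict)


def pods_to_reschedule(pods, node_ready):
    """Return names of labeled pods whose node is unresponsive.

    `node_ready` maps node name to True (responsive) or False.
    A node missing from the map is treated as unresponsive.
    """
    key, value = MONITOR_LABEL
    return [
        pod.name
        for pod in pods
        if pod.labels.get(key) == value
        and not node_ready.get(pod.node, False)
    ]


pods = [
    Pod("nfs-a", "worker-1", {"monitored-by": "example-pod-monitor"}),
    Pod("nfs-b", "worker-2", {"monitored-by": "example-pod-monitor"}),
    Pod("web-1", "worker-2"),  # unlabeled, so the monitor ignores it
]
node_ready = {"worker-1": True, "worker-2": False}
affected = pods_to_reschedule(pods, node_ready)
print(affected)  # only the labeled pod on the unresponsive node
```

A real monitor would then act on the affected pods so that the deployment controller can start replacements on a healthy node; that step, and the IO pause that comes with it, is outside this sketch.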

Next steps

As stated, the NFS Server Provisioner is currently in tech preview and should be treated as an open beta. It’s delivered with the HPE CSI Driver for Kubernetes 1.2.0, and will work with any supported backend CSP. Feedback is immensely appreciated at this stage! Please feel free to connect with the team on Slack, or submit any issues or concerns through your regular HPE support channels. You can sign up for the HPE DEV Slack community at slack.hpedev.io* and log in at hpedev.slack.com.

It’s also possible to submit tickets via the GitHub issue tracker for the HPE CSI Driver for Kubernetes if that’s preferred.

The NFS Server Provisioner is already part of the documentation on SCOD. Check out the StorageClass options and PVC Access Modes for reference documentation, which may be subject to change (!). If you’re interested in a more in-depth tutorial, head over to HPE DEV: Introducing the NFS Server Provisioner for the HPE CSI Driver for Kubernetes.

Check out HPE CSI Driver for Kubernetes 1.2.0, available now! Stay tuned to the Around the Storage Block technical section for future exciting announcements for the HPE CSI Driver for Kubernetes.

* Sign up not required for HPE employees
