
The most flexible and efficient high-performance network transport for NVMe over Fabrics

The rapidly increasing speed of servers, storage, and network connections is stressing traditional network transports beyond their ability to support modern architectures and applications. Meeting these demands requires a more efficient transport that reduces latency, frees up CPU processing power, and realizes the full potential of flash storage.

The need for more efficient networking

Faster servers demand more data

Today’s servers support higher speeds and heavier, more powerful computing. This is demonstrated by the renewed competition in the CPU world between Intel, AMD, and ARM, as well as the emergence of GPU-based accelerators that keep the growth rate of compute power high. Top-end servers can already drive 200Gb/s and will soon approach 400Gb/s of throughput; they often have co-processors such as GPUs or FPGAs that deliver even more compute power – and demand correspondingly more throughput.

In addition, the switch from VMs to more efficient containers means more compute instances can run on each physical server, increasing compute and I/O density. The increasing use of scale-out architectures, micro-services, and distributed computing for Big Data, machine learning (ML), and artificial intelligence (AI) also increases the amount of east-west traffic between servers for both compute and storage. As a result, it’s common for modern servers to require at least a 25GbE connection; faster servers are using 100GbE and above.

Faster storage needs faster networks

The revolution in flash storage, moving from spinning hard drives to NAND flash, has increased storage throughput. SSD and flash adoption continues to advance, and more than 80% of storage arrays are now flash-based. Emerging protocols such as NVMe are needed to extract the greatest possible performance, with lower latency, out of servers, storage, and storage networking infrastructure, so that business applications are not bottlenecked and operational SLAs can be maintained.

NVMe, according to Gartner, is expected to be the number one storage protocol of the future. Flash arrays being designed with NVMe SSDs need to support as much as 400Gb/s of I/O bandwidth for large reads and writes. This trend will continue with new, even faster non-volatile memory (NVM) technologies. As storage gets faster, the network must not only run faster, with more bandwidth and lower latency, but also more efficiently. Either a bandwidth bottleneck or a high-latency network will limit storage performance and prevent customers from realizing the full value of their high-performance flash storage.

Faster networks require more efficient transport

The rapid growth of data, and the growing requirements for I/O performance and low latency, have created a unique set of challenges. Because servers demand ever more performance and solid-state storage can now deliver it, faster network speeds are rapidly being adopted. Shipments of 25/100GbE and 200/400GbE are increasing rapidly, and adoption will be even faster over the next three years. Today, all major server vendors and many storage vendors already offer the option to add 25GbE and 100GbE networking ports.

NVMe and NVMe over Fabrics

NVMe is the next-generation storage protocol designed to replace SCSI, purpose-built for SSDs and memory-based storage technology (it does not support rotating disks). Because it is optimized for SSD and memory storage, it is much more efficient than SCSI, and therefore delivers lower latency and higher performance.

NVMe over Fabrics (NVMe-oF) is a protocol specification designed to connect hosts to storage over a network fabric using the NVMe protocol. It extends NVMe beyond the local PCIe bus to Fibre Channel, Ethernet, and InfiniBand fabrics. It delivers faster, more efficient connectivity between storage and servers, as well as reduced CPU utilization on application host servers.

There are three primary transport choices for NVMe over Fabrics. The first is FC-NVMe. For existing FC SAN customers, this can be the go-to choice because it requires little change to their existing FC SAN environment. The second is NVMe over RoCE, which uses the Ethernet network to deliver low latency. This requires RDMA-enabled NICs and a network that is typically configured to be lossless, with the DCB and PFC features enabled. HPE offers a variety of networking switches that support such an environment, as well as RDMA-enabled Ethernet NICs. The third option is NVMe over TCP. This is analogous to iSCSI, as it provides NVMe storage connectivity over a standard TCP/IP network. Operating system vendors are only now starting to provide support for NVMe over TCP.
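To make these trade-offs concrete, here is a minimal Python sketch that encodes the three options as a simple decision and, for the two IP-based transports, builds the command line a Linux host might use to attach to a subsystem with the open-source nvme-cli tool. The `HostEnvironment` fields, `choose_transport`, and `connect_args` are hypothetical helpers written for illustration; the address and NQN are placeholders, and port 4420 is assumed as the conventional NVMe-oF service port. FC-NVMe connections use a different addressing scheme (WWNN/WWPN pairs) and are deliberately left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostEnvironment:
    """Hypothetical summary of a host's connectivity (illustrative only)."""
    has_fc_san: bool          # an existing Fibre Channel SAN is in place
    has_rdma_nics: bool       # RDMA-capable Ethernet NICs are installed
    lossless_ethernet: bool   # DCB/PFC configured end to end


def choose_transport(env: HostEnvironment) -> str:
    """Map the three NVMe-oF options to nvme-cli transport names (hypothetical helper)."""
    if env.has_fc_san:
        return "fc"    # FC-NVMe: minimal change to an existing FC SAN
    if env.has_rdma_nics and env.lossless_ethernet:
        return "rdma"  # NVMe over RoCE
    return "tcp"       # NVMe over TCP on a standard TCP/IP network


def connect_args(transport: str, traddr: str, subnqn: str,
                 trsvcid: str = "4420") -> List[str]:
    """Build (but do not run) an `nvme connect` command for rdma or tcp."""
    if transport not in ("rdma", "tcp"):
        raise ValueError("this sketch only handles the IP-based transports")
    return ["nvme", "connect",
            "-t", transport,   # transport type
            "-a", traddr,      # target IP address
            "-s", trsvcid,     # transport service ID, assumed 4420 here
            "-n", subnqn]      # NVMe Qualified Name of the target subsystem


if __name__ == "__main__":
    env = HostEnvironment(has_fc_san=False, has_rdma_nics=True,
                          lossless_ethernet=True)
    transport = choose_transport(env)
    # Placeholder address (TEST-NET-1 documentation range) and NQN.
    print(" ".join(connect_args(transport, "192.0.2.10",
                                "nqn.2014-08.com.example:array1")))
```

With RDMA NICs and a lossless network, the sketch selects `rdma` and prints `nvme connect -t rdma -a 192.0.2.10 -s 4420 -n nqn.2014-08.com.example:array1`. Only the argument list is built, so the example is safe to run on a machine without NVMe-oF hardware.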

Benefits of NVMe over Fabrics

Our customers receive significant benefits from NVMe over Fabrics, including:

- Low latency

- Additional parallel requests

- Increased overall performance

- Reduced OS storage stack on the server

- Faster end-to-end solution with NVMe storage arrays

- Choice: multiple implementation types

There are additional benefits, as well:

- Ability to scale to thousands of devices

- Simultaneous multi-path support between the NVMe host initiator and target

- Multi-path support to enable send/receive from multiple hosts and storage subsystems

- Potential for higher speeds over network fabrics as technology evolves

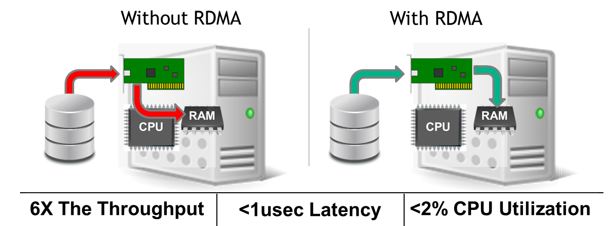

RDMA eliminates the network bottleneck

The most efficient response to meet the needs of faster servers, faster storage, and faster network speeds is to use Remote Direct Memory Access (RDMA). This enables direct memory transfers between servers, or between servers and storage, without burdening the CPU with networking tasks. It also avoids making extra copies of the data in the kernel, buffers, or network stack, and allows the network adapter to offload network processing from the CPU cores. On initiators (servers) this frees up CPU cores to run applications, VMs, containers, and management software. On storage targets (arrays) the CPU cores can run storage-related tasks such as data protection (RAID, snapshots, replication), data efficiency (compression, deduplication, thin provisioning), and storage protocols, or simply support many more simultaneous client read/write operations.

Because network processing is offloaded to hardware, RDMA increases efficiency and reduces latency, supporting a higher number of messages per second – or IOPS – than TCP. In many cases it also increases effective bandwidth, bringing it closer to the network’s line rate.
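A quick back-of-the-envelope calculation shows why per-message processing cost matters at these speeds. The sketch below computes how many I/Os per second are needed to saturate a link with small (4 KiB) transfers. It assumes every byte on the wire is payload, so it ignores Ethernet, IP, and transport header overhead; real figures will be somewhat lower.

```python
def iops_at_line_rate(link_gbps: float, io_size_bytes: int) -> float:
    """I/Os per second needed to fill a link, counting payload bytes only."""
    bytes_per_second = link_gbps * 1e9 / 8   # Gb/s -> bytes/s
    return bytes_per_second / io_size_bytes


if __name__ == "__main__":
    for gbps in (25, 100, 200):
        iops = iops_at_line_rate(gbps, 4096)
        print(f"{gbps}GbE, 4 KiB I/Os: {iops / 1e6:.2f} million IOPS")
    # 25GbE, 4 KiB I/Os: 0.76 million IOPS
    # 100GbE, 4 KiB I/Os: 3.05 million IOPS
    # 200GbE, 4 KiB I/Os: 6.10 million IOPS
```

At 100GbE this works out to roughly three million 4 KiB I/Os per second, which helps explain why moving per-message work off the CPU and into the adapter makes a difference.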

Choosing the best RDMA transport

RDMA Options

There are two popular RDMA transport options on the market today:

- InfiniBand. InfiniBand is popular in high-performance computing (HPC) and technical computing.

- RoCE. RoCE is the predominant RDMA choice for enterprise and cloud environments, which largely run Ethernet.

Both support a range of wire speeds, including 100Gb/s and above, but multiple factors make RoCE the preferred choice for Ethernet deployments.

RoCE is the most efficient RDMA transport on Ethernet. It was designed from inception as an efficient RDMA transport, and it can run in different types of networking environments depending on the needs of the application and users. RoCE v2 is routable, so it can be used on Layer 2 or Layer 3 networks. Performance can be optimized either by using Data Center Bridging (DCB), with its Priority Flow Control (PFC), to create a lossless network, or by using RoCE congestion management based on Explicit Congestion Notification (ECN) in a lossy network. Looking at actual deployments and unit shipments, it’s clear that RoCE is by far the most popular choice for RDMA on Ethernet.

RoCE has many use cases that are increasingly popular in the enterprise and cloud. Network administrators and IT executives are under increasing pressure to accelerate application performance, and therefore need to ensure that their networks and applications are ready to support RoCE. RoCE is the clear leader when it comes to storage, as it typically delivers higher performance and ultra-low latency.
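The ECN-based option works by having switches mark packets instead of dropping them once a queue starts to build, and having senders slow down when those marks are reported back. The sketch below shows one common way this is configured and handled: a RED-style marking ramp between a minimum and maximum queue threshold, plus a sender rate cut modeled on DCQCN, a congestion control scheme widely used with RoCE v2. DCQCN is not named in the original text, and the thresholds, `pmax`, and the gain `g` are illustrative assumptions, not M-series defaults.

```python
from typing import Tuple


def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float,
                         pmax: float) -> float:
    """RED-style ECN marking probability for the current egress queue depth."""
    if queue_kb <= kmin_kb:
        return 0.0                      # short queue: never mark
    if queue_kb > kmax_kb:
        return 1.0                      # long queue: mark every packet
    # Linear ramp from 0 at Kmin up to pmax at Kmax.
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


def dcqcn_on_cnp(rate_gbps: float, alpha: float,
                 g: float = 1 / 256) -> Tuple[float, float]:
    """DCQCN-style sender reaction to one congestion notification packet (CNP).

    The rate is cut in proportion to alpha, the sender's running estimate of
    congestion, and alpha is then moved toward 1. Rate-increase phases and
    alpha decay timers from the full scheme are omitted.
    """
    new_rate = rate_gbps * (1 - alpha / 2)
    new_alpha = (1 - g) * alpha + g
    return new_rate, new_alpha


if __name__ == "__main__":
    # Illustrative thresholds (KB of queued data), not vendor defaults.
    for q in (2, 50, 150, 250):
        p = ecn_mark_probability(q, kmin_kb=5, kmax_kb=200, pmax=0.01)
        print(f"queue {q} KB -> mark probability {p:.4f}")
    rate, alpha = 100.0, 1.0
    for _ in range(3):
        rate, alpha = dcqcn_on_cnp(rate, alpha)
        print(f"rate after CNP: {rate:.1f} Gb/s")
```

Starting from `alpha = 1`, each CNP halves the sending rate (100, then 50, 25, 12.5 Gb/s), which is how a lossy RoCE network can back off before queues overflow.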


As deployments of ML and AI have exploded, NVMe over Fabrics (NVMe-oF) has become the hottest development in storage technology. Previously popular only in research or HPC environments, these technologies are now increasingly used by enterprises and by cloud and Web 2.0 service providers. The growth of autonomous cars, the Internet of Things (IoT), increasingly automated analysis of Big Data, e-commerce, cyber-security threats, and digital video are all factors in the rapid expansion of ML/AI technology. Both the learning/training and the inferencing stages of AI demand high network performance, so many types of ML and AI technology can benefit from RDMA via RoCE.

Hyperconverged Infrastructure (HCI) is one of the fastest-growing categories of data center infrastructure. It allows each server in a cluster to contribute compute, storage, hypervisor, and management resources to a shared pool that can run many applications. Various HCI solutions are starting to support RoCE for RDMA in order to achieve faster performance, especially for storage replication and live migration of virtual machines.

HPE M-series Ethernet Switch Family: Ideal for NVMe over Fabrics deployments

HPE M-series offers a storage-optimized Ethernet switch that provides superior RoCE support and can be used to build an Ethernet Storage Fabric (ESF). It offers the highest performance, measured in both bandwidth and latency, with 8 to 64 ports per switch and speeds from 1GbE to 200GbE per port. All the M-series models are non-blocking and allow enough uplink ports to build a fully non-blocking fabric. The latency is not only the lowest of any generally available Ethernet switch, but the silicon, software, and buffers are designed to keep latency consistently low across any mix of port speeds, port combinations, and packet sizes, which prevents congestion and helps optimize RoCE performance.

The M-series intelligent buffer design ensures physical memory is allocated to the ports that need it most, so I/O is treated fairly across all switch port combinations and packet sizes. Other switch designs typically segregate their buffer space into port groups, which makes them up to four times more likely to overflow and lose packets during a traffic microburst. This buffer segregation can also lead to unfair performance, where ports rated for the same speed exhibit wildly different performance under load.
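The effect of buffer partitioning on a microburst can be illustrated with a deliberately simplified model. The sketch below compares how much queue a single congested port can build under three policies: a buffer split evenly into port groups, a fully shared pool, and a shared pool with the classic dynamic-threshold limit (a port may queue at most `alpha` times the currently free buffer). It assumes only one port is congested and that nothing drains during the burst. The buffer size, group count, burst size, and `alpha` are hypothetical, and the model neither describes the actual M-series buffer algorithm nor reproduces the "four times" figure quoted above.

```python
def max_queue_bytes(total_buffer: int, groups: int, policy: str,
                    alpha: float = 1.0) -> int:
    """Largest queue one congested port can build while all other ports are idle."""
    if policy == "partitioned":
        # The port can only draw from its own group's slice of the buffer.
        return total_buffer // groups
    if policy == "shared":
        # Fully shared pool with no per-port cap.
        return total_buffer
    if policy == "dynamic":
        # Dynamic threshold: q <= alpha * (B - q), so q <= alpha * B / (1 + alpha).
        return int(alpha * total_buffer / (1 + alpha))
    raise ValueError(f"unknown policy: {policy}")


def dropped_bytes(burst_bytes: int, queue_limit: int) -> int:
    """Bytes lost if a burst arrives while the port drains nothing (worst case)."""
    return max(0, burst_bytes - queue_limit)


if __name__ == "__main__":
    MB = 1024 * 1024
    buffer_bytes, groups, burst = 16 * MB, 4, 6 * MB   # hypothetical values
    for policy in ("partitioned", "shared", "dynamic"):
        limit = max_queue_bytes(buffer_bytes, groups, policy)
        lost = dropped_bytes(burst, limit)
        print(f"{policy:12s} limit={limit // MB} MB dropped={lost // MB} MB")
```

In this toy setup the 6 MB burst fits in both shared variants (16 MB and 8 MB limits) but overflows a 4 MB partition, losing 2 MB. The dynamic threshold shows why practical shared buffers still cap a single port: it keeps room free for other ports that may congest at the same time.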

Storage intelligence and management

The M-series family has features specific to optimizing current and future storage networking, including RDMA. These include support for Data Center Bridging (DCB), including DCBX, Enhanced Transmission Selection (ETS), and Priority Flow Control (PFC). It also includes support for Explicit Congestion Notification (ECN), which can be used with RoCE as well. Congestion notifications are sent out more quickly with M-series switches than with other switches, resulting in faster response times from endpoints in the network and smoother RoCE traffic flows. The HPE M-series switches also offer strong telemetry features for monitoring throughput, latency, and traffic levels on every port.

These capabilities are important for ensuring the highest performance and best user experience when deploying RoCE on high-speed networks. Any slowdowns or congestion at the server, storage, or switch levels can be quickly detected and fixed, ensuring applications continue to enjoy excellent performance from RoCE as the workloads change and the network grows.
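Enhanced Transmission Selection, one of the DCB features listed above, lets an administrator guarantee each traffic class a share of link bandwidth while still letting classes borrow bandwidth that others leave idle. The following Python sketch models that behavior with a simple fluid (water-filling) allocation. The traffic class names and percentages are hypothetical, and real switches enforce ETS with per-packet weighted schedulers and may combine it with strict-priority classes and PFC, none of which this sketch models.

```python
from typing import Dict


def ets_allocate(link_gbps: float, weights: Dict[str, float],
                 demand_gbps: Dict[str, float]) -> Dict[str, float]:
    """Fluid model of work-conserving, ETS-style bandwidth sharing.

    Each traffic class is guaranteed a share proportional to its weight;
    bandwidth that a class does not need is redistributed to the classes
    that still want more, again in proportion to their weights.
    """
    alloc = {tc: 0.0 for tc in weights}
    active = {tc for tc in weights if demand_gbps.get(tc, 0.0) > 0}
    remaining = link_gbps
    while active and remaining > 1e-9:
        total_weight = sum(weights[tc] for tc in active)
        shares = {tc: remaining * weights[tc] / total_weight for tc in active}
        satisfied = {tc for tc in active if demand_gbps[tc] <= shares[tc]}
        if not satisfied:
            # Every remaining class wants more than its share: split what is left.
            for tc in active:
                alloc[tc] = shares[tc]
            break
        for tc in satisfied:
            alloc[tc] = demand_gbps[tc]      # these classes get all they ask for
            remaining -= demand_gbps[tc]
        active -= satisfied
    return alloc


if __name__ == "__main__":
    # Hypothetical traffic classes and ETS percentages on a 100GbE port.
    weights = {"roce_storage": 50, "lan": 30, "management": 20}
    demand = {"roce_storage": 80, "lan": 10, "management": 5}
    print(ets_allocate(100, weights, demand))
```

Here the storage class receives 80 Gb/s, more than its 50% guarantee, because the LAN and management classes leave bandwidth unused; if every class were saturated, the link would split 50/30/20.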

For more information, please check out:

- Experience speed, agility for intense workloads

- HPE adds groundbreaking 200GbE platform to M-series Ethernet Switch Family

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
