vSphere 7.0 and HPE Nimble Storage

With the release of vSphere 7 and new developments on core storage, we plan to publish a series of blogs on:

- vSphere 7 storage integrations with HPE Nimble storage including NCM 7.0 with vLCM

- vSphere 7, SRM 8.3 and vVols DR (focus of this blog)

- CNS support for vVols (published)

NimOS 5.1.4.200 and 5.2.1, both releasing soon, will carry official support for the vSphere 7.0 vcPlugin from HPE Nimble Storage. Watch for the release announcements from HPE Nimble Storage.

vVols are not new to HPE Nimble Storage. From the technical beta in 2014 to the demos at VMworld 2016, Nimble Storage demonstrated DR capabilities with vVols VASA3.0 using PowerCLI. When the vVols replication DR feature was released with vSphere 6.5, both HPE 3PAR and HPE Nimble Storage had Day 0 support for this important feature required by many enterprise customers. The simplicity of the HPE Nimble Storage platform is something our customers appreciate. Here are the top 10 reasons to consider your move from VMFS or RDMs to vVols today.

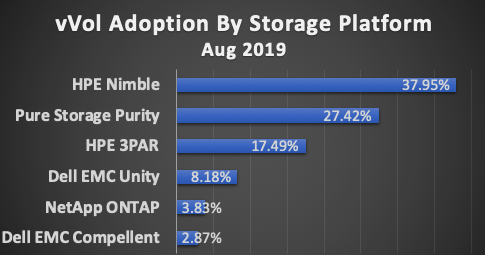

The adoption of vVols continues to grow in 2020. In the last 6 months, we have seen our enterprise customers continue to push the scale, with 10,000 vVols supported on a list of our platforms. VMware shared vVol adoption numbers by vendor in Sept 2019:

vSphere Setup Pre-requisites

Since we want to set up SRM and disaster recovery, your environment should have two sites. Each site has one vCenter, one SRM instance, and a few ESXi hosts. Before setting up SRM, your vSphere 7.0 environment must meet some prerequisites for vVols. Complete the steps in the order listed, on the vCenters and ESXi hosts at both sites. Screenshots are provided for reference at each step.

- Set up NTP on your vCenter VCSA. Navigate to your vCenter VAMI at https://<vcenter-IP-or-FQDN>:5480/ and log in as root. Under “Time”, enter a reachable time server. You can also set the time zone here, but that is optional.

Figure 2: Setup vCenter NTP

- Set up NTP on each of your ESXi servers. Go to Configure --> System --> Time configuration --> Network Time Protocol --> Edit. Add your NTP server. Check the box to start the NTP service. Change the NTP service startup policy to “Start and stop with host” and click OK. Once the change has been made, go back and check that the service is indeed running. If you have a cluster with a large number of hosts, it is a good idea to use host profiles for a consistent setup.

Figure 3: Setup ESXi NTP

- If your HPE Nimble Storage array uses self-signed certificates, make sure ESXi can accept this certificate into its key store. See this VMware KB for additional details. Go to Configure --> System --> Advanced System Settings and look for the key Config.HostAgent.ssl.keyStore.allowSelfSigned. If the value is “false”, select the entry, click “Edit”, and set it to “true”. Changing this setting does NOT require an ESXi reboot.

Figure 5: Config.HostAgent.ssl.keyStore.allowSelfSigned

Figure 6: Edit Config.HostAgent.ssl.keyStore.allowSelfSigned to true

- This step is optional but helps debugging. Enable 2 services on ESXi: SSH and ESXi Shell. You can either edit the startup policy such that these services are always running when the host boots up, or simply start them when needed for debugging. Go to Configure --> System --> Services --> SSH or ESXi Shell and “Edit Startup Policy” or simply hit the “Start” button.

Figure 7: Check and start ESXi services

Figure 8: Edit SSH startup policy

- For a Fibre Channel deployment, make sure your zones are configured for availability. We recommend failover redundancy: port 1 of the FC card on ESXi zoned with controller A, and port 2 of the FC card on ESXi zoned with controller B. For an iSCSI deployment, configure your ESXi networking to talk to the HPE Nimble Storage array. We recommend having 2 dedicated physical NICs on the ESXi host on 2 separate L3 data networks. Additionally, a dedicated physical NIC for management and one for vMotion is highly recommended. Setting MTU to 9000 (Jumbo Frames) is recommended if you own your entire network and can run jumbo pings end to end. The HPE Nimble Storage array should also have the same two L3 data networks, data1 and data2. The screenshots below show this recommended setup, and the results of the jumbo ping to another ESXi host for vMotion and to the array’s discovery IPs.

- vmk0: management vmknic on vSwitch0 with vmnic0 uplink

- vmk1: vMotion vmknic on vSwitch1 with vmnic1 uplink

- vmk2: data1 vmknic on vSwitch2 with vmnic2 uplink

- vmk3: data2 vmknic on vSwitch3 with vmnic3 uplink

Figure 9: ESXi vmknics

Figure 10: ESXi vSwitches

Figure 11: ESXi jumbo vmkping to another ESXi for vmotion

Figure 12: ESXi jumbo vmkping to array's data1 discovery IP

Figure 13: ESXi jumbo vmkping to array's data2 discovery IP

- For iSCSI deployments, enable the software iSCSI adapter in ESXi. Go to Configure --> Storage --> Storage Adapters --> + Add Software Adapter --> Add software iSCSI adapter

Figure 14: ESXi software iSCSI adapter

If steps 1 through 6 seem like a lot of work, consider talking to your HPE Nimble Storage representative about our dHCI product line.
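The jumbo ping from step 5 is easy to get wrong because the payload size has to leave room for headers. Here is a minimal Python sketch that works out the payload for an MTU of 9000 and prints the matching `vmkping` command lines to run in the ESXi shell. The helper names `jumbo_payload` and `vmkping_command` are made up for illustration, and the vmknic names and discovery IPs are the Site A values from this post, so swap in your own.

```python
import shlex

IP_HEADER_BYTES = 20    # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header


def jumbo_payload(mtu: int = 9000) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet."""
    return mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES


def vmkping_command(vmknic: str, target_ip: str, mtu: int = 9000) -> str:
    """Build a vmkping command line (hypothetical helper, for illustration).

    -I selects the outgoing vmknic, -s sets the payload size, and
    -d sets the don't-fragment bit so a hop with a smaller MTU fails the ping.
    """
    args = ["vmkping", "-I", vmknic, "-s", str(jumbo_payload(mtu)), "-d", target_ip]
    return " ".join(shlex.quote(a) for a in args)


# Assumed pairs: data vmknics from step 5 and the Site A discovery IPs.
checks = [
    ("vmk2", "1.111.1.153"),  # data1 discovery IP
    ("vmk3", "1.112.1.153"),  # data2 discovery IP
]
commands = [vmkping_command(nic, ip) for nic, ip in checks]
for command in commands:
    print(command)
```

If a ping with the don't-fragment bit set fails while a default-size ping succeeds, some hop between the host and the array is most likely not configured for jumbo frames.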

This is the environment I’ve set up:

- Site A

- vCenter: 10.21.123.210

- ESXi hosts: 10.21.170.159, 10.21.98.156

- Nimble array: sjc-array1099 with data discovery IPs 1.111.1.153 and 1.112.1.153

- SRM server: 10.21.26.24

- Site B

- vCenter: 10.21.28.179

- ESXi hosts: 10.21.28.185, 10.21.28.186

- Nimble array: sjc-array612 with data discovery IPs 1.111.2.154 and 1.112.2.154

- SRM server: 10.21.26.10
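Since every later step has to be repeated on both sites with the "other" array as the partner, it can help to keep this layout in a small data structure. The sketch below is only one way to do it in Python. The `Site` class and the `partner_of` and `vami_url` helpers are hypothetical names, and the values are the ones listed above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Site:
    name: str
    vcenter: str
    esxi_hosts: Tuple[str, ...]
    array: str
    discovery_ips: Tuple[str, ...]
    srm_server: str


SITES = {
    "A": Site(
        name="Site A",
        vcenter="10.21.123.210",
        esxi_hosts=("10.21.170.159", "10.21.98.156"),
        array="sjc-array1099",
        discovery_ips=("1.111.1.153", "1.112.1.153"),
        srm_server="10.21.26.24",
    ),
    "B": Site(
        name="Site B",
        vcenter="10.21.28.179",
        esxi_hosts=("10.21.28.185", "10.21.28.186"),
        array="sjc-array612",
        discovery_ips=("1.111.2.154", "1.112.2.154"),
        srm_server="10.21.26.10",
    ),
}


def partner_of(key: str) -> Site:
    """With exactly two sites, the replication partner is simply the other site."""
    (other,) = [k for k in SITES if k != key]
    return SITES[other]


def vami_url(site: Site) -> str:
    """URL of the vCenter appliance management interface (port 5480)."""
    return f"https://{site.vcenter}:5480/"


for key, site in SITES.items():
    print(f"{site.name}: array {site.array} replicates to {partner_of(key).array}")
```

Looking up the partner this way makes it harder to pick the wrong array when the same step is repeated on site B.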

Setting up storage arrays for vVols and replication

Now it is time to set up the HPE Nimble Storage arrays for each site. The array at site A will replicate to the array at site B, and vice-versa. To make administration easier, we will install and use the HPE Nimble Storage vCenter Plugin as well, on each site. Remember to perform these next steps on both sites.

- Register the vCenter with the Nimble array. Navigate to https://<Nimble-group-IP-or-FQDN> and head over to Administration --> VMware Integration --> Register a vCenter. Add an identifying name and the IP/FQDN of your site A vCenter host to the site A Nimble array. Make sure to do this on site B as well. Check both boxes for “Web Client” and “VASA Provider (VVols)”.

Figure 15: Register vCenter on Nimble array

This will push a new binary of the Nimble vCenter Plugin into the vCenter. You will see a brief message in a small ribbon in your browser about a plugin deployment, and a request to refresh your browser by simply clicking the button.

Figure 16: Refresh the browser for plugin install

Navigate to vCenter --> Menu --> Administration --> Solutions --> Client Plugins. You should be able to see the Nimble Plugin in this list, and the version will match the Nimble OS software version.

Figure 17: Nimble vcPlugin details

The action from the array GUI also registers the VASA Provider of the Nimble array with this vCenter. Navigate to vCenter --> Menu --> Hosts and Clusters --> Select the vCenter in the left panel --> Configure on the right panel --> Storage Providers. You should be able to see the Nimble VASA Provider in this list. Confirm a few details here:

- Provider status must be “Online”

- Provider version must show the Nimble OS software version

- VASA API version must show “3.0”

Figure 18: VP details

- Set up the second array as a replication partner for the first, and vice-versa. Navigate to https://<Nimble-group-IP-or-FQDN> and head over to Manage --> Data Protection --> Replication Partners --> Create replication partner --> On-premises Replication Partner.

Figure 19: Create an on-prem replication partner

On the next page, input details of your partner array. Configure the same secret on both arrays. The most important setting here from the perspective of vVols and SRM DR is highlighted: for “inbound location”, drop down and select the pool name “default” and check the box for “Use the same pool and folder as the source location”.

Figure 20: Replication partner configuration

Check the status of your replication partner by going to Manage --> Data Protection --> Replication Partners --> Select the partner array and click on “Test”. Status should be “Alive”.

- Now let’s create a vVol datastore on both site A and site B. This is the datastore that will host VMs from site A, and when site A fails over to site B, the VMs will be in the datastore on site B. So, it is recommended to keep the name of this datastore the same on both sites. Follow step #2 from this blog to use the HPE Nimble Storage vCenter Plugin to create a vVol datastore. Confirm that the vVol datastore is mounted on all ESXi hosts by navigating to “Storage” and selecting the vVol datastore. “Hosts” will show all hosts that have successfully mounted this datastore.

Figure 22: vVol datastore mounted on all hosts

The “Summary” page will show details about this datastore, including the array that it is backed by and its space information from the Nimble array.

Figure 23: vVol datastore details

Confirm that a “Protocol Endpoint” device shows up as “Accessible” on each ESXi host, by navigating to Configure --> Storage --> Protocol Endpoints. This shows the device serial number on the array. The Storage array identification matches “Provider ID” from Figure 18: VP details.

Figure 24: Protocol Endpoint details

- Create VM Storage Profiles on both site A and site B. VM Storage Profiles help define the storage requirements of a VM. You can add capabilities exposed by your storage vendors that are required by the applications or the VMs. For this SRM demo, let’s say we want the data from VMs to be replicated once every hour. Go to vCenter --> Menu --> Policies and Profiles --> VM Storage Policies --> Create VM Storage Policy --> Name “srm-vvol-policy”. For “Policy Structure” select “Datastore specific rules” --> Enable rules for “Nimblestorage” storage.

Figure 25: Policy structure for VM Storage Policy

Under Placement --> ADD RULE --> Protection schedule (hourly). Clicking on the little “i” information icons will tell you a bit more about each element.

Figure 26: NimbleStorage rules

Here we’re choosing to back up every hour, retain 48 local backup copies and 24 on the downstream, replicate each snapshot to the downstream, and not delete replicas from the partner. Pay particular attention to the drop-down for replication partner, and select the array for site B.

Figure 27: Hourly protection schedule

Next, switch to the “Replication” tab at the top, next to “Placement”. Select “Custom”, set the provider to NimbleStorage.Replication, and set “VMware managed replication” to “Yes”. This is required for VASA3 replication to be set up and to work well.

Figure 28: Custom replication

In step 4 of VM Storage Policy creation, “Storage compatibility” will show all vVol datastores that meet the criteria defined earlier. The vVol datastore we created must show up here.

Figure 29: Storage compatibility

When creating the VM Storage Policy on site B, make sure to select the array from site A in the drop down box for replication partner.

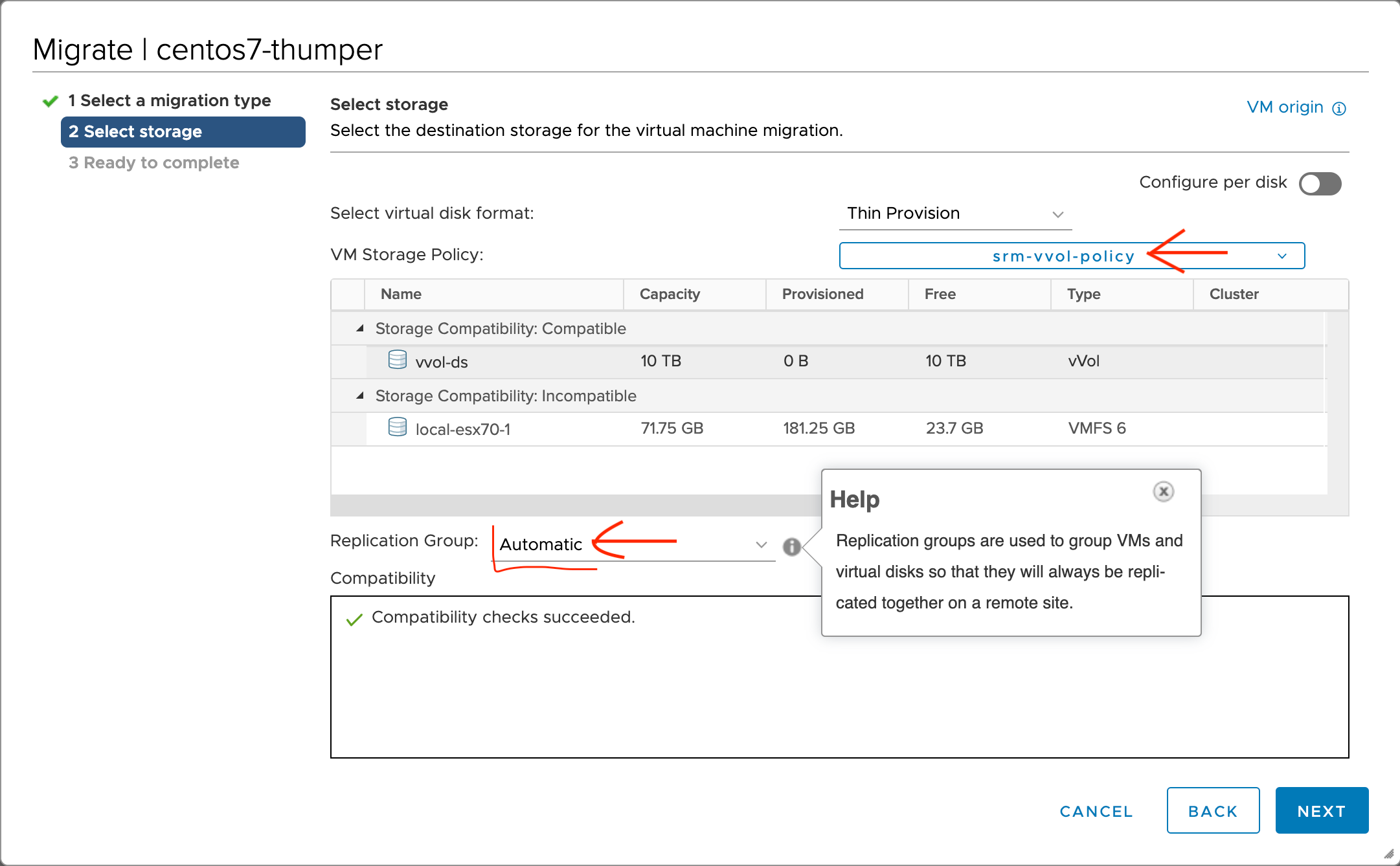

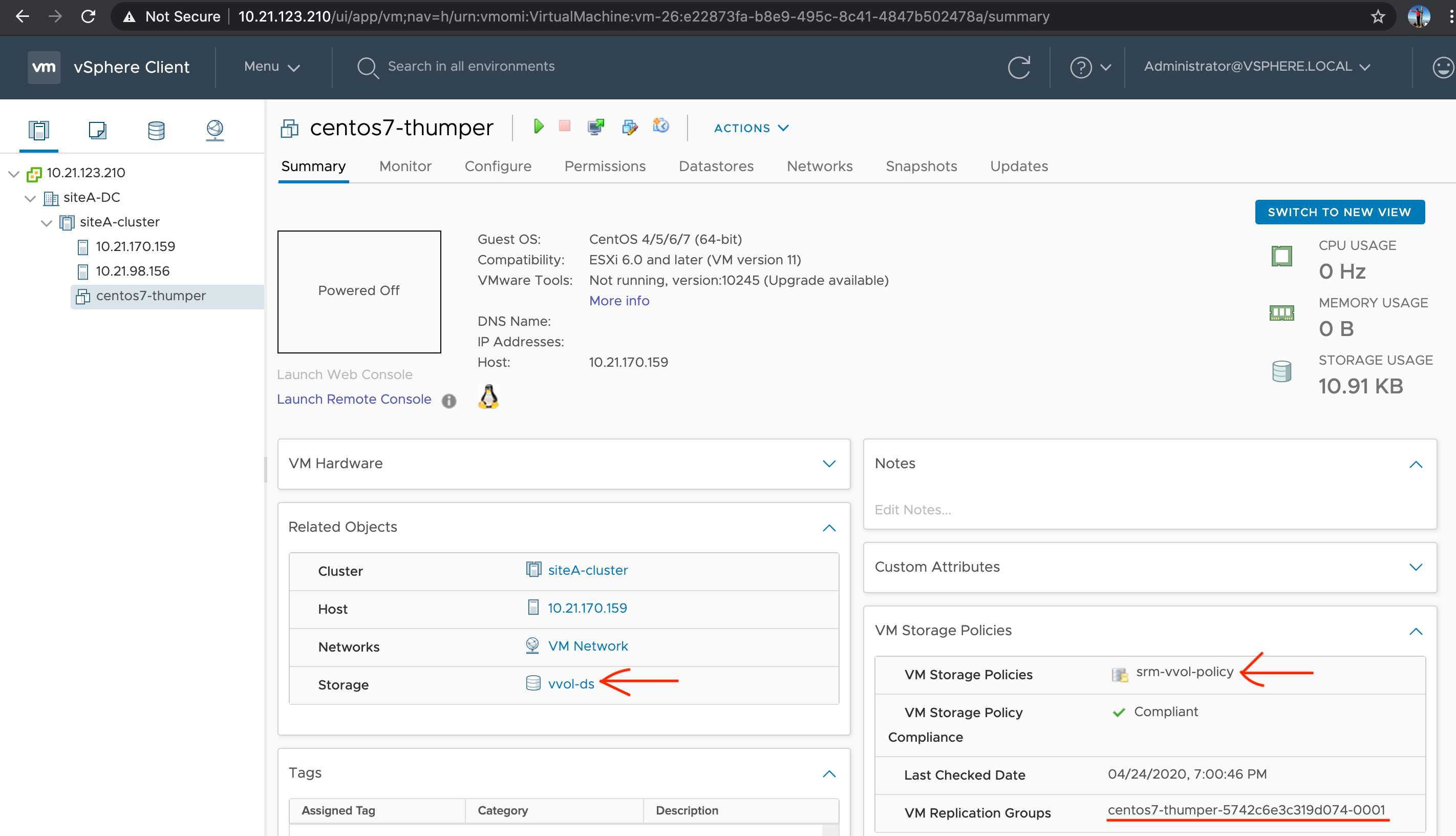

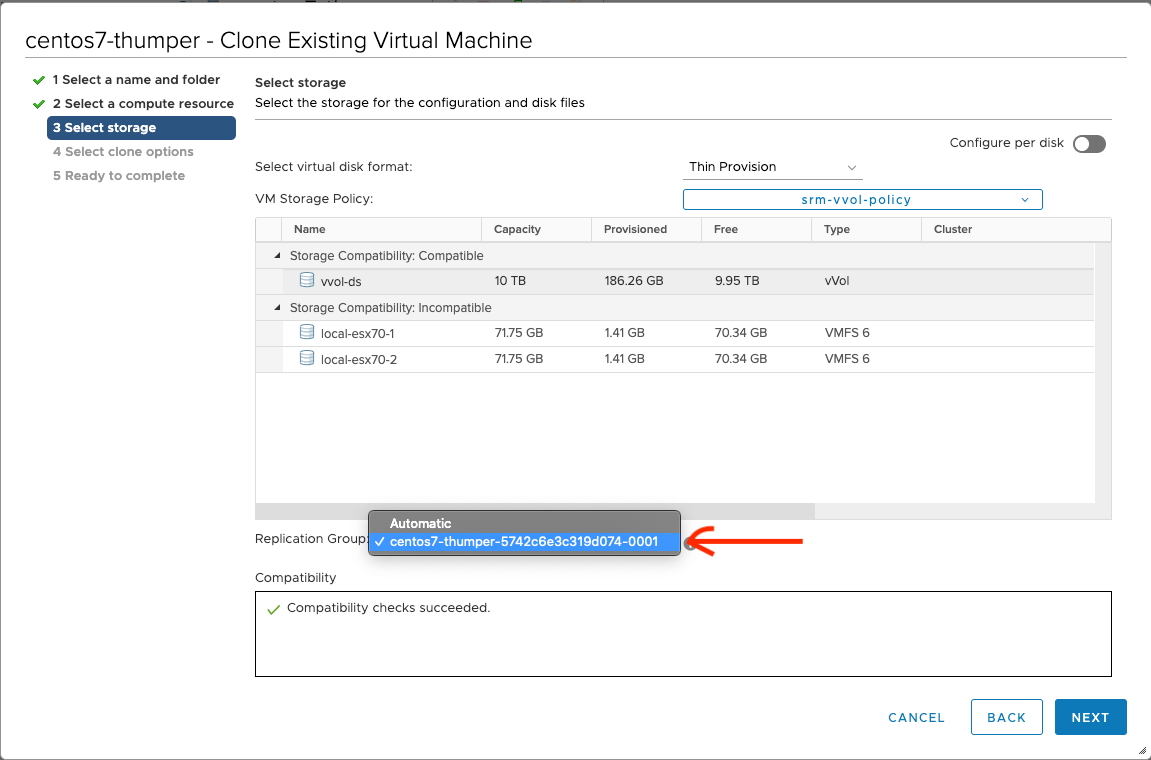
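To get a feel for what the hourly schedule in this policy buys you, the retention counts can be turned into time windows. This is a back-of-the-envelope sketch in Python. `retention_window` is a made-up helper, and the numbers are the ones chosen in the policy above (hourly snapshots, 48 local copies, 24 on the downstream).

```python
from datetime import timedelta


def retention_window(interval: timedelta, copies: int) -> timedelta:
    """Approximate time span covered by `copies` snapshots taken every `interval`."""
    return interval * copies


hourly = timedelta(hours=1)
local_window = retention_window(hourly, 48)       # copies kept on the source array
downstream_window = retention_window(hourly, 24)  # copies kept on the replication partner

print(local_window)       # 2 days, 0:00:00
print(downstream_window)  # 1 day, 0:00:00
```

So the source array can roll back about two days and the partner about one day. With an hourly schedule, the newest replica on the partner can trail production by roughly one interval plus however long the transfer takes, which is worth keeping in mind when agreeing on an RPO.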

Now, create a VM, and apply this policy to the VM. I'm choosing to migrate an existing VM to the vVol datastore. You could create, clone, clone from template, or migrate with the result of creating a new VM on this vVol datastore. While applying this policy for VMs that need DR protection, select a replication group (RG). Choose to create an RG of type “Automatic”.

When the VM create/migrate is complete, the summary page of the VM should show it is “Compliant” with the applied profile, and an RG is created automatically.

Note: selecting an “Automatic” RG at VM provisioning time creates an RG that maps to a Nimble volume collection. If we now create a second VM and want it protected together with this VM, i.e. on exactly the same schedule, so that they behave like a cluster of app VMs, the replication group drop-down will show the existing RG, which can be selected while provisioning the second and subsequent VMs.

At this point, if you were to use VASA3 DR API using PowerCLI, pyVmomi or any other SDK, you would have a fully functioning DR solution. This has been the working solution for the last 4 years or so.

Next, we are ready to set up SRM 8.3, which includes additional UI to facilitate vVol VM DR. Watch this space for the next blog.
