- Re: iSCSI Latency is Not an Issue for Nimble Stora...

12-16-2013 07:15 AM

I am a little confused by some of the latency numbers and need some clarification. From what I have read online, iSCSI has higher latency than FC and FCoE because of the TCP/IP overhead.

In the reference architecture for VDI (http://www.cisco.com/c/dam/en/us/solutions/collateral/data-center-virtualization/dc-partner-vmware/guide_c07-719522.pdf), the average read and write latency numbers are below 12 ms.

I would appreciate it if anybody could shed light on this.

Sincerely

Viral Patel

12-16-2013 09:25 AM

Re: iSCSI Latency

Heya Viral.

I can't seem to access that VDI document there. Is there another location where it can be accessed?

What kinds of latency numbers are you seeing in your environment (in VMware and on the array)?

Also, there is indeed a little overhead with iSCSI due to the network: TCP/IP is a transport protocol that has handshakes, retransmissions, etc. That's on top of the iSCSI "Ready to Transfer" (R2T) operations that occur (RFC 3720), where the first burst is 64 KB, followed by the rest of the payload.
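To make the R2T point a bit more concrete, here is a rough sketch of how many R2T exchanges a single write might need. It assumes the RFC 3720 default values for `FirstBurstLength` and `MaxBurstLength`, and it assumes the session actually lets the initiator send the whole first burst unsolicited; real sessions negotiate these at login, so treat the function name and the numbers as illustrative only.

```python
import math

# Default values from RFC 3720; a real session negotiates these at login.
FIRST_BURST_LENGTH = 65536   # bytes the initiator may send before any R2T
MAX_BURST_LENGTH = 262144    # bytes that a single R2T can request

def r2t_count(write_bytes,
              first_burst=FIRST_BURST_LENGTH,
              max_burst=MAX_BURST_LENGTH):
    """Estimate how many R2T PDUs one SCSI write needs.

    Assumption: the first burst goes out unsolicited and every R2T
    asks for a full max_burst worth of data.
    """
    remaining = max(0, write_bytes - first_burst)
    return math.ceil(remaining / max_burst)

for size in (4096, 65536, 1024 * 1024):
    print(size, "bytes ->", r2t_count(size), "R2T(s)")
```

Under these assumptions, small writes (up to 64 KB) need no R2T round trip at all, which is one reason the extra protocol latency tends to stay small for typical block sizes.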

On a relatively isolated/empty network (like many client iSCSI networks), it's not much at all: perhaps a total of about 1-3 ms, depending on the environment and network hardware. A Host ---> One Switch ---> Array topology will usually operate at sub-millisecond speeds, depending on payload size and the like. It's not as fast as 8 Gbit FC in terms of protocol overhead, but only by a few microseconds here and there.

Not sure what you're alluding to re 12 ms: most clean networks (again, assuming Host --> One/Two Switches --> Array) won't have latencies that high.

If you're experiencing performance issues, please feel free to open a Nimble support case as well, and we'll look into it for you. Please be specific about what you're seeing, what data you're looking at, etc.

Let us know if you need any further help.

Andy K

12-18-2013 02:43 PM

Re: iSCSI Latency

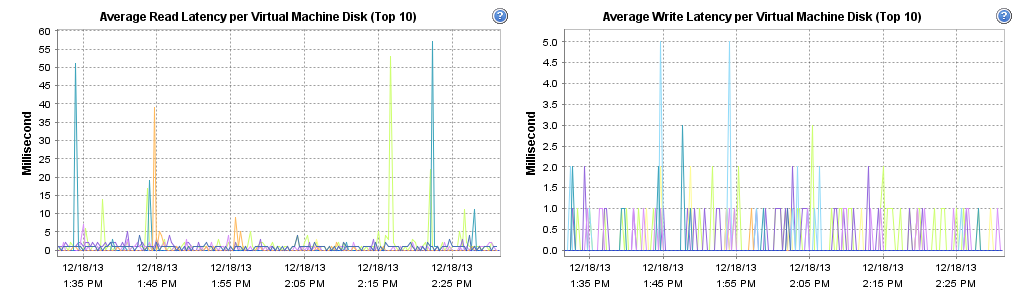

We see very low latencies, with writes under 1 ms a lot of the time. Here are some pictures. This is with Brocade VDX switches and a Nimble 240G. Let me know if you want any other information from our environment; I'd be more than happy to share.

12-19-2013 06:55 AM

Re: iSCSI Latency

Hi Andy & Rocky

Thanks for the response.

Here again is the link I provided: http://www.cisco.com/c/dam/en/us/solutions/collateral/data-center-virtualization/dc-partner-vmware/guide_c07-719522.pdf

I suspected this because of a report I read on demartek.com (see link below), which compares the results (read latency) of FC, FCoE and iSCSI.

In this report, average latency is higher for iSCSI than for FCoE (page 7 of the above report). I was trying to make the case for iSCSI for most production workloads.

This latency is from the SQLIO perspective. The information that Rocky provided convinces me that, with 10G and jumbo frames, iSCSI can cover most workloads.

Sincerely

Viral

12-20-2013 08:07 AM

Solution

Hi Viral,

It's true that in "traditional" iSCSI storage implementations latency can be quite high. But this is due to how data is read and written randomly on the underlying storage itself.

Nimble Storage relies on CASL, which sequentialises all incoming writes. This enables us to deliver FC-like latency for reads and writes without the need for FC or FCoE.

If you have an hour to spare, I highly recommend watching the CASL Deep Dive video on YouTube by Devin Hamilton. It is by far our most popular and informative video uncovering the secret sauce.

twitter: @nick_dyer_

12-20-2013 08:23 AM

Re: iSCSI Latency

Heya Viral: Just a shout out to what Nick said.

Also, please note that Demartek's testing was done on NetApp arrays for iSCSI and FC (I used to work at NetApp, so I am very familiar with the hardware and performance parameters that Demartek is testing with). Interestingly enough, the array they were using (a 3240 with 24 10k RPM disk drives) does not appear to be using NetApp's own answer to hybrid flash architecture (called "FlashPool" at NetApp: mixed aggregates of 10k or 15k RPM disk raidgroups with SSD raidgroups), nor expandable flash memory ("FlashCache"). Either would put it more in line with a Nimble array in terms of raw storage architecture/hardware (more of a hybrid array, like what we do out of the box)... but of course with a street price at the very least four times higher than our costliest Nimble array, which, because of our optimized OS and CASL, we would totally outperform anyway. :-)

I would love to see Demartek run the numbers again with a standard Nimble array at 1/4 the street price of their setup for that document. Again, we would blow those numbers away to the point that their test comparing iSCSI to FC would be meaningless. That's the benefit of iSCSI with an array that was designed, from its infancy, to be not just "some disks with SSDs serving as Flash" but a genuinely hybrid array that is aware of, and utilizes, SSDs and normal HDs together to the best of their performance. We can get the kinds of op and latency numbers that would make *anyone* rethink going with FC (unless they already had all the fabric hardware in place).

If you have a Sales Engineer near you or who works with you, please contact them; they can probably bring by a proof of concept that demonstrates exactly what you're looking for.

Thanks!

-Andy

01-20-2014 05:38 AM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Rocky,

Did you ever run any tests using 1 Gbit links?

Do you have any idea how much the results for 1 Gbit links might differ from yours?

Cheers

02-06-2014 11:42 AM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Rocky,

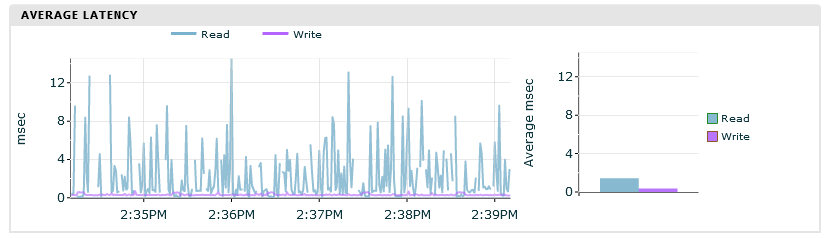

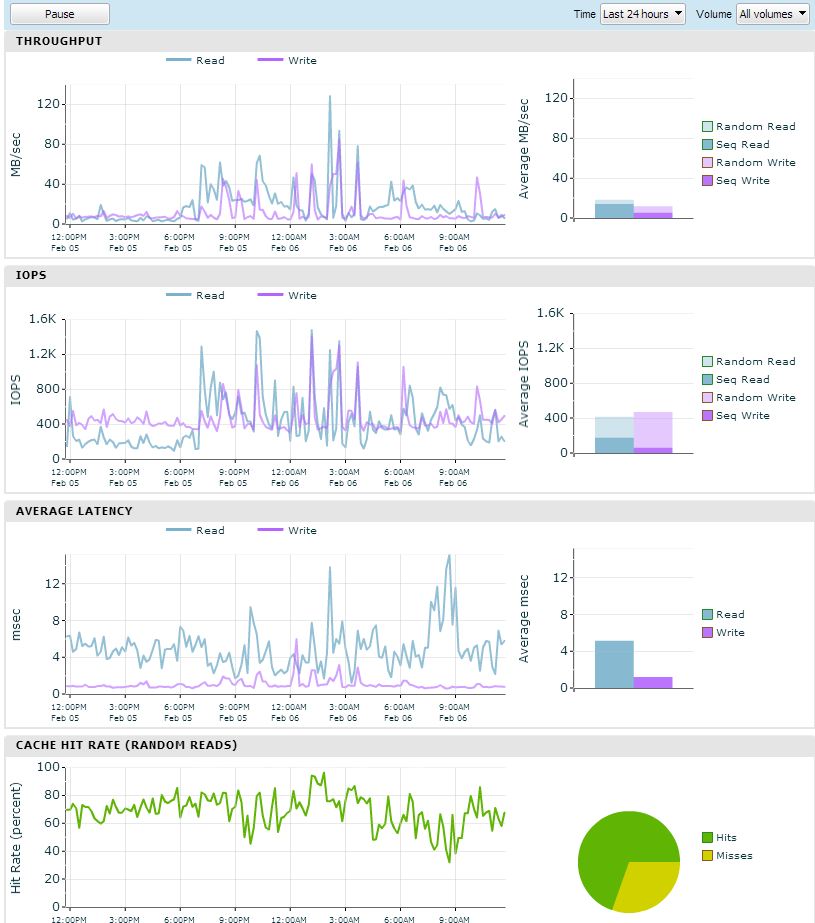

We have Brocade VDX 10 Gig switches and a Nimble 260G, and this is what I'm seeing... it's not good. It might be a configuration issue between the VM hosts and the Nimble, though.

02-06-2014 01:58 PM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Blimey, that's like my graphical output. Perhaps I need to talk to Nimble about what I'm doing wrong?

02-06-2014 02:04 PM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Our latency was lower: less than 0.5 ms for reads, but just under 1 ms when copying 2 TB of files.

03-05-2014 02:55 PM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Our latency is troublesome across the board. We have Brocade VDX 10 GbE switches connected to HP DL360G8 servers and a Nimble 460G array... and we are still getting 7000 ms latency from VMware virtual machines running SQL and the like. No idea what the heck is going on with it. I'm in the process of finally moving VMs to 1 vCPU instead of what was happening before (folks see it slow, so they add resources thinking it will speed things up, but it actually slows things down more with CPU wait, etc.), so I'm interested to see what happens once I'm done with the 176 machines.

03-06-2014 06:18 AM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Andrew, my gut tells me that your issue is likely in your switch stack somewhere. Are you running standard MTU or jumbo frames? I often see this when jumbo frames are meant to be deployed but a single device somewhere along the network path is not configured correctly. You need to make sure that every interface is set to the same MTU (host drivers, switches, array). If that's not it, what about flow control? Is flow control enabled (hopefully bi-directionally) on all of your switching elements? Have you done any analysis to see if you are getting excessive retransmits on any of your switch ports? How about CPU levels on the switching elements? A few other things: try turning off STP and Unicast Storm Control features on your switches if you can. The last thing (or maybe the first thing) to check is the quality of the cables. From time to time, I see problems with some manufacturers' 10Gb cables (there are far fewer issues with fiber SFP+ adapters as provided with the Nimble 10Gb ports).
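For the MTU check, a small sketch like the following can help when you collect the configured values by hand. The interface names and MTU values are made up for illustration, and the comparison assumes every device reports MTU the same way (some switches include frame headers in the figure, so a different number there is not automatically wrong). The payload helper relies only on the standard 20-byte IPv4 header (no options) and 8-byte ICMP header, which is where the usual "don't fragment" ping sizes of 1472 and 8972 bytes come from.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header

def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES

def mtu_mismatches(path_mtus, expected):
    """Return the names of interfaces whose MTU differs from the expected value."""
    return sorted(name for name, mtu in path_mtus.items() if mtu != expected)

# Hypothetical values gathered from host, switch and array configuration.
path = {"esx-vmk1": 9000, "switch-port-12": 1500, "array-tg1": 9000}

print("mismatched:", mtu_mismatches(path, expected=9000))
print("ping payload to test with:", max_ping_payload(9000))
```

Sending a ping of that payload size with the "don't fragment" option set, end to end, is a common way to confirm that no hop silently falls back to a smaller MTU.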

Rocky, in general, the "network latency" of a 10Gb fabric should be about 0.1 ms, and that of a 1Gb network about 1 ms. If you compare the stats in the array UI with the same stats in your host monitoring tools, you should see those deltas between the two reports if everything is working correctly. In my experience, if you see more than 2x these numbers (so 0.2 ms on 10Gb and 2 ms on 1Gb), something is not configured or performing correctly.
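That rule of thumb can be written down as a quick check. This is only a sketch of the comparison described above: the function names are invented here, the expected values are the 0.1 ms and 1 ms figures from this post, and the latencies are whatever you read off the host monitoring tool and the array UI for the same time window.

```python
# Expected one-way "network latency" per link speed, as quoted in the post (ms).
EXPECTED_NETWORK_MS = {"10Gb": 0.1, "1Gb": 1.0}

def network_latency_ms(host_ms, array_ms):
    """Latency added between array and host: host-side minus array-side."""
    return host_ms - array_ms

def looks_misconfigured(host_ms, array_ms, link):
    """True when the delta exceeds 2x the expected value for the link speed."""
    return network_latency_ms(host_ms, array_ms) > 2 * EXPECTED_NETWORK_MS[link]

# Hypothetical readings: the host sees 1.0 ms while the array reports 0.9 ms.
print(looks_misconfigured(1.0, 0.9, "10Gb"))
# Hypothetical readings: the host sees 7000 ms while the array reports 1 ms.
print(looks_misconfigured(7000.0, 1.0, "10Gb"))
```

A large delta points at the path between host and array (or the host itself) rather than at the array.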

03-13-2014 07:30 AM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Andrew, iSCSI latency is about 70 microseconds (0.07 ms) higher than FC or FCoE. Something is hitting you in the network, as Mitch was trying to point out. Could you share your network diagram with us?
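To put the 70 microsecond figure next to the 7000 ms reported earlier in the thread, here is a tiny sketch. The 70 µs value is the one quoted in this post, not a measurement, and the helper names are made up for the example.

```python
ISCSI_EXTRA_US = 70  # protocol overhead figure quoted in this post

def us_to_ms(microseconds):
    """Convert microseconds to milliseconds."""
    return microseconds / 1000

def protocol_share(observed_ms, extra_us=ISCSI_EXTRA_US):
    """Fraction of an observed latency that the protocol overhead could explain."""
    return us_to_ms(extra_us) / observed_ms

print(us_to_ms(ISCSI_EXTRA_US), "ms")
print(f"{protocol_share(7000):.6%} of a 7000 ms latency")
```

The share is a tiny fraction of a percent, so the protocol choice alone cannot account for latencies in the seconds range.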

One more question: is the connectivity 10GbE all over, or is it 1GbE from the VMware server into the Brocade and then 10GbE to the Nimble? Are you using the ISL port on the switch to connect to the Nimble?

Please help us with these questions so that we have a better understanding of your environment and can truly help you out.

03-13-2014 11:42 PM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Viral,

I don't think this is networking related. Also, write performance is fine, so we can rule out CPU. Read latency is somewhat high, which can be explained by the low cache hit rate for random IO (around 60/80%). It would be interesting to see the InfoSight statistics for this array. My guess, based on these screenshots, is that your cache utilisation is (too?) high.

My advice is to take this up with support; they can do a thorough scan of your environment.

Cheers,

Arne

03-18-2014 04:41 PM

Re: iSCSI Latency is Not an Issue for Nimble Storage

Your latency peaks just as your cache hit ratio takes a dive.

At 9am (far right) you're at about 75% cache hits, then it dives to about 45%.

Off to the rotational drives at that point = explanation for increased latency?

Or is it not so much a 1:1 ratio?
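One way to reason about the "1:1" question is a simple weighted average of cache-hit and cache-miss service times. This is only a back-of-the-envelope model, not how the array actually computes anything, and the 0.2 ms (flash) and 8 ms (rotational) figures are assumptions picked for illustration.

```python
def average_read_latency_ms(hit_ratio, ssd_ms=0.2, hdd_ms=8.0):
    """Weighted average read latency for a given cache hit ratio.

    ssd_ms and hdd_ms are assumed service times for a cache hit and a
    cache miss; substitute values that match your own hardware.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * ssd_ms + (1.0 - hit_ratio) * hdd_ms

before = average_read_latency_ms(0.75)
after = average_read_latency_ms(0.45)
print(f"{before:.2f} ms -> {after:.2f} ms ({after / before:.1f}x)")
```

With these assumed numbers, the average is dominated by the misses: dropping from 75% to 45% hits more than doubles the miss rate (25% to 55%), so the average read latency roughly doubles, from about 2.15 ms to about 4.49 ms. Latency tracks the miss ratio rather than the hit ratio, which is why it is not a 1:1 relationship with the hit-rate graph.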

Hey Nimble folks: we need more command-line tools. I want something similar to Sun's ARC or L2ARC cache tool, which shows a breakdown of cache hits/misses, and something like iostat and/or gstat from FreeBSD, which would show my rotational disks getting used but my SSDs not getting used.

Pretty graphs are nice, but give me black & green PuTTY & root any day of the week.

04-21-2014 02:36 PM

Re: iSCSI Latency

I wish we had jumbo frames running on the network switch stack, but unfortunately selling that idea and redoing our entire switch stack has been a rough go, so we're sticking with a 1500 MTU. I'll have to check on the flow control.