- Delayed ACK and VMWare Hosts Connecting to Nimble ...

04-21-2014 02:16 PM


I was wondering whether Delayed ACK should be disabled on VMware hosts connecting to Nimble arrays over 10GbE. I've found some articles on this issue, and they say to disable it to get the best throughput and latency out of 10GbE.

Performance = art not science (with DelayedAck 10GbE example) - Virtual Geek

ESX 5.1 iSCSI Bad Read Latency | VMware Communities

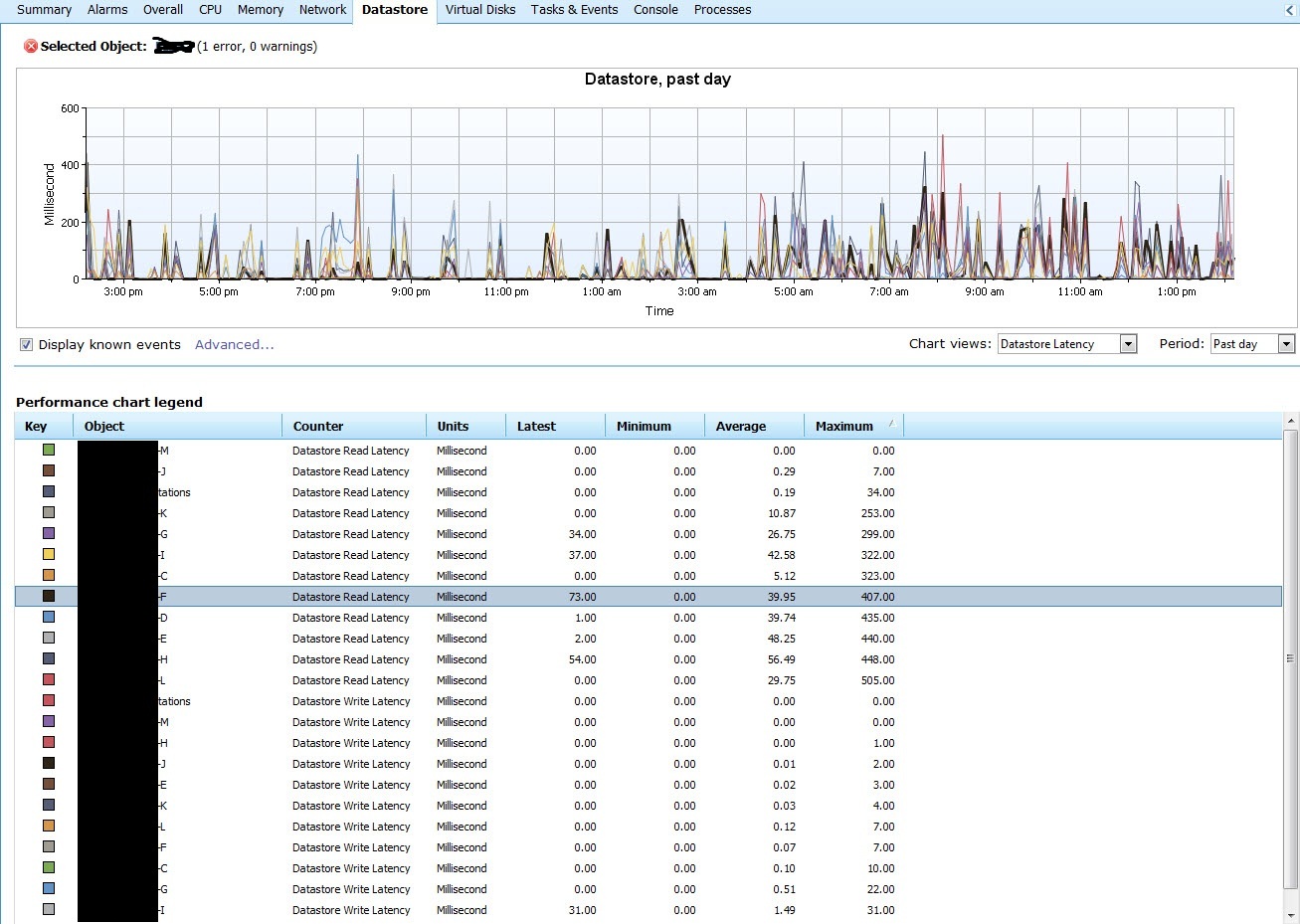

From my Nimble to the Host I see very little latency (0-5ms) but the datastores on the Hosts are showing 600-1700ms read latencies and 50ms and under for write latency.

Is anyone out there running some form of 10GbE between their Nimble array and VMware hosts and has experienced a similar situation?

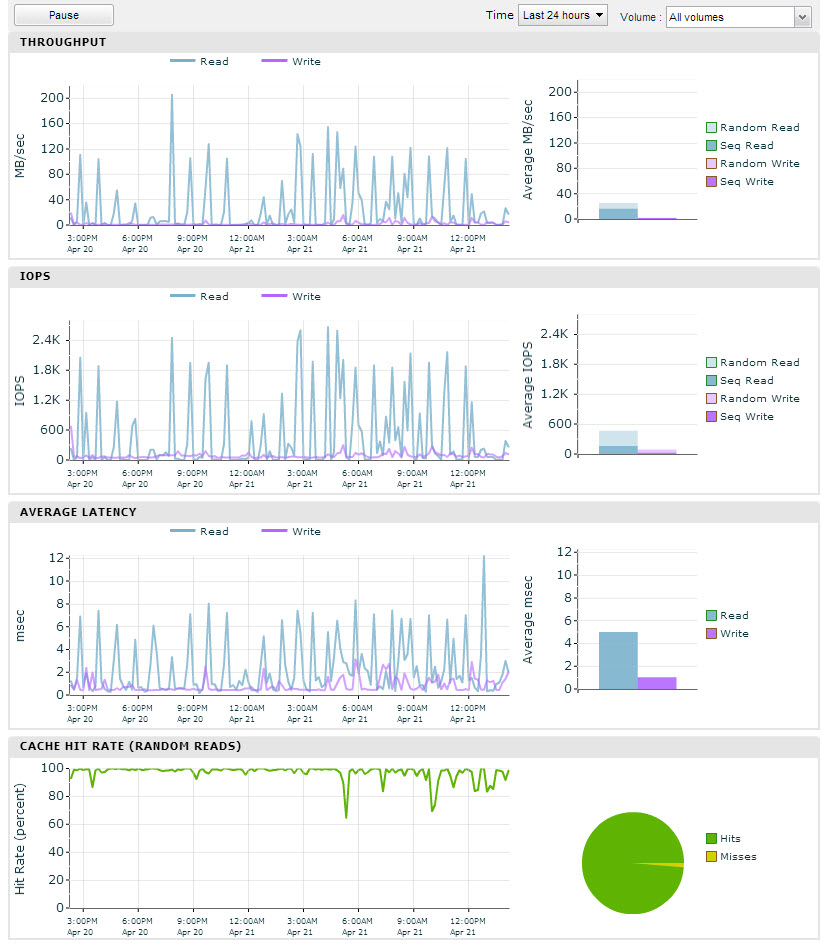

My situation is that we have one important database server, and it's the only server running on this 460 Nimble array (Fig. 1). You can see the difference between the Nimble latency view and the Veeam ONE latency view of the virtual machine (Fig. 2).

Fig 1. Nimble view of datastores

Fig 2. Veeam ONE view of latency

-Andrew


04-23-2014 08:49 AM


Andrew,

Recommendations to turn off TCP Delayed ACK on a VMware hypervisor are usually associated with this VMware KB: "ESX/ESXi hosts might experience read or write performance issues with certain storage arrays".

In that VMware KB, let me point out one statement in particular:

"The affected iSCSI arrays in question take a slightly different approach to handling congestion. Instead of implementing either the slow start algorithm or congestion avoidance algorithm, or both, these arrays take the very conservative approach of retransmitting only one lost data segment at a time and waiting for the host's ACK before retransmitting the next one. This process continues until all lost data segments have been recovered."
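To make the quoted behaviour concrete, here is a deliberately simplified sketch of why one-at-a-time retransmission interacts badly with Delayed ACK. It assumes that every retransmitted segment waits for one network round trip plus the host's full ACK delay before the next segment is sent. The function name, the 100 ms timer value, and the segment count are hypothetical illustrations, not figures from the VMware KB or from any particular TCP stack.

```python
def recovery_time_ms(lost_segments: int, rtt_ms: float, ack_delay_ms: float) -> float:
    """Toy model: the array resends one lost segment, then waits for the
    host's ACK before resending the next one (as described in the quote)."""
    # Each lost segment costs one round trip plus however long the host
    # holds back its ACK.
    return lost_segments * (rtt_ms + ack_delay_ms)


# Illustrative inputs only (assumptions, not measured values).
LOST_SEGMENTS = 10     # hypothetical burst of lost segments
RTT_MS = 0.1           # small 10GbE round trip
ACK_DELAY_MS = 100.0   # hypothetical delayed-ACK timer

delayed = recovery_time_ms(LOST_SEGMENTS, RTT_MS, ACK_DELAY_MS)
immediate = recovery_time_ms(LOST_SEGMENTS, RTT_MS, 0.0)

print(f"recovery with delayed ACK:   {delayed:.1f} ms")
print(f"recovery with immediate ACK: {immediate:.1f} ms")
```

In this model the ACK delay dominates the recovery time, which is the effect the KB describes for the affected arrays. An array that implements normal slow start and congestion avoidance does not serialize its retransmissions this way, so the model does not apply to it.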

In addition to the above statement, TCP Delayed ACK "can" have an impact in some other, very specific, scenarios:

1) Certain very specific packet loss scenarios

2) Some situations where an MTU mismatch occurs, specifically in equipment between the host and the array.

Discussions on exactly why these scenarios can allow TCP Delayed ACK to cause a performance impact can be quite lengthy.

Suffice it to say, however, that:

1) Nimble arrays do not fit the description, given by VMware, of arrays where Delayed ACK should be disabled. We have a fully functional TCP Congestion Control mechanism, including slow start and congestion avoidance.

2) Nimble Storage does not suggest that you disable TCP Delayed ACK, but it is your prerogative to do so.

3) If disabling TCP Delayed ACK on the initiator (as per the VMware KB) does have a positive impact on performance against a Nimble array, then there is something unusual occurring in that environment. Unless the problem is a bug in the initiator's Delayed ACK code, disabling TCP Delayed ACK is probably not the ideal way to address the unusual circumstance.

******

Now... to your follow-up about the difference in latency numbers between Veeam and the array... this may not indicate anything unusual.

Veeam, by nature, will request large sequential reads on the target dataset.

- From the Windows OS perspective, this would translate to reading something like 1MB or 2MB (some sources would indicate as much as 8MB) at a time from the Windows "Local Disk".

- iSCSI, however, will likely have negotiated a much smaller "burst" size, usually between 64K and 256K.

- For a 2MB SCSI request, the Windows iSCSI initiator may transmit 32 iSCSI requests of up to 64K, or 8 iSCSI requests of up to 256K

- Assume that each 256K iSCSI request takes ~6ms within the array (as per your graph, assuming 256k iSCSI operations)

- Assume relatively small 10Gbps network delays (these would usually be ~0.1ms or less)

- Latency for the entire 2MB request would be (~6ms per iSCSI operation * 8 operations) = ~48ms, or roughly 50ms

(Note: it is also possible that the Windows SCSI request would be for 8MB, but iSCSI would still have to request between 64K and 256K per "burst"... In terms of how latency is measured, the net result is the same and therefore not that interesting.)

- If Veeam measures the latency on "disk operations" as opposed to "iSCSI operations", then Veeam would report about 50ms latency, but the array would report an average of 6ms.

This same concept is confirmed to be true of VMware latencies. Borrowing a diagram from a Nimble KB ("VMWare reports I/O latency increased", KB-000180):

If VMKernel received an 8MB SCSI request, and used 64K iSCSI requests (not uncommon), the VMKernel latency on the single 8MB request might be as high as 180ms, even if each of the 125 individual 64K iSCSI requests was only 1.25ms.
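The arithmetic in the two examples above can be sketched as follows. This is a minimal illustration, assuming the iSCSI operations that make up one large SCSI request complete one after another (no overlap) and that sizes are in binary units. The function name and parameters are hypothetical and are not taken from any Nimble, VMware, or Veeam tool.

```python
import math

KIB = 1024
MIB = 1024 * KIB


def host_latency_ms(request_bytes: int, burst_bytes: int,
                    per_op_ms: float, network_ms: float = 0.0):
    """Return (number of iSCSI operations, latency seen for the whole request).

    Assumes the operations are issued serially, so the host-side latency of
    the large request is the sum of the per-operation latencies.
    """
    ops = math.ceil(request_bytes / burst_bytes)
    return ops, ops * (per_op_ms + network_ms)


# 2MB read split into 256K operations at ~6ms each (the Veeam example).
ops, total = host_latency_ms(2 * MIB, 256 * KIB, per_op_ms=6.0)
print(f"{ops} operations, ~{total:.0f} ms at the host vs 6 ms per op on the array")

# 8MB request split into 64K operations at 1.25ms each (the VMkernel example).
ops, total = host_latency_ms(8 * MIB, 64 * KIB, per_op_ms=1.25)
print(f"{ops} operations, ~{total:.0f} ms at the host vs 1.25 ms per op on the array")
```

In binary units the 8MB case works out to 128 operations rather than 125 (125 corresponds to decimal units), and the serial sum is about 160 ms before any network or queuing overhead is added. Either way the point stands: the host-side number describes the whole request, while the array-side number describes each individual iSCSI operation.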


07-28-2014 11:42 AM


Re: Delayed ACK and VMWare Hosts Connecting to Nimble on 10GbE

Thanks Paul