iSCSI Attached vs RDM vs VMDK?

06-18-2014 09:30 AM

Regarding high-I/O environments such as database servers, I am looking for a definitive recommendation. I ask because I keep coming across studies that disagree: some say iSCSI-attached drives using Nimble Connection Manager perform better than, or just as well as, RDMs configured with the VMware Paravirtual SCSI controller. VMware says the performance difference between a VMDK and an RDM is negligible, as stated in: http://www.vmware.com/files/pdf/solutions/sql_server_virtual_bp.pdf.

Has anyone compared the performance of all three, or does anyone know of a study that does?

Thank you,

Talbert

06-18-2014 02:10 PM

Solution

Hi Talbert,

The "old school" mentality with iSCSI storage and virtualisation was that RDMs presented to individual VMs were almost guaranteed to outperform data presented through VMFS. This was due to a combination of factors such as block size overhead, MPIO, and general VMFS issues. As an example, in my previous life at EqualLogic we never recommended VMFS for data volumes: the 15MB block size meant the data change would be massive compared with in-guest presentation of data, which would immediately crush an array.
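
To make the block-size point concrete, here is a rough worst-case calculation in Python. It assumes, as the post implies, that the array tracks changed data in whole 15MB blocks and that each small write lands in a different block; the 8 KB write size and the write count are hypothetical inputs, and real change rates depend on how writes cluster.

```python
def snapshot_change_mb(num_writes: int, write_kb: float, block_mb: float) -> tuple[float, float]:
    """Return (MB actually written, MB the array records as changed).

    Worst-case sketch: assumes every write hits a different block and that
    change is tracked at whole-block granularity. It ignores the cap that
    the volume size places on total change.
    """
    written_mb = num_writes * write_kb / 1024
    changed_mb = num_writes * block_mb
    return written_mb, changed_mb


if __name__ == "__main__":
    # Hypothetical workload: 1,000 scattered 8 KB writes against 15MB blocks.
    written, changed = snapshot_change_mb(num_writes=1000, write_kb=8, block_mb=15)
    print(f"written: {written:.1f} MB, tracked as changed: {changed:.0f} MB "
          f"({changed / written:.0f}x amplification)")
```

With these inputs, 7.8125 MB of actual writes is tracked as 15,000 MB of changed data, which is the kind of inflation that makes large-block change tracking expensive for scattered database writes.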

However, for vSphere 5 VMware claims a performance difference of only 2-5% between RDM and VMFS, so these days the choice comes down to preference around data protection (i.e. whether you want SQL databases consistently snapshotted at a per-database level for easy recovery, or are content to snapshot at the VMFS/VMDK layer) and other factors such as SRM: presenting data through VMFS is the only way SRM works as designed.
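
The trade-off above can be written down as a small decision helper. This is a hypothetical Python sketch, not a Nimble or VMware tool: the `Requirements` fields and the rule order (SRM first, then per-database snapshot needs, otherwise VMFS for ease of administration) are assumptions drawn from the factors named in this reply. Performance is deliberately not an input, given the quoted 2-5% difference.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    # Hypothetical inputs distilled from the factors discussed in this reply.
    per_database_snapshots: bool  # want array snapshots scoped to individual SQL databases
    uses_srm: bool                # VMware Site Recovery Manager protects this VM


def suggest_presentation(req: Requirements) -> str:
    """Rough heuristic only; it encodes the reply's reasoning, not a vendor rule."""
    if req.uses_srm:
        # Per the reply, SRM only works as designed with data presented through VMFS.
        return "VMFS/VMDK"
    if req.per_database_snapshots:
        # Separate volumes presented to the guest can be snapshotted per database.
        return "in-guest iSCSI or RDM"
    # Otherwise VMFS keeps day-to-day storage and VMware administration simplest.
    return "VMFS/VMDK"


if __name__ == "__main__":
    print(suggest_presentation(Requirements(per_database_snapshots=True, uses_srm=False)))
```

Putting the SRM check first reflects the reply's statement that SRM depends on VMFS; someone weighing the factors differently would simply reorder the rules.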

Putting everything through VMFS makes life as a storage & VMware admin a lot easier, especially if using the vCenter plugin (look out for a new, better plugin in upcoming Nimble OS 2.1).

Of course, the elephant in the room that not many people want to address right now is VVOLs, which are going to turn the "block storage" world upside down: VMFS becomes redundant, which puts this RDM vs VMFS conversation to rest. Here's a sneak preview of what that technology & integration looks like...

twitter: @nick_dyer_

06-19-2014 09:33 AM

Re: iSCSI Attached vs RDM vs VMDK?

I'm finding that factors other than performance are huge issues for databases on Linux. As of mid-2014, snapshots with the quiesce feature are challenging to get working. Some of the bugs are addressed in the latest kernels, but those kernels are not stock on current releases (CentOS, RHEL), so the fix is to compile a custom kernel. While this is feasible, it is outside our normal business practices and will be a nightmare for whoever takes over after me. I'm leaning toward living with crash-consistent snapshots for now. If we combine log partition snaps with nightly data partition snaps, and of course keep our regular backups, we will have data integrity. My contention, though, is: why keep snaps at all if they are not reliable? There are cases where we might use a snap to recover and not know that there is corruption.

Snapshots on Windows using VSS seem to work fine.
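
The log-plus-nightly scheme described above can be sketched as a restore-point picker. This is a hypothetical Python illustration: it assumes the database engine can roll a restored data partition forward using a newer log partition, which depends on the engine and how it is configured, and the snapshot times are made up.

```python
from datetime import datetime, timedelta
from typing import Optional


def pick_restore_points(
    data_snaps: list[datetime], log_snaps: list[datetime], target: datetime
) -> Optional[tuple[datetime, datetime]]:
    """Return (data snap, log snap) to restore for a recovery target, or None.

    Picks the newest nightly data-partition snap taken at or before `target`,
    then the newest log-partition snap between that data snap and `target`.
    A log snap older than the data snap can't roll the data forward, so it is skipped.
    """
    candidates = [s for s in data_snaps if s <= target]
    if not candidates:
        return None
    base = max(candidates)
    logs = [s for s in log_snaps if base <= s <= target]
    if not logs:
        return None
    return base, max(logs)


if __name__ == "__main__":
    # Hypothetical schedule: nightly data snaps at 01:00, hourly log snaps.
    data = [datetime(2014, 6, 18, 1), datetime(2014, 6, 19, 1)]
    logs = [datetime(2014, 6, 18, 1) + timedelta(hours=h) for h in range(36)]
    print(pick_restore_points(data, logs, target=datetime(2014, 6, 19, 9, 30)))
```

For a 09:30 recovery target this picks the 01:00 data snap from the same day and the 09:00 log snap. Because these are crash-consistent snaps, the restored copy would still need the engine's own recovery to run and be checked for corruption, which is the concern raised above.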

06-19-2014 09:36 AM

A clarification: using iSCSI from within the guest gets no snapshot synchronization at all. Using VMDKs runs into the kernel bug issue above, and RDMs would hit the same issue as VMDKs.

06-23-2014 08:54 PM

I was one of the early adopters of Nimble in 2010, while IT Director of Money Mailer in Garden Grove, CA. Believe me when I tell you that I "bent" Nimble every which way to get my environment onto it.

I was running EMC, Apple X-Raid, EQL (EqualLogic), and HP when I bought my first CS240 in December 2010. Three months later I bought another for DR/BC. The performance impact across all my systems was pretty dramatic.

I had six RHEL VMs running Informix (IBM databases). After much testing and bending, including having everything reside on VMFS, using RDMs in ESX, and using initiator-attached volumes, we eventually settled on the entire server residing on a VMFS volume, which gave us the best performance and peace of mind. Every 4 hours OnBar would execute a backup to a separate disk within the same VMFS volume as the server, and then the scheduled Nimble snapshot would run.

Over 2 1/2 years, with full DR/BC testing every quarter, we never had a problem with Informix coming online at the DR site.
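
The ordering in this setup (OnBar backup first, Nimble snapshot afterwards, every 4 hours) can be checked with a small Python sketch. The backup duration and snapshot offset are hypothetical parameters, not values from the post; the idea is that the snapshot should presumably fire only after the backup has finished, so each snapshot captures a complete backup copy.

```python
from datetime import datetime, timedelta
from typing import Iterator


def four_hourly_schedule(
    day_start: datetime, backup_minutes: int, snap_offset_minutes: int
) -> Iterator[tuple[datetime, datetime]]:
    """Yield (backup_start, snapshot_time) pairs every 4 hours for one day.

    `backup_minutes` (how long an OnBar run takes) and `snap_offset_minutes`
    (how long after the backup starts the snapshot is scheduled) are
    hypothetical inputs. Raises ValueError if the snapshot would fire
    before the backup finishes.
    """
    if snap_offset_minutes <= backup_minutes:
        raise ValueError("snapshot would fire before the backup finishes")
    for slot in range(6):  # 24 h / 4 h = 6 slots per day
        backup_start = day_start + timedelta(hours=4 * slot)
        yield backup_start, backup_start + timedelta(minutes=snap_offset_minutes)


if __name__ == "__main__":
    for backup, snap in four_hourly_schedule(datetime(2014, 1, 1), backup_minutes=30, snap_offset_minutes=45):
        print(f"OnBar backup {backup:%H:%M} -> Nimble snapshot {snap:%H:%M}")
```

Leaving a margin between the expected backup duration and the snapshot offset allows for backups that occasionally run long.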

09-09-2014 10:39 AM

I decided to run some basic performance tests now that I have three separate environments up and running. Specifically, I tested the database volume that stores our MS SQL 2008 R2 data. I hope this information is useful to anyone as curious as I was.

Note: each initial test was run independently, in the order shown below (DV, PP, prod).
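
The post doesn't say which tool produced these numbers. As a rough, hypothetical illustration of repeating a test on each volume, the Python sketch below times a single-threaded sequential write followed by `os.fsync`; the target directories are placeholders and this is not the tool used here. A dedicated benchmark tool and a test file larger than any cache would give more meaningful numbers.

```python
import os
import tempfile
import time


def sequential_write_mb_per_s(path: str, total_mb: int = 64, block_kb: int = 1024) -> float:
    """Write about total_mb of data to `path` in block_kb chunks, fsync, and return MB/s.

    A rough sketch only: single-threaded, sequential, and includes Python
    overhead, so treat results as relative between volumes, not absolute.
    """
    block = os.urandom(block_kb * 1024)  # random data so compression can't flatter the result
    blocks = (total_mb * 1024) // block_kb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # push data past the OS cache before stopping the clock
    elapsed = max(time.perf_counter() - start, 1e-9)
    return blocks * block_kb / 1024 / elapsed


if __name__ == "__main__":
    # Placeholder targets: replace with a directory on each volume under test
    # (guest-initiated iSCSI, dedicated-datastore VMDK, shared-datastore VMDK).
    targets = {"local-temp": tempfile.gettempdir()}
    for name, directory in targets.items():
        test_file = os.path.join(directory, "throughput_test.bin")
        try:
            for run in range(1, 4):  # three runs per volume, as in the post
                print(f"{name} run {run}: {sequential_write_mb_per_s(test_file):.1f} MB/s")
        finally:
            if os.path.exists(test_file):
                os.remove(test_file)
```

Running the same script against each volume, in the same order and with the same gaps between runs, keeps the comparison as even as a simple test allows.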

DBDV01:

Results using a VMDK on the same datastore as the rest of the VM's VMDK files, on a Paravirtual SCSI controller.

Second run, 5 minutes after the first test.

Third run, immediately after the second test.

DBPP01:

Results using a VMDK on a datastore dedicated to the F:\ database volume, on a Paravirtual SCSI controller.

Second run, about 3 minutes after the first test.

Third run, immediately after the second run.

DB01:

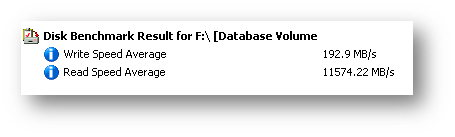

Results using a guest-initiated iSCSI connection set up through Nimble Connection Manager.

Second run, less than three minutes after the first test.

Third run, immediately after the second run.

Analysis

The initial results show a definite improvement when following Nimble's best practice (the guest-initiated iSCSI setup on DB01). Nimble's cache also appears to be working beautifully, since speeds improve dramatically from the first run to the second.

See the table below for a breakdown:

| | DB01 | DBPP01 | DBDV01 |
|---|---|---|---|
| Notes | Volume tested is a guest-initiated iSCSI drive set up through Nimble Connection Manager | Volume tested is a VMDK on a completely separate datastore/LUN containing only that VMDK, defined in vCenter and on a Paravirtual SCSI controller | Volume tested is a VMDK on the same datastore/LUN as the rest of the virtual machine, defined in vCenter and on a Paravirtual SCSI controller |
| First run (read/write MB/s) | 133 / 7997 | 103 / 6163 | 61 / 3675 |
| Second run | 193 / 11574 | 173 / 10349 | 103 / 6164 |
| Third run | 192 / 11521 | 171 / 10256 | 118 / 7104 |
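
The table's figures can be summarized with a short Python sketch that computes the run-1-to-run-2 change for each configuration and compares each configuration's first run against DB01. It also prints the ratio between the two figures in each cell: that ratio is consistently close to 60, which would fit MB/s and MB/min of the same throughput rather than separate read and write rates. That reading is an inference from the numbers, not something the post states.

```python
# Figures copied from the table: (first figure, second figure) for runs 1-3.
results = {
    "DB01":   [(133, 7997), (193, 11574), (192, 11521)],
    "DBPP01": [(103, 6163), (173, 10349), (171, 10256)],
    "DBDV01": [(61, 3675), (103, 6164), (118, 7104)],
}


def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


for host, runs in results.items():
    first, second = runs[0][0], runs[1][0]
    ratios = [b / a for a, b in runs]  # second figure divided by first, per run
    print(f"{host}: run 1 -> run 2 {pct_change(first, second):+.0f}%, "
          f"second/first figure ratio {min(ratios):.1f}-{max(ratios):.1f}")

# Compare each configuration's first-run figure against DB01.
for host in ("DBPP01", "DBDV01"):
    print(f"DB01 vs {host}, first run: {pct_change(results[host][0][0], results['DB01'][0][0]):+.0f}%")
```

With these figures, DB01's first run is about 29% higher than DBPP01's and about 118% higher than DBDV01's, and all three configurations gain roughly 45-69% from the first run to the second.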