ISCSI switchport aggregation with MPIO?

07-06-2016 03:02 PM

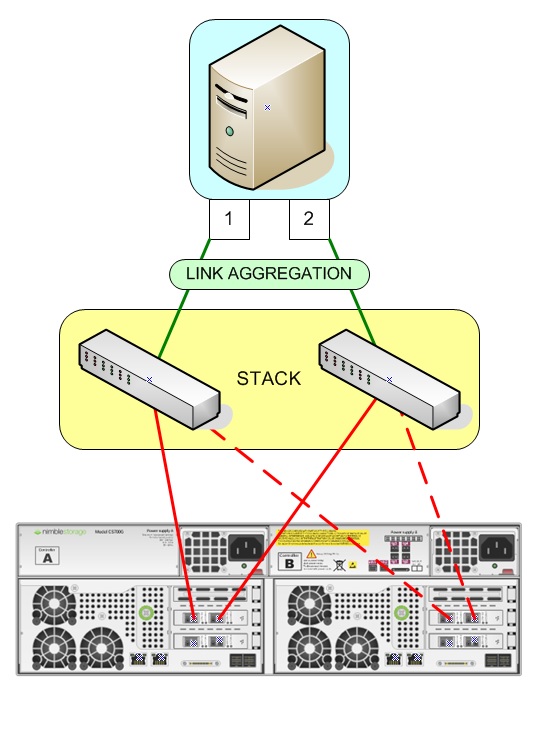

I have a question about an architecture set up by a vendor that I am taking over supporting. The current environment is VMware 5.5, with two dedicated NICs per server for iSCSI traffic to a switch stack that connects to a CS300.

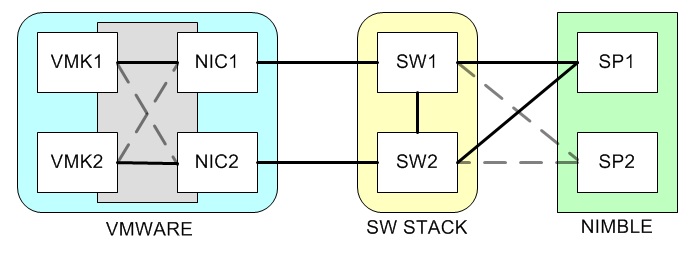

Currently, they have set up the VMware iSCSI adapters per the multipath recommendations (bonded VMKs with Round-Robin); however, I am a bit confused about the potential impact of the network configuration. Everything is set up per iSCSI best practice (dedicated switches, non-routed iSCSI VLAN, jumbo frames, etc.), but the pair of stacked switches is aggregating the links to the NICs on the server.

Host egress traffic, from my understanding, shouldn't be affected, but return traffic from the Nimble would have to be processed by both NICs at least to layer 2, and then one of them would have to drop the traffic because it isn't the intended destination MAC for the incoming frames, correct? Since we are using software iSCSI via VMware (see Nimble's VMware guide, page 5), LACP or other bonded-link protocols seem to me to add unnecessary overhead, in both traffic and processing, for ingress traffic on the host's CPU and NICs. Am I correct or mistaken?

Reference:

07-07-2016 07:00 AM

Re: ISCSI switchport aggregation with MPIO?

Josh,

I can't say for sure about traffic being dropped. With LACP you'll typically only get the throughput of one NIC per TCP stream, and depending on the hashing algorithm used, you'll probably only ever see single-NIC speeds with your setup.
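To illustrate the per-stream point, here is a minimal Python sketch of hash-based link selection. The hash function, the field choice, and the addresses are all assumptions for illustration; real switches use their own vendor-specific hash policies.

```python
import zlib

def pick_link(src_ip, dst_ip, src_port, dst_port, n_links=2):
    """Hypothetical hash policy: map a flow's address/port tuple to one member link."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % n_links

# One iSCSI session (made-up addresses; 3260 is the standard iSCSI port).
flow = ("10.0.0.11", "10.0.0.50", 51000, 3260)

# Every frame of the same TCP stream carries the same tuple,
# so the hash sends all of them down the same member link.
links_used = {pick_link(*flow) for _ in range(1000)}
print("links used by one stream:", links_used)
```

However many frames the stream sends, it stays on one member link, so a single session cannot exceed the speed of one NIC. Whether two different sessions end up on different links depends entirely on how their tuples happen to hash.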

I have my environment set up with MPIO and no LACP, following Dell network practices (using two Force10 switches that are VLT'd). Having LACP or bonded links adds extra config overhead in my view. I'd suggest removing the aggregation, giving NIC 1 and NIC 2 their own IPs, and configuring software iSCSI per page 5 like you pointed out.

Phil

07-07-2016 10:33 AM

Re: ISCSI switchport aggregation with MPIO?

Links are bonded with HP's Trunk protocol. VMK1 and VMK2 have their own IPs and are bonded to both NICs, each one with a different primary VMNIC and the other as failover. They are not teamed on the VMware side. I'm not an expert on how VMware handles traffic at the NIC level, so I was hoping for some confirmation of my suspicion that there's nothing gained by aggregating the links at the switch level to the hosts, and that it may in fact reduce performance.

07-19-2016 09:43 AM

Re: ISCSI switchport aggregation with MPIO?

I would say there is nothing gained by aggregating switch to host.

07-21-2016 01:24 AM

Solution

Hello,

If both of the NICs are bonded to the software iSCSI initiator, that means you are running a flat subnet across both of the VMKs. The interconnect / ISL between your two stacked switches will be a source of contention. Why? The switches have a finite cache (depending on the switch, typically >9MB shared between all ports), and if you are using LACP as the interconnect between your stacked switches, those ports will consume some of the total cache. You want to eliminate traffic across your ISL; ideally, when using a single subnet, use the bisect / even-odd option (Administration > Networking > Subnet) to keep traffic off the ISL.
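As a rough illustration of why zoning a single subnet helps, the Python sketch below counts how many initiator-to-target paths cross the ISL with and without an even/odd split. The addresses, the cabling, and the parity rule are all hypothetical and simplified; this is not taken from the array's documentation, and it assumes even addresses are cabled to one switch and odd addresses to the other.

```python
from ipaddress import ip_address

# Hypothetical cabling: which stacked switch each port plugs into.
switch_of = {
    "10.0.0.10": "A", "10.0.0.11": "B",   # host VMK ports (initiators)
    "10.0.0.50": "A", "10.0.0.51": "B",   # array data ports (targets)
    "10.0.0.52": "A", "10.0.0.53": "B",
}
initiators = ["10.0.0.10", "10.0.0.11"]
targets = ["10.0.0.50", "10.0.0.51", "10.0.0.52", "10.0.0.53"]

def zone(ip):
    """Simplified even/odd zoning: parity of the IP address."""
    return int(ip_address(ip)) % 2

def paths(zoned):
    """All initiator/target pairs, optionally limited to pairs in the same zone."""
    return [(i, t) for i in initiators for t in targets
            if not zoned or zone(i) == zone(t)]

def isl_crossings(path_list):
    """Count paths whose two endpoints sit on different switches."""
    return sum(1 for i, t in path_list if switch_of[i] != switch_of[t])

print("flat subnet:", len(paths(False)), "paths,", isl_crossings(paths(False)), "cross the ISL")
print("even/odd   :", len(paths(True)), "paths,", isl_crossings(paths(True)), "cross the ISL")
```

In this layout the flat subnet sends half of its paths over the ISL, while the zoned layout keeps every path on a single switch. That only holds when the cabling lines up with the address split, so the IP plan and the physical ports need to be designed together.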

Many thanks,

Chris