Community Home > Storage > HPE SimpliVity


10-22-2019 08:30 PM


Legacy Simplivity Lenovo - Datastore is not mounting

We have a legacy SimpliVity system on Lenovo hardware, and our problem is that we can't file a support ticket.

Here is the problem: the datastore is not mounting and the VMs are inaccessible.

I opened the OmniStack console and saw that the storage IP is down.

I checked with StorCLI and the drives are online.

<blockquote>

root@omnicube-ip205-92:/opt/MegaRAID/storcli# ./storcli64 -v

StorCli SAS Customization Utility Ver 1.07.07 Nov 15, 2013

(c)Copyright 2013, LSI Corporation, All Rights Reserved.

Exit Code: 0x00


root@omnicube-ip205-92:/opt/MegaRAID/storcli# ./storcli64 show

Status Code = 0

Status = Success

Description = None

Number of Controllers = 1

Host Name = omnicube-ip205-92

Operating System = Linux4.1.20-svt1

System Overview :

===============

-----------------------------------------------------------------

Ctl Model Ports PDs DGs DNOpt VDs VNOpt BBU sPR DS EHS ASOs Hlth

-----------------------------------------------------------------

0 M5210 8 10 2 0 3 0 Opt On - Y 4 Opt

-----------------------------------------------------------------

Ctl=Controller Index|DGs=Drive groups|VDs=Virtual drives|Fld=Failed

PDs=Physical drives|DNOpt=DG NotOptimal|VNOpt=VD NotOptimal|Opt=Optimal

Msng=Missing|Dgd=Degraded|NdAtn=Need Attention|Unkwn=Unknown

sPR=Scheduled Patrol Read|DS=DimmerSwitch|EHS=Emergency Hot Spare

Y=Yes|N=No|ASOs=Advanced Software Options|BBU=Battery backup unit

Hlth=Health|Safe=Safe-mode boot


root@omnicube-ip205-92:/opt/MegaRAID/storcli# ./storcli64 show all

Status Code = 0

Status = Success

Description = None

Number of Controllers = 1

Host Name = omnicube-ip205-92

Operating System = Linux4.1.20-svt1

System Overview :

===============

-----------------------------------------------------------------

Ctl Model Ports PDs DGs DNOpt VDs VNOpt BBU sPR DS EHS ASOs Hlth

-----------------------------------------------------------------

0 M5210 8 10 2 0 3 0 Opt On - Y 4 Opt

-----------------------------------------------------------------

Ctl=Controller Index|DGs=Drive groups|VDs=Virtual drives|Fld=Failed

PDs=Physical drives|DNOpt=DG NotOptimal|VNOpt=VD NotOptimal|Opt=Optimal

Msng=Missing|Dgd=Degraded|NdAtn=Need Attention|Unkwn=Unknown

sPR=Scheduled Patrol Read|DS=DimmerSwitch|EHS=Emergency Hot Spare

Y=Yes|N=No|ASOs=Advanced Software Options|BBU=Battery backup unit

Hlth=Health|Safe=Safe-mode boot

ASO :

===

--------------------------------------------

Ctl Cl SAS MD R6 WC R5 SS FP Re CR RF CO CW

--------------------------------------------

0 X U X U U U U U X X X X X

--------------------------------------------

Cl=Cluster|MD=Max Disks|WC=Wide Cache|SS=Safe Store|FP=Fast Path|Re=Recovery

CR=CacheCade(Read)|RF=Reduced Feature Set|CO=Cache Offload

CW=CacheCade(Read/Write)|X=Not Available/Not Installed|U=Unlimited|T=Trial


root@omnicube-ip205-92:/opt/MegaRAID/storcli# ./storcli64 /c0 show freespace

Controller = 0

Status = Success

Description = None

FREE SPACE DETAILS :

==================

Total Slot Count = 0

ID-Index|DG-Drive Group|AftrVD-Identify Freespace After VD

root@omnicube-ip205-92:/opt/MegaRAID/storcli# ./storcli64 /c0 show

Generating detailed summary of the adapter, it may take a while to complete.

Controller = 0

Status = Success

Description = None

Product Name = ServeRAID M5210

Serial Number = SK72870056

SAS Address = 500605b00d263cc0

PCI Address = 00:0b:00:00

System Time = 10/23/2019 02:26:57

Mfg. Date = 07/24/17

Controller Time = 10/23/2019 02:26:57

FW Package Build = 24.21.0-0097

BIOS Version = 6.36.00.3_4.19.08.00_0x06180203

FW Version = 4.680.00-8458

Driver Name = megaraid_sas

Driver Version = 06.806.08.00-rc1

Vendor Id = 0x1000

Device Id = 0x5D

SubVendor Id = 0x1014

SubDevice Id = 0x454

Host Interface = PCIE

Device Interface = SATA-3G

Bus Number = 11

Device Number = 0

Function Number = 0

Drive Groups = 2

TOPOLOGY :

========

--------------------------------------------------------------------------

DG Arr Row EID:Slot DID Type State BT Size PDC PI SED DS3 FSpace

--------------------------------------------------------------------------

0 - - - - RAID1 Optl N 446.625 GB dflt N N none N

0 0 - - - RAID1 Optl N 446.625 GB dflt N N none N

0 0 0 18:0 21 DRIVE Onln N 446.625 GB dflt N N none -

0 0 1 18:1 22 DRIVE Onln N 446.625 GB dflt N N none -

1 - - - - RAID6 Optl N 6.546 TB dsbl N N none N

1 0 - - - RAID6 Optl N 6.546 TB dsbl N N none N

1 0 0 18:5 8 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 1 18:9 9 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 2 18:2 11 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 3 18:4 13 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 4 18:6 14 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 5 18:8 15 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 6 18:3 16 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 7 18:7 17 DRIVE Onln N 1.091 TB dsbl N N none -

--------------------------------------------------------------------------

DG=Disk Group Index|Arr=Array Index|Row=Row Index|EID=Enclosure Device ID

DID=Device ID|Type=Drive Type|Onln=Online|Rbld=Rebuild|Dgrd=Degraded

Pdgd=Partially degraded|Offln=Offline|BT=Background Task Active

PDC=PD Cache|PI=Protection Info|SED=Self Encrypting Drive|Frgn=Foreign

DS3=Dimmer Switch 3|dflt=Default|Msng=Missing|FSpace=Free Space Present

Virtual Drives = 3

VD LIST :

=======

-----------------------------------------------------------

DG/VD TYPE State Access Consist Cache sCC Size Name

-----------------------------------------------------------

0/0 RAID1 Optl RW Yes RWBC - 100.0 GB

0/1 RAID1 Optl RW Yes RWBC - 346.625 GB

1/2 RAID6 Optl RW Yes RWBC - 6.546 TB

-----------------------------------------------------------

Cac=CacheCade|Rec=Recovery|OfLn=OffLine|Pdgd=Partially Degraded|dgrd=Degraded

Optl=Optimal|RO=Read Only|RW=Read Write|B=Blocked|Consist=Consistent|

R=Read Ahead Always|NR=No Read Ahead|WB=WriteBack|

AWB=Always WriteBack|WT=WriteThrough|C=Cached IO|D=Direct IO|sCC=Scheduled

Check Consistency

Physical Drives = 10

PD LIST :

=======

------------------------------------------------------------------------------------------------

EID:Slt DID State DG Size Intf Med SED PI SeSz Model Sp

------------------------------------------------------------------------------------------------

18:0 21 Onln 0 446.625 GB SATA SSD N N 512B MTFDDAK480MBB-1AE1ZA 00NA689 00NA692LEN U

18:1 22 Onln 0 446.625 GB SATA SSD N N 512B MTFDDAK480MBB-1AE1ZA 00NA689 00NA692LEN U

18:2 11 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:3 16 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:4 13 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:5 8 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:6 14 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:7 17 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:8 15 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:9 9 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

------------------------------------------------------------------------------------------------

EID-Enclosure Device ID|Slt-Slot No.|DID-Device ID|DG-DriveGroup

DHS-Dedicated Hot Spare|UGood-Unconfigured Good|GHS-Global Hotspare

UBad-Unconfigured Bad|Onln-Online|Offln-Offline|Intf-Interface

Med-Media Type|SED-Self Encryptive Drive|PI-Protection Info

SeSz-Sector Size|Sp-Spun|U-Up|D-Down|T-Transition|F-Foreign

UGUnsp-Unsupported|UGShld-UnConfigured shielded|HSPShld-Hotspare shielded

CFShld-Configured shielded

Cachevault_Info :

===============

------------------------------------

Model State Temp Mode MfgDate

------------------------------------

CVPM02 Optimal 35C - 2017/03/22

------------------------------------

root@omnicube-ip205-92:/opt/MegaRAID/storcli# ./storcli64 /c0 show all

Generating detailed summary of the adapter, it may take a while to complete.

Controller = 0

Status = Success

Description = None

Basics :

======

Controller = 0

Model = ServeRAID M5210

Serial Number = SK72870056

Current Controller Date/Time = 10/23/2019, 02:27:26

Current System Date/time = 10/23/2019, 02:27:26

SAS Address = 500605b00d263cc0

PCI Address = 00:0b:00:00

Mfg Date = 07/24/17

Rework Date = 00/00/00

Revision No = 04F

Version :

=======

Firmware Package Build = 24.21.0-0097

Firmware Version = 4.680.00-8458

Bios Version = 6.36.00.3_4.19.08.00_0x06180203

NVDATA Version = 3.1705.00-0018

Boot Block Version = 3.07.00.00-0003

Driver Name = megaraid_sas

Driver Version = 06.806.08.00-rc1

Bus :

===

Vendor Id = 0x1000

Device Id = 0x5D

SubVendor Id = 0x1014

SubDevice Id = 0x454

Host Interface = PCIE

Device Interface = SATA-3G

Bus Number = 11

Device Number = 0

Function Number = 0

Pending Images in Flash :

=======================

Image name = No pending images

Status :

======

Controller Status = Optimal

Memory Correctable Errors = 0

Memory Uncorrectable Errors = 0

ECC Bucket Count = 0

Any Offline VD Cache Preserved = No

BBU Status = 0

Support PD Firmware Download = No

Lock Key Assigned = No

Failed to get lock key on bootup = No

Lock key has not been backed up = No

Bios was not detected during boot = Yes

Controller must be rebooted to complete security operation = No

A rollback operation is in progress = No

At least one PFK exists in NVRAM = Yes

SSC Policy is WB = No

Controller has booted into safe mode = No

Supported Adapter Operations :

============================

Rebuild Rate = Yes

CC Rate = Yes

BGI Rate = Yes

Reconstruct Rate = Yes

Patrol Read Rate = Yes

Alarm Control = No

Cluster Support = No

BBU = Yes

Spanning = Yes

Dedicated Hot Spare = Yes

Revertible Hot Spares = Yes

Foreign Config Import = Yes

Self Diagnostic = Yes

Allow Mixed Redundancy on Array = No

Global Hot Spares = Yes

Deny SCSI Passthrough = No

Deny SMP Passthrough = No

Deny STP Passthrough = No

Support more than 8 Phys = Yes

FW and Event Time in GMT = No

Support Enhanced Foreign Import = Yes

Support Enclosure Enumeration = Yes

Support Allowed Operations = Yes

Abort CC on Error = Yes

Support Multipath = Yes

Support Odd & Even Drive count in RAID1E = No

Support Security = Yes

Support Config Page Model = Yes

Support the OCE without adding drives = Yes

support EKM = Yes

Snapshot Enabled = No

Support PFK = Yes

Support PI = Yes

Support LDPI Type1 = No

Support LDPI Type2 = No

Support LDPI Type3 = No

Support Ld BBM Info = No

Support Shield State = Yes

Block SSD Write Disk Cache Change = Yes

Support Suspend Resume BG ops = Yes

Support Emergency Spares = Yes

Support Set Link Speed = Yes

Support Boot Time PFK Change = Yes

Support JBOD = Yes

Disable Online PFK Change = Yes

Support Perf Tuning = Yes

Support SSD PatrolRead = Yes

Real Time Scheduler = Yes

Support Reset Now = Yes

Support Emulated Drives = Yes

Headless Mode = Yes

Dedicated HotSpares Limited = No

Point In Time Progress = Yes

Supported PD Operations :

=======================

Force Online = Yes

Force Offline = Yes

Force Rebuild = Yes

Deny Force Failed = No

Deny Force Good/Bad = No

Deny Missing Replace = No

Deny Clear = No

Deny Locate = No

Support Power State = No

Set Power State For Cfg = No

Support T10 Power State = No

Support Temperature = Yes

Supported VD Operations :

=======================

Read Policy = Yes

Write Policy = Yes

IO Policy = Yes

Access Policy = Yes

Disk Cache Policy = Yes

Reconstruction = Yes

Deny Locate = No

Deny CC = No

Allow Ctrl Encryption = No

Enable LDBBM = Yes

Support FastPath = Yes

Performance Metrics = Yes

Power Savings = No

Support Powersave Max With Cache = No

Support Breakmirror = Yes

Support SSC WriteBack = No

Support SSC Association = No

Advanced Software Option :

========================

-----------------------------------------

Adv S/W Opt Time Remaining Mode

-----------------------------------------

MegaRAID FastPath Unlimited -

MegaRAID SafeStore -

MegaRAID RAID6 Unlimited -

MegaRAID RAID5 -

-----------------------------------------

Safe ID = FRRSW4BBM8DA9PSD753GABH63KQE3M7JGDM4CI2Z

HwCfg :

=====

ChipRevision = C0

BatteryFRU = N/A

Front End Port Count = 0

Backend Port Count = 8

BBU = Present

Alarm = Missing

Serial Debugger = Present

NVRAM Size = 32KB

Flash Size = 32MB

On Board Memory Size = 2048MB

On Board Expander = Absent

Temperature Sensor for ROC = Present

Temperature Sensor for Controller = Absent

Current Size of CacheCade (GB) = 0

Current Size of FW Cache (MB) = 1715

ROC temperature(Degree Celcius) = 78

Policies :

========

Policies Table :

==============

------------------------------------------------

Policy Current Default

------------------------------------------------

Predictive Fail Poll Interval 300 sec

Interrupt Throttle Active Count 16

Interrupt Throttle Completion 50 us

Rebuild Rate 75 % 30%

PR Rate 30 % 30%

BGI Rate 30 % 30%

Check Consistency Rate 30 % 30%

Reconstruction Rate 30 % 30%

Cache Flush Interval 4s

------------------------------------------------

Flush Time(Default) = 4s

Drive Coercion Mode = 128MB

Auto Rebuild = On

Battery Warning = On

ECC Bucket Size = 15

ECC Bucket Leak Rate (hrs) = 24

Restore HotSpare on Insertion = Off

Expose Enclosure Devices = On

Maintain PD Fail History = Off

Reorder Host Requests = On

Auto detect BackPlane = SGPIO/i2c SEP

Load Balance Mode = Auto

Security Key Assigned = Off

Disable Online Controller Reset = Off

Use drive activity for locate = Off

Boot :

====

BIOS Enumerate VDs = 1

Stop BIOS on Error = Off

Delay during POST = 0

Spin Down Mode = None

Enable Ctrl-R = No

Enable Web BIOS = No

Enable PreBoot CLI = No

Enable BIOS = No

Max Drives to Spinup at One Time = 2

Maximum number of direct attached drives to spin up in 1 min = 10

Delay Among Spinup Groups (sec) = 12

Allow Boot with Preserved Cache = Off

High Availability :

=================

Topology Type = None

Cluster Permitted = No

Cluster Active = No

Defaults :

========

Phy Polarity = 0

Phy PolaritySplit = 0

Strip Size = 256kB

Write Policy = WB

Read Policy = RA

Cache When BBU Bad = Off

Cached IO = Off

VD PowerSave Policy = Controller Defined

Default spin down time (mins) = 30

Coercion Mode = 1 GB

ZCR Config = Unknown

Max Chained Enclosures = 16

Direct PD Mapping = No

Restore Hot Spare on Insertion = No

Expose Enclosure Devices = Yes

Maintain PD Fail History = Yes

Zero Based Enclosure Enumeration = No

Disable Puncturing = Yes

EnableLDBBM = No

Un-Certified Hard Disk Drives = Allow

SMART Mode = Mode 6

Enable LED Header = No

LED Show Drive Activity = Yes

Dirty LED Shows Drive Activity = No

EnableCrashDump = Yes

Disable Online Controller Reset = No

Treat Single span R1E as R10 = No

Power Saving option = Disabled

TTY Log In Flash = No

Auto Enhanced Import = Yes

BreakMirror RAID Support = Yes

Disable Join Mirror = No

Enable Shield State = Yes

Time taken to detect CME = 60 sec

Capabilities :

============

Supported Drives = SAS, SATA

Boot Volume Supported = NO

RAID Level Supported = RAID0, RAID1, RAID5, RAID6, RAID00, RAID10, RAID50,

RAID60, PRL 11, PRL 11 with spanning, SRL 3 supported,

PRL11-RLQ0 DDF layout with no span, PRL11-RLQ0 DDF layout with span

Enable JBOD = No

Mix in Enclosure = Allowed

Mix of SAS/SATA of HDD type in VD = Not Allowed

Mix of SAS/SATA of SSD type in VD = Not Allowed

Mix of SSD/HDD in VD = Not Allowed

SAS Disable = No

Max Arms Per VD = 32

Max Spans Per VD = 8

Max Arrays = 128

Max VD per array = 64

Max Number of VDs = 64

Max Parallel Commands = 928

Max SGE Count = 60

Max Data Transfer Size = 512 sectors

Max Strips PerIO = 128

Max Configurable CacheCade Size = 0

Min Strip Size = 64 KB

Max Strip Size = 1.0 MB

Scheduled Tasks :

===============

Consistency Check Reoccurrence = 168 hrs

Next Consistency check launch = 12/27/2117, 21:00:00

Patrol Read Reoccurrence = 168 hrs

Next Patrol Read launch = 05/05/2098, 21:00:00

Battery learn Reoccurrence = 670 hrs

Next Battery Learn = 11/05/2019, 09:00:00

OEMID = IBM

Drive Groups = 2

TOPOLOGY :

========

--------------------------------------------------------------------------

DG Arr Row EID:Slot DID Type State BT Size PDC PI SED DS3 FSpace

--------------------------------------------------------------------------

0 - - - - RAID1 Optl N 446.625 GB dflt N N none N

0 0 - - - RAID1 Optl N 446.625 GB dflt N N none N

0 0 0 18:0 21 DRIVE Onln N 446.625 GB dflt N N none -

0 0 1 18:1 22 DRIVE Onln N 446.625 GB dflt N N none -

1 - - - - RAID6 Optl N 6.546 TB dsbl N N none N

1 0 - - - RAID6 Optl N 6.546 TB dsbl N N none N

1 0 0 18:5 8 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 1 18:9 9 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 2 18:2 11 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 3 18:4 13 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 4 18:6 14 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 5 18:8 15 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 6 18:3 16 DRIVE Onln N 1.091 TB dsbl N N none -

1 0 7 18:7 17 DRIVE Onln N 1.091 TB dsbl N N none -

--------------------------------------------------------------------------

DG=Disk Group Index|Arr=Array Index|Row=Row Index|EID=Enclosure Device ID

DID=Device ID|Type=Drive Type|Onln=Online|Rbld=Rebuild|Dgrd=Degraded

Pdgd=Partially degraded|Offln=Offline|BT=Background Task Active

PDC=PD Cache|PI=Protection Info|SED=Self Encrypting Drive|Frgn=Foreign

DS3=Dimmer Switch 3|dflt=Default|Msng=Missing|FSpace=Free Space Present

Virtual Drives = 3

VD LIST :

=======

-----------------------------------------------------------

DG/VD TYPE State Access Consist Cache sCC Size Name

-----------------------------------------------------------

0/0 RAID1 Optl RW Yes RWBC - 100.0 GB

0/1 RAID1 Optl RW Yes RWBC - 346.625 GB

1/2 RAID6 Optl RW Yes RWBC - 6.546 TB

-----------------------------------------------------------

Cac=CacheCade|Rec=Recovery|OfLn=OffLine|Pdgd=Partially Degraded|dgrd=Degraded

Optl=Optimal|RO=Read Only|RW=Read Write|B=Blocked|Consist=Consistent|

R=Read Ahead Always|NR=No Read Ahead|WB=WriteBack|

AWB=Always WriteBack|WT=WriteThrough|C=Cached IO|D=Direct IO|sCC=Scheduled

Check Consistency

Physical Drives = 10

PD LIST :

=======

------------------------------------------------------------------------------------------------

EID:Slt DID State DG Size Intf Med SED PI SeSz Model Sp

------------------------------------------------------------------------------------------------

18:0 21 Onln 0 446.625 GB SATA SSD N N 512B MTFDDAK480MBB-1AE1ZA 00NA689 00NA692LEN U

18:1 22 Onln 0 446.625 GB SATA SSD N N 512B MTFDDAK480MBB-1AE1ZA 00NA689 00NA692LEN U

18:2 11 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:3 16 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:4 13 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:5 8 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:6 14 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:7 17 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:8 15 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

18:9 9 Onln 1 1.091 TB SAS HDD N N 512B AL14SEB120N U

------------------------------------------------------------------------------------------------

EID-Enclosure Device ID|Slt-Slot No.|DID-Device ID|DG-DriveGroup

DHS-Dedicated Hot Spare|UGood-Unconfigured Good|GHS-Global Hotspare

UBad-Unconfigured Bad|Onln-Online|Offln-Offline|Intf-Interface

Med-Media Type|SED-Self Encryptive Drive|PI-Protection Info

SeSz-Sector Size|Sp-Spun|U-Up|D-Down|T-Transition|F-Foreign

UGUnsp-Unsupported|UGShld-UnConfigured shielded|HSPShld-Hotspare shielded

CFShld-Configured shielded

Cachevault_Info :

===============

------------------------------------

Model State Temp Mode MfgDate

------------------------------------

CVPM02 Optimal 35C - 2017/03/22

------------------------------------

root@omnicube-ip205-92:/opt/MegaRAID/storcli#

</blockquote>
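To double-check the controller side without reading every table, the VD LIST and PD LIST sections of `./storcli64 /c0 show` can be filtered for anything that is not Optimal or Online. This is a rough sketch, assuming the column layout shown in the output above (the state is the third field in both tables); `check_storcli_states` is a hypothetical helper name, not part of StorCLI.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `storcli64 /c0 show` output on stdin and reports
# any virtual drive that is not "Optl" or physical drive that is not "Onln".
# Assumes the VD LIST / PD LIST layout shown in this thread (state = 3rd field).
check_storcli_states() {
  awk '
    # VD LIST rows look like: "0/0 RAID1 Optl RW Yes RWBC - 100.0 GB"
    $1 ~ /^[0-9]+\/[0-9]+$/ && $2 ~ /^RAID/ {
      if ($3 != "Optl") { print "VD " $1 " state " $3; bad = 1 }
    }
    # PD LIST rows look like: "18:0 21 Onln 0 446.625 GB SATA SSD ..."
    $1 ~ /^[0-9]+:[0-9]+$/ && $2 ~ /^[0-9]+$/ {
      if ($3 != "Onln") { print "PD " $1 " state " $3; bad = 1 }
    }
    END {
      if (!bad) print "All VDs optimal and all PDs online"
      exit (bad ? 1 : 0)   # non-zero exit if anything looked wrong
    }
  '
}

# Usage on the OmniStack host (path from the transcript above):
#   cd /opt/MegaRAID/storcli && ./storcli64 /c0 show | check_storcli_states
```

With the output posted above, this would report every VD as optimal and every PD as online, which matches the conclusion that the RAID layer itself looks healthy.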

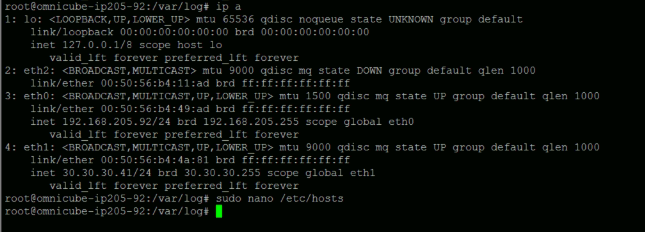

Here are the interface IP addresses:

- VM Network: 192.168.x.x (eth0)
- Federation: 30.30.x.x (eth1)
- Storage: 20.20.x.x (eth2)
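Since the symptom is a storage IP that is down, it can help to check the link state of all three interfaces at once. A minimal sketch, assuming the eth0/eth1/eth2 mapping listed above and the standard Linux sysfs `operstate` files; `check_ifaces` is a hypothetical helper, and the `SYSFS_NET` variable exists only so the function can be pointed at a fake directory for testing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the kernel link state of each interface and
# return non-zero if any of them is not "up".
# Reads /sys/class/net/<iface>/operstate (standard on Linux).
check_ifaces() {
  local base="${SYSFS_NET:-/sys/class/net}"   # overridable for testing only
  local rc=0 iface state
  for iface in "$@"; do
    if [ -r "$base/$iface/operstate" ]; then
      state=$(cat "$base/$iface/operstate")
    else
      state="missing"                          # interface not present at all
    fi
    printf '%s %s\n' "$iface" "$state"
    [ "$state" = "up" ] || rc=1
  done
  return "$rc"
}

# Usage on the OmniStack host:
#   check_ifaces eth0 eth1 eth2
```

If eth2 is not reported as `up`, `ip addr show eth2` shows whether the 20.20.x.x address is still assigned to it.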


10-23-2019 02:45 AM


Re: Legacy Simplivity Lenovo - Datastore is not mounting

Has the support contract expired?

What is the status of svtfs (`status svtfs`)?

What do the last 50 entries show (`tail -n 50 $SVTFS`)?

What happens if you try to start svtfs (`start svtfs`)?

Are there any error lights on the accelerator card?

I am an HPE employee

[Any personal opinions expressed are mine, and not official statements on behalf of Hewlett Packard Enterprise]
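The read-only checks asked for above can be collected in one pass before replying. This is only a sketch: it assumes the OmniStack host provides Upstart's `status` command (as the `status svtfs` question implies) and that `$SVTFS` holds the path of the svtfs log; when it is unset, the function falls back to /var/svtfs/0/logs/svtfs.log, the path quoted later in this thread. `collect_svtfs_diag` is a hypothetical name. It deliberately does not run `start svtfs`, since that changes the system state.

```bash
#!/usr/bin/env bash
# Hypothetical helper: gather the read-only svtfs diagnostics in one go.
# Assumption: $SVTFS (if set) is the svtfs log path.
collect_svtfs_diag() {
  local log="${SVTFS:-/var/svtfs/0/logs/svtfs.log}"
  local lines="${1:-50}"

  echo "== status svtfs =="
  if command -v status >/dev/null 2>&1; then
    status svtfs 2>&1
  else
    echo "(Upstart 'status' command not available on this host)"
  fi

  echo "== last $lines lines of $log =="
  if [ -r "$log" ]; then
    # -n selects how many lines to print; -f would keep following the file
    tail -n "$lines" "$log"
  else
    echo "(cannot read $log)"
  fi
}

# Usage (as root or via sudo):
#   collect_svtfs_diag 50 > /tmp/svtfs-diag.txt
```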


10-26-2019 06:19 PM


Re: Legacy Simplivity Lenovo - Datastore is not mounting

Hi Dave,

A few days ago I was able to resolve the issue with this KB: https://support.hpe.com/hpsc/doc/public/display?docId=emr_na-sv2790en_us&docLocale=en_US. Today, however, the datastore became unavailable again. Here are the logs from /var/svtfs/0/logs/svtfs.log.
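When comparing svtfs.log before and after a reboot, it can help to pull out only the WARN/ERROR entries and the interface groups that svtfs reports it is managing. A minimal sketch, assuming the entry format of the log excerpt in this post (timestamp, level, thread id, ...); `scan_svtfs_log` is a hypothetical helper, and the `ERROR` level name is an assumption, since only `INFO` and `WARN` appear in the excerpt.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise an svtfs.log file.
# Assumes each entry starts "<timestamp> <LEVEL> <thread> ..." as in this thread.
scan_svtfs_log() {
  local log="${1:-/var/svtfs/0/logs/svtfs.log}"

  echo "== interface groups managed by svtfs =="
  # e.g. "Managing Interface Group Federation: eth1: 30.30.30.41/... MTU 9000"
  grep -o 'Managing Interface Group .*' "$log" || echo "(none found)"

  echo "== WARN/ERROR entries =="
  # The level is the second whitespace-separated field of each entry.
  awk '$2 == "WARN" || $2 == "ERROR"' "$log"
}

# Usage on the OmniStack host:
#   scan_svtfs_log /var/svtfs/0/logs/svtfs.log
```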

I ran `sudo reboot now`, and here are the logs:

<quote>2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:212 /var/tmp/build/bin/svtfs application is starting up...

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:213 version.name : Release 3.5.2.90

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:214 feature.version : 850

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:215 build.timestamp : 2016-07-07T06:43:09-0400

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:216 git.branch : release

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:217 git.commit : e5e45a6aaa66c89a4af57069fddad2e263ff5117

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] sqlitelogbridge.cpp:38 SQLite log bridge installed successfully.

2019-10-27T01:08:45.662Z INFO 0x7fc0d1103840 [:] [svtfs.app] thriftlogbridge.cpp:39 Thrift log bridge installed successfully.

2019-10-27T01:08:45.706Z INFO 0x7fc0d1103840 [:] [svtfs.app] sqliteconfig.cpp:53 SQLite heap configured with 128 MB

2019-10-27T01:08:45.706Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:55 Starting HAL...

2019-10-27T01:08:45.706Z INFO 0x7fc0d1103840 [:] [hal.halclientfactory] halclientfactory.cpp:39 Using /var/svtfs/svt-hal/0/svt-hal.sock as socket file for hal

2019-10-27T01:08:45.706Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:60 HAL has been started.

2019-10-27T01:08:45.706Z INFO 0x7fc0d1103840 [:] [svtfs.app] svtfsapp.cpp:62 Starting control plane...

2019-10-27T01:08:45.707Z INFO 0x7fc0d1103840 [:] [net.ifmgr.ifmanager] ifmanager.cpp:77 Managing Interface Group Management: eth0: 192.168.205.92/255.255.255.0 [192.168.205.0 network] MTU 1500

2019-10-27T01:08:45.707Z INFO 0x7fc0d1103840 [:] [net.ifmgr.ifmanager] ifmanager.cpp:77 Managing Interface Group Federation: eth1: 30.30.30.41/255.255.255.0 [30.30.30.0 network] MTU 9000

2019-10-27T01:08:45.707Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop msgprotEvtLoop (#1) quota=10 limit=11

2019-10-27T01:08:45.708Z INFO 0x7fc0d1103840 [:] [tia.interface] tiainterface.cpp:93 Creation option 0, production mode true

2019-10-27T01:08:45.708Z INFO 0x7fc0d1103840 [:] [storage.devicemanager] devicemanager.cpp:178 Message: Creating Hardware Factory (from function _createDeviceFactory)

2019-10-27T01:08:45.708Z INFO 0x7fc0d1103840 [:] [hal.halclientfactory] halclientfactory.cpp:39 Using /var/svtfs/svt-hal/0/svt-hal.sock as socket file for hal

2019-10-27T01:08:45.709Z INFO 0x7fc0d1103840 [:] [storage.stgmgr.ssdreadcache] SSDReadCache.cpp:262 SSDReadCache(): SSDReadCache - enabled, reads to skip=0, flushes per cycle=10, activation threshold=1000, perf counters disabled, max tracing=disabled, bufferedio=enabled, divisor=0, memsize=570MiB

2019-10-27T01:08:45.709Z INFO 0x7fc0d1103840 [:] [storage.stgmgr.ssdreadcache] SSDReadCache.cpp:1966 _startCounters(): Initializing counters

2019-10-27T01:08:45.709Z INFO 0x7fc0d1103840 [:] [storage.writerequestallocator] WriteRequestMemoryPool.hpp:33 Allocated 0MB for WriteRequest objects

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [objectstore.store] objectstore.cpp:370 OCL Enable MetaData PassThrough 1.

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:72 Using 256 nfs serivice threads

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:74 rpc rx is done in rpc threads

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:79 no limit on maximum rpc tx threads

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:83 Max connections: 1024

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:84 Max events: 128

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:85 Max read size: 131072

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:86 Max write size: 131072

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:93 Internal max read size: 1048576

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:94 Internal max write size: 1048576

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [nfsd.nfsd] nfsd.cpp:95 Requests longer than 10000 milliseconds are logged

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [obt.sendtools] netsend.cpp:66 NetSend: queue full timeout set to 20000 msec

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [obt.sendtools] netsend.cpp:66 NetSend: queue full timeout set to 20000 msec

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [filesystem.obtc.fsobtconnector] fsobtconnector.cpp:108 FsObtConnector : set AsyncTimeout to 20000 msec

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [filesystem.obtc.fsobtconnector] fsobtconnector.cpp:113 FsObtConnector: set SyncTimeout to 30000 msec

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [filesystem.obtc.fsobtconnector] fsobtconnector.cpp:116 FsObtConnector: set mIterations to 30

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [filesystem.obtc.fsobtconnector] fsobtconnector.cpp:135 FsObtConnector: ObtThreadCount fsystem<64> rebuildnorm<0> rebuildbulk<32> rebuildresp<8> proxy<64> proxyls<2> longrun<8> fsystemresp<1> fsproxyresp<1> fslongrunresp<1>

2019-10-27T01:08:45.710Z INFO 0x7fc0d1103840 [:] [filesystem.obtc.fsobtconnector] fsobtconnector.cpp:137 FsObtConnector : maxMsgRoundTripTimes<61>

2019-10-27T01:08:45.711Z INFO 0x7fc0d1103840 [:] [filesystem.fsmgr.filesystemmgr] filesystemmgr.cpp:1213 LogFilter.1: counter 0, reset limit=100 avg=4/h flt=18000 secs

2019-10-27T01:08:45.711Z INFO 0x7fc0d1103840 [:] [filesystem.fsmgr.filesystemmgr] filesystemmgr.cpp:1213 LogFilter.2: counter 0, reset limit=800 avg=40/h flt=36000 secs

2019-10-27T01:08:45.712Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop PicoSvcEvtLoop (#2) quota=20 limit=60

2019-10-27T01:08:45.712Z INFO 0x7fc0d1103840 [:] [cfgdb.transport.keepalivemanager] keepalivemanager.cpp:42 Cfgdb KeepAliveManager started. Send timeout 00:01:00. Receive timeout 00:01:30. Will check every 00:00:30

2019-10-27T01:08:45.713Z INFO 0x7fc0d1103840 [:] [cfgdb.transport.transport] transport.cpp:181 CfgDB set up for 'with MYFD' version of communication

2019-10-27T01:08:45.713Z INFO 0x7fc0d1103840 [:] [cfgdb.node.nodemanagerservice] nodemanagerservice.cpp:251 Create table

2019-10-27T01:08:45.714Z INFO 0x7fc0d1103840 [:] [cfgdb.archive.monitor.archivemonitor] archivemonitor.cpp:58 Registering archive disk and txn row threshold monitors

2019-10-27T01:08:45.743Z WARN 0x7fc0d1103840 [:] [control.phonehome.phonehomemonitor] phoneHomeMonitor.cpp:1688 file /var/tmp/build/event/control/PlatformUpgradeHostrebootNotrequiredEvent.xml does not contain a phone home attribute

2019-10-27T01:08:45.768Z WARN 0x7fc0d1103840 [:] [control.phonehome.phonehomemonitor] phoneHomeMonitor.cpp:1688 file /var/tmp/build/event/control/PlatformUpgradeHostrebootRequiredEvent.xml does not contain a phone home attribute

2019-10-27T01:08:45.769Z WARN 0x7fc0d1103840 [:] [control.phonehome.phonehomemonitor] phoneHomeMonitor.cpp:1688 file /var/tmp/build/event/control/IdentitystoreCloudSyncEvent.xml does not contain a phone home attribute

2019-10-27T01:08:45.770Z WARN 0x7fc0d1103840 [:] [control.phonehome.phonehomemonitor] phoneHomeMonitor.cpp:1688 file /var/tmp/build/event/control/PlatformUpgradeCleanshutdownSucceededEvent.xml does not contain a phone home attribute

2019-10-27T01:08:45.799Z INFO 0x7fc0d1103840 [:] [control.phonehome.phonehomemonitor] phoneHomeMonitor.cpp:482 assignCollectionTime: collectionTime 01:15:00

2019-10-27T01:08:45.799Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop ArbDiscoEvtLoop (#3) quota=10 limit=11

2019-10-27T01:08:45.799Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop DmxMgrSvEvtLoop (#4) quota=10 limit=11

2019-10-27T01:08:45.799Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop IPFailEvtLoop (#5) quota=10 limit=11

2019-10-27T01:08:45.799Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop HiveMgrEvtLoop (#6) quota=10 limit=11

2019-10-27T01:08:45.799Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop TopoDiscEvtLoop (#7) quota=10 limit=11

2019-10-27T01:08:45.800Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop HivePlcEvtLoop (#8) quota=10 limit=11

2019-10-27T01:08:45.800Z INFO 0x7fc0d1103840 [:] [iothreadmgr] iothread.cpp:42 Using 512KB stacks for I/O threads

2019-10-27T01:08:45.800Z INFO 0x7fc0d1103840 [:] [iothreadmgr] iothread.cpp:43 I/O credit pool has 128 credits

2019-10-27T01:08:45.800Z INFO 0x7fc0d1103840 [:] [iothreadmgr] iothread.cpp:44 I/O credit pool credit usage based on 65536 object size

2019-10-27T01:08:45.805Z INFO 0x7fc0d1103840 [:] [control.tz.timezone] timezone.cpp:221 Time zone database @ /var/tmp/build/etc/date_time_zonespec.csv loaded

2019-10-27T01:08:45.805Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop DR_VacuuEvtLoop (#9) quota=10 limit=11

2019-10-27T01:08:45.806Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop FDVwMgrEvtLoop (#10) quota=10 limit=11

2019-10-27T01:08:45.806Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop ESvcEvtLoop (#11) quota=10 limit=11

2019-10-27T01:08:45.806Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop IAASEvtLoop (#12) quota=10 limit=11

2019-10-27T01:08:45.806Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop OverlayEvtLoop (#13) quota=10 limit=11

2019-10-27T01:08:45.806Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop FDTSvcEvtLoop (#14) quota=10 limit=11

2019-10-27T01:08:45.806Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop BTPEvtLoop (#15) quota=10 limit=11

2019-10-27T01:08:45.807Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop AsyncPVSEvtLoop (#16) quota=10 limit=11

2019-10-27T01:08:45.807Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop IWOEvtLoop (#17) quota=10 limit=11

2019-10-27T01:08:45.807Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:409 Local Node ID is 42343c08-d9a9-1874-2159-026beb24aa51...

2019-10-27T01:08:45.807Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:595 Starting application stack for instance 0...

2019-10-27T01:08:45.807Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting StartupShutdown

2019-10-27T01:08:45.807Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started StartupShutdown

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Control Plane Svc monitor DSV

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.svc.dsv.controlsvcdsvserver] rpcserver.cpp:117 Creating threaded thrift server (name 'Control Plane Svc monitor DSV' if 'localhost' port 7220 maxConnections 1)...

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.svc.dsv.controlsvcdsvserver] rpcserver.cpp:128 Thrift server has been created.

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.svc.dsv.controlsvcdsvserver] rpcserver.cpp:134 Starting RPC server thread...

2019-10-27T01:08:45.808Z INFO 0x7fc08ffff700 [:] [control.svc.dsv.controlsvcdsvserver] rpcserver.cpp:185 Starting thrift server on port 7220

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.svc.dsv.controlsvcdsvserver] rpcserver.cpp:157 RPC server thread has been started.

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Control Plane Svc monitor DSV

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Management Interface Manager

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Management Interface Manager

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Federation Interface Manager

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Federation Interface Manager

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Storage Interface Manager

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [net.ifmgr.storageinterfacemanager] storageinterfacemanager.cpp:45 Storage network interface is eth2

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [net.ifmgr.storageinterfacemanager] storageinterfacemanager.cpp:55 Our storage address is 20.20.20.42/255.255.255.0

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Storage Interface Manager

2019-10-27T01:08:45.808Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Message Service

2019-10-27T01:08:45.809Z INFO 0x7fc0d1103840 [:] [net.messaging.svtpv0server] svtpv0server.cpp:48 Starting an svtpv0 server on 30.30.30.41:22122

2019-10-27T01:08:45.809Z INFO 0x7fc0d1103840 [:] [net.messaging.svtpv0server] svtpv0server.cpp:48 Starting an svtpv0 server on 192.168.205.92:22122

2019-10-27T01:08:45.809Z INFO 0x7fc0d1103840 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 18 joined by Relay, encryption disabled = 0

2019-10-27T01:08:45.809Z INFO 0x7fc0d1103840 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 32786 joined by Relay, encryption disabled = 0

2019-10-27T01:08:45.810Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Message Service

2019-10-27T01:08:45.810Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Federation Service

2019-10-27T01:08:45.810Z INFO 0x7fc0d1103840 [:] [common.classlib.eventloop] eventloop.cpp:43 Created eventloop FedEvtLoop (#18) quota=10 limit=11

2019-10-27T01:08:45.925Z INFO 0x7fc0d1103840 [:] [net.messaging.messageservice] messageserviceimpl.cpp:227 Adding peer 42063809-54df-b3ed-0dda-104520148721 at { {30.30.30.51:22122, 192.168.210.92:22122} }

2019-10-27T01:08:45.925Z INFO 0x7fc0d1103840 [:] [net.messaging.messageservice] messageserviceimpl.cpp:227 Adding peer 423c335f-9722-a0bf-5bb6-f2f8c6474608 at { {30.30.30.21:22122, 192.168.200.92:22122} }

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [net.federation.federationserviceimpl] federationserviceimpl.cpp:107 42343c08-d9a9-1874-2159-026beb24aa51 has transitioned from UNKNOWN/UNKNOWN to ALIVE/NOTREADY

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Federation Service

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Group RPC Service

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 11 joined by GroupRpcService, encryption disabled = 0

2019-10-27T01:08:45.944Z INFO 0x7fc08dffb700 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 14 joined by ESWIM, encryption disabled = 0

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 32779 joined by GroupRpcService, encryption disabled = 0

2019-10-27T01:08:45.944Z INFO 0x7fc08dffb700 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 32782 joined by ESWIM, encryption disabled = 0

2019-10-27T01:08:45.944Z INFO 0x7fc08dffb700 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 16398 joined by ESWIM, encryption disabled = 0

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Group RPC Service

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting Analytics Service

2019-10-27T01:08:45.944Z INFO 0x7fc08dffb700 [:] [net.messaging.messageservice] messageserviceimpl.cpp:214 Session 8206 joined by ESWIM, encryption disabled = 0

2019-10-27T01:08:45.944Z INFO 0x7fc0d1103840 [:] [analytics.analyticsservice] analyticsservice.cpp:112 Analytics service is starting for nodeId 42343c08-d9a9-1874-2159-026beb24aa51

2019-10-27T01:08:45.947Z ERROR 0x7fc0d1103840 [:] [svtfs.app] sqlitelogbridge.cpp:69 unknown error (283) recovered 338 frames from WAL file /ctrdb/svtctr.db.0-wal

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [analytics.ctrmsgendpoint] ctrmsgendpoint.cpp:507 Analytics message endpoint is starting.

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started Analytics Service

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting I/O Thread Manager

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:788 Started I/O Thread Manager

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:763 Starting TIA Manager

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:1111 Enter start()

2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:218 The HW driver is ready.

2019-10-27T01:08:45.952Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:1136

~~~~ svtfs.xml configurations ~~~~

UnitTestFile : /var/svtfs/0/myconf/nonstatic/tiamanager_unittest.xml

NvramDataFile : /var/svtfs/0/pooldir/tiamanager_nvram

NvramDataFileSize : 512

NumDmaWriteEngines : 2

HardwareHash : 1

2019-10-27T01:08:45.952Z INFO 0x7fc0d1103840 [:] [tia.async.device] tiadevice.cpp:322 issuing GET_CARD_STATUS_IOCTL...

2019-10-27T01:08:45.952Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:391 TIA HW is in FATAL ERROR state...

2019-10-27T01:08:45.952Z ERROR 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:1147 sending TIA board Firmware Dead Exception: IA_FIRMWARE_DEAD_RESP_TYPE

2019-10-27T01:08:45.952Z FATAL 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:779 TIA Manager returned error -1 from start()

2019-10-27T01:08:45.952Z FATAL 0x7fc0af7fe700 [:] [control.controlplane] controlplane.cpp:988 Stopping stack due to event of type: com.simplivity.event.tia.firmware.unresponsive

2019-10-27T01:08:45.952Z ERROR 0x7fc0af7fe700 [Invalid:] [cfgdb.sql.sqlconnectionfactory] sociconnection.cpp:221 Execute SQL query failed: Session is not connected.

svtsupport@omnicube-ip205-92:/var/svtfs/0/log$

</quote>

When issuing "start svtfs":

svtsupport@omnicube-ip205-92:/var/svtfs/0/log$ start svtfs

start: Rejected send message, 1 matched rules; type="method_call", sender=":1.6" (uid=1003 pid=3360 comm="start svtfs ") interface="com.ubuntu.Upstart0_6.Job" member="Start" error name="(unset)" requested_reply="0" destination="com.ubuntu.Upstart" (uid=0 pid=1 comm="/sbin/init ")

svtsupport@omnicube-ip205-92:/var/svtfs/0/log$
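
The `ERROR` line from `sqlitelogbridge.cpp` ("unknown error (283) recovered 338 frames from WAL file") is probably not related to the outage. In SQLite's `sqlite3.h`, 283 is the extended code `SQLITE_NOTICE_RECOVER_WAL`: primary code `SQLITE_NOTICE` (27) with extended bits `1 << 8`. SQLite logs it when it replays a write-ahead log that was left behind, typically because the database was not closed cleanly. Below is a small Python sketch that splits such a code. The helper name `decode_sqlite_code` is hypothetical, and only a few constants are copied from `sqlite3.h`:

```python
from typing import Tuple

# Subset of result codes copied from SQLite's sqlite3.h (not exhaustive).
SQLITE_NOTICE = 27
SQLITE_WARNING = 28

PRIMARY_NAMES = {SQLITE_NOTICE: "SQLITE_NOTICE", SQLITE_WARNING: "SQLITE_WARNING"}
EXTENDED_NAMES = {
    SQLITE_NOTICE | (1 << 8): "SQLITE_NOTICE_RECOVER_WAL",       # 283
    SQLITE_NOTICE | (2 << 8): "SQLITE_NOTICE_RECOVER_ROLLBACK",  # 539
    SQLITE_WARNING | (1 << 8): "SQLITE_WARNING_AUTOINDEX",       # 284
}


def decode_sqlite_code(code: int) -> Tuple[str, str]:
    """Hypothetical helper: split an extended result code into (primary, extended) names."""
    primary = code & 0xFF  # the low 8 bits hold the primary result code
    primary_name = PRIMARY_NAMES.get(primary, f"primary code {primary}")
    extended_name = EXTENDED_NAMES.get(code, f"extended code {code}")
    return primary_name, extended_name


if __name__ == "__main__":
    print(decode_sqlite_code(283))
    # → ('SQLITE_NOTICE', 'SQLITE_NOTICE_RECOVER_WAL')
```

A notice-class code like this reports a recovery that succeeded, so the TIA lines that follow it are the more likely lead.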

10-27-2019 04:29 AM

Re: Legacy Simplivity Lenovo - Datastore is not mounting

2019-10-27T01:08:45.952Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:391 TIA HW is in FATAL ERROR state...

This points to a problem with the accelerator card (the TIA hardware in the log). Since you have already used the KB article to remove the nostart file, it's likely that the accelerator card needs to be replaced.
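
The reasoning above (a "TIA HW is in FATAL ERROR state" line, followed by the control plane stopping the stack) can be checked mechanically across a whole log. Below is a minimal Python sketch, assuming the line layout seen in this excerpt holds for the rest of the file. The regex, the marker strings, and the function names are illustrative assumptions, not a SimpliVity tool, and a match is only a hint for support, not a diagnosis.

```python
import re
from typing import Iterable, Iterator, List, NamedTuple

# Layout inferred from the excerpt in this thread (an assumption, not a documented format):
# <timestamp> <LEVEL> <thread> [<ctx>] [<component>] <file>:<line> [<message>]
LINE_RE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<thread>0x[0-9a-f]+)\s+"
    r"\[[^\]]*\]\s+\[(?P<component>[^\]]+)\]\s+(?P<src>\S+)(?:\s+(?P<msg>.*))?$"
)

# Message fragments seen in this thread when the stack aborts on the TIA card.
TIA_ABORT_MARKERS = (
    "TIA Manager returned error",
    "event.tia.firmware.unresponsive",
)

# A few lines copied verbatim from the log above.
SAMPLE = """\
2019-10-27T01:08:45.951Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:218 The HW driver is ready.
2019-10-27T01:08:45.952Z INFO 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:391 TIA HW is in FATAL ERROR state...
2019-10-27T01:08:45.952Z ERROR 0x7fc0d1103840 [:] [tia.manager.manager] tiamanager.cpp:1147 sending TIA board Firmware Dead Exception: IA_FIRMWARE_DEAD_RESP_TYPE
2019-10-27T01:08:45.952Z FATAL 0x7fc0d1103840 [:] [control.controlplane] controlplane.cpp:779 TIA Manager returned error -1 from start()
2019-10-27T01:08:45.952Z FATAL 0x7fc0af7fe700 [:] [control.controlplane] controlplane.cpp:988 Stopping stack due to event of type: com.simplivity.event.tia.firmware.unresponsive
"""


class LogEntry(NamedTuple):
    ts: str
    level: str
    component: str
    src: str
    msg: str


def parse(lines: Iterable[str]) -> Iterator[LogEntry]:
    """Yield structured entries; non-matching lines (config dumps, blanks) are skipped."""
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:
            yield LogEntry(m["ts"], m["level"], m["component"], m["src"], m["msg"] or "")


def startup_failures(entries: Iterable[LogEntry]) -> List[LogEntry]:
    """ERROR/FATAL entries, plus the INFO line that reports the TIA hardware state."""
    return [e for e in entries
            if e.level in ("ERROR", "FATAL") or "FATAL ERROR state" in e.msg]


def tia_card_suspect(entries: Iterable[LogEntry]) -> bool:
    """Heuristic: True if a FATAL entry says the stack stopped because of the TIA card."""
    return any(e.level == "FATAL" and any(mk in e.msg for mk in TIA_ABORT_MARKERS)
               for e in entries)


if __name__ == "__main__":
    entries = list(parse(SAMPLE.splitlines()))
    for e in startup_failures(entries):
        print(e.ts, e.level, e.component, e.msg)
    print("TIA card suspect:", tia_card_suspect(entries))
```

To scan a real file, pass an open file handle to `parse()` instead of `SAMPLE.splitlines()`. The thread only shows the directory `/var/svtfs/0/log`, not the log file name.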

I am an HPE employee

[Any personal opinions expressed are mine, and not official statements on behalf of Hewlett Packard Enterprise]

10-28-2019 11:54 PM

Re: Legacy Simplivity Lenovo - Datastore is not mounting

Is there a way for you to open a ticket for me?

10-29-2019 01:19 AM

Re: Legacy Simplivity Lenovo - Datastore is not mounting

Not my department, I'm afraid.

You can check the current warranty status here:

https://support.hpe.com/hpsc/wc/public/home

Contact details are below:

https://h20195.www2.hpe.com/v2/Getdocument.aspx?docname=A00039121ENW

I am an HPE employee

[Any personal opinions expressed are mine, and not official statements on behalf of Hewlett Packard Enterprise]