10-21-2020 12:57 AM


Problem with Upgrade 3.7.8.232 to 4.0.1

Dear friends

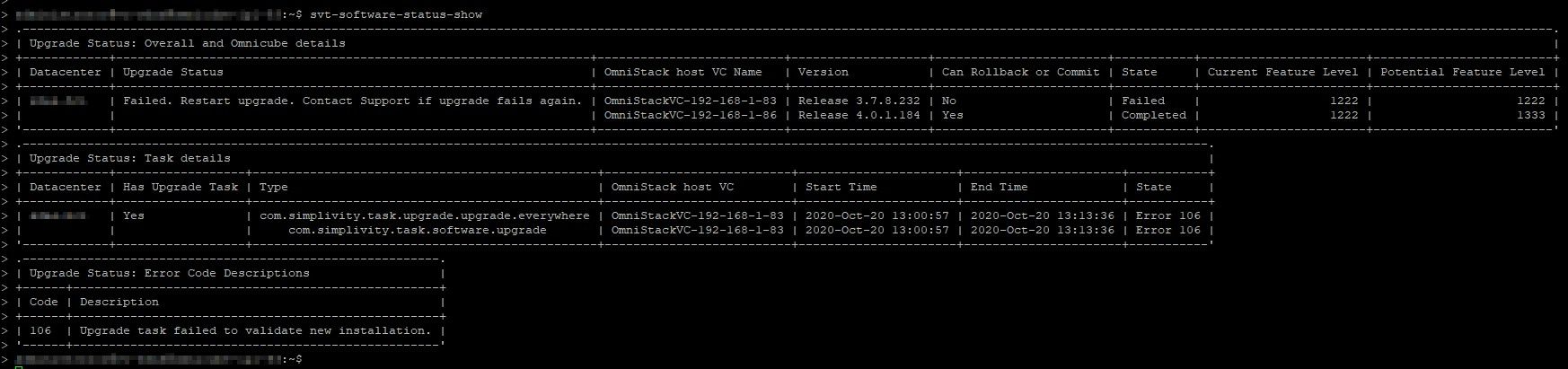

I am trying to upgrade from 3.7.8.232 to 4.0.1.184 with the CLI. The first OVC upgraded correctly, but the second one gives me an error. See the picture.

The upgrade process looks good, but after the last restart the OVC rolls back to 3.7.8.232. The same problem occurs with Upgrade Manager.

Does anyone have an idea?

Kind regards

Andreas


10-21-2020 05:58 AM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Hello @Andy3812

Thank you for using the HPE SimpliVity Forum.

To determine what caused the upgrade to fail, we need to look at the upgrade log file, /var/log/svt-upgrade.log. You can inspect the file and paste the relevant output here.

To get access to the file you need to elevate to root in the OVC:

sudo su

source /var/tmp/build/bin/appsetup

Grep for FAILED or ERROR messages during your attempt to update. This file can have output from previous upgrades so make sure you just select the most recent output. You could also just attach the entire log file.
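As a minimal sketch of that filtering step: the hypothetical helper `recent_failures` below prints only the ERROR and FAILED lines from the newest day recorded in the log. It assumes every entry starts with a `YYYY/MM/DD HH:MM:SS` timestamp, which is the format of the log lines quoted later in this thread. It is not an HPE tool, just plain `awk` and `grep`.

```shell
#!/bin/sh
# Print FAILED/ERROR lines from the newest day recorded in an upgrade log.
# Assumption: entries start with a "YYYY/MM/DD HH:MM:SS" timestamp.
# recent_failures is a hypothetical helper name, not part of OmniStack.
recent_failures() {
    logfile="$1"
    # Remember the date of the last timestamped line; lines without a
    # leading date (for example raw ssh output) are skipped.
    last_day=$(awk '$1 ~ "^[0-9]+/[0-9]+/[0-9]+$" { d = $1 } END { print d }' "$logfile")
    [ -n "$last_day" ] || return 1
    # Keep only that day's lines that mention ERROR or FAILED.
    grep "^$last_day " "$logfile" | grep -E 'ERROR|FAILED'
}
```

It could be called as `recent_failures /var/log/svt-upgrade.log`. If two upgrade attempts ran on the same day, or one attempt ran past midnight, the date filter is too coarse and the output still needs to be trimmed by hand.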

Thanks.

I work at HPE

HPE Support Center offers support for your HPE services and products when and how you need it. Get started with HPE Support Center today.

[Any personal opinions expressed are mine, and not official statements on behalf of Hewlett Packard Enterprise]


10-21-2020 11:43 PM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Thanks for your help.

In svt-upgrade.log I found the following:

...

2020/10/20 13:08:28 INFO> SSACLI.pm:744 Controller::Factory::HP::SSACLI::buildPhysicalDrives - Found 9 physical drive(s)

2020/10/20 13:08:28 INFO> HP.pm:121 System::HP::overrideCustomerVisibleModelString - System Model: ProLiant DL380 Gen10; Customer Visible Model: HPE SimpliVity 380 Series 4000

2020/10/20 13:08:28 INFO> Base.pm:181 System::Base::_setCustomerVisibleModelStrings - Model strings [customerVisibleModel: HPE SimpliVity 380 Series 4000] [model: ProLiant DL380 Gen10]

2020/10/20 13:08:28 WARN> SystemManifest.pm:107 SystemManifest::SystemManifest::init - No system manifest could be loaded

2020/10/20 13:08:28 INFO> PreflightPostflight.pm:44 Common::PreflightPostflight::printSuccess - +Passed+ Instantiate HAL and update manifest

2020/10/20 13:08:28 INFO> postflight.pl:182 Postflight::run - Postflight action: Check if system supports Media Lifetime Manager and set svtfs static config entry

2020/10/20 13:08:28 INFO> Statusfile.pm:268 Util::Statusfile::atStep - 70% through step 1 (Booting up with new version): 3% of total

2020/10/20 13:08:28 INFO> PreflightPostflight.pm:44 Common::PreflightPostflight::printSuccess - +Passed+ Init Media Lifetime Manager config setting

2020/10/20 13:08:28 INFO> postflight.pl:182 Postflight::run - Postflight action: Reset Passthru.map on the ESXi host to ensure passthru map LSI reset settings are correct

2020/10/20 13:08:28 INFO> Statusfile.pm:268 Util::Statusfile::atStep - 74% through step 1 (Booting up with new version): 4% of total

root@192.168.1.82: Permission denied (publickey,keyboard-interactive).

2020/10/20 13:08:32 ERROR> PreflightPostflight.pm:34 Common::PreflightPostflight::printFailed - !FAILED! Reset Passthru.map on the ESXi host

2020/10/20 13:08:32 ERROR> PreflightPostflight.pm:39 Common::PreflightPostflight::printFailed - Could not establish SSH connection to ESXi host 192.168.1.82: unable to establish master SSH connection: bad password or master process exited unexpectedly

2020/10/20 13:08:32 ERROR> postflight.pl:228 Postflight::printFailed - PostFlight failure reason: Error in postflight failed: Could not establish SSH connection to ESXi host 192.168.1.82: unable to establish master SSH connection: bad password or master process exited unexpectedly

2020/10/20 13:08:32 WARN> postflight.pl:238 Postflight::printFailed - PostFlight failure detected; Initiating automatic rollback

2020/10/20 13:08:32 INFO> StateMachine.pm:29 Util::StateMachine::Factory - Returning Partition-based state machine instance

2020/10/20 13:08:32 INFO> Partition.pm:73 Util::StateMachine::Partition::init - At init, the current state is Pre-commit

2020/10/20 13:08:32 INFO> Partition.pm:99 Util::StateMachine::Partition::dumpState - /dev/sda4 -> /

2020/10/20 13:08:32 INFO> Partition.pm:103 Util::StateMachine::Partition::dumpState - /dev/sda4: LABEL="/" UUID="26cb0a6f-0bf7-492b-8a53-f0b3f5e6e663" TYPE="ext4" PARTUUID="4484968c-3b8d-4148-b5e6-284ce9a337f1"

2020/10/20 13:08:32 INFO> Partition.pm:103 Util::StateMachine::Partition::dumpState -

2020/10/20 13:08:32 INFO> Partition.pm:103 Util::StateMachine::Partition::dumpState -

2020/10/20 13:08:32 INFO> Partition.pm:99 Util::StateMachine::Partition::dumpState - /dev/sda1 -> /old

...

At 13:08:32 the log shows that the OVC cannot connect to the ESXi host. I can log in to the host with PuTTY, so I think the OVC is using a wrong password, or maybe a wrong key. How can I check that?


10-28-2020 05:52 AM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Hello @Andy3812

Sorry for the delayed response. I believe the error is related to this IP:

Could not establish SSH connection to ESXi host 192.168.1.82

Most likely this IP is not the management IP of the ESXi host but either the storage or the federation IP. Could you confirm?
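To see which address the upgrade actually tried, the log itself can be searched. The sketch below uses a hypothetical helper, `failed_ssh_hosts`, that lists the distinct IPs named in the "Could not establish SSH connection to ESXi host" messages. It assumes that exact wording, as in the excerpt posted above, and a `grep` that supports `-o`.

```shell
#!/bin/sh
# List the distinct ESXi addresses the postflight step failed to reach.
# Assumption: failures are logged with the wording
# "Could not establish SSH connection to ESXi host <ip>".
# failed_ssh_hosts is a hypothetical helper name, not part of OmniStack.
failed_ssh_hosts() {
    grep -oE 'SSH connection to ESXi host [0-9]+(\.[0-9]+){3}' "$1" |
        awk '{ print $NF }' |
        sort -u
}
```

Running `failed_ssh_hosts /var/log/svt-upgrade.log` on the OVC gives the list to compare against the host's management address. On the ESXi side, `esxcli network ip interface ipv4 get` should show the VMkernel interface addresses for that comparison.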

I work at HPE



10-29-2020 05:42 AM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Hi @Andy3812 ,

Thank you for using HPE SimpliVity Forum.

Can you run the command below from the DCUI screen of the affected node and check whether the failure count for root is 0?

pam_tally2 --user=root

The desired output should look like this:

Login Failures Latest failure From

root 0 mm/dd/yy xx:xx:xx xx.xx.xx.xx
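If the count needs to be checked on several nodes, the value can be pulled out of that output with a small filter. This is only a sketch: `root_failure_count` is a hypothetical helper that assumes the second column of the `root` row is the failure count, as in the sample output above.

```shell
#!/bin/sh
# Read pam_tally2-style output on stdin and print the failure count for root.
# Assumption: the row starts with "root" and its second column is the count.
# root_failure_count is a hypothetical helper name.
root_failure_count() {
    awk '$1 == "root" { print $2; found = 1 }
         END { if (!found) exit 1 }'
}
```

On the affected host this would be used as `pam_tally2 --user=root | root_failure_count`. Any value other than 0 suggests that failed logins have been recorded against root.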


11-09-2020 12:16 AM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Thank you for your answer.

The ESXi host has the IP 192.168.1.82. The IP of the OmniStack VC is 192.168.1.83.

In vCenter I can see errors on the host: "Logon from root@192.168.1.83 not possible". I think the OmniStack VC is trying a wrong password. How can I check that, or how can I change the password? I am able to connect to the host with PuTTY.

Kind regards


11-09-2020 12:38 PM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Hi @Andy3812 ,

We first need to reset the failed-login count for root on the host to zero, and then update the digital vault on the affected OVC.

Because this requires a few elevated commands, please log a support case for it.

The support team will be happy to assist you.


02-06-2021 08:36 AM - edited 02-06-2021 08:37 AM


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Hi,

We faced a different but very similar issue. Our update path was 3.7.10 to 4.0.1 U1, and during the update we saw that the "CL Name" of one node was missing, with error code 103.

These two commands got us out of this situation:

dsv-update-vcenter --server vcsaip --username 'administrator@vsphere.local' --password 'vcsapassword'

dsv-digitalvault-init --hmsuser 'administrator@vsphere.local' --hostip esxiip --hostuser 'root' --hmspassword 'vcsapassword' --hostpassword 'esxipassword'


09-13-2024 03:18 PM - last edited on 09-16-2024 02:19 AM by support_s


Re: Problem with Upgrade 3.7.8.232 to 4.0.1

Going from OmniStack 4.2 to 5.1, I had the same issue on a few nodes. Running these two commands fixed it, and the OmniStack upgrade completed.

dsv-digitalvault-init --hmsuser administrator@vsphere.local --hostip 10.1.9.xxx --hostuser root

dsv-update-vcenter --username administrator@vsphere.local --server 172.25.x.x

(The last one requires a reboot.)

The error I had appeared in the CLI output:

FATAL> ClusterFedData.pm:146 Util::ClusterFedData::getClusterNodeToMgmtIpMap - Failed to get map: TaskException magic code '38' (see errors.xml to decode) at /scratch/upgrade/src/os/upgrade/install/Util/SvtControl.pm line 116

- Tags:

- virtualization