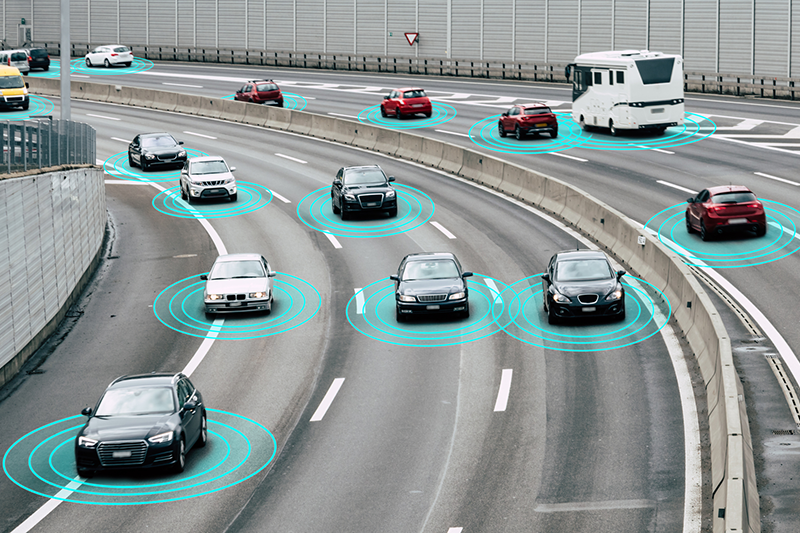

Autonomous vehicle development drives extreme storage requirements

Designing an AI model for autonomous driving is incredibly complex. It requires simulating every possible scenario and handling vast data volumes. Cray ClusterStor HPC storage is the only solution that can address the extreme requirements of autonomous driving applications.

We can probably all agree that driving a car today is a radically different experience from what it was just a few years ago. The next generation of vehicles, self-driving cars, is poised to take modern automotive features a quantum leap forward and provide totally different ways to get from point A to point B.

One reason fully autonomous vehicles are still a few years off is that designing an AI model that can drive a car safely and accurately is turning out to be incredibly complicated. The two main challenges are simulating every driving scenario that could occur, so the model can be trained to identify the appropriate reaction, and handling the vast volume and variety of data generated by fleets of training cars.

These challenges are driving (no pun intended) some pretty extreme compute and storage requirements, which is where Cray comes in. We’re committed to supporting autonomous driving projects by providing highly scalable, efficient, and cost-effective technologies that enable customers to arrive at results faster with larger datasets.

Our customers all reported a set of similar challenges when attempting to use enterprise storage technologies for their autonomous driving projects:

- Gaining enough storage capacity to handle multiple petabytes of data (scalability)

- Ingesting and moving data from storage to the compute engine in a timely manner to avoid starving the multi-GPU compute architecture (throughput)

- Providing a cost-effective way to achieve results in a shorter training window without blowing the storage budget (affordability)
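To make the throughput challenge concrete, here is a minimal back-of-the-envelope sketch. It is not a sizing tool and not something taken from any customer project: the class, the function names, and every number in it are hypothetical, and it assumes each GPU consumes training samples at a steady rate with no caching between storage and compute.

```python
from dataclasses import dataclass


@dataclass
class TrainingCluster:
    """Hypothetical description of a multi-GPU training cluster."""
    num_gpus: int
    samples_per_sec_per_gpu: float  # samples each GPU consumes per second
    bytes_per_sample: int           # average size of one training sample


def required_read_throughput(cluster: TrainingCluster) -> float:
    """Aggregate bytes per second storage must sustain to keep every GPU fed."""
    return (cluster.num_gpus
            * cluster.samples_per_sec_per_gpu
            * cluster.bytes_per_sample)


def is_starved(cluster: TrainingCluster, storage_bytes_per_sec: float) -> bool:
    """True if storage delivers less than the GPUs can consume."""
    return storage_bytes_per_sec < required_read_throughput(cluster)


if __name__ == "__main__":
    # Illustrative values only (assumptions, not measurements).
    cluster = TrainingCluster(num_gpus=64,
                              samples_per_sec_per_gpu=500.0,
                              bytes_per_sample=2_000_000)
    need = required_read_throughput(cluster)
    print(f"Required read throughput: {need / 1e9:.1f} GB/s")
    print("Starved at 20 GB/s:", is_starved(cluster, 20e9))
```

Even with these made-up inputs, the arithmetic shows why throughput shows up next to capacity on the list: the requirement grows linearly with both the GPU count and the sample size, so storage that is sufficient for a small pilot can fall well short once the cluster scales.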

To fully understand their requirements, we examined the typical AI workflow for autonomous driving, an extremely data- and compute-intensive process that generally falls into five stages.

We quickly realized that each stage in the workflow has its own technical considerations and therefore requires a unique set of technologies, tools, and capabilities. And so our approach was born: We would examine this complex AI problem in the context of the workflow to help the relevant teams focus on implementing the most appropriate technology for the dominant task in that stage.

For extremely data-intensive applications such as autonomous driving, Cray ClusterStor high-performance storage is the only storage solution on the market today that can seamlessly address these extreme requirements. ClusterStor is HPC storage that was purpose-built to support applications that rely on an increase in data availability to generate better, faster, and more accurate results (which happens to be most supercomputing applications today). Providing this data availability means the storage architecture must both provide higher aggregate throughput for streaming data and deliver more input/output operations per second (IOPS) for random access to smaller amounts of data.

ClusterStor’s unmatched ability to address these requirements has quickly catapulted it to the No. 1 position for storage systems used by the top 100 supercomputer sites in the world.

Our approach to AI has always been rooted in the belief that techniques like machine learning and deep learning are quickly becoming core capabilities for users of supercomputing systems. As traditional supercomputing applications increasingly converge with AI methods, these fundamentally different approaches are being run on the same hardware and have similar requirements for sustained performance and efficiency. Not only do we provide the computing components that power today’s most complex supercomputing applications, but our customer-centric solutions approach means we look at every AI problem in terms of the entire workflow. This helps us hand-select and fine-tune each technology component until we’ve fully addressed each customer’s requirements.

This blog was originally published on cray.com and has been updated and republished here on HPE’s Advantage EX blog.

Uli Plechschmidt

Hewlett Packard Enterprise

twitter.com/hpe_hpc

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/hpc

Uli leads the product marketing function for high performance computing (HPC) storage. He joined HPE in January 2020 as part of the Cray acquisition. Prior to Cray, Uli held leadership roles in marketing, sales enablement, and sales at Seagate, Brocade Communications, and IBM.
