
Keep cool: Lower power usage effectiveness with direct liquid cooling in HPE Apollo systems

Learn how the new, highly dense HPE Apollo 2000 Gen10 Plus system is the most efficient multinode platform delivering real space and power savings to data centers of any size.

Stay cool when you're feeling hot, hot, hot

Moving into the summer months means people are looking for ways to stay cool and beat the heat. But the heat is rising in data centers too—for different reasons.

Processors now exceed 200 W and GPUs operate at over 300 W, and components will only get more power hungry. High-density rack configurations in high performance computing (HPC) are moving from 20 kW to 40 kW, with estimates reaching beyond 70 kW per rack in 2022. I have seen power densities double in seven years. With server lifecycles of three to five years, you need to choose systems and adopt cooling strategies that will stand the test of time while still performing at the highest levels. Data center managers are therefore challenged to optimize OpEx, increase density, and lower power usage effectiveness* (PUE), all while taming this massive heat envelope.
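The arithmetic behind these densities is simple to sketch. The Python below is a minimal, illustrative estimate that assumes a rack's load is just the sum of its nodes' CPU, GPU, and other component power. The node counts and the "other" wattage in the example are hypothetical, not HPE figures. The second helper turns the doubling-in-seven-years observation into an implied compound annual growth rate (about 10% per year).

```python
def rack_power_kw(nodes: int, cpus_per_node: int, cpu_w: float,
                  gpus_per_node: int, gpu_w: float,
                  other_w_per_node: float) -> float:
    """Estimate total rack power (kW) from per-component wattage.

    other_w_per_node is a hypothetical lump sum for memory, NICs,
    storage, and fans; real values depend on the configuration.
    """
    per_node_w = cpus_per_node * cpu_w + gpus_per_node * gpu_w + other_w_per_node
    return nodes * per_node_w / 1000.0


def annual_growth_for_doubling(years: float) -> float:
    """Compound annual growth rate that doubles a quantity in `years` years."""
    return 2.0 ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Hypothetical CPU-only rack: 40 nodes, 2 x 200 W CPUs, 200 W of other load each.
    print(f"CPU-only rack: {rack_power_kw(40, 2, 200, 0, 300, 200):.1f} kW")
    # Hypothetical GPU rack: 16 nodes, 2 x 200 W CPUs, 4 x 300 W GPUs, 300 W other.
    print(f"GPU rack: {rack_power_kw(16, 2, 200, 4, 300, 300):.1f} kW")
    # Growth rate implied by power density doubling in seven years.
    print(f"Implied growth: {annual_growth_for_doubling(7):.1%} per year")
```

Swapping in your own node counts and measured component power gives a first-order check on whether a rack will land in the 20 kW, 40 kW, or 70 kW range.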

How to keep your cool

Much like turning down the AC in your house to keep your drink cool, lowering the ambient air temperature in the data center to cool servers that draw over 1 kW is proving ineffective. Data center managers are taking a closer look at alternative cooling methods, including fully enclosed racks, rear-door heat exchangers, and direct liquid cooling (DLC). What do these technologies have in common? They all use water to cool servers.

There’s something in the water

Just as taking a dip in the pool helps you cool down on a hot day, liquid cooling is a logical alternative: by volume, water can absorb more than 1,000 times as much heat as air for the same temperature rise. Let’s take a look at a few liquid-cooled systems.
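To put the water-versus-air comparison in numbers, the sketch below uses the heat-transport relation Q = ρ · V̇ · c_p · ΔT with approximate textbook properties for water and air near 25°C. These are assumed values, not measurements from any HPE system. The sketch compares heat capacity per unit volume and the flow each fluid needs to carry 1 kW, roughly one of the servers mentioned above, with a 10 K temperature rise.

```python
# Approximate properties near 25 °C and sea-level pressure (textbook values).
WATER_DENSITY_KG_M3 = 997.0
WATER_CP_J_KG_K = 4181.0
AIR_DENSITY_KG_M3 = 1.184
AIR_CP_J_KG_K = 1005.0


def volumetric_heat_capacity(density_kg_m3: float, cp_j_kg_k: float) -> float:
    """Heat stored per cubic metre per kelvin of temperature rise, J/(m^3*K)."""
    return density_kg_m3 * cp_j_kg_k


def flow_for_heat_load(heat_w: float, density_kg_m3: float,
                       cp_j_kg_k: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) needed to carry heat_w with a delta_t_k rise.

    Rearranges Q = rho * V_dot * c_p * dT to solve for V_dot.
    """
    return heat_w / (density_kg_m3 * cp_j_kg_k * delta_t_k)


if __name__ == "__main__":
    ratio = (volumetric_heat_capacity(WATER_DENSITY_KG_M3, WATER_CP_J_KG_K)
             / volumetric_heat_capacity(AIR_DENSITY_KG_M3, AIR_CP_J_KG_K))
    print(f"Water vs air, per unit volume: about {ratio:,.0f}x")
    # Flow needed to carry 1 kW with a 10 K temperature rise.
    air = flow_for_heat_load(1000, AIR_DENSITY_KG_M3, AIR_CP_J_KG_K, 10)
    water = flow_for_heat_load(1000, WATER_DENSITY_KG_M3, WATER_CP_J_KG_K, 10)
    print(f"Air:   {air * 1000:.1f} L/s")
    print(f"Water: {water * 60_000:.2f} L/min")
```

With these property values the ratio comes out in the low thousands, which is why a small water flow can replace a large volume of moving air.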

For organizations that do not need the power density or performance of DLC systems, rear-door heat exchangers may be the right choice. Rear-door heat exchangers replace the standard rear doors on IT rack enclosures. The rack-mounted devices draw cool supply air through their chassis, and the heated exhaust air then passes through a liquid-filled coil that transfers the heat to the liquid, so cooled, room-neutral air flows back into the data center. These systems still use data center air to cool the servers, but they aim to remove some or all of the heat into the data center chilled water system through the liquid-to-air heat exchanger.

A fully enclosed rack solution, like the HPE Adaptive Rack Cooling System (ARCS), is a closed-loop cooling system designed to remove the high levels of heat generated by today’s servers, mass storage, and core networking systems. Equipped with rack infrastructure, cooling, and IT power distribution, ARCS provides a standardized, high-performance cooling approach that allows full rack-power density without requiring extensive data center cooling upgrades. Because ARCS is self-contained, it does not use data center air, and the cooling parameters can be targeted to the specific cluster being cooled. ARCS can use either data center chilled water or warm water to remove the heat.

To meet the demands of the Exascale Era, Cray, a Hewlett Packard Enterprise company, offers Shasta, its next-generation liquid-cooled infrastructure. This cabinet architecture includes many innovative features: it supports the highest-wattage CPUs and GPUs (in excess of 500 W), dramatically reduces interconnect cabling requirements, and reduces operational expense by up to 50% compared to standard air-cooled rack infrastructure.

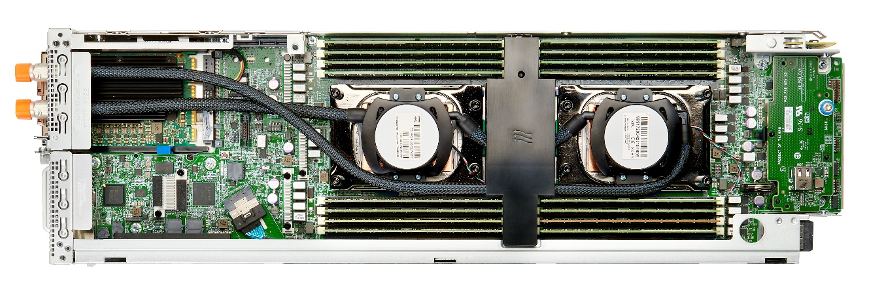

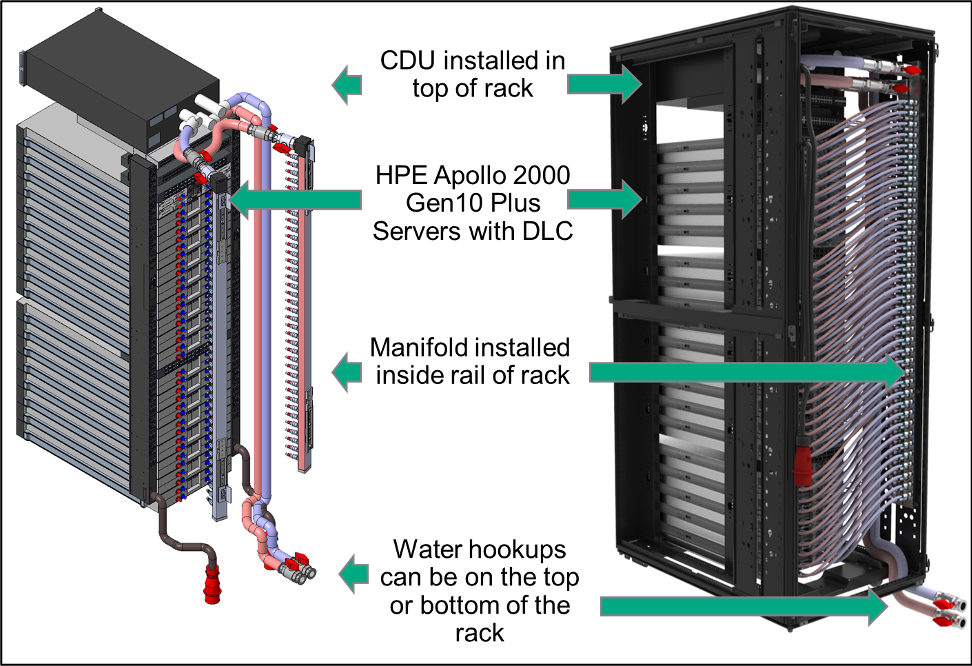

New to the HPE Apollo 2000 Gen10 Plus system portfolio is a direct liquid cooling (DLC) solution, available in August 2020. Apollo DLC uses cold plates and tubing that run coolant over the components within the server and pull heat away from them. A manifold runs the length of the rack, bringing the coolant supply to the servers, and the heated water travels to a coolant distribution unit (CDU) contained within the rack. The CDU connects both to the facility water, which can be supplied at up to 32°C (89.6°F), and to the water returning from the servers.
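As a rough illustration of how a rack-level DLC loop might be sized, the sketch below estimates server-side coolant flow for a given rack load. It assumes plain water as the coolant and a hypothetical `capture_fraction` for the share of heat the cold plates move into the liquid, with the rest still going to room air. It also assumes a 10 K coolant temperature rise in the example. Only the 32°C facility-water limit comes from the post, and real sizing depends on the CDU and coolant specifications.

```python
WATER_DENSITY_KG_M3 = 997.0   # plain water near 25 °C; real coolants may differ
WATER_CP_J_KG_K = 4181.0
MAX_FACILITY_SUPPLY_C = 32.0  # upper facility-water supply temperature quoted in the post


def size_dlc_loop(rack_kw: float, capture_fraction: float,
                  delta_t_k: float, facility_supply_c: float):
    """Return (coolant flow in L/min, heat left for room air in kW).

    capture_fraction is a hypothetical share of rack heat that the cold
    plates move into the liquid; the remainder still goes to room air.
    """
    if facility_supply_c > MAX_FACILITY_SUPPLY_C:
        raise ValueError("facility water is warmer than the quoted 32 °C limit")
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    liquid_w = rack_kw * 1000.0 * capture_fraction
    # Q = rho * V_dot * c_p * dT, solved for the volumetric flow V_dot (m^3/s).
    flow_m3_s = liquid_w / (WATER_DENSITY_KG_M3 * WATER_CP_J_KG_K * delta_t_k)
    residual_air_kw = rack_kw - liquid_w / 1000.0
    return flow_m3_s * 60_000.0, residual_air_kw


if __name__ == "__main__":
    # Hypothetical 40 kW rack, 80% of heat captured by liquid, 10 K rise, 30 °C facility water.
    flow_l_min, air_kw = size_dlc_loop(40.0, 0.8, 10.0, 30.0)
    print(f"Coolant flow: {flow_l_min:.1f} L/min, residual air load: {air_kw:.1f} kW")
```

Keeping the residual air load explicit is a deliberate choice: even with cold plates, some heat from components that are not plated still has to be handled by room air.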

The benefits of this DLC solution from HPE quickly add up. First and foremost, the solution is fully integrated, installed, and supported by HPE. The plug-and-play design is as easy to install as a rear-door heat exchanger, with only facility water to connect at your data center. DLC allows the HPE Apollo 2000 Gen10 Plus system to support processors over 240 W for HPC and AI applications, so you can deploy the highest-performing CPUs. Cooler-running systems have fewer component failures (notably memory, CPUs, and NICs), which means increased availability, higher infrastructure performance, and better reliability at a lower overall cost than an air-cooled system. In the past, DLC solutions were often too expensive to justify the return, but the Apollo DLC system from HPE is a low-cost solution that consumes up to 75% less fan power at the server. And because the system does not require in-row coolers or secondary plumbing, the price scales linearly with each rack.
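To gauge what the fan-power figure can mean over a year, the sketch below converts a fractional fan-power reduction into annual energy. The 75% value is the post's best case ("up to"). The node count and the 60 W per-node fan power in the example are hypothetical, so measure fan power on real hardware before relying on the result.

```python
HOURS_PER_YEAR = 8760.0  # 365 days x 24 hours, assuming continuous operation


def annual_fan_savings_kwh(nodes: int, fan_w_per_node: float,
                           fan_power_reduction: float) -> float:
    """Energy saved per year (kWh) when server fan power drops by a fraction.

    fan_w_per_node is a hypothetical average; fan_power_reduction is a
    fraction between 0 and 1 (0.75 is the post's "up to" best case).
    """
    if not 0.0 <= fan_power_reduction <= 1.0:
        raise ValueError("fan_power_reduction must be between 0 and 1")
    saved_w = nodes * fan_w_per_node * fan_power_reduction
    return saved_w * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    # Hypothetical rack: 40 nodes averaging 60 W of fan power each.
    print(f"{annual_fan_savings_kwh(40, 60.0, 0.75):,.0f} kWh saved per year")
```

Because fan power is part of the IT load, this saving shows up directly in server energy, in addition to any facility-side cooling savings.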

Accelerating value in HPC

As you search for ways to beat the heat and maximize the performance of your systems, keep in mind that HPE offers a broad set of solutions to fit your unique needs in data centers of all sizes. The Apollo 2000 Gen10 Plus system with DLC is a plug-and-play system designed for the highest-wattage CPUs, allowing you to increase power density and drastically lower overall energy usage, whether you use warm water or chilled water systems.

Be ready for the Exascale Era

Look to the Apollo 2000 Gen10 Plus system to power the HPC workflows that are defining this next era of computing and enterprise digital transformation. Take a virtual 3D tour of the Apollo 2000 Gen10 Plus system now.

Do you need help determining which liquid-cooled solution is right for your business? HPE is here to help, with valuable services and flexible financing options to get you on the right path.

*Power usage effectiveness (PUE) is a ratio that describes how efficiently a data center uses energy: how much of it reaches the computing equipment rather than cooling and other overhead. PUE is the total energy used by the data center facility divided by the energy delivered to the computing equipment. The ideal value is 1.0; the average is about 1.8.
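The PUE definition translates directly into code. This minimal sketch computes PUE from metered energy and shows how much non-IT overhead energy a given PUE implies. The 1,000 kWh IT load and the improved PUE of 1.2 are hypothetical example values; 1.8 is the average cited above.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy includes the IT load, so it cannot be smaller")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Energy spent on cooling and other non-IT overhead at a given PUE."""
    return it_equipment_kwh * (pue_value - 1.0)


if __name__ == "__main__":
    it_load = 1000.0  # hypothetical IT energy for one period, kWh
    print(f"PUE: {pue(1800.0, it_load):.2f}")
    for p in (1.8, 1.2):  # the cited average and a hypothetical improved value
        print(f"PUE {p}: {overhead_kwh(it_load, p):.0f} kWh of overhead")
```

Lowering PUE from 1.8 to 1.2 in this example cuts overhead energy from 800 kWh to 200 kWh for the same IT work, which is the kind of reduction liquid cooling targets.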

Advantage EX Experts

Hewlett Packard Enterprise

twitter.com/hpe_hpc

linkedin.com/showcase/hpe-ai/

hpe.com/info/hpc
