Meet the world’s largest and fastest parallel file system

The Oak Ridge Leadership Computing Facility has announced the deployment of the largest and fastest single file POSIX namespace file system in the world. Here’s what these record-setting specs mean.

This system, built on open source storage technologies like Lustre and ZFS, marks the fourth generation in OLCF’s long history of developing and deploying large-scale, center-wide file systems to power exploration of grand challenge scientific problems.

Orion is based on the hardware and software data path of the Cray ClusterStor E1000 storage system, but the OLCF team is doing the installation and deployment themselves. Not many organizations can do that, but the team at Oak Ridge is one of the most knowledgeable Lustre teams worldwide. In fact, Oak Ridge National Laboratory was the top contributing end-user organization to the latest release of Lustre (2.14), followed by Lawrence Livermore National Laboratory, as shared in the latest Lustre Community Release Update.

The specifications of this record-setting, center-wide file system can be found here.

The usable capacity of Orion is planned to be more than 690 petabytes (PB). That is more than 690,000,000 gigabytes.

The human mind is exceptionally bad at interpreting large numbers. From an evolutionary perspective, our paleolithic ancestors did not have to worry about large numbers. Early humans only really needed a basic sense of small quantities, like the number of people in the clan, how many animals might occupy a certain area, or other cavemanly concerns.

To compensate for our evolutionary shortcomings, we created a “fun fact” infographic that puts the large number of 690 PB in perspective. Did you know that it would take:

- 20,000 years to watch 690 PB of HD movies

- 550 million years for a person to send 690 PB of emails

- A row of 4-drawer filing cabinets lined up side-by-side 3,000 times around the earth to store 690 PB of text data

Not only is the Orion file system the largest, it’s also very fast, with 10 TB/sec aggregate throughput. That is 10,000 GB/sec. In other words, it could download all the movies on Netflix US in less than three seconds¹.

And if you think that is very fast, consider that the even faster in-system all-flash storage layer inside the HPE Cray EX supercomputer of the Frontier exascale machine would be able to do this in a fraction of one second.
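For readers who want to check the numbers, here is a minimal Python sketch of the arithmetic behind the capacity and throughput figures. It assumes decimal (SI) units, and it takes the movie count and movie size from the footnote at the end of this article; the variable names are purely illustrative.

```python
# Back-of-the-envelope check of the capacity and throughput figures.
# Assumption: decimal (SI) units, so 1 PB = 1,000,000 GB and 1 TB = 1,000 GB.
GB_PER_PB = 1_000_000
GB_PER_TB = 1_000

capacity_pb = 690      # planned usable capacity of Orion, as stated in the article
throughput_tb_s = 10   # aggregate throughput, as stated in the article

# Footnote assumptions: roughly 4,000 movies at 6 GB per movie.
movies = 4_000
gb_per_movie = 6

capacity_gb = capacity_pb * GB_PER_PB
throughput_gb_s = throughput_tb_s * GB_PER_TB
catalog_gb = movies * gb_per_movie
transfer_seconds = catalog_gb / throughput_gb_s

print(f"Capacity:      {capacity_gb:,} GB")          # 690,000,000 GB
print(f"Throughput:    {throughput_gb_s:,} GB/sec")  # 10,000 GB/sec
print(f"Catalog size:  {catalog_gb:,} GB")           # 24,000 GB
print(f"Transfer time: {transfer_seconds:.1f} sec")  # 2.4 sec
```

Under these assumptions the catalog comes to 24,000 GB, which at 10,000 GB/sec moves in about 2.4 seconds, consistent with the "less than three seconds" claim.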

The predecessor to Orion was a 250 PB file system called “Alpine.” Why is even more storage capacity needed?

A good example of that is described in HPCwire’s article “Scientists Use Supercomputers to Study Reliable Fusion Reactor Design, Operation”. Each of the simulations consisted of 2 trillion particles and more than 1,000 steps. The data generated by ONE simulation could total a whopping 200 PB, eating up nearly all of Alpine’s storage capacity.
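To put that 200 PB figure next to the two file systems, here is a short sketch of the comparison. It uses only the capacities quoted in this article (250 PB for Alpine, 690 PB planned for Orion) and treats 200 PB as the upper bound for one simulation's output; the variable names are illustrative.

```python
# Share of each file system that one 200 PB simulation output would occupy.
# All three figures are taken from the article; 200 PB is an upper bound.
alpine_pb = 250
orion_pb = 690
simulation_pb = 200

alpine_share = simulation_pb / alpine_pb
orion_share = simulation_pb / orion_pb

# How many such outputs would fit side by side (whole simulations only).
fits_on_alpine = alpine_pb // simulation_pb
fits_on_orion = orion_pb // simulation_pb

print(f"Alpine: {alpine_share:.0%} of capacity, room for {fits_on_alpine}")  # 80%, 1
print(f"Orion:  {orion_share:.0%} of capacity, room for {fits_on_orion}")    # 29%, 3
```

One such output would take about 80% of Alpine but only about 29% of Orion's planned capacity.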

It’s why scientists are anxiously awaiting the opportunity to run their simulation on the new Frontier supercomputer with its much larger parallel file system. In fact, humankind is anxiously awaiting the results of this kind of simulation. The team is pursuing research into nuclear fusion—a clean, abundant energy source that could be a game changer.

In an interview on the Frontier homepage, Amitava Bhattacharjee, principal investigator for the Whole Device Modeling of Magnetically Confined Fusion Plasmas (WDMApp) project in the Exascale Computing Project, says: “Fusion, in as much as it produces virtually unlimited energy from seawater with no carbon footprint, would be the ultimate solution to mankind’s energy needs. I can think of no aspiration greater than this.”

At HPE, we are proud to contribute in a small way to that aspirational goal with our HPE Cray EX supercomputers and our Cray ClusterStor E1000 storage systems as tools for the brightest minds of mankind. It aligns perfectly with HPE’s purpose: advancing the way people live and work.

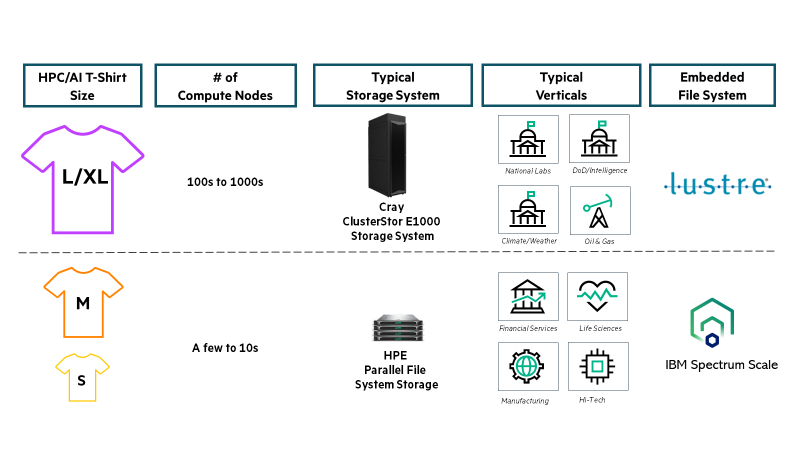

You don’t have to be solving the grand challenges of mankind to take advantage of HPC technologies. Maybe it’s to grow your business through simulation or AI. It’s all important and we have a full portfolio of parallel storage systems for systems of clustered CPU/GPU compute nodes spanning from a few to thousands. The picture below illustrates the positioning.

- Job pipeline congestion due to input/output (IO) bottlenecks leading to missed deadlines/top talent attrition

- High operational cost of multiple “storage islands” due to scalability limitations of current NFS-based file storage

- Exploding costs for fast file storage – at the expense of GPU and/or CPU compute nodes or of other critical business initiatives

There is no better partner for you for end-to-end HPC & AI solutions than HPE. Contact your HPE representative today!

Learn more now

Infographic: Accelerate your innovation – with HPE as your end-to-end partner

White paper: Spend less on HPC/AI storage and more on CPU/GPU compute

And more HPC & AI storage information online at www.hpe.com

Uli Plechschmidt

Hewlett Packard Enterprise

twitter.com/hpe_hpc

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/hpc

1) Assuming 6 GB/movie and roughly 4,000 movies (and of course a hypothetical network connection that would be fast enough).
