New HPE storage system with embedded IBM Spectrum Scale: 4 ways it’s a win for HPC and AI

Learn about the expansion of the parallel storage portfolio that fuses two iconic enterprise brands—IBM Spectrum Scale (FKA GPFS) and HPE ProLiant DL rack servers—in the HPE factory for HPC and AI clusters running modeling and simulation, AI, and high performance data analytics.

An HPE storage system with embedded IBM Spectrum Scale. Really? Yes. Really!

With the new HPE Parallel File System Storage product, HPE is now introducing unique parallel storage for customers that use clusters of HPE Apollo Systems or HPE ProLiant DL rack servers with HPE InfiniBand HDR/Ethernet 200Gb adapters for modeling and simulation, artificial intelligence (AI), machine learning (ML), deep learning (DL), and high-performance analytics workloads.

HPE Parallel File System Storage is the first and only storage product in the market that offers a unique combination of:

- The leading enterprise parallel file system1 (IBM® Spectrum Scale™) in Erasure Code Edition (ECE)

- Running on the leading, cost-effective x86 enterprise servers (HPE ProLiant DL rack servers)

- Shipping with preloaded software from the HPE factory with HPE Operational Support Services for the full HPE product (both hardware and software)

- Without the need to license storage capacity separately, because the IBM Spectrum Scale licensing is included in the HPE product

Here’s why this really is a four-way winning scenario for all stakeholders—customers, channel partners, IBM, and HPE.

What’s in it for customers who are using clusters of GPU- and/or CPU-powered compute nodes like HPE Apollo systems or HPE ProLiant DL rack servers today?

Many of those customers are struggling with the architectural (performance and scalability) or economic ($/TB) limitations of their Scale-Out NAS storage (for example, Dell EMC™ PowerScale™ or NetApp® AFF). They now have access to a new, more performant, more scalable, and more cost-effective parallel storage option, with “one hand to shake” from procurement to support for their high-performance computing (HPC) and AI infrastructure stack from compute to storage.

If they are already using IBM Spectrum Scale for the shared parallel storage that feeds data to their clusters of HPE Apollo or HPE ProLiant compute nodes, they get a more cost-effective way to do so, with “one hand to shake” from procurement to support for their full HPC and AI infrastructure stack from compute to storage.

What’s in it for HPE channel partners who deliver the HPC and AI compute nodes today while somebody else provides the fast storage for the HPC and AI environment?

Increased revenue and expanded end customer value creation by providing the full HPC and AI infrastructure stack—while earning more benefits from the HPE Partner Ready program.

What’s in it for IBM?

This partnership creates a completely new route to market for IBM Spectrum Scale, the leading enterprise parallel file system, through HPE, the number one HPC compute vendor.2

What’s in it for HPE?

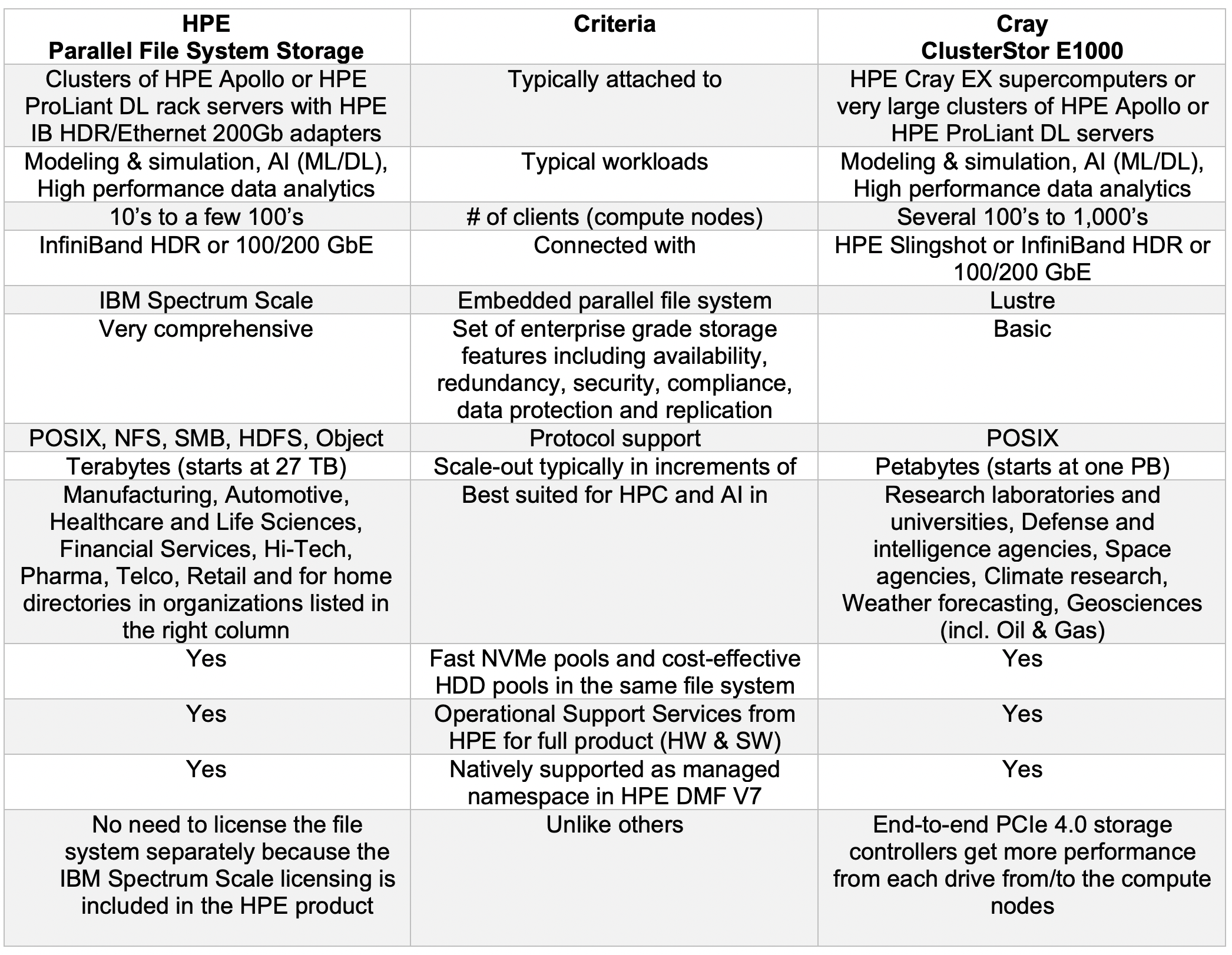

HPE is expanding its parallel storage portfolio for HPC and AI beyond our Lustre-based Cray ClusterStor E1000 high-end storage system. Unlike others who only have Lustre-based storage systems, we can now always attach the ideal parallel storage solution to our leading HPC and AI compute systems for all use cases across all industries. Find more details in this table.

The Cray ClusterStor E1000 storage system has been generally available since August 2020 and reached the two-exabyte milestone shortly afterward. HPE Parallel File System Storage will be generally available in May 2021.

While both HPE storage systems embed the leading parallel file system of their respective target market and have different implementations, they share the same fundamental design philosophy we passionately believe in: limitless performance, delivered in the most cost-effective way, with single point of contact operational support.

Here’s a look at each of the three components of this HPC and AI storage “manifesto” and the benefits they bring.

Limitless performance

Both are parallel storage systems that embed parallel file systems, enabling tens, hundreds, or even thousands of high-performance CPU/GPU compute nodes to read and write their data in parallel at the same time.

Unlike NFS-based Network Attached Storage (NAS) or Scale-out NAS, there are (almost) no limitations regarding the scale of storage performance or storage capacity in the same file system. NFS-based storage is great for classic enterprise file serving (e.g. home folders of employees on a shared file server). But when it comes to feeding modern CPU/GPU compute nodes with data at sufficient speeds to ensure a high utilization of this expensive resource—then NFS no longer stands for Network File System but instead, it’s Not For Speed.

Are you in one of the organizations that started its HPC and AI journey with NFS-based file storage attached to HPE Apollo 2000, HPE Apollo 6500, or HPE ProLiant DL clusters? If, after experiencing massive data growth, you are now struggling to cope with the architectural (performance/scalability) or economic ($/terabyte) limitations of Scale-out NAS systems like Dell EMC PowerScale or NetApp AFF, then look no further. Our new HPE Parallel File System Storage is the ideal solution for you. Go parallel with us!

Delivered in the most cost-effective way

Our parallel storage systems differ from those of other vendors who:

- Embed IBM Spectrum Scale running on cost-effective x86 rack servers in their products—HPE Parallel File System Storage does not require additional licenses for the file system that are based on the number and type of drives in the system.

- Embed the Lustre file system in their products—Cray ClusterStor E1000 is based on “zero bottleneck” end-to-end PCIe 4.0 storage controllers that get more file system performance out of each drive and that support 25 Watt NVMe SSD writes.

- Only offer all-flash systems that do not support cost-effective HDDs in the file system—Both HPE Parallel File System Storage and Cray ClusterStor E1000 support fast NVMe flash pools and cost-effective HDD pools in the same file system.

Seymour Cray once said: “Anyone can build a fast CPU. The trick is to build a fast system.” Adapted to our HPC and AI storage topic: “Anyone can build fast storage. The trick is to build a fast, but also cost-effective system.”

To achieve the latter, HPE leverages “medianomics” in both our parallel storage systems. What is medianomics? It is a portmanteau of “media” and “economics.” It means leveraging the strengths of the different storage media (NVMe SSD for performance, HDD for cost-effective capacity) while minimizing their weaknesses: for NVMe SSD, the high cost per terabyte; for HDD, the inability to efficiently serve small, random input/output (IO).

IDC forecasts3 that even in 2024 the price per terabyte of SSD will be seven times higher than the price per terabyte of HDD. For this very economic reason, we believe that hybrid file systems will be the norm for the foreseeable future.

Both our parallel storage systems enable customers to have two different media pools in the same file system:

- NVMe flash pool(s) to drive the required performance (throughput in gigabytes per second and IOPS), and

- HDD pool(s) to provide most of the cost-effective storage capacity

It is important to state that we do this within the same file system and do not tier different file systems to leverage medianomics. In our space the saying goes: “More file system tiers, more customer tears.”
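To make the medianomics argument concrete, here is a minimal sketch of the capacity-weighted price per terabyte of a hybrid file system. It uses the seven-to-one SSD-to-HDD price ratio cited above; the 20% flash fraction and the normalized HDD price of 1.0 are hypothetical values chosen for illustration only, and the function name is not taken from any HPE or IBM tool.

```python
def blended_cost_per_tb(flash_fraction, hdd_price_per_tb, ssd_to_hdd_ratio=7.0):
    """Capacity-weighted price per TB of a file system with one flash pool
    and one HDD pool.

    flash_fraction   -- share of total capacity on NVMe flash (0.0 to 1.0)
    hdd_price_per_tb -- HDD price per TB in any currency unit
    ssd_to_hdd_ratio -- SSD price per TB divided by HDD price per TB
                        (7.0 is the forecast ratio quoted in the article)
    """
    if not 0.0 <= flash_fraction <= 1.0:
        raise ValueError("flash_fraction must be between 0.0 and 1.0")
    ssd_price_per_tb = hdd_price_per_tb * ssd_to_hdd_ratio
    return (flash_fraction * ssd_price_per_tb
            + (1.0 - flash_fraction) * hdd_price_per_tb)


# Hypothetical example: HDD price normalized to 1.0, 20% of capacity on flash.
hdd_price = 1.0
all_flash = blended_cost_per_tb(1.0, hdd_price)
hybrid = blended_cost_per_tb(0.2, hdd_price)

print(f"all-flash price per TB: {all_flash:.2f}")
print(f"hybrid price per TB:    {hybrid:.2f}")
print(f"all-flash costs {all_flash / hybrid:.1f}x the hybrid configuration")
```

The sketch covers media cost only. It ignores servers, networking, and software, so it illustrates the direction of the effect rather than a real quote.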

With single point of contact customer support

We passionately believe that you should focus on your business and your primary mission, not on managing finger-pointing vendors during problem identification and resolution.

This is why with our two HPC/AI storage systems you are only speaking to Operational Support Services from HPE for both hardware and software support issues, and never to IBM (in the case of HPE Parallel File System Storage) or the Lustre community (in the case of Cray ClusterStor E1000). That is true no matter which operational support service level you choose: HPE Pointnext Tech Care or HPE Datacenter Care.

If you are using non-HPE file storage for your HPE Apollo and HPE ProLiant DL clusters today but would like to unify the operational support for your end-to-end cluster including storage, look no further. Our portfolio of parallel HPC and AI storage systems fits the HPC and AI needs of organizations of all sizes, in all industries or mission areas.

Can’t wait to get to the new desired state of the future in which you have unified accountability of one operational support provider for your whole stack? Then accelerate your transition to an end-to-end HPC and AI infrastructure stack from HPE with HPE Accelerated Migration from HPE Financial Services. Create investment capacity by turning your ageing non-HPE storage into cash!

Another choice: consume the whole stack “as a service” as a fully managed private HPC cloud on your premises or in a colocation facility. HPE GreenLake for High Performance Computing enables you to do exactly that!

Gartner has noted that “there will be no way to put the storage beast on a diet.”4

HPC storage is forecast to grow at a compound annual growth rate (CAGR) 57% higher than that of HPC compute.5

At the current course and speed, HPC storage is projected to consume an ever-increasing portion of the overall budget, at the expense of CPU and GPU compute nodes. Unlike storage-only companies, we think that this is unacceptable and have expanded our portfolio of HPC and AI storage systems with high-performance but cost-effective options for both leading parallel file systems.
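The budget squeeze described above is a compounding effect. The sketch below is a rough illustration under loudly stated assumptions: it reads “57% higher CAGR” as a storage growth rate of 1.57 times the compute growth rate, and it uses a purely hypothetical 7% compute CAGR and a 20% starting storage share of a budget that contains only storage and compute. None of these numbers except the 57% figure comes from the cited study.

```python
def storage_share(years, initial_share=0.20, compute_cagr=0.07, ratio=1.57):
    """Storage's share of a storage-plus-compute budget after `years`.

    initial_share -- hypothetical storage share of the budget at year 0
    compute_cagr  -- hypothetical compound annual growth rate of compute spend
    ratio         -- storage CAGR divided by compute CAGR (1.57 = 57% higher)
    """
    storage_cagr = compute_cagr * ratio
    storage = initial_share * (1.0 + storage_cagr) ** years
    compute = (1.0 - initial_share) * (1.0 + compute_cagr) ** years
    return storage / (storage + compute)


# Print the share at years 0, 5, and 10 under the assumptions above.
checkpoints = list(range(0, 11, 5))
shares = [storage_share(year) for year in checkpoints]
for year, share in zip(checkpoints, shares):
    print(f"year {year:2d}: storage share of budget = {share:.1%}")
```

Whatever starting values are plugged in, the share rises every year as long as storage grows faster than compute, which is the trend the article argues against.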

With our parallel HPC and AI storage systems, you can put the storage beast on an effective diet—independent of the size of your organization or the mission or business your organization is pursuing. In addition, you get the benefits of one hand to shake from procurement to support for your full HPC and AI technology stack (software, compute, network, storage) from HPE—with the option to consume the whole stack as the “cloud that comes to you” with HPE GreenLake.

If you want to learn more

Read the first and only white paper in the history of the IT industry that was published by a storage business unit and that has the call to action to spend less on storage and more on CPU/GPU compute nodes. And check out the infographic for a history of the leading parallel file systems.

Get more CPU/GPU mileage from your existing budget by putting the HPC and AI storage beast on an effective diet. Accelerate your innovation and insight within the same budget. Contact your HPE representative today.

Discover more about HPC storage for a new HPC era.

Uli Plechschmidt

Hewlett Packard Enterprise

twitter.com/hpe_hpc

linkedin.com/showcase/hpe-ai/

hpe.com/us/en/solutions/hpc

1 Hyperion Research, Special Study: Shifts Are Occurring in the File System Landscape, June 2020

2 Hyperion Research, SC20 Virtual Market Update, November 2020

3 IDC, WW 2019-2023 Enterprise SSD and HDD Market Overview, June 2019

4 Gartner Research, Market Trends: Evolving HDD and SSD Storage Landscapes, October 2013

5 Hyperion Research, SC20 Virtual Market Update, November 2020
