HPE’s Natural Language Processing platform for Question and Answer

When COVID-19 started spreading in the US, HPE’s senior management called on us to find ways to harness the power of AI to help the global community mitigate this imminent threat. Around this time the US Government, NIH, and a coalition of leading research groups released the COVID-19 Open Research Dataset (CORD-19), a continuously updated collection of scholarly publications on COVID-19 from all over the world. The dataset started with 33,000 publications and has since grown to more than 100,000 articles.

The AI Labs team stepped up to help with the emergency at hand by developing an AI Natural Language Processing based Question and Answer research tool for CORD-19. To create this tool, we combined machine learning analytics with the latest research in Natural Language Processing. The tool can provide medical researchers with insights on topics such as COVID-19 risk factors, transmission, evolution of the virus, vaccines, and medical care. We published our Q&A tool on a web portal that can be accessed at https://covid19.labs.hpe.com/

As part of the COVID-19 initiative, HPE has also worked on PharML, a graph neural network trained on BindingDB, a public database of binding affinities of drug-target proteins. Another initiative has focused on pharmacological response based on a knowledge graph, using the Cray Graph Engine.

HPE NLP Question and Answer tool and platform

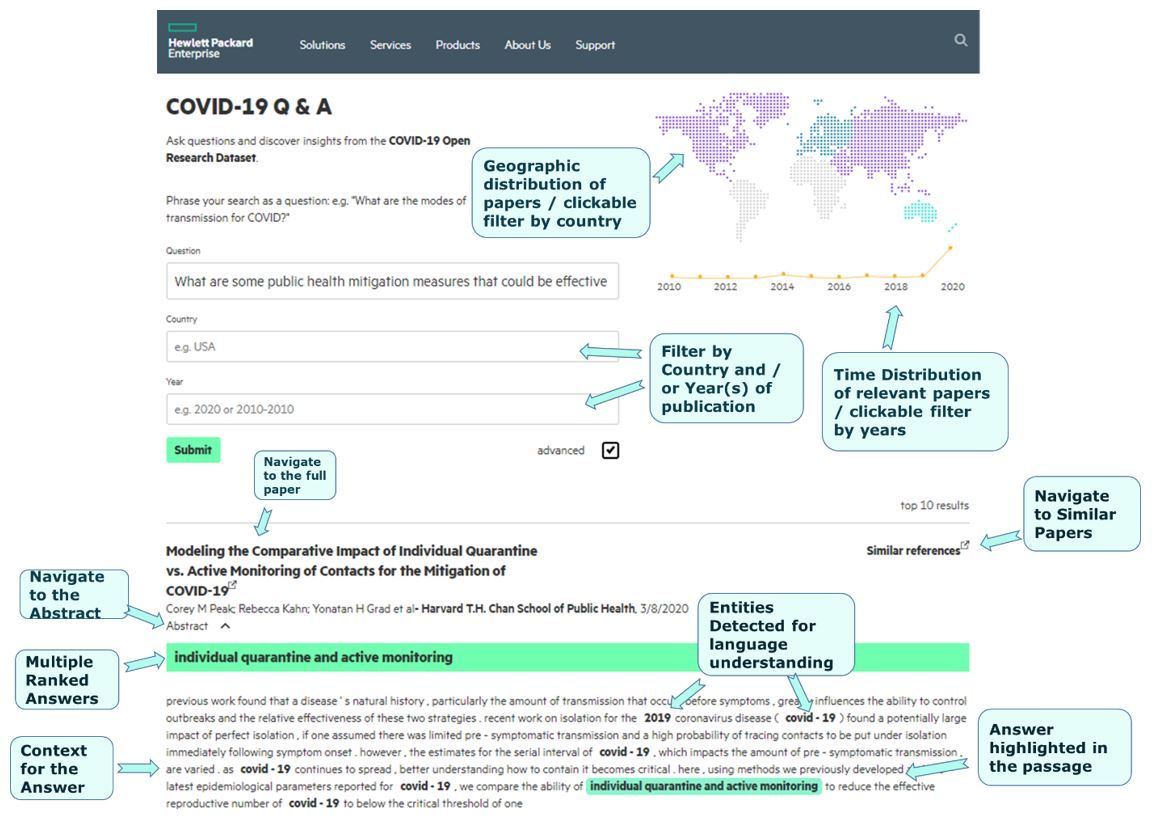

We refined this tool into a comprehensive NLP Q&A platform by enhancing performance and quality, adding features, and making it more generic so that it can be used in a wide variety of business applications across industry verticals. We also made the platform flexible and optimized it to run distributed on server clusters, scale up to multiple GPU accelerators, and support web interfaces. The web portal runs on a backend of HPE’s Memory-Driven Computing (MDC) Sandbox on a Superdome Flex platform. This provides a scalable architecture for the advanced caching schemes used for faster response times at all stages of the NLP pipeline.

The platform supports fast search through thousands of documents, extracts precise answers, reconciles answers from multiple sources, ranks answers, and supports location- and time-based search. It also provides an API to interface with external software, mobile applications, and UIs.

The web UI also supports easy navigation to the original documents and automatic domain-specific highlighting of keywords.

For reference, AI Labs is part of Hewlett Packard Labs, with a charter to build a leadership capability in AI, develop AI solution IP, and drive strategic AI R&D engagements at HPE.

How Q&A is superior to Search

What really stands out about HPE’s NLP Q&A tool is that it extracts precise answers to your question, rather than returning just a list of relevant resources as a search engine does. It also helps researchers navigate through the context of the answers and find similar publications to extend and aid their research.

A key difference between a “Search Engine” and an “NLP Question & Answer application” is that research with search involves two laborious steps, while Q&A is a one-step process. When we use a search engine, we first get pages of results matching the keywords, and then have to tediously study each linked document for the answer we are looking for.

With a Question & Answer application, we get specific answers to our question, just as we would when asking experts. Search also gets harder when we need answers on complex topics from a multitude of long research papers.

NLP Pipeline Architecture

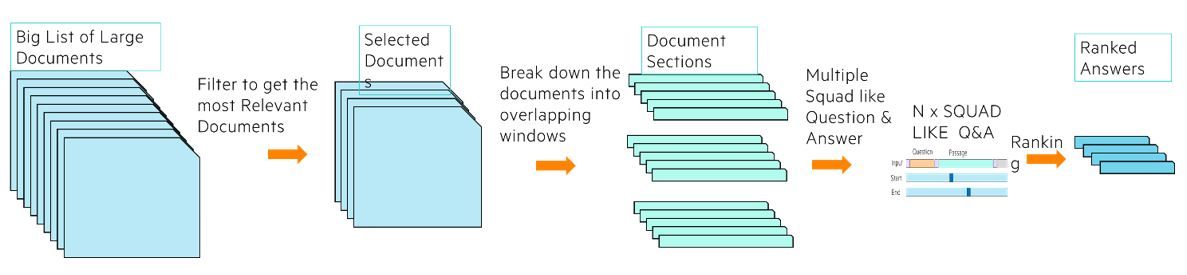

Most existing NLP Q&A solutions have been built around the Stanford Question Answering Dataset (SQuAD), a popular and widely used benchmark. The SQuAD reading comprehension dataset is limited to Q&A on short passages distilled from Wikipedia. For a given question and a short passage, the task is to determine the most effective answer within that passage, expressed as “start” and “end” markers, and whether the question can be answered from that passage at all.
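To make the “start” and “end” markers concrete, here is a minimal sketch of how an answer span can be chosen once a model has scored every token as a possible start and a possible end. The function name `best_span`, the `max_answer_len` limit, the `no_answer_score` threshold, and the scores themselves are illustrative assumptions, not part of the HPE platform or of any specific library.

```python
from typing import List, Optional, Tuple


def best_span(
    start_scores: List[float],
    end_scores: List[float],
    max_answer_len: int = 30,
    no_answer_score: Optional[float] = None,
) -> Optional[Tuple[int, int]]:
    """Return the (start, end) token indices with the highest combined score.

    Returns None when the best span scores no higher than `no_answer_score`,
    which models the "question cannot be answered from this passage" case.
    """
    best: Optional[Tuple[int, int]] = None
    best_score = float("-inf")
    for start, s_score in enumerate(start_scores):
        # The end marker may not precede the start marker,
        # and the span is capped at max_answer_len tokens.
        last = min(start + max_answer_len, len(end_scores))
        for end in range(start, last):
            score = s_score + end_scores[end]
            if score > best_score:
                best, best_score = (start, end), score
    if no_answer_score is not None and best_score <= no_answer_score:
        return None
    return best


# Made-up scores for a seven-token passage (hypothetical model output).
tokens = "The virus spreads mainly through respiratory droplets".split()
start_scores = [0.1, 0.2, 0.3, 0.4, 0.9, 2.5, 0.2]
end_scores = [0.0, 0.1, 0.2, 0.3, 0.6, 1.0, 2.8]

span = best_span(start_scores, end_scores)
answer = " ".join(tokens[span[0]: span[1] + 1]) if span else None
print(answer)
```

Raising `no_answer_score` above the best span score makes the function return `None`, which is one simple way to represent an unanswerable question.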

In practical usage such as CORD-19 research, however, the key challenges include high dimensionality, with a huge number of documents, each of them long and with several sections addressing different contexts. Further complexity comes from specialized fields like medicine, with their jargon and complex domain-specific semantics. This clearly called for a pipeline with multiple stages.

In stage one, we use multiple NLP techniques and an ensemble of models to filter the large corpus down to the most promising publications, and then to the precise paragraphs that have the highest likelihood of containing the best answers to the question asked.

In stage two, we use a different set of NLP techniques and models, again as an ensemble, to extract the best answers from this shortlisted set of paragraphs.

In stage three, we apply post-processing refinements to the answers, compute semantic similarity between multiple answers, and rank them with clustering techniques. The ranking also uses the collective insights from the analysis at every step of the pipeline, along with external sources of information such as institutional reputation.
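The two-step narrowing in stage one (documents first, then paragraphs) can be sketched as follows. This is only an illustration: it swaps in a simple TF-IDF style keyword score for the ensemble of models the platform uses, and the function names, the stop-word list, and the toy corpus are all assumptions.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Tiny illustrative stop-word list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "and", "are", "does", "for", "how",
             "in", "is", "of", "the", "we", "what"}


def tokenize(text: str) -> List[str]:
    return [t for t in re.findall(r"[a-z0-9]+", text.lower())
            if t not in STOPWORDS]


def score(query: List[str], passage: List[str], idf: Dict[str, float]) -> float:
    """Sum of term frequency times inverse document frequency over query terms."""
    counts = Counter(passage)
    return sum(counts[t] * idf.get(t, 0.0) for t in set(query))


def shortlist(question: str, corpus: Dict[str, List[str]],
              top_docs: int = 2, top_paragraphs: int = 2) -> List[Tuple[str, str]]:
    """Return (doc_id, paragraph) pairs most likely to contain the answer."""
    all_paragraphs = [p for paras in corpus.values() for p in paras]
    n = len(all_paragraphs)
    df = Counter(t for p in all_paragraphs for t in set(tokenize(p)))
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    query = tokenize(question)

    # Step 1: keep the most promising documents.
    doc_scores = {d: score(query, tokenize(" ".join(ps)), idf)
                  for d, ps in corpus.items()}
    kept = sorted(doc_scores, key=doc_scores.get, reverse=True)[:top_docs]

    # Step 2: keep the best paragraphs within those documents.
    scored = [(score(query, tokenize(p), idf), d, p)
              for d in kept for p in corpus[d]]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(d, p) for s, d, p in scored[:top_paragraphs] if s > 0]


# Toy corpus: document id -> list of paragraphs (made-up text).
corpus = {
    "paper_a": ["Older age and diabetes are risk factors for severe illness.",
                "The study enrolled 200 patients in two hospitals."],
    "paper_b": ["Transmission occurs mainly through respiratory droplets.",
                "Masks reduce transmission in indoor settings."],
    "paper_c": ["We describe the assembly of the sequencing pipeline."],
}
print(shortlist("What are the risk factors for severe illness?", corpus))
```

Filtering at the document level before scoring paragraphs keeps the more expensive later stages focused on a small candidate set.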
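One way to picture the stage-three grouping and ranking is the sketch below. It uses token overlap (Jaccard similarity) as a stand-in for a real semantic similarity model and a greedy grouping as a stand-in for the clustering techniques. The `Answer` fields, the `source_weight` prior, and the threshold are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Answer:
    text: str
    score: float                # hypothetical confidence from the extraction stage
    source_weight: float = 1.0  # hypothetical prior, e.g. reputation of the source


def token_set(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    ta, tb = token_set(a), token_set(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def cluster_answers(answers: List[Answer], threshold: float = 0.5) -> List[List[Answer]]:
    """Greedily group answers that overlap with a cluster's top-scoring answer."""
    clusters: List[List[Answer]] = []
    for ans in sorted(answers, key=lambda a: a.score, reverse=True):
        for cluster in clusters:
            if jaccard(ans.text, cluster[0].text) >= threshold:
                cluster.append(ans)
                break
        else:
            clusters.append([ans])
    return clusters


def rank_clusters(clusters: List[List[Answer]]) -> List[List[Answer]]:
    """Rank clusters by total weighted support, so corroborated answers rise."""
    def support(cluster: List[Answer]) -> float:
        return sum(a.score * a.source_weight for a in cluster)
    return sorted(clusters, key=support, reverse=True)


# Made-up candidate answers from several papers.
answers = [
    Answer("older age and diabetes", 0.70),
    Answer("diabetes and older age", 0.65),
    Answer("respiratory droplets", 0.80, source_weight=0.9),
    Answer("older age, diabetes and obesity", 0.40),
]
ranked = rank_clusters(cluster_answers(answers))
print([a.text for a in ranked[0]])
```

In this toy run the three overlapping answers outrank the single higher-scoring one, because agreement across sources counts as evidence.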

Enabling Business Opportunity

COVID-19 is just the first use case for this NLP platform. The platform can address other use cases in which businesses need Question Answer and other NLP applications, including business process optimization, retail, first responders, and enabling insights and actionable analytics.

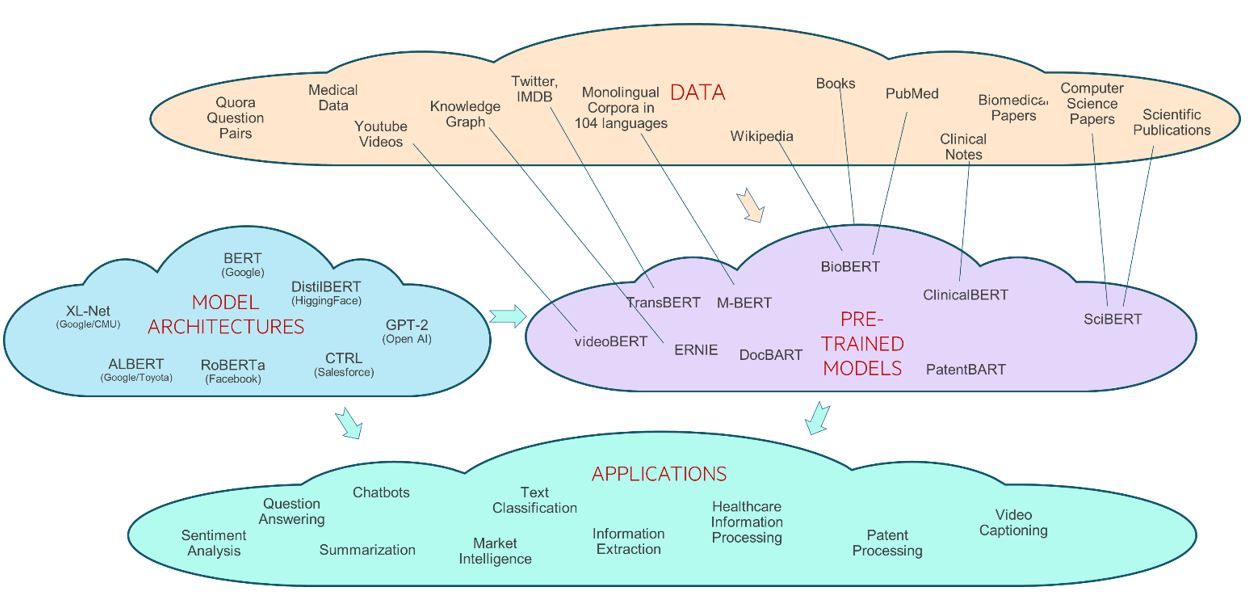

For BERT, one of the NLP models used in this platform, the outer layers can be modified to enable a variety of applications such as classification, sentiment analysis, named entity recognition, question answering, and summarization.

This platform can also incorporate specialized BERT-based models to solve domain-specific problems. These specialized models are pre-trained on domain- or application-specific corpora, such as BioBERT with biomedical text, SciBERT with scientific publications, ClinicalBERT with clinical notes, DocBERT for document classification, and PatentBERT for patent classification. Simple modifications to the base model and training with small amounts of domain-specific data enable specific business usages.

As an example in healthcare, the pre-trained models can be applied with transfer learning to measure patient satisfaction. This also enables a host of applications such as clinical document processing, quality measures, and risk and outcome assessment.

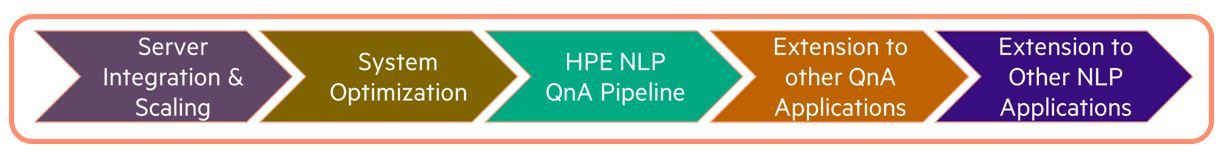

Making NLP real and deployable and NLPaaS

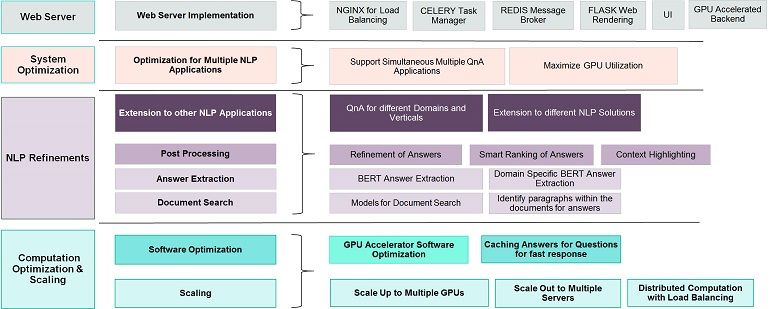

The HPE NLP Platform has focused on server integration, system optimization, web integration, and an architecture which can accommodate simultaneous execution of multiple NLP applications on the same server cluster, while sharing resources. This helps implement service offerings like NLP as a Service (NLPaaS).

Server Integration: The HPE NLP platform software can run distributed on a server cluster, scaling up to multiple GPUs for acceleration. It implements a load-balancing web interface with on-the-fly software updates.

System Optimization: The NLP platform implements adaptive performance optimization, smart caching at all pipeline stages for fast response and cost reduction, and software pipelining. The architecture also supports system optimizations for cost and power.

NLP Pipeline Refinements: The flexible pipeline architecture accommodates multiple model choices, domain-specific optimizations, and model reusability across multiple applications.

As discussed earlier, the flexibility of the pipeline makes it easily extensible to a variety of NLP applications other than Q&A.
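The caching idea can be illustrated with a small sketch in which each pipeline stage memoizes its own results, so a repeated question skips the expensive work at every stage. The post does not describe the platform's actual caching scheme; the stage functions, cache sizes, and call counters below are placeholders.

```python
from functools import lru_cache
from typing import List, Tuple

# Counts how often each stage really runs (for demonstration only).
CALLS = {"retrieve": 0, "extract": 0}


@lru_cache(maxsize=1024)
def retrieve(question: str) -> Tuple[str, ...]:
    """Placeholder for stage one: return shortlisted paragraphs."""
    CALLS["retrieve"] += 1
    return (f"paragraph relevant to: {question}",)


@lru_cache(maxsize=4096)
def extract(question: str, paragraph: str) -> str:
    """Placeholder for stage two: extract an answer from one paragraph."""
    CALLS["extract"] += 1
    return f"answer from [{paragraph}]"


def answer(question: str) -> List[str]:
    # Normalize case and whitespace so trivial variants share cache entries.
    key = " ".join(question.lower().split())
    return [extract(key, p) for p in retrieve(key)]


first = answer("What are the risk factors?")
second = answer("what are  the risk factors?")  # served entirely from cache
print(CALLS)
```

Caching per stage rather than per final answer means that two different questions that shortlist the same paragraph can still reuse work where the inputs match.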

For more information, see HPE's Speech and Natural Language Processing Solution

Soumyendu

Soumyendu Sarkar is a Senior Distinguished Technologist of Artificial Intelligence at Hewlett Packard Labs. Before joining HPE, he worked for Intel Corporation and for Bell Labs at Lucent Technologies. He and his team at AI Labs develop Machine Learning solutions in Reinforcement Learning, Trustworthy AI, Natural Language Processing, Video Analytics, and a variety of other areas.
