A better approach to major data motion: Efficient, built-in mirroring

Mirroring is a key process for moving large amounts of data, and it needs to do so in a manner that is convenient, efficient, and accurate. Now for anyone thinking, “What’s the big deal? I can just use rsync and make a copy”, you may not be familiar with the challenges of doing this at very large scale.

Imagine, for example, having to move several petabytes of data from edge cluster locations to an on-premises data center daily for sophisticated AI training, as is done in some large industrial IoT edge use cases. Or maybe you are moving large amounts of data from on premises to a cloud deployment for a hybrid architecture or copying production data onto another cluster (or even a copy on the same cluster) to be used as a test environment. In all of these situations, it’s important to consider the time and resources involved. Simple copying by programs such as rsync may not be sufficient; it may take too long or interfere with other essential processes. Worse, it exposes partially copied data. And what happens if the connection is interrupted?

At this scale, major data motion is better handled by a solution engineered to ensure an accurate copy at the destination. It must also finish in a timely manner, which means automatically optimizing network occupancy. Built-in data mirroring, a feature of the HPE Ezmeral Data Fabric, is much faster than traditional data copying. But as important as data fabric mirroring is, there are times when it needs to get out of the way of other essential processes. Here’s how that works.

Mirrors are copies of a data fabric volume

To better understand the efficiency of data fabric mirroring, a bit of background will be helpful. HPE Ezmeral Data Fabric is a software-defined and hardware-agnostic data infrastructure to store, manage, and move data in systems running diverse applications for large-scale analytics and AI/ML. The data fabric has distributed files, NoSQL database tables, and event streams all engineered together into the same software and organized via a key data management construct known as a data fabric volume. A variety of policies are set at the volume level, and many are configurable, if so desired.

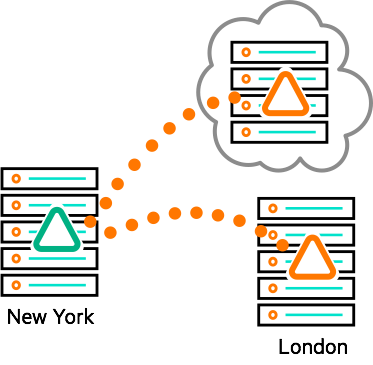

For example, a data fabric volume can be used to assign topology, placing data on specialized hardware. Fine-grained control over data access – both inclusion and exclusion – is also set at the volume level via Access Control Expressions (ACEs). And data fabric volumes serve as the basis for making snapshots of data and for placing mirror volumes at specified locations, as indicated in Figure 1.

Figure 1. HPE Ezmeral Data Fabric volumes are copied via mirroring.

In moving data via mirroring, data in a source volume is copied to a destination volume located on a data fabric cluster at another location, whether on premises or in a cloud deployment. Mirroring can be conveniently scheduled to occur automatically or created manually as needed.

Data fabric mirroring is incremental and accurate

When the mirror volume is first established at the destination, a full copy of data in the source volume (directories, files, tables, event streams) is made. But periodic updates of the mirrored volume are incremental: the data fabric only moves the blocks that have changed since the last mirroring event. This efficient design results in much less data being moved.
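To make the incremental idea concrete, here is a minimal Python sketch of block-level change detection. It is a toy model, not the data fabric's implementation: the 4-byte `BLOCK_SIZE` and the helper names `split_blocks`, `changed_blocks`, and `apply_delta` are all hypothetical, and comparing hashes of an old and a new copy is an assumption made for illustration (a real system would more likely track changed blocks as writes happen).

```python
import hashlib

BLOCK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list[bytes]:
    """Split a byte string into fixed-size blocks (the last may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def changed_blocks(previous: bytes, current: bytes) -> dict[int, bytes]:
    """Return {block_index: new_block} for blocks that differ from the last sync."""
    old_hashes = [hashlib.sha256(b).digest() for b in split_blocks(previous)]
    delta = {}
    for index, block in enumerate(split_blocks(current)):
        is_new = index >= len(old_hashes)
        if is_new or hashlib.sha256(block).digest() != old_hashes[index]:
            delta[index] = block
    return delta


def apply_delta(mirror: bytes, delta: dict[int, bytes], new_length: int) -> bytes:
    """Patch the mirror copy with the changed blocks, then trim to the new length."""
    blocks = split_blocks(mirror)
    for index in sorted(delta):
        if index < len(blocks):
            blocks[index] = delta[index]   # overwrite a block that changed
        else:
            blocks.append(delta[index])    # block added past the old end
    return b"".join(blocks)[:new_length]


source_v1 = b"aaaabbbbccccdddd"
source_v2 = b"aaaaBBBBccccddddeeee"
delta = changed_blocks(source_v1, source_v2)
mirror = apply_delta(source_v1, delta, len(source_v2))
print(f"moved {len(delta)} of {len(split_blocks(source_v2))} blocks")
```

In this example only two of the five blocks cross the wire, yet the mirror ends up identical to the updated source. The saving grows with the size of the volume and shrinks with the fraction of data that changed between mirroring events.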

Data fabric mirrors are particularly accurate as well. A mirror is made via a snapshot, and it’s important to note that a data fabric snapshot is a true point-in-time, read-only image of a volume. Thanks to the intermediate snapshot step, the state of data in the mirror volume is exactly the same as in the source volume at the time mirroring (or an incremental update) was initiated. In other words, data is either entirely in or entirely out of the mirror, eliminating the problem of partial copying due to a write that was in progress. A write won’t be included in the snapshot unless it is complete. The data fabric also automatically recovers and proceeds in the case of a network interruption or hardware failure. This means built-in mirroring ensures accuracy and efficiency.

Data fabric mirroring optimizes network utilization

How does data fabric mirroring move large-scale data so quickly and yet accurately? The secret lies in the fact that while the final state will be exposed atomically (an exact match to the snapshot image of the source volume), the pieces do not have to be moved in order. That freedom allows the system to automatically copy data across as many network links as possible, moving data in parallel.
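The combination of out-of-order transfer and atomic exposure can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the data fabric's design: the `MirrorVolume` class, its `staging` and `visible` attributes, and the use of a thread pool to stand in for parallel network links are all hypothetical.

```python
import random
from concurrent.futures import ThreadPoolExecutor, as_completed


class MirrorVolume:
    """Toy destination: readers only ever see `visible`, never the staging area."""

    def __init__(self, initial: dict[int, bytes]):
        self.visible = dict(initial)

    def receive(self, snapshot: dict[int, bytes], links: int = 4) -> None:
        """Copy every block of `snapshot` in arbitrary order, then publish at once."""
        staging: dict[int, bytes] = {}
        order = list(snapshot)
        random.shuffle(order)  # blocks may travel in any order

        def send(index: int) -> tuple[int, bytes]:
            return index, snapshot[index]  # stand-in for a network transfer

        # Each worker thread plays the role of one network link.
        with ThreadPoolExecutor(max_workers=links) as pool:
            futures = [pool.submit(send, i) for i in order]
            for future in as_completed(futures):
                index, block = future.result()
                staging[index] = block

        if staging.keys() != snapshot.keys():
            raise RuntimeError("incomplete transfer; keeping previous mirror state")
        self.visible = staging  # one reference swap exposes the new state


snapshot = {i: bytes([i]) * 3 for i in range(20)}
mirror = MirrorVolume({0: b"old"})
mirror.receive(snapshot)
print(mirror.visible == snapshot)
```

Because nothing is published until every block has arrived, a reader of `visible` sees either the previous state or the complete new one, regardless of the order in which blocks were delivered.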

Because the data fabric’s built-in mirroring automatically optimizes source links and destination links, it can optimize network occupancy, thus moving even huge amounts of data with extraordinary speed. For very large-scale, complex systems, this platform-level optimization not only means data is moved faster, it can even make the difference between that level of data motion being practical or essentially not feasible.

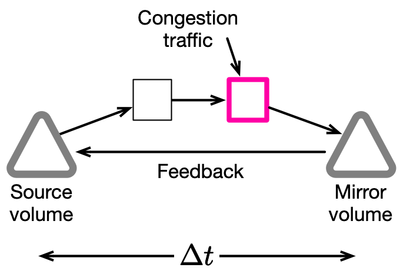

But what happens when some other essential process needs to share the network links? That’s when mirroring needs to get out of the way, at least temporarily. The beauty of data fabric optimization is that this is done automatically, as illustrated in Figure 2.

Figure 2. HPE Ezmeral Data Fabric mirroring automatically adjusts in response to network congestion.

The data fabric uses many network links to transfer data during mirroring. These network links and associated network switches (represented by the arrows and rectangles in the figure) are subject to variable performance due to congestion. The data fabric monitors round-trip transit time and uses that information to detect incipient congestion. If non-data fabric processes need more of the network capacity, injecting traffic at particular network switches (pink square in the figure), the data fabric detects the problem and temporarily decreases the volume of data being sent by the high-capacity mirroring processes at their source. When the conflicting traffic eases, the data fabric automatically resumes mirroring at speed. Congestion is not just a networking problem, so the data fabric has to optimize for conflict at the storage device level as well.
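As a rough illustration of throttling driven by round-trip time, the Python sketch below halves the send rate when a sample looks congested and raises it step by step when the path is clear. The halve-then-recover rule is borrowed from TCP-style congestion control and is an assumption; the article does not say which algorithm the data fabric uses. The `MirrorThrottle` class and every number in it are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MirrorThrottle:
    max_rate_mbps: float = 1000.0    # hypothetical link capacity
    min_rate_mbps: float = 10.0      # never stall the mirror completely
    congestion_factor: float = 1.5   # RTT above baseline * factor counts as congestion
    step_mbps: float = 100.0         # additive recovery per clean sample
    baseline_rtt_ms: Optional[float] = None
    rate_mbps: float = 1000.0

    def observe(self, rtt_ms: float) -> float:
        """Update the send rate from one round-trip-time sample and return it."""
        if self.baseline_rtt_ms is None or rtt_ms < self.baseline_rtt_ms:
            # The best RTT seen so far approximates the uncongested path.
            self.baseline_rtt_ms = rtt_ms
        if rtt_ms > self.baseline_rtt_ms * self.congestion_factor:
            # Back off quickly so competing traffic gets room.
            self.rate_mbps = max(self.min_rate_mbps, self.rate_mbps / 2)
        else:
            # Path looks clear: climb back toward full speed.
            self.rate_mbps = min(self.max_rate_mbps, self.rate_mbps + self.step_mbps)
        return self.rate_mbps


throttle = MirrorThrottle()
for rtt in [10, 10, 30, 30, 10, 10]:
    print(f"rtt={rtt} ms -> send at {throttle.observe(rtt):.0f} Mbps")
```

With these samples the rate drops from 1000 to 250 Mbps while the round-trip time is elevated, then starts climbing again once it returns to the baseline.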

This platform-level optimization of the mirroring process is an important aspect of making these processes practical at large scale and in multi-tenant systems. But it’s not the only situation in which processes are automatically adjusted to keep out of the way.

Mirroring is not the only platform-level optimization

A number of essential processes in the data fabric automatically adjust to changes in workload pressures in similar ways. A good example is the self-healing feature of the HPE Ezmeral Data Fabric. Data in the data fabric is, by default, replicated three times. Replicas in this data infrastructure are distributed across different machines for data resiliency.

In the event of a disk failure and damage to one replica, the system automatically begins copying a surviving replica onto a healthy disk. But if other workloads are putting a lot of pressure on the system, the replica self-healing process is throttled back to consume no more than 10% of available network capacity. The re-replication process will still complete, but not as quickly as it might otherwise in an idle cluster. But if a second disk failure damages a second replica before the self-healing of the first is complete, the data fabric immediately changes strategy and ramps up replication from the remaining replica to full network speed to ensure data survival.
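The policy described above can be written as a small decision function. This Python sketch is an illustration only: the function name `rereplication_share` is hypothetical, and letting repair traffic use the full network on an idle cluster is an assumption, since the article only says that re-replication completes sooner there.

```python
def rereplication_share(surviving_replicas: int, cluster_busy: bool,
                        replication_factor: int = 3) -> float:
    """Return the fraction of network capacity that repair traffic may use."""
    if surviving_replicas < 1:
        raise ValueError("no surviving replica to copy from")
    if surviving_replicas >= replication_factor:
        return 0.0   # all replicas healthy, nothing to repair
    if surviving_replicas == 1:
        return 1.0   # last copy left: data survival outranks other workloads
    # One replica lost: yield to other workloads when the cluster is busy.
    return 0.10 if cluster_busy else 1.0


print(rereplication_share(2, cluster_busy=True))   # first failure under load
print(rereplication_share(1, cluster_busy=True))   # second failure before repair ends
```

The point of the sketch is the ordering of the checks: the number of surviving replicas is tested before cluster load, so a second failure overrides the throttle.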

Similar adjustments are made when data in the data fabric is deleted by deleting an entire volume. Bulk data deletion is an important challenge in very large-scale systems. This essential process is triggered by pre-set policies, but it is carried out behind the scenes and adjusts according to other workloads.

To learn more about platform-level optimization and other features, read the technical paper “HPE Ezmeral Data Fabric: Modern infrastructure for data storage and management.”

Ellen Friedman

Hewlett Packard Enterprise


Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Ellen worked at MapR Technologies for seven years prior to her current role at HPE, where she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
