A new memory circuit capable of learning

At Hewlett Packard Labs, we recently invented a new memory circuit, called “differentiable content addressable memory” (dCAM), which mimics the operation of associative learning memory in the brain. We believe it could lead to a novel computing paradigm, capable of adapting itself while performing different tasks.

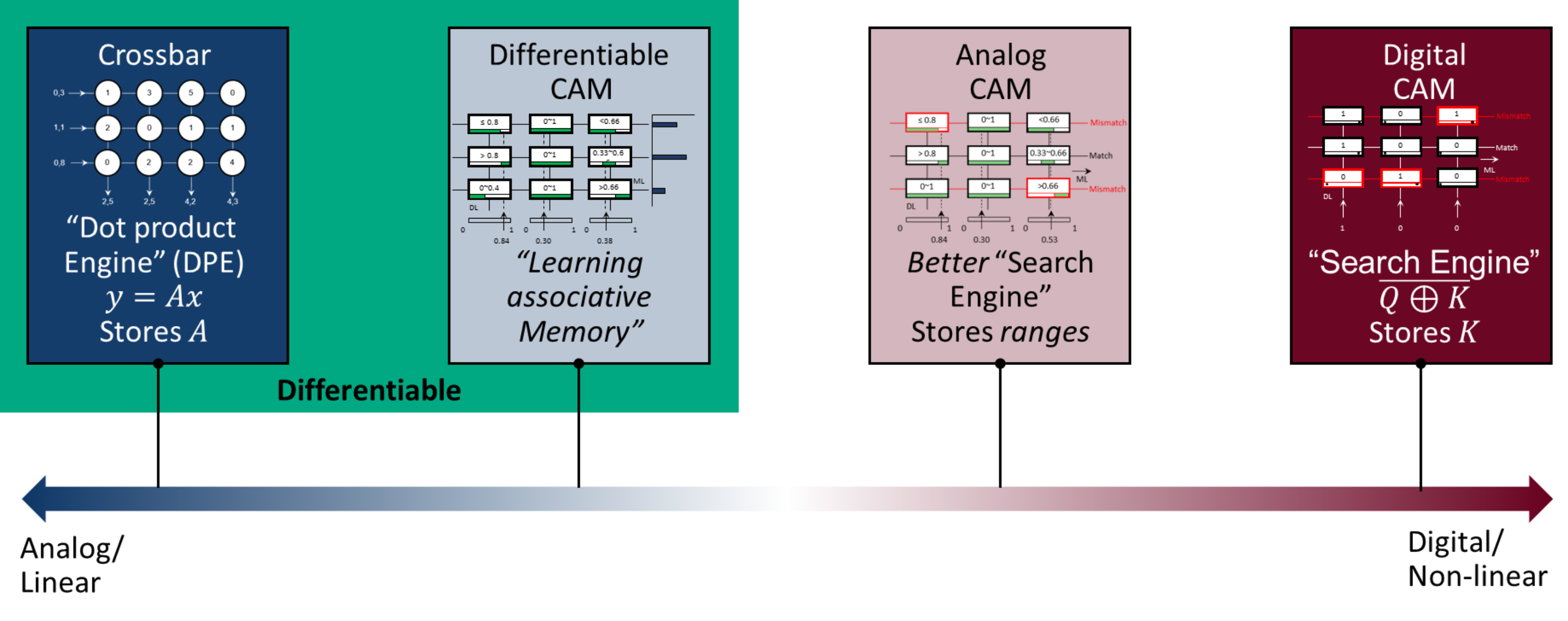

Fig. 1 - Spectrum of in-memory computing primitives

Previous research has shown that memory devices can perform a variety of tasks that accelerate machine learning. For example, Figure 1 shows the spectrum of in-memory computing primitives: new circuit concepts onto which different types of problems can be mapped.

On the far left of the figure, crossbar arrays offer a compact implementation of an arbitrary analog matrix. By taking advantage of the physical laws governing current and voltage (Ohm's law and Kirchhoff's current law), these arrays can efficiently perform matrix-vector multiplication directly in memory, as we demonstrated in our previous work on the Dot Product Engine (DPE) with ReRAM (memristor) devices.
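As a rough illustration of the crossbar idea, the sketch below models an idealized array in plain Python. The function name `crossbar_mvm` and the example conductance and voltage values are assumptions made for illustration; a real array also has wire resistance, noise, and device variation that this sketch ignores.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized crossbar (hypothetical helper).

    conductances[i][j] is the conductance (siemens) of the device joining
    row i to column j; voltages[i] is the voltage applied to row i.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, row in enumerate(conductances):
        for j in range(n_cols):
            # Ohm's law gives each device current (I = G * V);
            # Kirchhoff's current law sums the currents sharing a column.
            currents[j] += row[j] * voltages[i]
    return currents


# Illustrative values only: a 2x2 array with microsiemens-scale devices.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
I = crossbar_mvm(G, V)  # roughly 0.7 uA on column 0 and 1.0 uA on column 1
```

The nested loops here stand in for what the physical array does in a single step: every multiplication and every sum happens at once in the analog domain.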

On the right of the figure, content-addressable memories (CAMs) search for a word inside the memory. CAMs are used in a variety of fields where fast lookups are important, such as networking and security. Unlike a traditional random-access memory (RAM), a CAM receives data (the query) as input and compares it against each stored word (the key), returning a match (‘1’ at the output) only if the query is equal to the key.

Usually, CAMs are paired with RAMs to associate the input with another piece of information: the match produced by the CAM retrieves some other data from a RAM array. This combination effectively creates an “associative memory”, which is very similar to what happens in the brain, where larger memories and experiences are retrieved starting from a small piece of data, such as a smell or a sound from our childhood. However, traditional digital CAM circuits are rigid memories: a piece of data is either found or not.
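The CAM plus RAM pairing can be sketched in a few lines of Python. The names `cam_search` and `associative_lookup` and the example keys and values are hypothetical, and the sequential loop only stands in for hardware that compares the query against every stored key in parallel.

```python
def cam_search(keys, query):
    """Return one match bit per stored key (1 = exact match, 0 = mismatch)."""
    return [1 if key == query else 0 for key in keys]


def associative_lookup(keys, values, query):
    """Use the CAM match lines to select which RAM row to read."""
    match_lines = cam_search(keys, query)
    for row, hit in enumerate(match_lines):
        if hit:
            return values[row]
    return None  # no stored key equals the query


# Illustrative contents: CAM keys and the RAM data associated with each.
keys = ["1010", "0111", "1100"]
values = ["port A", "port B", "port C"]

found = associative_lookup(keys, values, "0111")    # "port B"
missing = associative_lookup(keys, values, "0000")  # None
```

A query that differs from a stored key by even one bit returns nothing, which is the all-or-nothing behavior described above.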

Recently, at Hewlett Packard Labs, we came up with a novel analog CAM, based on ReRAM devices (memristors), which can search for whether an analog value falls within a stored range while still producing a digital output (either match or mismatch).
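A behavioral sketch of the analog CAM search, assuming each row stores a lower and an upper bound and that both bounds are inclusive (the function name `acam_match` and the stored ranges are illustrative, not taken from the circuit):

```python
def acam_match(ranges, query):
    """One digital match bit per stored range: 1 if lo <= query <= hi."""
    return [1 if lo <= query <= hi else 0 for lo, hi in ranges]


# Illustrative stored ranges; ranges may overlap, so several rows can match.
stored_ranges = [(0.1, 0.3), (0.5, 0.6), (0.55, 0.9)]
matches = acam_match(stored_ranges, 0.58)  # [0, 1, 1]
```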

While the analog CAM has several important uses, it still cannot operate as a learning associative memory, nor can it implement neural network learning algorithms. The most common learning technique in a neural network is backpropagation, which consists of comparing the output of the model with a ground truth (e.g., the classification of an image) and propagating the difference backward through the layers that form the network. The necessary condition for that operation is for the model to be differentiable, meaning that the input/output relationship should be continuous. The core idea is that a CAM used as a neural network trained with backpropagation should be able to learn associations directly from incoming data. Unfortunately, an analog CAM producing a binary match/mismatch value is not differentiable, so it cannot be used as the building block for a backpropagation-based learning algorithm.

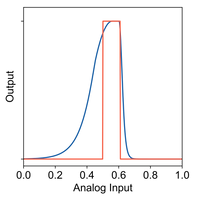

Fig. 2 - Comparison between a single analog CAM (aCAM) and differentiable CAM (dCAM) input/output relationship when storing the range [0.5,0.6]

Our research discovered that a simple modification to the analog CAM circuit could produce a differentiable CAM (dCAM), which has a continuous relationship between input and output (as shown in Fig. 2), allowing the new cell to be used for learning through backpropagation and other known techniques.
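To see why the continuous response matters for learning, the sketch below compares the slope of a hard range match with that of a smooth one for the stored range [0.5, 0.6]. The smooth cell is modeled as a product of two sigmoids with an arbitrary `sharpness` parameter; that functional form is an assumption chosen for illustration and is not the measured dCAM transfer curve.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def acam_cell(x, lo, hi):
    """Hard match: output jumps between 0 and 1 at the range edges."""
    return 1.0 if lo <= x <= hi else 0.0


def soft_cell(x, lo, hi, sharpness=50.0):
    """Assumed smooth stand-in for a dCAM cell (product of two sigmoids)."""
    return sigmoid(sharpness * (x - lo)) * sigmoid(sharpness * (hi - x))


def slope(cell, x, lo, hi, eps=1e-4):
    """Central-difference estimate of d(output)/d(input) at x."""
    return (cell(x + eps, lo, hi) - cell(x - eps, lo, hi)) / (2 * eps)


lo, hi = 0.5, 0.6
x = 0.45  # a query just below the stored range

hard_slope = slope(acam_cell, x, lo, hi)  # 0.0: no hint about which way to move
soft_slope = slope(soft_cell, x, lo, hi)  # positive: moving up improves the match
```

Away from the range edges the hard cell has zero slope, so an error signal propagated backward through it vanishes. The smooth cell gives a nonzero slope that a gradient-based learning rule can follow.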

We believe this is an important advancement with a variety of disparate uses. As an example, dCAM has performance advantages over a similarly designed aCAM in terms of storage capacity. After chip production, several non-idealities and variations due to the microelectronic fabrication process are present. These limit the analog operation in particular, which is directly correlated with the number of patterns that an aCAM/dCAM can store. By programming the dCAM and sensing the analog output, an error can be computed and backpropagated, and the analog memristor conductances can be updated to compensate for such errors, increasing the memory performance and density.

In another example, the same circuit can perform Boolean satisfiability optimization, an NP-complete problem that is at the basis of important applications (such as formal verification of planning), in an efficient way and with limited resource scaling with problem size. In this case, the dCAM stores the clauses, and the inputs that satisfy such clauses are learned through stochastic gradient descent.
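The satisfiability use case can be sketched in software as gradient descent on a smooth relaxation of the clauses. Everything in this sketch is an assumption made for illustration: the three-clause formula, the product-form violation score, the sigmoid parameterization of the inputs, the starting point, and the use of plain (full-batch) gradient descent with numerical gradients rather than the stochastic variant and circuit model used in the actual work.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


# Each clause is a list of (variable index, is_positive) literals:
# (x0 or x1) and (not x0 or x2) and (not x1 or not x2)
CLAUSES = [[(0, True), (1, True)],
           [(0, False), (2, True)],
           [(1, False), (2, False)]]


def clause_violation(clause, x):
    """Soft 'unsatisfied' score in [0, 1]: product of (1 - literal value)."""
    violation = 1.0
    for idx, positive in clause:
        literal = x[idx] if positive else 1.0 - x[idx]
        violation *= 1.0 - literal
    return violation


def loss(theta):
    """Total soft violation; inputs are kept in (0, 1) via a sigmoid."""
    x = [sigmoid(t) for t in theta]
    return sum(clause_violation(c, x) for c in CLAUSES)


def solve(theta, lr=1.0, steps=500, eps=1e-6):
    """Plain gradient descent on the inputs using central differences."""
    theta = list(theta)
    for _ in range(steps):
        grad = []
        for i in range(len(theta)):
            up = theta[:i] + [theta[i] + eps] + theta[i + 1:]
            down = theta[:i] + [theta[i] - eps] + theta[i + 1:]
            grad.append((loss(up) - loss(down)) / (2 * eps))
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return [sigmoid(t) > 0.5 for t in theta]  # round to a Boolean assignment


def satisfies(assignment):
    """Check the rounded assignment against the original hard clauses."""
    return all(any(assignment[i] == pos for i, pos in clause)
               for clause in CLAUSES)


assignment = solve([0.1, -0.1, 0.05])  # small asymmetric starting point
solved = satisfies(assignment)
```

The stored clauses stay fixed while the inputs are adjusted, which mirrors the division of roles described above. On harder formulas a descent like this can stall in a local minimum, so the final check against the hard clauses is still needed.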

We believe we are just scratching the surface of the potential of a dCAM. For example, a dCAM could be paired with other neuromorphic blocks (such as a Dot Product Engine) to develop fully differentiable computing systems capable of learning complex tasks without prior knowledge or the need for heavy programming. A hybrid memory that can learn to behave more like a DPE or more like a CAM could change its behavior based on the workload. The same revolution that AI brought to software and modeling could happen to compute systems and hardware, with AI-designed and AI-optimized circuits along with architectures that obey AI learning rules to perform efficient computation.

We published a paper on our work, “Differentiable content addressable memory with memristors,” in Advanced Electronic Materials, authored by Giacomo Pedretti, Cat Graves, Thomas Van Vaerenbergh, Sergey Serebryakov, Martin Foltin and Xia Sheng from Hewlett Packard Labs; Ruibin Mao and Can Li from Hong Kong University; and John Paul Strachan from Forschungszentrum Jülich.
