Storage performance benchmarks are useful – if you read them carefully

A few months ago I posted a blog titled Busting the Myth of Storage Block Size, which was originally published on nimblestorage.com. Since that site is no longer available, we saved some of the more interesting blog posts. The Busting the Myth post was by David Adamson, PhD. Today I have another interesting post of his that was saved from nimblestorage.com. This post from June 2015 is still relevant and interesting, so check it out!

When building or refreshing a data center, IT managers today are confronted with a huge range of products and

With data storage arrays, for example, IT buyers rely on performance benchmarks provided by vendors and analysts – but those benchmarks don’t always match the real-world workloads that companies run to support their businesses.

One key variable that influences performance (and thus performance benchmarking) is IO size. Specifically, when talking about how many IOPS (input/output operations per second) an array can achieve, test workloads tend towards smaller-sized IO (since arrays typically achieve more IOPS at smaller IO sizes). But when talking about how much throughput an array can achieve (in terms of megabytes per second), test workloads tend towards larger-sized IO, as arrays typically achieve more throughput at larger IO sizes.

In the real world, workloads perform a mixture of small and large IO operations, and thus achieve fewer IOPS than a purely small-IO workload and less throughput than a purely large-IO workload. Even so, these benchmarks serve to map out the limits of what an array can achieve, both in terms of individual operations on one end and overall data flux on the other.
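The relationship between the two metrics is simple arithmetic: throughput is IOPS multiplied by IO size. The minimal sketch below illustrates this with made-up numbers. The function name and both IOPS figures are assumptions chosen for illustration, not measurements from any array, and sizes are treated as KiB.

```python
def throughput_mib_per_s(iops: float, io_size_kib: float) -> float:
    """Throughput in MiB/s implied by an IOPS figure at a fixed IO size."""
    return iops * io_size_kib / 1024.0

# Hypothetical figures, not measurements from any real array.
small = throughput_mib_per_s(iops=100_000, io_size_kib=4)    # many small operations
large = throughput_mib_per_s(iops=10_000, io_size_kib=256)   # fewer, larger operations

print(f"4 KiB IO:   {small:.1f} MiB/s")
print(f"256 KiB IO: {large:.1f} MiB/s")
```

In this illustration the small-IO case performs ten times as many operations yet moves far less data, which is why the two kinds of benchmark tend to use different IO sizes.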

IOPS, Throughput, and IO Size

Some storage vendors assert that measuring the performance of a storage array at small IO sizes (e.g. 4KB or 8KB) is irrelevant to performance benchmarking, on the basis that real-world IO sizes are typically much larger (e.g. 32KB). From experience, we were skeptical of this claim, but to see for ourselves we turned to HPE InfoSight.

HPE InfoSight is a deep data analytics platform that ingests more than 30 million data points a day from each of thousands of HPE Nimble Storage customer arrays. The InfoSight engine is the foundation of our customer portal, where customers can get a 360-degree view of their deployed arrays, and also serves our support organization by automatically generating and resolving more than 80 percent of customer-facing cases.

For this investigation, we tapped into InfoSight data to sum the number of host-requested IO operations at different IO sizes across thousands of HPE Nimble Storage customer systems at once. IO was totaled from Feb. 1 to Feb. 28, 2015. Using that data, we determined what percentage of the total IO fell within each of the size intervals shown below.

From the histograms in Figure 1 we can see that:

- >60% of writes are less than 8k,

- >50% of reads are less than 16k, and

- the distribution of IO sizes is bimodal (has two distinct peaks):

  - >50% of IOs have sizes between 4k (inclusive) and 16k (exclusive)

  - >20% of IOs have sizes between 64k (inclusive) and 256k (exclusive)

  - only ~15% of IOs have sizes between 16k (inclusive) and 64k (exclusive)
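The bucketing behind percentages like these can be sketched in a few lines: count each operation into a half-open size interval, then divide by the total. This is only an illustration of the general technique, not the InfoSight implementation. The bucket edges, function names, and the tiny trace are all assumptions.

```python
from collections import Counter

# Half-open size buckets [low, high) in KiB; edges chosen to mirror the
# intervals named in the text (an assumption, not the original binning).
BUCKETS = [(0, 4), (4, 16), (16, 64), (64, 256), (256, float("inf"))]

def bucket_of(size_kib):
    """Return the (low, high) bucket that contains size_kib."""
    for low, high in BUCKETS:
        if low <= size_kib < high:
            return (low, high)
    raise ValueError(f"IO size out of range: {size_kib}")

def size_distribution(io_sizes_kib):
    """Percentage of operations falling into each bucket."""
    counts = Counter(bucket_of(s) for s in io_sizes_kib)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no IO operations supplied")
    return {b: 100.0 * counts[b] / total for b in BUCKETS}

# Hypothetical ten-operation trace, for illustration only.
trace = [4, 4, 4, 8, 8, 32, 128, 128, 2, 512]
dist = size_distribution(trace)
for (low, high), pct in dist.items():
    print(f"[{low}k, {high}k): {pct:.0f}%")
```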

According to this data, it seems clearly incorrect to claim that it is only relevant to benchmark an array’s performance at 32k, or even only at high IO sizes in general (e.g. 32k+). Real-world workloads are performing a significant fraction of their IO below that level. This raises the question: why does this data seem to disagree with what others in the storage space have presented?

Examining Methodology

Based on the evidence that we at HPE Nimble Storage have seen on production arrays reporting into InfoSight, the dubious claim others have made, that real-world IO sizes are primarily around 32k, appears to be based purely upon an examination of array-level average IO size. The problem with looking only at averages is that doing so weights the larger IOs more heavily and hides the bimodality of the distribution (i.e., the fact that it includes many small IOs, many large IOs, and fewer IOs of intermediate size).

As an example: if a workload performed 10 operations that were 4k in size and 3 operations that were 128k in size, the average overall IO size for that set of operations would be 32.6k. This is despite the fact that more than 75% of the operations performed were 4k in size (and that none of the IOs performed were near 32k in size). To use an analogy: this average is like seeing a bunch of cyclists and a double-decker bus traveling down the road and calling the average vehicle a minivan.
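The arithmetic in this example is easy to check. The short sketch below uses only the numbers from the paragraph above; the variable names are illustrative.

```python
from statistics import mean, median

# The workload from the example: ten 4k operations and three 128k operations.
sizes_k = [4] * 10 + [128] * 3

avg = mean(sizes_k)                               # (10*4 + 3*128) / 13
share_4k = sizes_k.count(4) / len(sizes_k)        # fraction of operations at 4k
near_32k = [s for s in sizes_k if 16 <= s < 64]   # operations anywhere near 32k

print(f"mean IO size:   {avg:.1f}k")
print(f"median IO size: {median(sizes_k)}k")
print(f"share at 4k:    {share_4k:.1%}")
print(f"ops near 32k:   {len(near_32k)}")
```

The median, unlike the mean, lands on a size the workload actually uses, which is one reason a histogram or percentile view is more informative here than an average.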

Moving past hypotheticals, we can do the same analysis that other vendors have done (using our same InfoSight data on the same set of customer IO) and arrive at the same erroneous and easy-to-misinterpret result.

Averages Can Be Misleading

Looking at our own HPE Nimble Storage customer data again (the same set of arrays over the same month-long time period as was used to create the distributions in Figure 1), we can aggregate our information as others have done to illustrate how that analysis distorts the actual picture. If, rather than looking directly at the distribution of IO sizes that our customers performed, we (1) determine the average IO size for each customer array and then (2) count the number of arrays (rather than the number of IOs) that fall into each size interval, we see this:

Looking only at this picture, we might incorrectly infer that ~75% of IO was being performed between 16k and 64k (with ~12% below and ~12% above), but as we have seen from the histogram of actual IO sizes, only ~15% of the IO fell into that range (with ~61% below and ~24% above). Similarly, one might incorrectly conclude that IOs of 32k were more relevant for benchmarking than 4k. In fact, benchmarking at both high IO sizes (e.g. 64k+) and low IO sizes (e.g. ~4k) is important to accurately reflect the bulk of IO performed by real-world applications.
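The distortion can be reproduced with a toy data set. In the sketch below, three hypothetical arrays each run a bimodal mix of small and large IO. The array names and IO mixes are invented for illustration and are not InfoSight data. Counting arrays by their average IO size puts every array in the 16k to 64k interval, even though not a single operation falls there.

```python
# Hypothetical per-array IO traces (sizes in k); invented for illustration.
arrays = {
    "array_a": [4] * 70 + [128] * 30,
    "array_b": [8] * 80 + [128] * 20,
    "array_c": [4] * 60 + [64] * 40,
}

def in_mid_range(size_k):
    """True if the size falls in the 16k (inclusive) to 64k (exclusive) interval."""
    return 16 <= size_k < 64

# Step 1 of the flawed method: one average IO size per array.
array_means = {name: sum(s) / len(s) for name, s in arrays.items()}

# Step 2 of the flawed method: fraction of ARRAYS whose average is mid-range.
arrays_mid = sum(in_mid_range(m) for m in array_means.values()) / len(arrays)

# The direct measurement: fraction of individual IOs that are mid-range.
all_ios = [s for sizes in arrays.values() for s in sizes]
ios_mid = sum(in_mid_range(s) for s in all_ios) / len(all_ios)

print(array_means)
print(f"arrays with a mid-range average: {arrays_mid:.0%}")
print(f"IOs that are actually mid-range: {ios_mid:.0%}")
```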

Most operations happen at low IO sizes (between 4k and 8k) and most throughput happens at high IO sizes (64k+). For this reason, benchmarking for IOPS (with low IO sizes) and for throughput (at high IO sizes) are both important for understanding an array’s performance because they trace out the opposite extremes of what an array can do. That is unlikely to change anytime soon.
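The split between where operations happen and where throughput happens can be illustrated with a small hypothetical mix of ten 4k operations and three 128k operations. The mix and variable names are assumptions made for this sketch: counting operations favors the small IOs, while counting bytes favors the large ones.

```python
# Hypothetical mix: ten 4k operations and three 128k operations.
sizes_k = [4] * 10 + [128] * 3

total_ops = len(sizes_k)
total_kib = sum(sizes_k)

small = [s for s in sizes_k if s < 16]
large = [s for s in sizes_k if s >= 64]

ops_share_small = len(small) / total_ops    # share of operations at small sizes
bytes_share_large = sum(large) / total_kib  # share of data moved at large sizes

print(f"operations at small sizes: {ops_share_small:.1%}")
print(f"data moved at large sizes: {bytes_share_large:.1%}")
```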

It is disheartening to see storage vendors publish data that is coerced to downplay their own platform’s weak points, particularly when this data so starkly misrepresents the underlying observations. Benchmarking with a distribution of IO that is representative of real-world workloads is important. To do so requires appropriate measurement of what representative IO distributions really are, and passing off a distribution of array-level averages as a meaningful quantification of IO size is pure baloney.

Application-Level Information

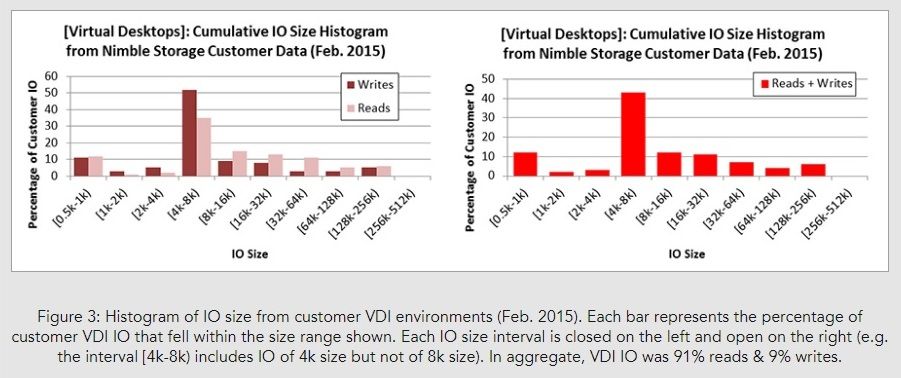

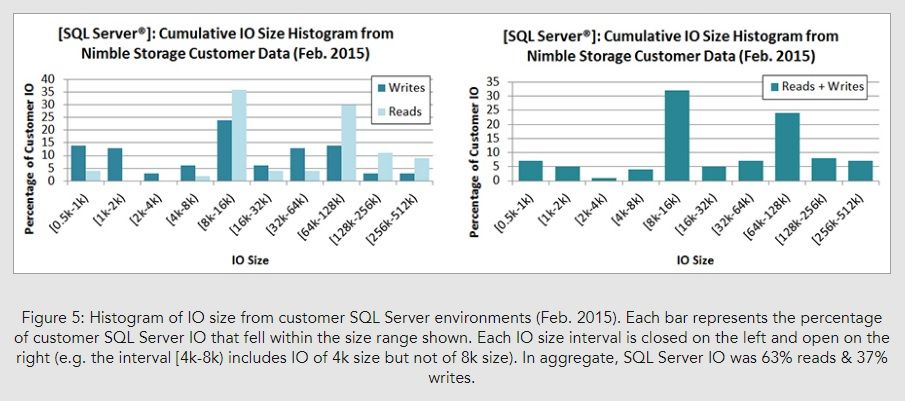

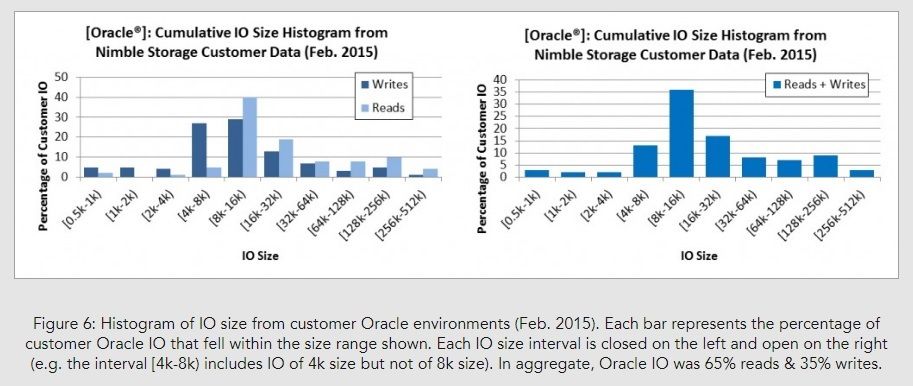

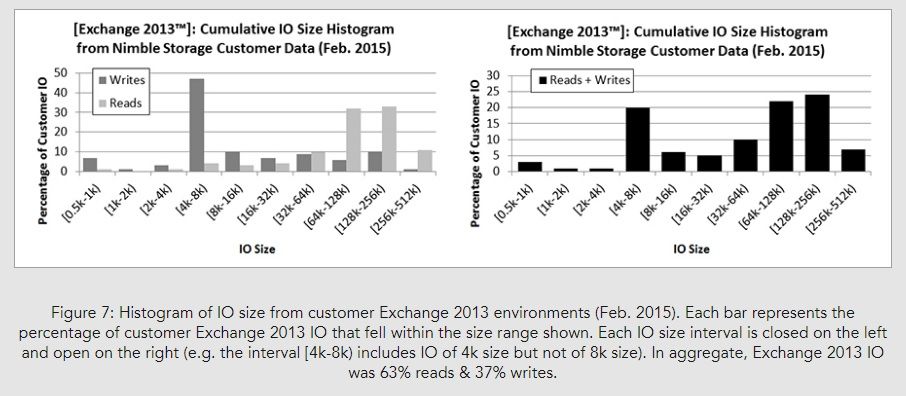

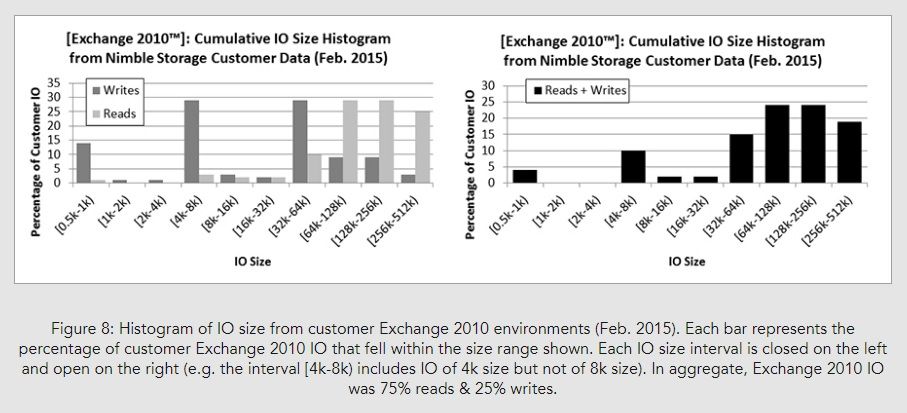

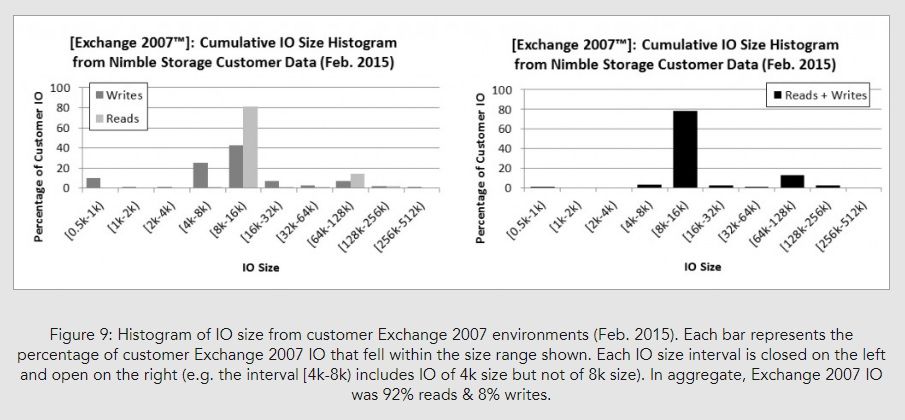

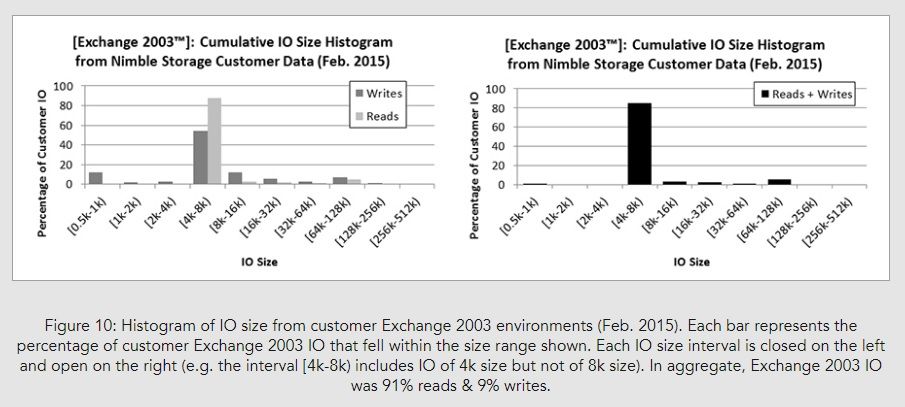

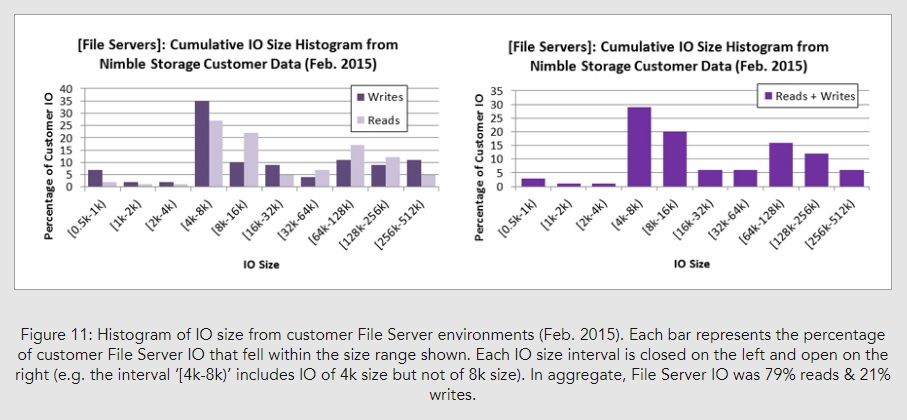

To further validate that our aggregations in Figure 1 are representative of real-world application activity, we have taken the additional step of breaking down our user IO into common application categories (Figures 3 through 11). From these additional histograms, we can see that the patterns we identified in the application-agnostic IO size histograms in Figure 1 (namely that [1] a large proportion of IO is below 16k in size and that a small proportion of IO is between 16k and 64k in size and [2] the IO size histogram is bimodal) are borne out on an application-by-application basis as well.

For those interested in benchmarking arrays for particular applications or combinations thereof, the histograms below can provide important insight into what a realistic distribution of IO would look like. Furthermore, tools like vscsiStats can enable storage administrators to quantify the IO size distributions within their own environments.
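Once an IO size histogram is in hand, one way to turn it into a benchmark mix is to sample IO sizes in proportion to the observed counts. The sketch below is a minimal illustration of that idea. The weights are placeholders rather than values read from the figures or from vscsiStats output, and the function name is hypothetical.

```python
import random
from collections import Counter

# Placeholder histogram: IO size in k -> relative weight. These numbers are
# NOT taken from the figures; substitute counts measured in your environment.
IO_SIZE_WEIGHTS = {4: 35, 8: 20, 16: 5, 32: 10, 64: 10, 128: 15, 256: 5}

def sample_io_sizes(weights, k, seed=0):
    """Draw k IO sizes with probability proportional to each size's weight."""
    rng = random.Random(seed)  # seeded so a run can be repeated
    sizes = list(weights)
    return rng.choices(sizes, weights=[weights[s] for s in sizes], k=k)

sample = sample_io_sizes(IO_SIZE_WEIGHTS, k=10_000)
observed = Counter(sample)
for size in sorted(observed):
    print(f"{size:>3}k: {observed[size] / len(sample):.1%}")
```

The sampled sizes could then drive whatever load generator is in use; the seed is fixed only so that a test run can be reproduced.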

I work at HPE

[Any personal opinions expressed are mine, and not official statements on behalf of Hewlett Packard Enterprise]
