- Community Home

- >

- Company

- >

- Advancing Life & Work

- >

- Solving intractable problems using in-memory compu...

Categories

Company

Local Language

Forums

Discussions

Knowledge Base

Forums

- Data Protection and Retention

- Entry Storage Systems

- Legacy

- Midrange and Enterprise Storage

- Storage Networking

- HPE Nimble Storage

Discussions

Forums

Discussions

Discussions

Forums

Discussions

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Discussion Boards

Community

Resources

Forums

Blogs

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Receive email notifications

- Printer Friendly Page

- Report Inappropriate Content

Solving intractable problems using in-memory computing with analog non-volatile devices

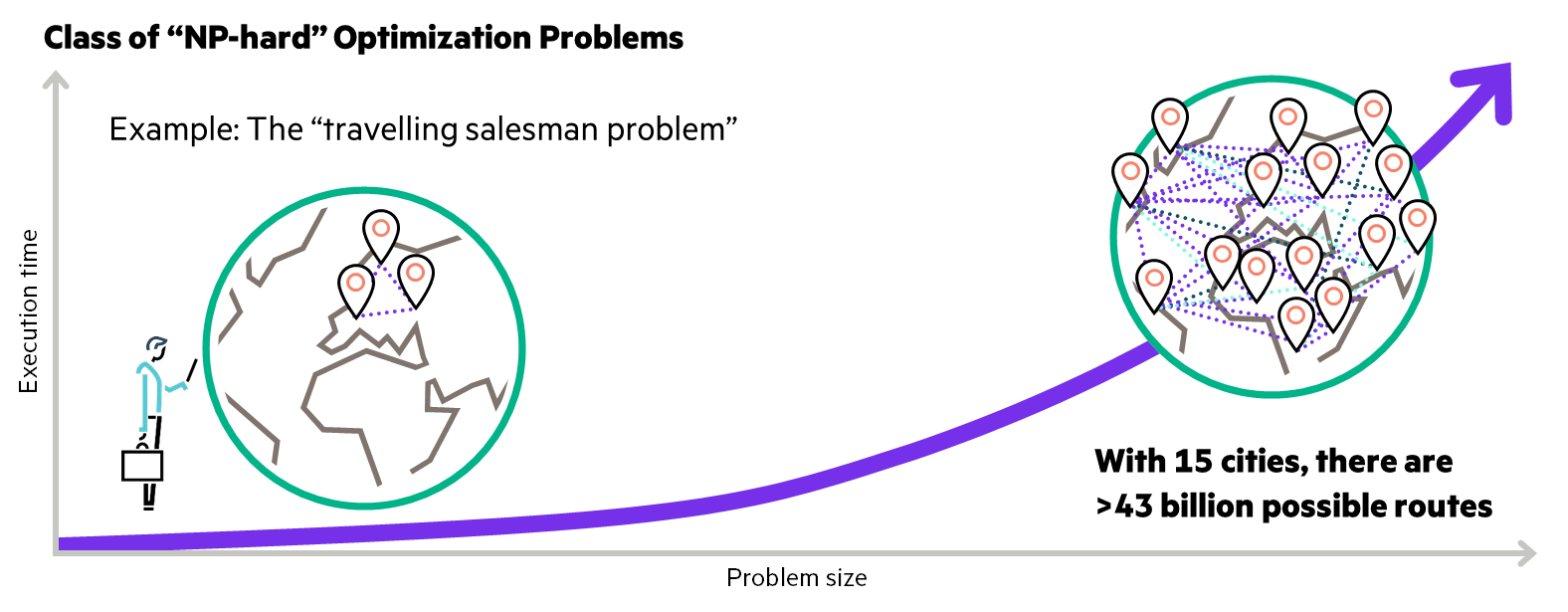

There is intense research to reinvent the way computers work and keep us on the exponential performance curve to which we’ve all become accustomed. This remarkable trend has resulted in an exponentially growing appetite for computing, particularly to extract insights from the tremendous amounts of digital data produced every day. Nonetheless, there has been an ever-present class of computing problems, known as NP-hard problems, which has stymied even modern systems. For problems in this class, the time to solve them grows exponentially when the problem size itself only grows linearly. For instance, what do you think it takes to schedule the NFL games among 32 teams? Answer: a computer that fills up an entire living room, with 1000 cores, running non-stop for 3 months, to produce a handful of candidate schedules that can satisfy all of the constraints. In other words, the work of constructing next year’s schedule has to begin soon after the prior season ends. With 3 teams, this problem would have been trivial, but already with 32, it is monstrous – this is the challenge of NP-hard problems, and why they can choke our best computers. There are many other NP hard problems of profound and urgent importance, including planning a postal delivery route (aka, the traveling salesman problem, illustrated in Figure 1), sequencing of viral genomes for drug discovery, scheduling the crews on airline flights, routing in VLSI designs, and bandwidth optimization in satellite communication.

Figure 1: Illustration of the NP-hard Traveling Salesman problem. A salesman wants to visit N cities and find the shortest route. The execution time to find this grows exponentially with the number of cities to visit.

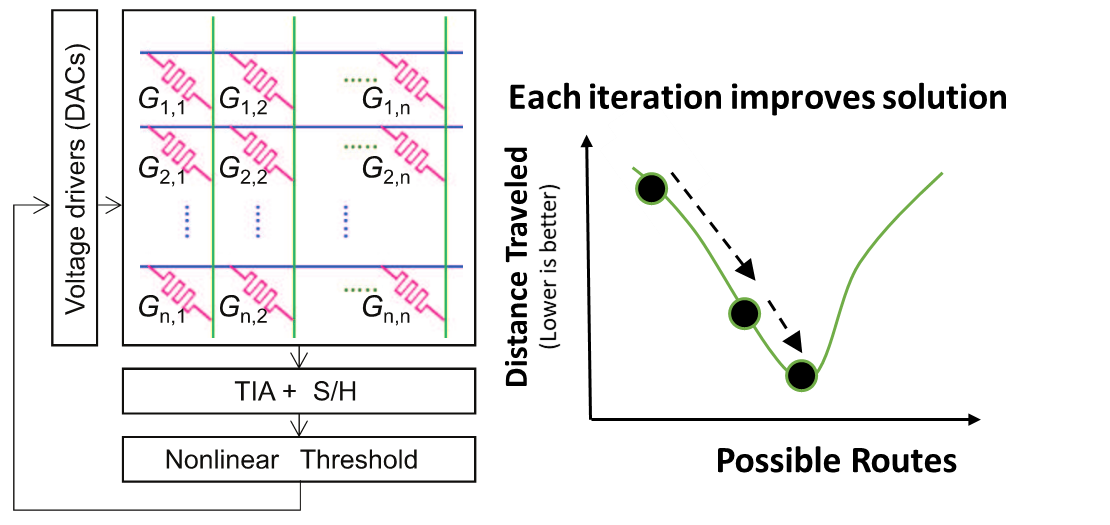

One of the foundational developments in the history of AI research came when John Hopfield developed a model for how associative memories could be constructed using recurrent (feedback-enabled) networks. These came to be called Hopfield neural networks (see Figure 2), and have since found applications beyond memories, including a means to encode challenging optimization problems and, in an iterative process, slowly converge on solution minima. We had previously hypothesized that simple crossbar arrays of analog non-volatile devices (including memristors, two terminal devices that store information in their resistance state) could be used to construct very fast-running and low power Hopfield networks (Nature 548, 318 (2017)). Over the next years, we refined the ideas and developed the needed tricks to not just perform the dominant linear algebra operations in the analog domain, but to inject and control the amount of noise in the network to dislodge the system from local minima, which are sub-optimal solutions. While our previous work on the Dot Product Engine used a similar crossbar system to accelerate matrix operations for today’s machine learning and signal processing applications, in those cases we went to great lengths to mitigate any noise issues, including keeping the arrays smaller and only representing a few bits in each device. For the Hopfield network, we discovered that such noise could be advantageous and developed ways to turn it on and off as needed.

Figure 2 (Left): A Hopfield neural network can be built around analog crossbar arrays to solve such NP-hard problems. (Right) The Hopfield network is a recurrent (feedback) network in which candidate solutions are rapidly explored iteratively and improved upon, with a guarantee to converge on minima. The work described here found ways to efficiently inject and control noise to speed-up convergence to globally optimal solutions.

We have now built prototype experimental systems to run these Hopfield networks and shown they can solve these NP-hard optimization problems. Based on these experiments, and expanded simulation studies, we project the performance of the system at a 16nm CMOS node to exceed the best CPUs and GPUs by a factor of 10,000 in energy consumed to solve any given optimization problem. In fact, we project our memristor Hopfield network to outperform any competing quantum or table-top optical approaches by many orders of magnitude as well.

The work published recently in Nature Electronics has been a productive collaboration across Hewlett Packard Labs and several universities, including Fuxi Cai from University of Michigan who started the work as an intern at Labs (read the Nature Community blog post about the paper). Additionally, we have leveraged significant prior work from Labs in understanding and engineering the material stack to build high-performance RRAM/memristors, as well as circuit and board designs for driving and sensing the crossbar arrays in a highly parallel mode of operation. Our collaborators also helped us perform rigorous benchmark comparisons to other technologies, including non-traditional systems like DWave, and supported the scaled forecasts of our chips. The present work is one milestone in the exploration of new ways to speed-up old problems. We look forward to sharing progress in this exciting field, as well as other emerging accelerators.

- Back to Blog

- Newer Article

- Older Article

- MandyLott on: HPE Learning Partners share how to make the most o...

- thepersonalhelp on: Bridging the Gap Between Academia and Industry

- Karyl Miller on: How certifications are pushing women in tech ahead...

- Drew Lietzow on: IDPD 2021 - HPE Celebrates International Day of Pe...

- JillSweeneyTech on: HPE Tech Talk Podcast - New GreenLake Lighthouse: ...

- Fahima on: HPE Discover 2021: The Hybrid Cloud sessions you d...

- Punit Chandra D on: HPE Codewars, India is back and it is virtual

- JillSweeneyTech on: An experiment in Leadership – Planning to restart ...

- JillSweeneyTech on: HPE Tech Talk Podcast - Growing Up in Tech, Ep.13

- Kannan Annaswamy on: HPE Accelerating Impact positively benefits 360 mi...