
Data versioning for data science: data fabric snapshots improve data and model management

But can you do it again? What happens when you need the exact training data used to train a particular model at a particular point in time, and you have to pick it out from among hundreds of stored models? At some point, you will need to find and reuse a specific set of training data if you are to keep succeeding.

The ability to keep accurate point-in-time versions of data is particularly important in AI and machine learning systems, but it’s not limited to those projects. This key capability is also useful for analytics in general.

Is it possible to handle data versioning effectively in a large-scale, complex system built across multiple locations? The answer is yes.

Why data versioning matters

One reason data versioning matters is that machine learning and AI are iterative processes. Code is written for a new model, and the model is then trained by exposure to carefully selected training data. The nature of the resulting model depends directly on the data used to train it, as well as on the learning algorithm and parameter settings. Model performance is evaluated, and to reach the desired performance it is often necessary to tweak the learning algorithm or adjust the training data and then carry out the training process again and again.

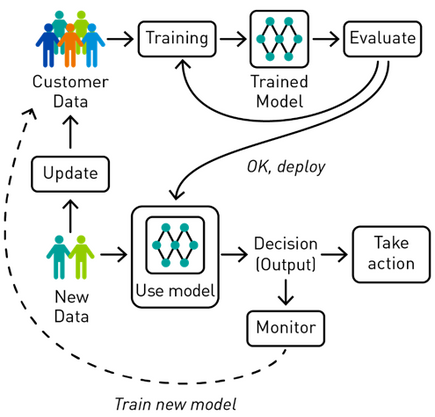

Even after a model is deployed in production, the process continues. Ongoing monitoring of model behavior may trigger the need to start over and train new models. The world constantly changes, so the fitness of a trained model may also change, requiring retraining on new data or with new settings, or even an entirely new model. But if everything is changing, how can you tell what is working and what is not? Being able to access exactly the data set used to train a particular model is also important for accurate model-to-model comparisons. This ongoing cycle of training, evaluation, adjustment, and retraining (or development of new models) is depicted in Figure 1.

Figure 1. Machine learning is an iterative process for which training data is key

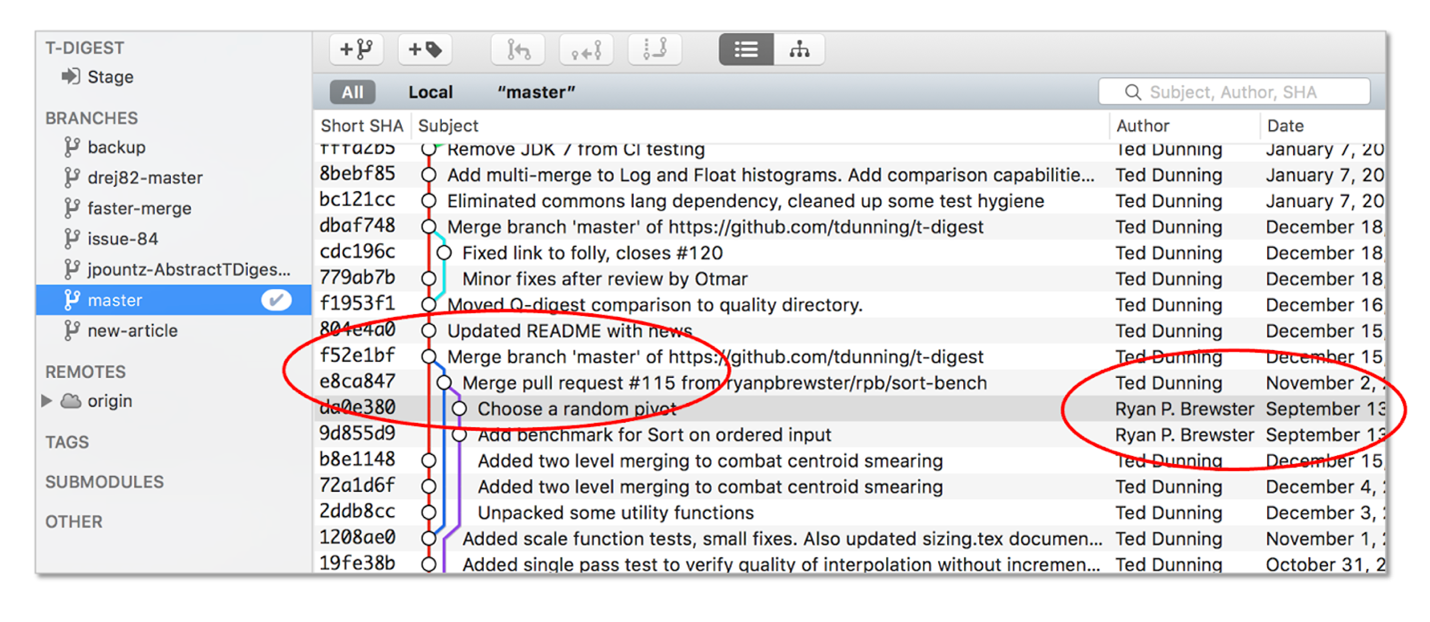

To be successful, data scientists need to find, easily and accurately, the exact data seen by a particular model, or the outputs of a specific iteration of the system, so they can control what changes between tests. Think of it this way: data versioning is the complement to a familiar concept, version control for code. A tool such as GitHub may be used to keep track of different versions of code and of who has forked a new version, as shown in Figure 2.

Version control is just as essential for data as it is for code. Data version control can be challenging even at moderate scale, in part because so many models are involved, and the challenge becomes much greater in large-scale, complex systems.
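To make "find the exact data seen by a particular model" concrete, here is a minimal sketch of the bookkeeping involved. It assumes the training data for a run lives in one directory (for example, a snapshot path) and that a plain JSON-lines file acts as a registry. The function names, the registry format, and the snapshot name in the usage are hypothetical illustrations, not part of HPE Ezmeral Data Fabric.

```python
import hashlib
import json
from pathlib import Path
from typing import Optional


def dataset_fingerprint(root: Path) -> str:
    """Hash every file under root (relative path + contents) in a stable order."""
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        data = path.read_bytes()
        # Include the relative path so renames also change the fingerprint,
        # and the length so file boundaries are unambiguous.
        digest.update(path.relative_to(root).as_posix().encode())
        digest.update(b"\0")
        digest.update(len(data).to_bytes(8, "big"))
        digest.update(data)
    return digest.hexdigest()


def record_training_run(registry: Path, model_id: str, data_root: Path, data_version: str) -> dict:
    """Append a (hypothetical) model-to-data record to a JSON-lines registry."""
    entry = {
        "model_id": model_id,
        "data_version": data_version,  # e.g. the name of a snapshot
        "data_fingerprint": dataset_fingerprint(data_root),
    }
    with registry.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def find_training_data(registry: Path, model_id: str) -> Optional[dict]:
    """Look up which data version a given model was trained on."""
    with registry.open(encoding="utf-8") as f:
        for line in f:
            entry = json.loads(line)
            if entry["model_id"] == model_id:
                return entry
    return None
```

The fingerprint lets you check later that the data you retrieved is byte-for-byte what the model saw; the version name tells you where to look for it.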

Data fabric snapshots: built-in data versioning at scale

Data infrastructure designed for unified analytics and data science in large-scale systems should provide efficient, built-in data versioning. HPE Ezmeral Data Fabric File and Object Store is a software-defined solution engineered to handle data storage, management, and motion in a unified layer from edge to data center, whether on premises or in the cloud.

Data fabric snapshots make data version control efficient and convenient because they provide an accurate point-in-time version of data, even if that data is enormous. Snapshots in the data fabric are taken at the level of the data fabric volume, which is like a directory but with management handles on it. The data fabric volume is your superpower for data management: files, directories, tables, objects, and event streams are all engineered as part of the data fabric and are stored and managed using data fabric volumes.
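The key property of a snapshot is that later writes do not change what the snapshot shows. The toy model below illustrates only those point-in-time semantics, under the assumption that a volume can be pictured as a table mapping paths to contents; `ToyVolume` and its methods are hypothetical, and this is not how HPE Ezmeral Data Fabric implements snapshots internally.

```python
from types import MappingProxyType
from typing import Dict, Mapping, Optional


class ToyVolume:
    """In-memory stand-in for a volume: path -> file contents."""

    def __init__(self) -> None:
        self._files: Dict[str, bytes] = {}
        self._snapshots: Dict[str, Mapping[str, bytes]] = {}

    def write(self, path: str, data: bytes) -> None:
        # Replace the table instead of mutating it, so any snapshot that
        # references the old table keeps seeing the old contents.
        new_files = dict(self._files)
        new_files[path] = data
        self._files = new_files

    def delete(self, path: str) -> None:
        new_files = dict(self._files)
        new_files.pop(path, None)
        self._files = new_files

    def snapshot(self, name: str) -> None:
        # A snapshot is a read-only reference to the current table;
        # no file contents are copied when it is taken.
        if name in self._snapshots:
            raise ValueError(f"snapshot {name!r} already exists")
        self._snapshots[name] = MappingProxyType(self._files)

    def read(self, path: str, snapshot: Optional[str] = None) -> bytes:
        table = self._files if snapshot is None else self._snapshots[snapshot]
        return table[path]
```

Because taking a snapshot only records a reference, its cost does not depend on how much data the volume holds, which is the intuition behind snapshots staying practical even when the data is enormous.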

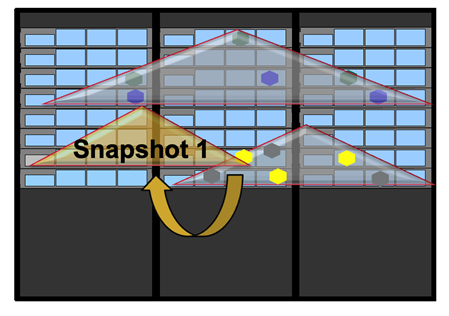

Data fabric volumes, by default, spread across an entire data fabric cluster for better data safety and performance, as shown in Figure 3. Volumes are represented in the figure by large transparent triangles containing multiple data replicas depicted as colored hexagons.

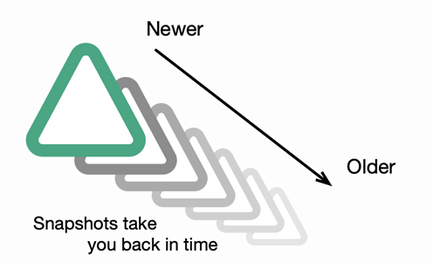

Snapshots of each volume can be created manually or on a schedule, making them ideal for preserving versions of data. This repeatable process is essential for building and training models successfully over time, and it also protects against human or machine errors that could unintentionally delete or alter data. In a way, data fabric snapshots serve as a time machine for data, as suggested by Figure 4 (the green triangle is the original data fabric volume, and each grey triangle represents a snapshot made at a different point in time).

Making a snapshot is easy and takes less time than forking a repository on GitHub, so snapshots don't eat into the time budgeted for machine learning and AI projects.

Efficient data version control in a large-scale system solves a major challenge for data science and unified analytics, but deploying models is a challenge as well. Controlling the deployment of trained models accurately and consistently, even across multiple locations including the edge, is also key to success. Once again, data fabric volumes provide a built-in solution.
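With scheduled snapshots, using the "time machine" often comes down to one question: which snapshot was current when a given model was trained? A minimal sketch, assuming you already have a list of (creation time, snapshot name) pairs; how you obtain that list from the data fabric is outside this example, and the helper name and snapshot names are hypothetical.

```python
import bisect
from datetime import datetime
from typing import List, Optional, Tuple


def snapshot_as_of(snapshots: List[Tuple[datetime, str]], when: datetime) -> Optional[str]:
    """Return the most recent snapshot taken at or before `when`, or None."""
    ordered = sorted(snapshots)
    times = [t for t, _ in ordered]
    # bisect_right lands just past any snapshot taken exactly at `when`,
    # so the entry before it is the last one with time <= when.
    index = bisect.bisect_right(times, when)
    if index == 0:
        return None  # no snapshot existed yet at that time
    return ordered[index - 1][1]


snaps = [
    (datetime(2021, 12, 1), "daily-2021-12-01"),
    (datetime(2021, 12, 2), "daily-2021-12-02"),
    (datetime(2021, 12, 3), "daily-2021-12-03"),
]
print(snapshot_as_of(snaps, datetime(2021, 12, 2, 15, 0)))  # daily-2021-12-02
```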

Mirroring: deploy models to multiple locations with confidence

Data fabric mirroring is an efficient way to move any kind of data, including code and executable programs. This makes mirroring ideal for deploying the exact same model to multiple locations, which is often important for systems that include edge computing.

In addition, mirrors use snapshots internally, so when you mirror a data fabric volume, changes to the entire volume are applied atomically: no partial changes are ever visible. As a result, coordinated changes across multiple files stay coordinated at the mirror destination. This is particularly useful when deploying models, because the model code and configuration files stay consistent with each other as new models are deployed.
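The "all or nothing" behavior described above can be illustrated with a familiar single-machine analogy: write a complete model version into its own directory, then atomically repoint a `current` symlink at it. This sketch assumes a POSIX filesystem and a hypothetical `deploy_root` layout; it is an analogy for why atomic application keeps model code and configuration consistent, not the data fabric mirroring mechanism itself.

```python
import os
from pathlib import Path
from typing import Dict


def deploy_model(deploy_root: Path, version: str, files: Dict[str, bytes]) -> Path:
    """Publish a model version so readers of deploy_root/current see either
    the complete old set of files or the complete new set, never a mix."""
    version_dir = deploy_root / "versions" / version
    version_dir.mkdir(parents=True, exist_ok=False)
    # 1. Write every file (weights, config, code) into a fresh directory.
    for name, data in files.items():
        (version_dir / name).write_bytes(data)
    # 2. Create the new symlink under a temporary name. The target is
    #    relative, resolved from the directory that holds the link.
    tmp_link = deploy_root / f".current-{version}.tmp"
    os.symlink(Path("versions") / version, tmp_link)
    # 3. Rename it over the old link; os.replace is atomic on POSIX when
    #    both names are on the same filesystem.
    os.replace(tmp_link, deploy_root / "current")
    return version_dir
```

Readers always open files through `current`, so a reader that starts after the swap sees only the new weights and the new configuration together.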

Successful data and model management

Keeping track of exactly what data was used to train each successful model can be challenging when working at large scale. Data fabric snapshots and mirroring make data version control and model deployment much more predictable. And that, in turn, makes success more likely to be repeatable.

For more on working with data at large scale for AI/machine learning and analytics:

Watch the video replays of presentations from HPE Data World December 2021

Read the blog post “Escape the maze: manage large-scale complex systems with flexibility and simplicity”

Read the blog post “Dataspaces: how an open metadata layer can establish a trustworthy data pipeline”

Download a free PDF of the O’Reilly ebook AI and Analytics at Scale: Lessons from Real-World Production Systems, © 2021 by Ted Dunning and Ellen Friedman

Ellen Friedman

Hewlett Packard Enterprise


Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Before her current role at HPE, Ellen worked at MapR Technologies for seven years, during which she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
