Data in action: How to remove barriers to data use

The answer is almost certainly “both” given that there is a cost in both resources and human effort to collect, store, maintain and use it. When people talk about the large scale of their data, they are usually talking about the total amount of data they store, not the amount of data they actually use. Unfortunately, these two quantities are usually very different.

Research groups estimate that between 55% and 75% of the data organizations hold goes unused. That’s a large amount of costly, wasted data. And the cost is not just the expense of storing and maintaining data at large scale. It’s also an opportunity cost: the value that unused data could have delivered. Furthermore, unused data, sometimes called “dark data”, can also increase security risks.
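If you want a rough sense of how much of your own data falls into that unused category, one approach is to compare the volume you store with the volume that has actually been read recently. Here is a minimal Python sketch, assuming you can export a dataset inventory with sizes and last-read timestamps from your storage system’s access or audit logs. The `Dataset` class, the `unused_fraction` function, the 180-day window, and the sample inventory are all hypothetical illustrations, not output from any real system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Dataset:
    """One stored dataset (hypothetical inventory record)."""
    name: str
    size_gb: int
    last_read: Optional[datetime]  # None means never read since it was written


def unused_fraction(datasets: List[Dataset], now: datetime,
                    window: timedelta = timedelta(days=180)) -> float:
    """Share of stored volume not read within `window` before `now`.

    The 180-day default is an arbitrary assumption; pick a window that
    matches how your organization defines "unused".
    """
    total = sum(d.size_gb for d in datasets)
    if total == 0:
        return 0.0
    cutoff = now - window
    unused = sum(
        d.size_gb
        for d in datasets
        if d.last_read is None or d.last_read < cutoff
    )
    return unused / total


# Illustrative inventory with made-up sizes and dates.
now = datetime(2021, 6, 1)
inventory = [
    Dataset("clickstream_raw", 600, None),
    Dataset("sales_curated", 300, datetime(2021, 5, 20)),
    Dataset("sensor_archive", 100, datetime(2020, 1, 15)),
]
print(f"{unused_fraction(inventory, now):.0%}")  # 70%
```

Measuring by volume rather than by dataset count is a deliberate choice here, since storage cost scales with size; a count-based measure would weight a tiny lookup table the same as a multi-terabyte archive.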

The good news is that there are ways to remove the barriers to data use. Before looking at how you can do that, let’s first consider why so much data goes unused.

What stands in the way of data use?

To get value from data, you need the right approach with modern analytics or AI applications that can unlock the value of data and provide insights or even take direct action. But before you think about what types of algorithms and approaches may help, look first to the data: if you don’t have easy access to the data you need, in a form usable by the tools you choose, you won’t be able to get value from it.

Data silos present a major barrier to data use by multiple groups. In a recent article, “Harness data’s value from edge to cloud”, Joann Starke, HPE Ezmeral subject matter expert, pointed out that “Delivering connected data across siloed initiatives is hard.” It’s hard because data is stored in different formats, across different environments, and on many different platforms. Each of these operates as an independent silo, which inhibits an organization’s ability to harness the value locked within the data.

Getting the data you need also requires knowing that the data exists. This sounds simple, but it’s actually a challenge, both within and between organizations. Data producers may not know how data will be used later on, so data may not be easily identifiable for new uses. Janice Zdankus and Robert Christensen, both from the Office of the CTO at HPE, explained this challenge in the article “Getting value from data shouldn’t be this hard”. They said, “If a data producer has consumers—which can be inside or outside the organization—they have to connect to the data. That's both an organizational and a technology challenge”. One aspect of the technology challenge is having data, and being able to access and use it, where you need it, whether that’s at edge locations, on-premises, or in multiple clouds.

Another technology challenge that can stand in the way of data use, and particularly of valuable data reuse, is being able to afford to keep large data sets, especially unstructured data, that could be valuable for speculative AI and machine learning projects. Of course, large amounts of data are retained because they have been processed for specific uses or must be kept for regulatory compliance or governance reasons. But the raw data is often discarded after its initial use. Unless your data infrastructure is scalable and cost-efficient enough that keeping data after its first use is a reasonable choice, you may miss out on the advantage of a second project that could have reused it.

These potential barriers to data use don’t have to be show-stoppers, however. There are ways you can reduce or remove these obstructions and increase the percentage of your data that gets put to good use.

How your data platform can remove barriers to data use

Developing a comprehensive data strategy in an enterprise environment where data is spread from edge to cloud is an organizational approach that can improve access to data. And better access leads to better data use. This approach starts by unifying different data types from edge to cloud. Joann Starke points out that a “single logical data layer enables reuse of data across different use cases and applications reducing the need to create and maintain separate infrastructures by data type.”

HPE Ezmeral Data Fabric File and Object Store is the industry’s first edge-to-cloud data fabric that unifies different data types, storing and processing them in a single logical infrastructure. This increases data integrity and trust in the analytics driving your business performance.

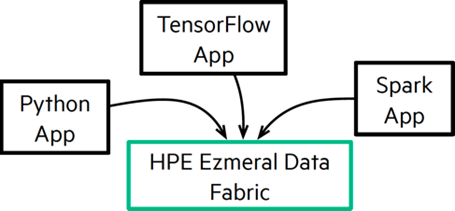

Data fabric provides a unified data layer with highly flexible data access across your enterprise.

Different applications directly access the same data layer provided by HPE Ezmeral Data Fabric.

Data fabric’s open multi-API access discourages the creation of data silos and makes workflows simpler and more efficient. It makes it easier to share services and core functionality, reducing data management complexity and the need for infrastructure dedicated to each data type. Multi-protocol access allows multiple use cases and workloads to leverage the same data sets, reducing the analytics infrastructure required. And it delivers faster time to insight by eliminating the endless cycle of merging, remerging, and redeploying existing silos into new silos, removing these potential barriers to data use.

Another challenge to data use is knowing what data exists and finding the data you need. While there are many facets to this challenge, data fabric’s global namespace makes it much easier to find data wherever it is located. That brings us to another technology challenge for data use: getting convenient access to data where you need it, whether at edge locations, in multiple clouds, or in different on-premises data centers. Data fabric’s built-in mirroring provides an efficient way to move large amounts of data, and data fabric also gives you the option of remote access, even from a data center or the cloud back to data at the edge.
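To make the ideas of a global namespace, remote access, and mirroring more concrete, here is a toy Python model. It is a conceptual sketch only, not HPE Ezmeral Data Fabric’s API or implementation: the `GlobalNamespace` class, its methods, and the site and path names are all hypothetical. It shows one logical path tree that can be searched regardless of where data physically lives, reading data that sits at another site, and mirroring a copy so the same logical path can be served locally.

```python
from typing import Dict, List


class GlobalNamespace:
    """Toy model (hypothetical, not a real product API) of one logical
    path tree spanning many sites. Each logical path maps to the list of
    sites that hold a copy of its data."""

    def __init__(self) -> None:
        self._replicas: Dict[str, List[str]] = {}

    def add(self, path: str, site: str) -> None:
        """Register data written at `site` under a logical `path`."""
        self._replicas[path] = [site]

    def mirror(self, path: str, target_site: str) -> None:
        """Record that a mirrored copy of `path` now exists at `target_site`."""
        sites = self._replicas[path]
        if target_site not in sites:
            sites.append(target_site)

    def find(self, keyword: str) -> List[str]:
        """Search logical paths, regardless of where the data physically lives."""
        return sorted(p for p in self._replicas if keyword in p)

    def resolve(self, path: str, local_site: str) -> str:
        """Pick the site to read from: the local copy if one exists,
        otherwise the original site (remote access)."""
        sites = self._replicas[path]
        return local_site if local_site in sites else sites[0]


ns = GlobalNamespace()
ns.add("/sensors/line3/2021-06.parquet", "edge-factory-1")
ns.add("/sales/curated/2021-q2.parquet", "on-prem-dc")
ns.add("/sensors/archive/2020.parquet", "cloud-region-a")

print(ns.find("sensors"))
# ['/sensors/archive/2020.parquet', '/sensors/line3/2021-06.parquet']

# Without a mirror, a job in the cloud reads the edge data remotely.
print(ns.resolve("/sensors/line3/2021-06.parquet", "cloud-region-a"))  # edge-factory-1

# After mirroring, the same logical path is served from the local copy.
ns.mirror("/sensors/line3/2021-06.parquet", "cloud-region-a")
print(ns.resolve("/sensors/line3/2021-06.parquet", "cloud-region-a"))  # cloud-region-a
```

The key point the toy captures is that applications use the same logical path before and after the data moves; only the physical source changes. A real mirror copies the data itself, while this sketch only records where copies live.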

HPE Ezmeral Data Fabric protects your investment in existing analytic infrastructures, such as data lakes, warehouses, and cloud, edge, and on-premises deployments. This enables companies to modernize without low-value refactoring. They can gradually retire existing infrastructure or simply build new applications on top of existing data sets. Data stored and managed by HPE Ezmeral Data Fabric is analytics-ready because the data is directly available via flexible, open access. The HPE Ezmeral Ecosystem Pack provides certified open-source tools and engines that layer directly on top of the data fabric to enable in-place analytics no matter where the data is located. It also reduces the time spent integrating and configuring open-source tools.

HPE Ezmeral Data Fabric’s extreme scalability makes it feasible and cost-effective to retain large volumes of files, objects, NoSQL database tables, and streaming data, and to analyze that data in real time to provide the real-time and predictive models your business needs.

No more lazy data!

The best way to realize the full potential of modern analytics and AI/machine learning approaches is to put more of your data to use. Building a comprehensive data strategy that is supported by a unifying data infrastructure can go a long way toward waking up lazy data and putting it into action.

To learn more about closing the gap between data and data consumers, watch this short video about the “Modern Data Platform”.

Hewlett Packard Enterprise

twitter.com/HPE_Ezmeral

linkedin.com/showcase/hpe-ezmeral

hpe.com/software

Ellen_Friedman

Ellen Friedman is a principal technologist at HPE focused on large-scale data analytics and machine learning. Before her current role at HPE, Ellen worked at MapR Technologies for seven years, during which she was a committer for the Apache Drill and Apache Mahout open source projects. She is a co-author of multiple books published by O’Reilly Media, including AI & Analytics in Production, Machine Learning Logistics, and the Practical Machine Learning series.
