
HCI optimized for edge, part 5: Space and scalability

This technical blog series explains how AI and hyperconverged infrastructure simplify management and control costs at the edge. Part 5 explores HPE SimpliVity arbitration and its role in HA and datacenter space savings, then moves on to simple scalability in the HCI environment.

It’s time to talk about arbitration and its role in HA and datacenter space savings. I’ll also explain how the hyperconverged architecture is designed to prevent data corruption in a 2-node configuration. I’ll touch on simple scalability, as well: how the environment can seamlessly grow from 2 to N nodes.

Arbitration – the importance of a witness

IT organizations want to keep their footprint as small as possible to save space. A minimum of two nodes is required to achieve HA, but for cases where the two nodes “disagree,” this architecture requires a third point of contact known as an arbiter (AKA witness). In the event of a node failure, this witness ensures data availability and prevents a condition called split brain, which can lead to data corruption.

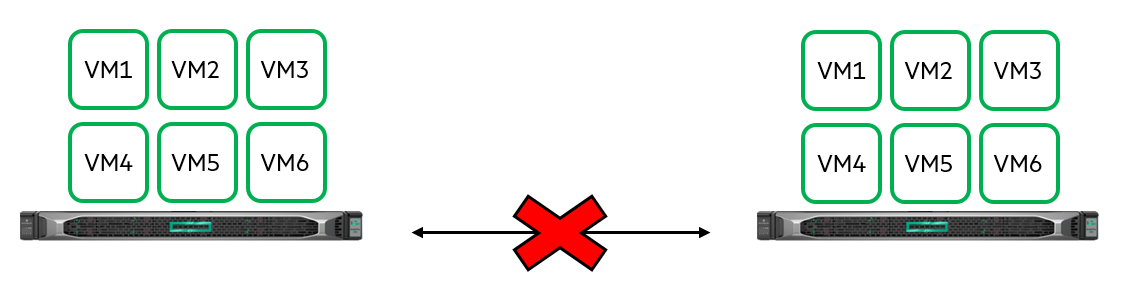

There are a number of scenarios that could result in a split brain condition. The most obvious is a loss of communication between the nodes. Remember, HPE SimpliVity writes a copy of all data to both nodes in our 2-node cluster so the system can provide HA in the event of a node loss. Because the secondary copies of the VMs remain available if a node fails, VMware HA can simply bring them up on the surviving node.

Let’s imagine that these nodes cannot communicate for whatever reason, but each one continues to service read/write operations. Even worse, each node may bring all of the VMs online, including those that normally run on the other node, because each node “assumes” the other node is down.

In this split brain scenario (depicted above), multiple copies of the same VMs are running and changing their data independently on each node. Once communication is restored between the nodes, things could go very badly. Which node has the most current VM data? How do we choose which side to restore from? It is very unlikely that the data can simply be merged, so one dataset must be selected over the other.

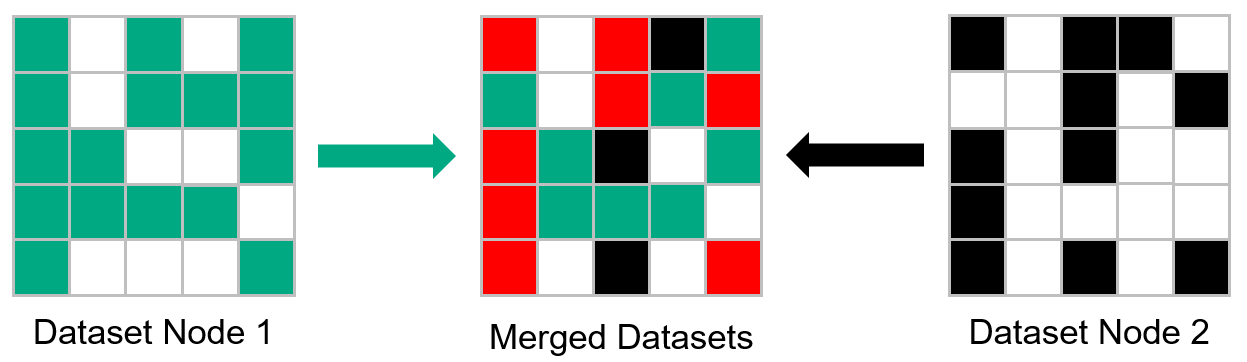

In this diagram, green blocks represent data on Node 1, and black blocks represent data on Node 2. These two datasets share common blocks that may or may not still hold the same information. Remember, both sets were identical until communication was lost, and they began to diverge because the nodes have been running independently. Attempting to simply merge the two datasets would corrupt the overlapping blocks that changed on both sides (represented by the red blocks), and with them the data as a whole.
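To make the merge problem concrete, here is a toy model, not how HPE SimpliVity stores data. It assumes each dataset is a dictionary from block address to block contents and that a snapshot of the common pre-partition state (`base`) is available; the function name `classify_blocks` is hypothetical. It sorts blocks into those changed on only one side and those changed on both sides to different contents (the red blocks), for which no automatic choice is correct.

```python
# Toy model only: a dataset is a dict of block address -> block contents.
# All names here are hypothetical and for illustration.

def classify_blocks(base, node1, node2):
    """Compare two diverged copies against their shared pre-partition state.

    Returns three sets of block addresses:
      only_node1  - changed on Node 1 only
      only_node2  - changed on Node 2 only
      conflicting - changed on both nodes to different contents (the "red" blocks)
    Blocks that both nodes changed to identical contents land in none of the sets.
    """
    addresses = set(base) | set(node1) | set(node2)
    only_node1, only_node2, conflicting = set(), set(), set()
    for addr in addresses:
        original = base.get(addr)
        a, b = node1.get(addr), node2.get(addr)
        changed1, changed2 = a != original, b != original
        if changed1 and changed2 and a != b:
            conflicting.add(addr)
        elif changed1 and not changed2:
            only_node1.add(addr)
        elif changed2 and not changed1:
            only_node2.add(addr)
    return only_node1, only_node2, conflicting


base = {0: "A", 1: "B", 2: "C"}
node1 = {0: "A", 1: "B1", 2: "C1"}   # Node 1 wrote blocks 1 and 2
node2 = {0: "A2", 1: "B", 2: "C2"}   # Node 2 wrote blocks 0 and 2
print(classify_blocks(base, node1, node2))
```

Even the blocks changed on only one side are not safe to combine in practice, because a VM's file system expects all of its blocks to be consistent with one another. That is why the article's conclusion holds: one dataset has to win outright.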

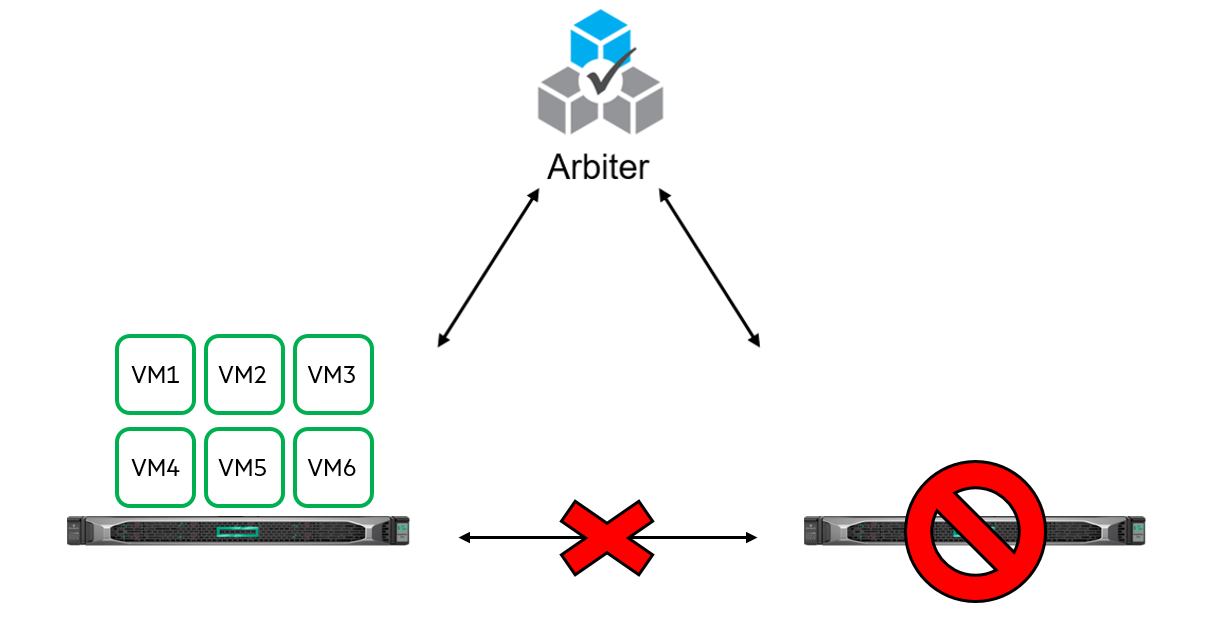

To prevent such a situation, we use the HPE SimpliVity Arbiter as a witness. In the event of a communication issue between the nodes, the Arbiter elects one node over the other through a number of logical rules, and that primary node remains online and restarts any VMs associated with the secondary node. The secondary node simply takes itself offline to prevent any chance of data being written. Once communication is restored, the secondary node is brought back up, and changes on the primary node are quickly synchronized to the secondary node.
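The article does not spell out the Arbiter's election rules, so the following is only a generic majority-quorum sketch of why a third voter breaks the tie. It assumes three voters (Node 1, Node 2, and the witness), a witness that grants its single vote to the first node that asks (a stand-in for the real "logical rules"), and a node that stays online only if it holds a majority. The `Witness` class and `stays_online` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Witness:
    """Hypothetical witness: grants its one vote to at most one node."""
    granted_to: Optional[str] = None

    def request_vote(self, node_id: str) -> bool:
        # First requester wins; a different node asking later is refused.
        if self.granted_to is None:
            self.granted_to = node_id
        return self.granted_to == node_id


def stays_online(node_id: str, peer_reachable: bool,
                 witness_reachable: bool, witness: Witness) -> bool:
    """Return True if this node may keep serving I/O under a 2-of-3 majority."""
    if peer_reachable:
        # Both nodes can see each other: the copies stay in sync, no arbitration needed.
        return True
    votes = 1  # the node's own vote; 1 of 3 is not a majority
    if witness_reachable and witness.request_vote(node_id):
        votes += 1
    return votes >= 2  # majority of three voters


# Split brain case: the nodes lose each other but both still reach the witness.
w = Witness()
print(stays_online("node1", False, True, w))  # node1 wins the witness vote
print(stays_online("node2", False, True, w))  # node2 takes itself offline
```

In this strict majority model, a node that loses both its peer and the witness also goes offline, trading availability for safety; how the real Arbiter handles that case is not covered here.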

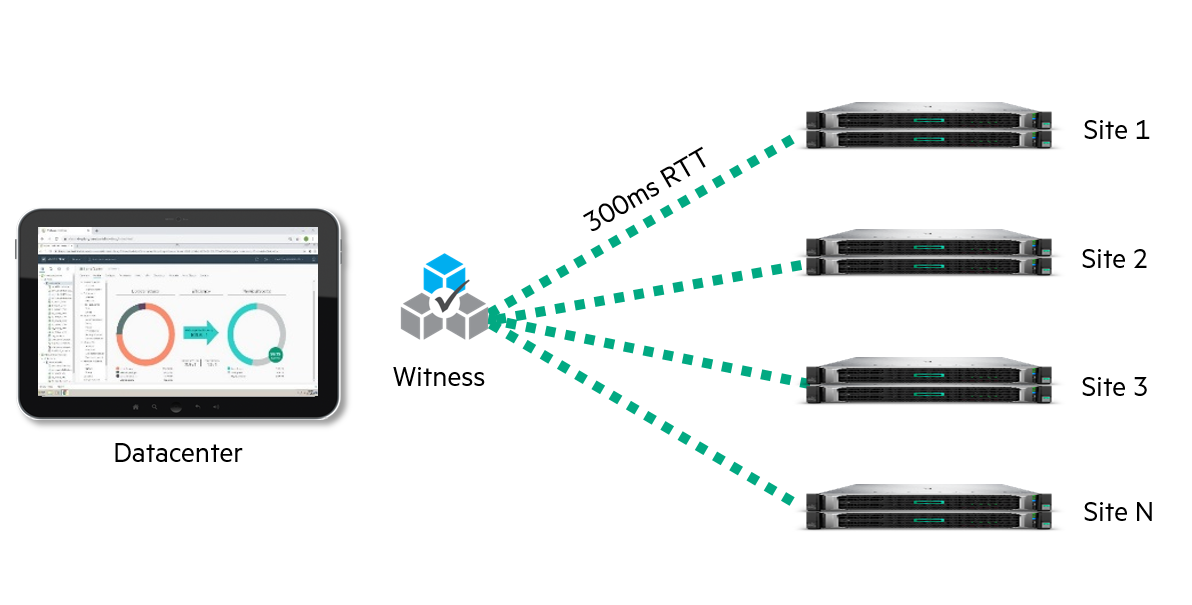

Good news for edge locations with limited physical space: you don’t need a third piece of hardware onsite to run this witness. The HPE SimpliVity Arbiter can run remotely in a separate location. For example, you could host the witness in the central datacenter from which the edge locations are managed, or at an adjacent site. The choice is simply a matter of determining which architecture best fits the use case.

A single witness can support up to 4,096 VMs distributed across any number of remote locations, with a round-trip time (RTT) latency of up to 300 ms. For example, if each remote location had 10 virtual machines, a single HPE SimpliVity Arbiter could support 409 locations (4,096 ÷ 10, rounded down). If your environment scales beyond this, simply deploy a second, third, or fourth witness, and so on, to cover the VMs across your remote locations.
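The sizing arithmetic above can be sketched as a small helper. It assumes every site runs the same number of VMs and that a site's VMs are all tracked by one witness; it models only the 4,096-VM limit quoted in this post, not the 300 ms RTT requirement. The function names are hypothetical.

```python
import math

MAX_VMS_PER_ARBITER = 4096  # per-witness VM limit quoted in this post


def sites_per_arbiter(vms_per_site: int) -> int:
    """How many equally sized sites a single witness can cover."""
    if vms_per_site <= 0:
        raise ValueError("vms_per_site must be positive")
    return MAX_VMS_PER_ARBITER // vms_per_site  # round down: partial sites don't count


def arbiters_needed(num_sites: int, vms_per_site: int) -> int:
    """Witnesses required for num_sites sites of vms_per_site VMs each."""
    per_arbiter = sites_per_arbiter(vms_per_site)
    if per_arbiter == 0:
        raise ValueError("a single site exceeds one witness's VM limit")
    return math.ceil(num_sites / per_arbiter)  # round up: a partly used witness still counts


print(sites_per_arbiter(10))       # the 409-location example from the text
print(arbiters_needed(1000, 10))   # 1,000 sites of 10 VMs each
```

For sites of differing sizes, the problem becomes bin packing, and `math.ceil(total_vms / 4096)` is only a lower bound on the number of witnesses.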

A solution for extremely space-constrained environments

Whatever the size of your workload, you may be surprised by how much fits on a 2-node, 2U configuration.

Scalability made simple

Because this blog series has focused on the simplicity of HCI, I have concentrated on the smallest configurations. However, simplicity is just as important in larger configurations, and maybe even more so.

As workloads and requirements grow, locations may eventually need to expand beyond two nodes, and that can be a challenge. With limited onsite IT resources and multiple locations potentially growing at different rates, this process needs to be as simple as possible. Some industry-leading HCI solutions require a complete rebuild when scaling beyond a certain node count because of how they are architected: as these systems grow, they need to distribute data differently across the nodes, and that can only be achieved by rebuilding the environment. Not ideal!

This is not the case with HPE SimpliVity. Its scale-out architecture is designed to start small (two nodes) and grow the cluster as needed, without disruption and without a rebuild. For example, let’s say you want to add a third node to a 2-node cluster. The new node can be added using HPE SimpliVity Deployment Manager, which runs a series of pre-deployment validation checks to make sure there are no networking issues (among other tests). The third node is then automatically provisioned with the chosen ESXi image and added seamlessly to the VMware cluster. It’s as easy as that.

You don’t need to worry about balancing VMs between the nodes, either. HPE SimpliVity’s Data Virtualization platform automatically monitors and balances VMs using a component called Intelligent Workload Optimizer (IWO). IWO consists of two subcomponents, the Resource Balancer Service (RBS) and VMware Distributed Resource Scheduler (DRS), which balance storage and compute, respectively. RBS ensures that all resources in the cluster are properly utilized. It also works with DRS to enforce data locality through affinity rules, making sure VMs run on the nodes that hold their data. When a new node is added, IWO recognizes the new resource and rebalances the workload accordingly.
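The idea of data locality can be illustrated with a deliberately simplified placement rule: run each VM on the least-loaded node that holds a copy of its data, and fall back to any node only if none does. This is not IWO's algorithm (real DRS weighs CPU and memory demand and applies affinity rules); "load" here is just a count of VMs, and `place_vms` and the node names are hypothetical.

```python
def place_vms(vm_replicas, nodes):
    """Assign each VM to the least-loaded node that stores a copy of its data.

    vm_replicas: dict of VM name -> set of node names holding that VM's data
    nodes: iterable of node names currently in the cluster
    Returns a dict of VM name -> chosen node.
    """
    load = {n: 0 for n in nodes}  # simplified load metric: number of VMs placed
    placement = {}
    for vm in sorted(vm_replicas):  # fixed order keeps the result deterministic
        candidates = [n for n in sorted(vm_replicas[vm]) if n in load]
        if not candidates:
            candidates = sorted(load)  # no local copy: fall back to any node
        # min() returns the first candidate on ties, i.e. alphabetical order.
        target = min(candidates, key=lambda n: load[n])
        placement[vm] = target
        load[target] += 1
    return placement


# Three nodes; each VM's data is stored on two of them.
replicas = {
    "vm1": {"node1", "node2"},
    "vm2": {"node1", "node2"},
    "vm3": {"node2", "node3"},
    "vm4": {"node1", "node3"},
}
print(place_vms(replicas, ["node1", "node2", "node3"]))
```

In this toy model, a freshly added node that holds no data copies would receive no VMs, which shows why compute balancing depends on a storage-side balancer placing data on the new node as well.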

The takeaway here is that there is no need to intervene! HPE SimpliVity takes care of all these details for you, simply, securely, and cost-effectively. Speaking of which, my next blog will cover cost-effective data security in the HCI platform.

Learn more about HPE hyperconverged infrastructure

Read the entire blog series and learn more about business continuity in HPE SimpliVity environments.

- Watch a lightboard demonstration of how HPE SimpliVity Arbiter works.

- For a deeper dive on IWO, I highly recommend Damian Erangey’s blog on the subject.

HCI Optimized for Edge

- Part 1: Edge and remote office challenges

- Part 2: Multisite management and orchestration

- Part 3: Cluster resiliency and high availability

- Part 4: Backup and disaster recovery

- Part 5: Space and scalability

- Part 6: Cost-effective data security

- Part 7: Simple edge configuration

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/simplivity
