
HCI optimized for edge, part 3: Cluster resiliency and high availability

This technical blog series explains how AI and hyperconverged infrastructure simplify management and control costs at the edge. Part 3 explores unique resiliency features that make HPE SimpliVity an ideal fit for customers with multiple edge locations.

Edge locations are generally space constrained, and the footprint often won't allow the level of availability you want for critical services. For a business that depends on these services, an outage can be very costly: it can prevent customers from buying goods or, in more critical deployments, disable the services that monitor safety systems protecting people's lives.

An HPE SimpliVity customer I know bought the solution for the latter scenario. The deployment sits on offshore oil rigs, hosting bespoke safety monitoring systems. These systems are key to the rigs' operation and are there to keep people safe. With the closest IT resources hundreds of miles away, a hardware failure means flying components and engineers in by helicopter, at significant cost and risk to lives and business. Their storage solution must be highly resilient on multiple levels while occupying the smallest possible footprint. That's one of the main reasons they chose HPE SimpliVity.

HPE SimpliVity focuses on achieving high resiliency with the minimum node footprint. The RAIN + RAID (Redundant Array of Independent Nodes + Redundant Array of Independent Disks) architecture provides five-nines (99.999%) availability in clusters as small as two nodes. The platform requires a witness to achieve this 2-node resiliency (I will cover that in a future blog). Today I want to focus on the built-in resiliency and continuity features of the HPE SimpliVity architecture.
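To put "five nines" in concrete terms, the short sketch below converts an availability target into the maximum downtime it allows per year. It uses the generic definition of availability (uptime as a fraction of total time) over a 365-day year; it is an illustration of the arithmetic, not HPE's measurement methodology.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored for simplicity


def max_downtime_minutes(availability: float) -> float:
    """Yearly downtime budget implied by an availability target (e.g. 0.99999)."""
    return MINUTES_PER_YEAR * (1.0 - availability)


for nines, target in [(3, 0.999), (4, 0.9999), (5, 0.99999)]:
    print(f"{nines} nines: {max_downtime_minutes(target):.2f} minutes of downtime per year")
```

At five nines the budget is roughly 5.26 minutes per year, which is far less than the time it takes to fly a replacement part out to an offshore rig.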

Cluster resiliency and high availability

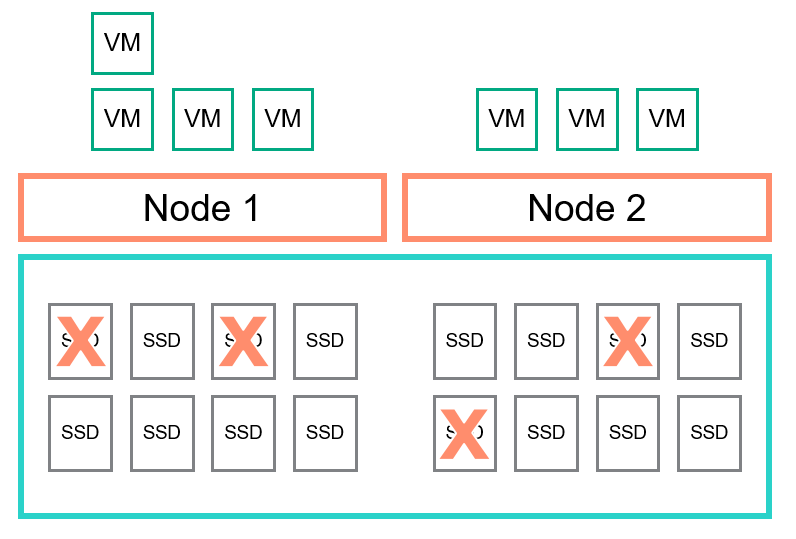

The RAID component provides resiliency within each node, at the disk level: HPE SimpliVity can sustain multiple disk failures without impact to performance or loss of data. Other HCI solutions distribute data across nodes at the software-defined storage (SDS) layer, but struggle with disk resiliency within the nodes themselves. This becomes a real problem in small clusters, such as 2-node clusters, where a single disk failure can leave business apps and data very vulnerable. In this situation, some vendors' platforms cannot even provision new VMs or perform other storage operations. Most of the leading HCI architectures require four or more nodes to achieve the same level of availability that HPE delivers.

RAID gives HPE SimpliVity a traditional approach to node-level data protection, one that enterprise storage arrays still rely on today. The hyperconverged nodes are available with different RAID configurations, depending on the capacity in the nodes. The larger RAID 6 models can tolerate the loss of two disks per node without impact to your production workloads.
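Why can RAID 6 lose two disks? It stores two independent parity blocks per stripe. The sketch below shows the widely documented P+Q scheme: P is a plain XOR, and Q is a Reed-Solomon syndrome over GF(2^8) with generator 2 and the polynomial 0x11D. This is a textbook illustration under those assumptions, not the firmware of the RAID controllers in HPE SimpliVity nodes; real controllers work on large stripes in hardware.

```python
from typing import Optional

PRIMITIVE_POLY = 0x11D  # x^8 + x^4 + x^3 + x^2 + 1, a common RAID 6 choice (assumed here)


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) (carry-less multiply with reduction)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= PRIMITIVE_POLY
        b >>= 1
    return result


def gf_pow(a: int, n: int) -> int:
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a: int) -> int:
    # For nonzero a, a^255 == 1, so a^254 is the multiplicative inverse.
    return gf_pow(a, 254)


def compute_parity(disks: list[bytes]) -> tuple[bytes, bytes]:
    """P = XOR of all data disks; Q = XOR of 2^i * D_i in GF(2^8)."""
    length = len(disks[0])
    p, q = bytearray(length), bytearray(length)
    for i, disk in enumerate(disks):
        coeff = gf_pow(2, i)
        for j, byte in enumerate(disk):
            p[j] ^= byte
            q[j] ^= gf_mul(coeff, byte)
    return bytes(p), bytes(q)


def recover_two(disks: list[Optional[bytes]], p: bytes, q: bytes,
                x: int, y: int) -> tuple[bytes, bytes]:
    """Rebuild lost data disks x and y from the survivors plus P and Q."""
    # Treat the lost disks as zeros so they drop out of the survivors' parity.
    survivors = [bytes(len(p)) if i in (x, y) else d for i, d in enumerate(disks)]
    p_s, q_s = compute_parity(survivors)
    gy = gf_pow(2, y)
    denom_inv = gf_inv(gf_pow(2, x) ^ gy)
    dx, dy = bytearray(len(p)), bytearray(len(p))
    for j in range(len(p)):
        pd = p[j] ^ p_s[j]  # equals Dx ^ Dy
        qd = q[j] ^ q_s[j]  # equals 2^x*Dx ^ 2^y*Dy
        # Substitute Dy = pd ^ Dx into qd and solve for Dx.
        dx[j] = gf_mul(qd ^ gf_mul(gy, pd), denom_inv)
        dy[j] = pd ^ dx[j]
    return bytes(dx), bytes(dy)


data = [b"safety", b"sensor", b"alarms", b"valves"]
p, q = compute_parity(data)
print(recover_two([data[0], None, data[2], None], p, q, 1, 3))
```

Losing a third disk in the same stripe would leave three unknowns and only two parity equations, which is why RAID 6 stops at two failures per node.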

RAIN builds on this node-level redundancy by providing storage high availability across multiple hyperconverged nodes. Virtual machines (VMs) hosted on the platform are automatically protected by the HPE SimpliVity Data Virtualization Platform (DVP), which keeps multiple copies of each VM's data on different nodes, so if a node fails, all VMs on that node can restart on a surviving node in the cluster. The combination of RAID + RAIN allows HPE SimpliVity to lose disks in multiple nodes, then suffer a node failure, and still provide data services for the VMs.
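The failure sequence described above can be modeled with a toy availability check. The sketch assumes each VM has full copies of its data on two named nodes, that a node keeps serving while its disk failures stay within its RAID tolerance (2 for RAID 6), and it ignores the witness and quorum logic entirely. The `Node` and `Cluster` types and the VM names are hypothetical, not part of any HPE SimpliVity API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    raid_disk_tolerance: int  # assumed: 2 for a RAID 6 node
    failed_disks: int = 0
    down: bool = False

    def serving(self) -> bool:
        """A node serves data while it is up and its RAID set is within tolerance."""
        return not self.down and self.failed_disks <= self.raid_disk_tolerance


@dataclass
class Cluster:
    nodes: dict[str, Node]
    replicas: dict[str, tuple[str, ...]]  # VM name -> nodes holding a full copy

    def restart_target(self, vm: str) -> Optional[str]:
        """First node with a healthy copy of the VM's data, or None if none is left."""
        for name in self.replicas[vm]:
            if self.nodes[name].serving():
                return name
        return None


cluster = Cluster(
    nodes={"node-a": Node("node-a", 2), "node-b": Node("node-b", 2)},
    replicas={"safety-vm": ("node-b", "node-a"), "historian-vm": ("node-a", "node-b")},
)
cluster.nodes["node-a"].failed_disks = 2  # two disks lost on node A: RAID 6 absorbs it
cluster.nodes["node-b"].failed_disks = 1  # one disk lost on node B
cluster.nodes["node-b"].down = True       # then node B fails outright
print(cluster.restart_target("safety-vm"))
```

Both VMs can still restart on `node-a`; only a third disk failure on that node would exhaust the remaining copy.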

This level of resiliency should be a key part of any architect's evaluation of solutions for edge-based workloads. Where hardware service level agreements commonly stretch beyond a few hours, or to days in the case of an offshore oil rig, you can be confident that you are not moments away from a potential outage.

Data efficiency

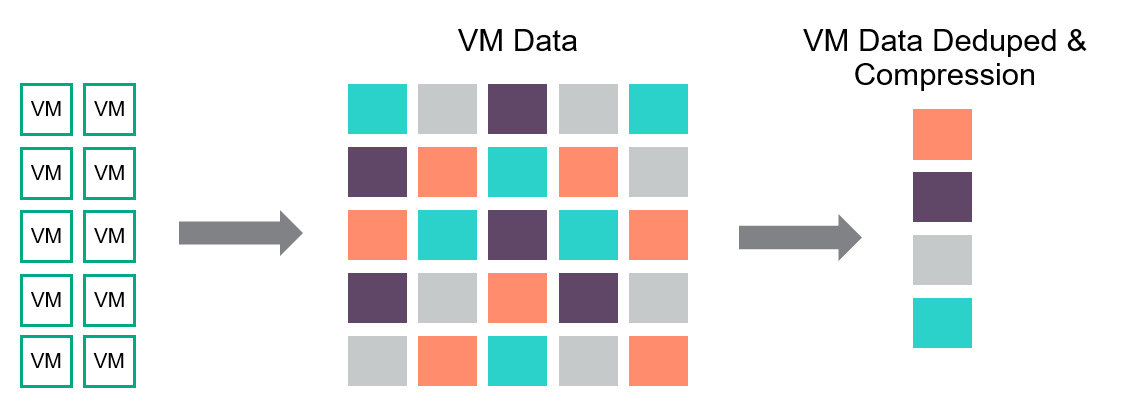

The HyperEfficient guarantee, part of the HPE SimpliVity HyperGuarantee, promises 90% capacity savings compared to traditional solutions. That's because HPE SimpliVity deduplicates, compresses, and optimizes all data inline, at inception and in real time, eliminating unnecessary data processing and improving application performance.
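Capacity savings percentages are easier to compare as data reduction ratios. The sketch below uses the generic definition, savings = 1 - physical / logical; how HPE counts logical and physical capacity for the guarantee is not specified here, so treat this purely as arithmetic.

```python
def savings_fraction(logical_bytes: float, physical_bytes: float) -> float:
    """Share of logical data that never has to be stored physically."""
    return 1.0 - physical_bytes / logical_bytes


def reduction_ratio(savings: float) -> float:
    """Convert a savings fraction into an 'X:1' data reduction ratio."""
    return 1.0 / (1.0 - savings)


print(savings_fraction(100, 10))          # 100 TB logical stored in 10 TB physical
print(f"{reduction_ratio(0.90):.1f}:1")   # the 90% guarantee
print(f"{reduction_ratio(0.993):.0f}:1")  # the 99.3% customer figure quoted below
```

So 90% savings corresponds to a 10:1 reduction, and the 99.3% customer result works out to roughly 143:1.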

Basically, when a VM writes data, the write is deduplicated against all of the data in the cluster and then compressed before it reaches any disk, optimizing the data for its entire life on the platform. This can significantly reduce write operations, which is generally where storage operations see the most latency. As Gene Amdahl said, “the best IO is the one you don’t have to do.” Note that this happens at inception, not “near inception.” Near inception would indicate a post-process task: the data has already been written once, and the platform incurs additional compute overhead as it re-reads, deduplicates, compresses, and writes the data again.
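The inline write path described above can be sketched as a toy content-addressed store: each block is fingerprinted before it is written, duplicates become metadata references with no disk IO, and only unique blocks are compressed and written. The class, SHA-256 fingerprints, and zlib compression are assumptions for illustration, not the DVP's actual internals, and hash collisions are ignored.

```python
import hashlib
import zlib


class InlineDedupStore:
    """Hypothetical toy store: dedupe, then compress, before any disk write."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}       # fingerprint -> compressed block (the "disk")
        self.vm_maps: dict[str, list[str]] = {}  # VM -> ordered block fingerprints
        self.disk_writes = 0

    def write(self, vm: str, block: bytes) -> None:
        fingerprint = hashlib.sha256(block).hexdigest()
        if fingerprint not in self.blocks:
            # Only never-seen-before data is compressed and written, exactly once.
            self.blocks[fingerprint] = zlib.compress(block)
            self.disk_writes += 1
        # Duplicate data only adds a metadata reference; no disk IO happens.
        self.vm_maps.setdefault(vm, []).append(fingerprint)

    def read(self, vm: str, index: int) -> bytes:
        return zlib.decompress(self.blocks[self.vm_maps[vm][index]])

    def physical_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())


store = InlineDedupStore()
os_block = b"A" * 4096  # the same OS block written by three VMs
for vm in ("vm1", "vm2", "vm3"):
    store.write(vm, os_block)
print(store.disk_writes, store.physical_bytes())
```

Three logical writes produce one physical write of a few dozen bytes. A post-process design would instead write all three 4 KiB blocks first, then spend extra IO and CPU re-reading and rewriting them later.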

That’s great, but what does it mean for edge sites? Well, it allows you to store more data on less storage, again reducing footprint and saving money by not having to buy storage or nodes that you don’t technically need. And because fewer writes ever reach disk, it improves overall system performance.

It also allows HPE SimpliVity to do some really cool stuff, which I will explore in my next blog as we continue our business continuity journey. I’ll show how HPE SimpliVity’s resilient data protection features, including local and remote backups, can support granular RPOs and fast RTOs, helping to prevent data loss if disaster strikes. But before that, check out this customer’s data efficiency hero numbers with just 2 nodes: 99.3% data savings across production and backup storage!

Learn more about HPE hyperconverged infrastructure

Read the entire blog series and learn more about business continuity in HPE SimpliVity environments.

- For those who would like to know more about VM data placement, here’s a link to Damian Erangey’s blogs, which discuss this in depth.

- See all 5 guarantees in the HPE SimpliVity HyperGuarantee

HCI Optimized for Edge

- Part 1: Edge and remote office challenges

- Part 2: Multisite management and orchestration

- Part 3: Cluster resiliency and high availability

- Part 4: Backup and disaster recovery

- Part 5: Space and scalability

- Part 6: Cost-effective data security

- Part 7: Simple edge configuration

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/simplivity
